Call for Views in Response to the European Commission's Digital Service

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Recommending Best Answer in a Collaborative Question Answering System

RECOMMENDING BEST ANSWER IN A COLLABORATIVE QUESTION ANSWERING SYSTEM

Mrs Lin Chen

Submitted in fulfilment of the requirements for the degree of Master of Information Technology (Research), School of Information Technology, Faculty of Science & Technology, Queensland University of Technology, 2009

Keywords: Authority, Collaborative Social Network, Content Analysis, Link Analysis, Natural Language Processing, Non-Content Analysis, Online Question Answering Portal, Prestige, Question Answering System, Recommending Best Answer, Social Network Analysis, Yahoo! Answers.

© 2009 Lin Chen

Abstract

The World Wide Web has become a medium for people to share information. People use Web-based collaborative tools such as question answering (QA) portals, blogs/forums, email and instant messaging to acquire information and to form online-based communities. In an online QA portal, a user asks a question and other users can provide answers based on their knowledge, with the question usually being answered by many users. It can become overwhelming and/or time/resource consuming for a user to read all of the answers provided for a given question. Thus, there exists a need for a mechanism to rank the provided answers so users can focus on only reading good quality answers. The majority of online QA systems use user feedback to rank users’ answers and the user who asked the question can decide on the best answer. Other users who didn’t participate in answering the question can also vote to determine the best answer.

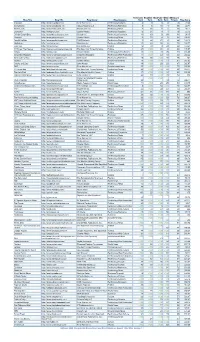

Blog Title Blog URL Blog Owner Blog Category Technorati Rank

| Blog Title | Blog URL | Blog Owner | Blog Category | Technorati Rank | Bloglines Rank | BlogPulse Rank | Wikio Rank | SEOmoz’s Trifecta | Blog Score |
|---|---|---|---|---|---|---|---|---|---|
| Engadget | http://www.engadget.com | Time Warner Inc. | Technology/Gadgets | 4 | 3 | 6 | 2 | 78 | 19.23 |
| Boing Boing | http://www.boingboing.net | Happy Mutants LLC | Technology/Marketing | 5 | 6 | 15 | 4 | 89 | 33.71 |
| TechCrunch | http://www.techcrunch.com | TechCrunch Inc. | Technology/News | 2 | 27 | 2 | 1 | 76 | 42.11 |
| Lifehacker | http://lifehacker.com | Gawker Media | Technology/Gadgets | 6 | 21 | 9 | 7 | 78 | 55.13 |
| Official Google Blog | http://googleblog.blogspot.com | Google Inc. | Technology/Corporate | 14 | 10 | 3 | 38 | 94 | 69.15 |
| Gizmodo | http://www.gizmodo.com/ | Gawker Media | Technology/News | 3 | 79 | 4 | 3 | 65 | 136.92 |
| ReadWriteWeb | http://www.readwriteweb.com | RWW Network | Technology/Marketing | 9 | 56 | 21 | 5 | 64 | 142.19 |
| Mashable | http://mashable.com | Mashable Inc. | Technology/Marketing | 10 | 65 | 36 | 6 | 73 | 160.27 |
| Daily Kos | http://dailykos.com/ | Kos Media, LLC | Politics | 12 | 59 | 8 | 24 | 63 | 163.49 |
| NYTimes: The Caucus | http://thecaucus.blogs.nytimes.com | The New York Times Company | Politics | 27 | >100 | 31 | 8 | 93 | 179.57 |
| Kotaku | http://kotaku.com | Gawker Media | Technology/Video Games | 19 | >100 | 19 | 28 | 77 | 216.88 |
| Smashing Magazine | http://www.smashingmagazine.com | Smashing Magazine | Technology/Web Production | 11 | >100 | 40 | 18 | 60 | 283.33 |
| Seth Godin's Blog | http://sethgodin.typepad.com | Seth Godin | Technology/Marketing | 15 | 68 | >100 | 29 | 75 | 284 |
| Gawker | http://www.gawker.com/ | Gawker Media | Entertainment News | 16 | >100 | >100 | 15 | 81 | 287.65 |
| Crooks and Liars | http://www.crooksandliars.com | John Amato | Politics | 49 | >100 | 33 | 22 | 67 | 305.97 |
| TMZ | http://www.tmz.com | Time Warner Inc. | | | | | | | |

Location-Based Services: Industrial and Business Analysis Group 6 Table of Contents

Location-based Services: Industrial and Business Analysis

Group 6: Huanhuan WANG, Bo WANG, Xinwei YANG, Han LIU

Table of Contents

I. Executive Summary
II. Introduction
III. Analysis
IV. Evaluation Model
V. Model Implementation
VI. Evaluation & Impact
VII. Conclusion

I. Executive Summary

The objective of the report is to analyze location-based services (LBS) from the industrial

2020 Annual Report

ACHILLES INTERNATIONAL 2020 ANNUAL REPORT

LETTER FROM THE PRESIDENT

Dear Achilles family,

Thank you. As we embrace this new year, we do so with the acknowledgement that although 2020 took a toll on all of us, our community came together to embrace challenges; to encourage resilience and strength; and above all, to support one another. We could not have done it without you and I am incredibly grateful for every individual that makes up the Achilles community.

As we were ramping up our plans for 2020, the COVID-19 pandemic quickly pushed us to reinvent our approach to programming and connecting with each other. In true Achilles fashion, our community of athletes, volunteers, staff, supporters and friends rose to the occasion and embraced opportunities to connect, consider and appreciate our shared experiences in new and creative ways. From late night (or early morning) Chapter Zoom calls and virtual dance parties to digitally delivered workouts and team-based challenges, we have found meaningful ways to stay active, engaged and connected, even as we remain apart. We did not let the absence of a shared starting line prevent us from supporting athletes in their training and nurturing our community’s determination to achieve big goals.

In this report you’ll read about several highlights of the year including our Virtual Hope & Possibility race and the Achilles Cup. In addition to these successful virtual events that brought together thousands of athletes across the globe, Achilles was well represented in several marquee events that moved from the road to the web including the virtual marathons in Boston, New York City and Chicago, among others.

Speaker Book

Table of Contents: Program, Speakers, NOAH Infographic, Trading Comparables

The NOAH Bible, an up-to-date valuation and industry KPI publication. This is the most comprehensive set of valuation comps you'll find in the industry. Reach out to us if you spot any companies or deals we've missed! March 2018 Edition (PDF)

Program: COLOSSEUM, Day 1, 6 June 2018

| Time | Session Title | Company | Speaker | Position |
|---|---|---|---|---|
| 8:00 - 10:00 | Breakfast | | | |
| 9:00 - 9:15 | Between Tradition and Digitisation: What Old and New Economy can Learn from One Another? | NOAH Advisors | Marco Rodzynek | Founder & CEO |
| | | AUTO1 Group | Gerhard Cromme | Chairman |
| | | Facebook | Martin Ott | VP, MD Central Europe |
| 9:15 - 9:25 | | Evaneos | Eric La Bonnardière | CEO |
| 9:25 - 9:35 | | Kiwi.com | Oliver Dlouhý | CEO |
| 9:35 - 9:45 | | HomeToGo | Dr. Patrick Andrae | Co-Founder & CEO |
| | | Insight Venture Partners | Harley Miller | Vice President |
| 9:45 - 9:55 | | GetYourGuide | Johannes Reck | Co-Founder & CEO |
| 9:55 - 10:05 | | Revolution Precrafted | Robbie Antonio | CEO |
| 10:05 - 10:15 | | Axel Springer | Dr. Mathias Döpfner | CEO |
| 10:15 - 10:40 | | Uber | Dara Khosrowshahi | CEO |
| | | hy | Christoph Keese | CEO |
| 10:40 - 10:50 | | Moovit | Nir Erez | Founder & CEO |
| 10:50 - 11:00 | | BlaBlaCar | Nicolas Brusson | Co-Founder & CEO |
| 11:00 - 11:10 | | Taxify | Markus Villig | Founder & CEO |
| 11:10 - 11:20 | | Porsche | Sebastian Wohlrapp | VP Digital Business Platform |
| 11:20 - 11:30 | | Drivy | Paulin Dementhon | CEO |
| 11:30 - 11:40 | | Optibus | Amos Haggiag | Co-Founder & CEO |
| 11:40 - 11:50 | | Blacklane | Dr. | |

Sustaining Software Innovation from the Client to the Web Working Paper

Principles that Matter: Sustaining Software Innovation from the Client to the Web [1]

Marco Iansiti, David Sarnoff Professor of Business Administration, Director of Research, Harvard Business School, Boston, MA 02163

June 15, 2009

Working Paper 09-142

Copyright © 2009 by Marco Iansiti. Working papers are in draft form. This working paper is distributed for purposes of comment and discussion only. It may not be reproduced without permission of the copyright holder. Copies of working papers are available from the author.

[1] The early work for this paper was based on a project funded by Microsoft Corporation. The author has consulted for a variety of companies in the information technology sector. The author would like to acknowledge Greg Richards and Robert Bock from Keystone Strategy LLC for invaluable suggestions.

1. Overview

The information technology industry forms an ecosystem that consists of thousands of companies producing a vast array of interconnected products and services for consumers and businesses. This ecosystem was valued at more than $3 Trillion in 2008.[2] More so than in any other industry, unique opportunities for new technology products and services stem from the ability of IT businesses to build new offerings in combination with existing technologies. This creates an unusual degree of interdependence among information technology products and services and, as a result, unique opportunities exist to encourage competition and innovation. In the growing ecosystem of companies that provide software services delivered via the internet - or “cloud computing” - the opportunities and risks are compounded by a significant increase in interdependence between products and services.

The Topic of Terrorism on Yahoo! Answers: Questions, Answers and Users’ Anonymity

This is a repository copy of The Topic of Terrorism on Yahoo! Answers: Questions, Answers and Users’ Anonymity.

White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/154122/

Version: Accepted Version

Article: Chua, Alton Y.K. and Banerjee, Snehasish orcid.org/0000-0001-6355-0470 (2020) The Topic of Terrorism on Yahoo! Answers: Questions, Answers and Users’ Anonymity. Aslib Journal of Information Management. pp. 1-16. ISSN 2050-3806 https://doi.org/10.1108/AJIM-08-2019-0204

Reuse: Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item.

Takedown: If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request.

[email protected] https://eprints.whiterose.ac.uk/

Journal: Aslib Journal of Information Management

Manuscript ID: AJIM-08-2019-0204.R1

Manuscript Type: Research Paper

Keywords: Community question answering, online anonymity, online hate speech, Terrorism, Question themes, Answer characteristics

Abstract

Purpose: This paper explores the use of community question answering sites (CQAs) on the topic of terrorism.

Imille, Renewed Image and 2018 Revenue Over 5 Million, Up by

ISSN 2499-1759. Your daily news since 1989. Thursday, 20 December 2018 | No. 221

Yesterday, at an event in Milan, the agency's rebranding and results were presented

IMILLE: RENEWED IMAGE AND 2018 REVENUE OVER 5 MILLION, UP 67%

New logo and website for the company that for fifteen years has positioned itself as a creative partner to innovative companies and brands. CEO Paolo Pascolo: “Since 2004 we have lived through an evolution” (p. 5)

For the ‘NICE TO EAt-EU’ programme: PIAVE DOP CHEESE, A 1.4 MILLION TENDER FOR COMMUNICATION. The assignment covers Italy, Germany and Austria and includes advertising, PR, web, social media and events activities (p. 3)

From November and throughout 2019: WAMI CHOOSES CONNEXIA FOR DIGITAL PR & SPECIAL ACTIVATION. The two companies began working together on the WAMITrip project for Tanzania with Simone Bramante (p. 6)

Budget of 15 million euros: WAVEMAKER WINS THE GRANA PADANO MEDIA PITCH. For the media agency led by CEO Luca Vergani, this confirms its assignment for next year (p. 14)

The deadline for receipt of bids is 14 January: CONI SERVIZI SEEKS A CONSULTING FIRM

Inside:

Roberto Cacciapaglia ‘closes’ the first cycle of Sorgenia's Colazioni Digitali with music (p. 9)

Audiweb: total digital audience at 42.7 million in October (p. 16)

ADS: Paolo Nusiner (general manager of Avvenire) unanimously appointed new president (p. 16)

IAB Italia satisfied with the inclusion of the Web Tax in the Budget Law (p. 16)

Gruppo Mondadori:

2019-09-23-UNGA-Release 0.Pdf

News Release

FOR IMMEDIATE RELEASE

September 23, 2019

Media contact: Bernadette Brijlall, [email protected]; Alex Martinetti, [email protected]

Ground-Breaking Immersive Installation Brings to Life Urgency of Climate Action

What you need to know:

2019 Climate Action Summit kicked off with an immersive look at devastating impacts of climate change, produced by RYOT, a Verizon Media company.

Verizon to focus its support of the Sustainable Development Goals (SDGs), emphasizing SDG 4: Quality Education and SDG 13: Climate Action, through social responsibility programs such as Verizon Innovative Learning and the company's sustainability efforts.

New York, New York (September 23, 2019) – As world leaders and diplomats convene for the 74th United Nations General Assembly (UNGA), Verizon continues to focus on responsible business and the power of cutting-edge technology to deliver compelling story-telling that reinforces the need for urgent climate action.

During UNGA, Verizon will focus on its support of the Sustainable Development Goals (SDGs), emphasizing SDG 4: Quality Education and SDG 13: Climate Action, through social responsibility programs such as Verizon Innovative Learning and the company's sustainability efforts. Verizon has also joined the UN Global Compact, as part of a long-term pledge to a principles-based approach to corporate sustainability that upholds a commitment to people and the planet.

As part of UNGA, the 2019 Climate Action Summit kicked off with an immersive look at the devastating impacts climate change is having right now, as well as the vast opportunities ahead as the world moves from the grey to the green economy, with technological innovation playing a key role.

Why Google Dominates Advertising Markets Competition Policy Should Lean on the Principles of Financial Market Regulation

Why Google Dominates Advertising Markets: Competition Policy Should Lean on the Principles of Financial Market Regulation

Dina Srinivasan*

* Since leaving the industry, and authoring The Antitrust Case Against Facebook, I continue to research and write about the high-tech industry and competition, now as a fellow with Yale University’s antitrust initiative, the Thurman Arnold Project. Separately, I have advised and consulted on antitrust matters, including for news publishers whose interests are in conflict with Google’s. This Article is not squarely about antitrust, though it is about Google’s conduct in advertising markets, and the idea for writing a piece like this first germinated in 2014. At that time, Wall Street was up in arms about a book called FLASH BOYS by Wall Street chronicler Michael Lewis about speed, data, and alleged manipulation in financial markets. The controversy put high speed trading in the news, giving many of us in advertising pause to appreciate the parallels between our market and trading in financial markets. Since then, I have noted how problems related to speed and data can distort competition in other electronic trading markets, how lawmakers have monitored these markets for conduct they frown upon in equities trading, but how advertising has largely remained off the same radar. This Article elaborates on these observations and curiosities. I am indebted to and thank the many journalists that painstakingly reported on industry conduct, the researchers and scholars whose work I cite, Fiona Scott Morton and Austin Frerick at the Thurman Arnold Project for academic support, as well as Tom Ferguson and the Institute for New Economic Thinking for helping to fund the research this project entailed.

Nicole Garcia

NICOLE GARCIA

[email protected] · https://ngarcian.github.io · New York, NY · 347 551 3484

EDUCATION

2013 - Present: CONVENT OF THE SACRED HEART, NEW YORK, NY

Class of 2018. Advanced Classes: AP Computer Science, AP Spanish Language, AP U.S. History, AP English Literature, AP World History, Spanish 3 Honors, Spanish 2 Honors

2012 - Present: PREP FOR PREP, NEW YORK, NY

Accepted into a highly selective leadership development program and completed rigorous 14-month academic component to prepare for placement in a leading independent school.

EXPERIENCE

2017 - Present: TUTOR

● Clarify homework questions especially in Spanish and Math

● Set goals to improve study habits

2016 - Present: EAST HARLEM SCHOOL / CHILDREN'S AFTER-SCHOOL STUDIO ARTS, Advisor

● Teach kids different art projects and techniques

● Clarified instructions to students who spoke Spanish

● Explained concepts of math, science and vocabulary to students ages 11 to 13

Summer 2017: OATH (AOL Infuse), Intern

● Selected to participate in month-long program about the media technology industry

● Heard from industry leaders in Advertising, Technology and Media (HuffPost, Verizon, AOL, RYOT, Yahoo, Tumblr etc.)

● Worked alongside professionals in Optimization Strategy

Winter - Spring 2017: SPRING BANK (Ariva), NEW YORK, NY, Free Income Tax Preparation Assistance Translator

● Translated tax forms from Spanish to English

● Clarified concerns from non-English speakers

● Recorded data on Google Sheets

ACTIVITIES

CONVENT OF THE SACRED HEART, NEW YORK, NY

● 2017 - 2018: Diversity, Awareness, and Inclusion Coalition, Co-Founder

● 2017 - 2018: Women of Proud Heritage Affinity Group, Co-Founder

● 2016 - 2017: Women of Proud Heritage Club, Member

● 2015 - Present: Computer Science Club, Member

● 2014 - Present: Math Team, Member

Ad Tech Inquiry: Issues Paper Response from Verizon Media

ACCC - Ad tech Inquiry: Issues Paper

Response from Verizon Media Australia

April 2020

1. Introduction

1.1. Verizon Media is pleased to provide these initial comments in response to the issues paper. We support competition authorities deepening their understanding of the digital advertising market, to fully understand the competitive dynamics and broader context - regulatory, contractual, and otherwise - within which competition takes place.

1.2. The inquiry impacts our business at all levels. Verizon Media is active in the advertising market as an advertiser, media owner and advertising intermediary.

2. About Verizon Media

2.1. Verizon Media is a global house of digital media and technology brands. Verizon Media’s brands include Yahoo, AOL, Ryot, HuffPost, TechCrunch and BUILD. Through our own operations, and in partnership with others, Verizon Media helps drive diversity and choice in consumer services and brand advertising.

2.2. Verizon Media is a business division of Verizon Communications Inc. Verizon Media operates in Australia as Verizon Media Australia Pty Ltd.

3. General comments

3.1. The digital advertising market provides benefits to both businesses and consumers. Revenue generated by digital advertising funds content creation and is critical for fueling investment in platforms, content creation and journalism. Competition in the ad intermediation market provides publishers the ability to partner with multiple platforms and to maximise the value of their inventory. In turn, this promotes plurality by providing a sustainable revenue stream for digital media. Consumers, in turn, reap the benefits of more diverse media.

3.2. We welcome the ACCC’s commitment to detailed evidence-gathering and analysis.