Network Media Content Aggregator for DLNA Server

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Uila Supported Apps

Uila Supported Applications and Protocols (updated Oct 2020)

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files. |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the HTTP traffic to the host 10086.cn. It also classifies the SSL traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine. |
| 139mail | 139mail is a Chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal. |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal. |
| 24ora.com | Aruban news portal. |
| 24sata.hr | Croatian news portal. |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) system. |
| 24ur.com | Slovenian news portal. |
| 2ch.net | Japanese adult videos web site. |
| 2Shared | 2shared is an online space for sharing and storage. |

Marantz Guide to Pc Audio

White paper: MARANTZ GUIDE TO PC AUDIO. Contents: Introduction • Digital Connections • Audio Formats and TAGs • System requirements • System Setup for PC and MAC • Tips and Tricks • High Resolution audio download • Audio transmission modes. Introduction: As you know, in recent years the way to listen to music has changed. There has been a progression from the use of physical media to a more digital approach, allowing access to unlimited digital entertainment content via the internet or from the library stored on a computer. It can be iTunes, Windows Media Player or streaming music or watching YouTube and many more. The computer is a centre piece to all this entertainment. The computer is just a simple player and in a standard setup the performance is just average or even less. But there is also a way to lift the experience to a complete new level of enjoyment, making the computer a good player, by giving the responsibility for the audio to an external component, for example a “USB-DAC”. A DAC is a Digital to Analogue Converter and the USB terminal is connected to the USB output of the computer. Doing so we won’t be only able to enjoy the above mentioned standard audio, but gain access to high resolution audio too, exceeding the CD quality of 16-bit / 44.1kHz. It is possible to enjoy studio master quality as 24-bit/192kHz recordings or even the SACD format DSD with a bitstream at 2.8MHz and even 5.6MHz. However to reach the above, some equipment is needed which needs to be set up and adjusted.

What Can Apple TV™ Teach Us About Digital Signage?

What can Apple TV™ teach us about Digital Signage? Mark Hemphill | @ScreenScape, July 2016. Executive Summary: As a savvy communicator, you could be increasing revenue by building dynamic media channels into every one of your business locations via digital signage, yet something is holding you back. The cost and complexity associated with such technology is still too high for the average business owner to tackle. Or is it? Some of the traditional vendors in the digital signage industry make the task of connecting and controlling a few screens out to be a momentous challenge, a task for highly paid experts. On the other hand, a new class of technologies has arrived in the home entertainment world that strips the exercise down to basics. And it’s surging in popularity. Apple TV™, and other similar streaming Internet devices, have managed to successfully eliminate the need for complicated hardware and complex installation processes to allow average non-technical homeowners to bring the Internet to their television screens. This trend in consumer entertainment offers insights we can adapt to the commercial world, lessons for digital signage vendors and their customers, which lead towards simpler, more cost-effective, more user-friendly, and more scalable solutions. This article explores some of the following lessons in detail: • Simple appliances outperform PCs and Macs as digital media players • Sourcing and mounting a TV is not the critical challenge • In order to scale, digital signage networks must be designed for average non-technical users to operate • Creating an epic viewing experience isn’t as important as enabling a large and diverse network • Simplicity and cost-effectiveness go hand in hand. By making it easier to connect and control screens, tomorrow’s digital signage solutions will help more businesses to reap the benefits of place-based media.

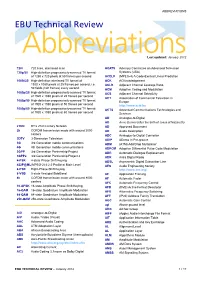

ABBREVIATIONS EBU Technical Review

EBU Technical Review: Abbreviations. Last updated: January 2012

| Abbreviation | Meaning |
| --- | --- |
| 720i | 720 lines, interlaced scan |
| 720p/50 | High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second |
| 1080i/25 | High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second |
| 1080p/25 | High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second |
| 1080p/50 | High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second |
| 1080p/60 | High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second |
| 21CN | BT’s 21st Century Network |
| 2k | COFDM transmission mode with around 2000 carriers |
| 3DTV | 3-Dimension Television |
| 3G | 3rd Generation mobile communications |
| 4G | 4th Generation mobile communications |
| 3GPP | 3rd Generation Partnership Project |
| 3GPP2 | 3rd Generation Partnership |
| ACATS | Advisory Committee on Advanced Television Systems (USA) |
| ACELP | (MPEG-4) A Code-Excited Linear Prediction |
| ACK | ACKnowledgement |
| ACLR | Adjacent Channel Leakage Ratio |
| ACM | Adaptive Coding and Modulation |
| ACS | Adjacent Channel Selectivity |
| ACT | Association of Commercial Television in Europe, http://www.acte.be |
| ACTS | Advanced Communications Technologies and Services |
| AD | Analogue-to-Digital |
| AD | Anno Domini (after the birth of Jesus of Nazareth) |
| AD | Approved Document |
| AD | Audio Description |
| ADC | Analogue-to-Digital Converter |
| ADIP | ADress In Pre-groove |
| ADM | (ATM) Add/Drop Multiplexer |
| ADPCM | Adaptive Differential Pulse Code Modulation |
| ADR | Automatic Dialogue Replacement |

I Know What You Streamed Last Night: on the Security and Privacy of Streaming

Digital Investigation xxx (2018) 1-12. Contents lists available at ScienceDirect. Digital Investigation, journal homepage: www.elsevier.com/locate/diin. DFRWS 2018 Europe: Proceedings of the Fifth Annual DFRWS Europe. I know what you streamed last night: On the security and privacy of streaming. Alexios Nikas (a), Efthimios Alepis (b), Constantinos Patsakis (b, *). (a) University College London, Gower Street, WC1E 6BT, London, UK; (b) Department of Informatics, University of Piraeus, 80 Karaoli & Dimitriou Str, 18534 Piraeus, Greece. Article history: Received 3 January 2018; received in revised form 15 February 2018; accepted 12 March 2018; available online xxx. Keywords: Security, Privacy, Streaming, Malware. Abstract: Streaming media are currently conquering traditional multimedia by means of services like Netflix, Amazon Prime and Hulu which provide to millions of users worldwide with paid subscriptions in order to watch the desired content on-demand. Simultaneously, numerous applications and services infringing this content by sharing it for free have emerged. The latter has given ground to a new market based on illegal downloads which monetizes from ads and custom hardware, often aggregating peers to maximize multimedia content sharing. Regardless of the ethical and legal issues involved, the users of such streaming services are millions and they are severely exposed to various threats, mainly due to poor hardware and software configurations. Recent attacks have also shown that they may, in turn, endanger others as well. This work details these threats and presents new attacks on these systems as well as forensic evidence that can be collected in specific cases. © 2018 Elsevier Ltd. All rights reserved.

Openbricks Embedded Linux Framework - User Manual I

OpenBricks Embedded Linux Framework - User Manual. Contents: 1 OpenBricks Introduction: 1.1 What is it?; 1.2 Who is it for?; 1.3 Which hardware is supported?; 1.4 What does the software offer?; 1.5 Who’s using it? 2 List of supported features: 2.1 Key Features; 2.2 Applicative Toolkits; 2.3 Graphic Extensions; 2.4 Video Extensions; 2.5 Audio Extensions; 2.6 Media Players; 2.7 Key Audio/Video Profiles; 2.8 Networking Features; 2.9 Supported Filesystems; 2.10 Toolchain Features. 3 OpenBricks Supported Platforms: 3.1 Supported Hardware Architectures; 3.2 Available Platforms; 3.3 Certified Platforms.

Technology Ties: Getting Real After Microsoft

icarus, Winter/Spring 2005. Technology Ties: Getting Real after Microsoft. Scott Sher, Wilson Sonsini Goodrich & Rosati, Reston, VA, [email protected]. The D.C. Circuit’s 2001 decision in United States v. Microsoft opened the door for high-tech monopolists to tie products together if they can demonstrate that the procompetitive justification for their practice outweighs the competitive harm. Although not yet endorsed by the Supreme Court, the Microsoft decision poses a significant threat to stand-alone application providers selling products in dominant operating environments where the operating systems (“OS”) provider also sells competitive stand-alone applications. In the recently-filed private lawsuit, Real Networks v. Microsoft [1], Real Networks included in its complaint tying allegations against Microsoft, a dominant OS provider, claiming that the defendant tied the sale of its OS to the purchase of (allegedly less desirable) applications for its operating system. The fate of lawsuits such as Real is almost assuredly contingent upon whether courts follow the Microsoft holding and resulting legal standard or adhere to a more traditional tying analysis, which requires per se condemnation of such practices. These cases and the development of the law of technology ties are likely to have a profound effect on the development of future operating systems and the long-term viability of many independent application providers who offer products that function in such OS’s. With dominant OS providers like Microsoft reaching further into the application world (through the development and/or acquisition of applications that function on their dominant OS environments), OS providers increasingly are becoming competitors to independent application providers, while at the same time providing the industry-standard OS on which these applications run.

Review: a Digital Video Player to Support Music Practice and Learning Emond, Bruno; Vinson, Norman; Singer, Janice; Barfurth, M

NRC Publications Archive / Archives des publications du CNRC. ReView: a digital video player to support music practice and learning. Emond, Bruno; Vinson, Norman; Singer, Janice; Barfurth, M. A.; Brooks, Martin. This publication could be one of several versions: author’s original, accepted manuscript or the publisher’s version. Publisher’s version: Journal of Technology in Music Learning, 4, 1, 2007. NRC Publications Record: https://nrc-publications.canada.ca/eng/view/object/?id=2573dfda-4fa0-4a7f-b8e0-4014b537e486 https://publications-cnrc.canada.ca/fra/voir/objet/?id=2573dfda-4fa0-4a7f-b8e0-4014b537e486 Access and use of this website and the material on it are subject to the Terms and Conditions set forth at https://nrc-publications.canada.ca/eng/copyright (French version: https://publications-cnrc.canada.ca/fra/droits). READ THESE TERMS AND CONDITIONS CAREFULLY BEFORE USING THIS WEBSITE. Questions? Contact the NRC Publications Archive team at [email protected]. If you wish to email the authors directly, please see the first page of the publication for their contact information.

UNIVERSIDAD AUTÓNOMA DE CIUDAD JUÁREZ Instituto De Ingeniería Y Tecnología Departamento De Ingeniería Eléctrica Y Computación

UNIVERSIDAD AUTÓNOMA DE CIUDAD JUÁREZ, Instituto de Ingeniería y Tecnología, Departamento de Ingeniería Eléctrica y Computación. DIGITAL VIDEO RECORDER USING A CLUSTER WITH RASPBERRY PI TECHNOLOGY. Technical Research Report presented by: Fernando Israel Cervantes Ramírez. Student ID: 98666. Requirement for obtaining the degree of COMPUTER SYSTEMS ENGINEER. Supervising Professor: M.C. Fernando Estrada Saldaña. May 2015. Declaration of Originality: I, Fernando Israel Cervantes Ramírez, declare that the material contained in this publication was produced by reviewing the documents listed in the References section, and that the computer program (software) developed is original and has not been copied from any other source, nor has it been used to obtain another degree or recognition at another institution of higher education. Dedication: To God, because He is the one who gives wisdom, and from His mouth come knowledge and understanding. To my parents and sister, for giving me their support and help throughout my degree. To my uncles, aunts and grandparents, for teaching me that hard work brings its rewards and that it is not impossible to reach the goals one dreams of; it is only a matter of perseverance, work, effort and time. To my friends: Ana, Adriel, Miguel, Angélica, Deisy, Jonathan, Antonio, Daniel, Irving, Lupita, Christian and those I have failed to name, who have become true companions in life. Acknowledgements: I thank God for having allowed me to reach this point in life; without Him I would be nothing, and it is He who deserves first place on this list. Thank you, Lord, because you know better than anyone how much it cost me, how long I waited, and how much effort and work I invested over all these years. Thank you.

Debian \ Amber \ Arco-Debian \ Arc-Live \ Aslinux \ Beatrix

Debian \ Amber \ Arco-Debian \ Arc-Live \ ASLinux \ BeatriX \ BlackRhino \ BlankON \ Bluewall \ BOSS \ Canaima \ Clonezilla Live \ Conducit \ Corel \ Xandros \ DeadCD \ Olive \ DeMuDi \ \ 64Studio (64 Studio) \ DoudouLinux \ DRBL \ Elive \ Epidemic \ Estrella Roja \ Euronode \ GALPon MiniNo \ Gibraltar \ GNUGuitarINUX \ gnuLiNex \ \ Lihuen \ grml \ Guadalinex \ Impi \ Inquisitor \ Linux Mint Debian \ LliureX \ K-DEMar \ kademar \ Knoppix \ \ B2D \ \ Bioknoppix \ \ Damn Small Linux \ \ \ Hikarunix \ \ \ DSL-N \ \ \ Damn Vulnerable Linux \ \ Danix \ \ Feather \ \ INSERT \ \ Joatha \ \ Kaella \ \ Kanotix \ \ \ Auditor Security Linux \ \ \ Backtrack \ \ \ Parsix \ \ Kurumin \ \ \ Dizinha \ \ \ \ NeoDizinha \ \ \ \ Patinho Faminto \ \ \ Kalango \ \ \ Poseidon \ \ MAX \ \ Medialinux \ \ Mediainlinux \ \ ArtistX \ \ Morphix \ \ \ Aquamorph \ \ \ Dreamlinux \ \ \ Hiwix \ \ \ Hiweed \ \ \ \ Deepin \ \ \ ZoneCD \ \ Musix \ \ ParallelKnoppix \ \ Quantian \ \ Shabdix \ \ Symphony OS \ \ Whoppix \ \ WHAX \ LEAF \ Libranet \ Librassoc \ Lindows \ Linspire \ \ Freespire \ Liquid Lemur \ Matriux \ MEPIS \ SimplyMEPIS \ \ antiX \ \ \ Swift \ Metamorphose \ miniwoody \ Bonzai \ MoLinux \ \ Tirwal \ NepaLinux \ Nova \ Omoikane (Arma) \ OpenMediaVault \ OS2005 \ Maemo \ Meego Harmattan \ PelicanHPC \ Progeny \ Progress \ Proxmox \ PureOS \ Red Ribbon \ Resulinux \ Rxart \ SalineOS \ Semplice \ sidux \ aptosid \ \ siduction \ Skolelinux \ Snowlinux \ srvRX live \ Storm \ Tails \ ThinClientOS \ Trisquel \ Tuquito \ Ubuntu \ \ A/V \ \ AV \ \ Airinux \ \ Arabian -

Media Center Oriented Linux Operating System

Media Center oriented Linux Operating System. Tudor MIU, Olivia STANESCU, Ana CONSTANTIN, Sorin LACRIŢEANU, Roxana GRIGORE, Domnina BURCA, Tudor CONSTANTINESCU, Alexandru RADOVICI. University “Politehnica” of Bucharest, Faculty of Engineering in Foreign Languages. [email protected], [email protected], [email protected], [email protected], [email protected], [email protected], [email protected], [email protected] Abstract: Nowadays there is a high demand for computer-controlled multimedia home systems. A great variety of computer software media center systems is available on the market, software which transforms an ordinary computer into a home media system by adding functionality to it. Our goal is to develop such a user-friendly, intuitive system, dedicated to home media centers. In contrast with other proprietary approaches (Windows Media Center, Apple TV), we are building an entire operating system specialized for this purpose. It is based on the Linux kernel, thus providing high portability and flexibility at a very low cost. The system is designed to work out of the box (plug it in and use it), needing as little human configuration as possible and no installation (it is a Linux live system that works from a CD, DVD or USB device). The user interface is no more complicated than a generic TV user interface. To this end, the file system is hidden from the user and replaced with an intuitive media library, driver configuration is done automatically, and network configuration is also handled without user action (as much as possible). Key-words: OS, media center, Linux, multimedia, portable, intuitive, free, open-source 1.

Bachelor's Thesis (Bakalářská Práce)

ZÁPADOČESKÁ UNIVERZITA V PLZNI, FAKULTA ELEKTROTECHNICKÁ, KATEDRA APLIKOVANÉ ELEKTRONIKY A TELEKOMUNIKACÍ. BACHELOR'S THESIS: Verification of DLNA Technology (Ověření DLNA technologie). Patrik Roule, 2014. Abstract: This bachelor’s thesis is focused on the description of DLNA technology, its components, and the ways it is connected and controlled. Furthermore, this thesis covers the description of its possibilities and use, the selection of an appropriate server implementation and, in the last part, the verification of system functionality with Panasonic TV receivers. PS3 Media Server and Windows Media Player were chosen as appropriate server implementations. These implementations were used with the Pixel Media Controller application in the role of controller. Functionality with these servers and this controller was verified with a Panasonic TV. Keywords: DLNA technology, UPnP, UPnP AV, digital media server, digital media player, digital media renderer, digital media controller, digital media printer, mobile, smartphone, PS3 Media Server, Serviio, Windows Media Player, Windows 7, Panasonic, television