Noise Removal in the Developing Process of Digital Negatives

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Advanced Color Machine Vision and Applications

Advanced Color Machine Vision and Applications. Dr. Romik Chatterjee, V.P. Business Development, Graftek Imaging. 2016 V04 - for tutorial 5/4/2016

Outline
• What is color vision? Why is it useful?
• Comparing human and machine color vision
• Physics of color imaging
• A model of color image formation
• Human color vision and measurement
• Color machine vision systems
• Basic color machine vision algorithms
• Advanced algorithms & applications
• Human vision Machine Vision
• Case study

Important terms: What is Color?
• Our perception of wavelengths of light
– Photometric (neural computed) measures
– About 360 nm to 780 nm (indigo to deep red)
– “…the rays are not coloured…” - Isaac Newton
• Machine vision (MV) measures energy at different wavelengths (Radiometric)
nm : nanometer = 1 billionth of a meter

Color Image
• Color image values, Pi, are a function of:
– Illumination spectrum, E(λ) (lighting); λ = wavelength
– Object’s spectral reflectance, R(λ) (object “color”)
– Sensor responses, Si(λ) (observer, viewer, camera)
– Processing
• Human <~> MV
– Eye <~> Camera
– Brain <~> Processor

Color Vision Estimates Object Color
• The formation color image values, Pi, has too many unknowns (E(λ) = lighting spectrum, etc.) to directly estimate object color.
• Hard to “factor out” these unknowns to get an estimate of object color
– In machine vision, we often don’t bother to!
• Use knowledge, constraints, and computation to solve for object color estimates
– Not perfect, but usually works…
• If color vision is so hard, why use it?

Material Property
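The dependencies listed on the "Color Image" slide above are commonly written as a single integral over wavelength. The excerpt itself does not give a formula, so the expression below is the standard textbook image formation model, stated here as an assumption; the integration limits simply reuse the 360 nm to 780 nm range quoted on the slide, and the "Processing" step is left out.

```latex
P_i = \int_{360\,\mathrm{nm}}^{780\,\mathrm{nm}} E(\lambda)\, R(\lambda)\, S_i(\lambda)\, \mathrm{d}\lambda ,
\qquad i \in \{\text{red},\ \text{green},\ \text{blue}\}
```

Written this way, the point made on the following slide is easy to see: only the product of E(λ) and R(λ) reaches the sensor, so the object's reflectance cannot be separated from the lighting without extra knowledge or constraints.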

Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images

ORIGINAL ARTICLE. Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images. Marshall E Tyler, CRA, FOPS (Wake Forest University, Medical Center Boulevard, Winston-Salem, NC 27157-1033); Larry D Hubbard, MAT (University of Wisconsin; Madison, WI); Ken Boydston (Megavision, Inc.; Santa Barbara, CA); Anthony J Pugliese (Choices for Service in Imaging, Inc., Glen Rock, NJ).

INTRODUCTION

This article examines the impact of digital fundus camera system technology on color retinal image quality, specifically as it affects tonal resolution (brightness, contrast, and color balance). We explore three topics: (1) the nature and characteristics of the silicon-based photo sensor, (2) the manner in which the digital fundus camera incorporates the sensor as part of a complete system, and (3) some considerations as to how to select a digital camera system to satisfy your tonal resolution needs, and how to accept delivery and training on a new system.

... of exposure, a smaller or larger number of photons strikes each sensel, depending upon the brightness of the incident light in the scene. Within the dynamic range of the sensor, it can differentiate specific levels of illumination on a grayscale rather than just black or white. However, that range of detection is not infinite; too dark and no light is detected to provide pictorial detail; too bright and the sensor “maxes out”, thus failing to record detail.

This latter point requires further explanation. Sensels have a fixed capacity to hold electrons generated by incident photons.

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3. Paul M. Hubel, John Liu and Rudolph J. Guttosch, Foveon, Inc., Santa Clara, California.

Abstract

We compared the Spatial Frequency Response (SFR) of image sensors that use the Bayer color filter pattern and Foveon X3 technology for color image capture. Sensors for both consumer and professional cameras were tested. The results show that the SFR for Foveon X3 sensors is up to 2.4x better. In addition to the standard SFR method, we also applied the SFR method using a red/blue edge. In this case, the X3 SFR was 3–5x higher than that for Bayer filter pattern devices.

Introduction

In their native state, the image sensors used in digital image capture devices are black-and-white. To enable color capture, small color filters are placed on top of each photodiode. The filter pattern most often used is derived in some way from the Bayer pattern¹, a repeating array of red, green, and blue pixels that lie

Bayer Background

The Bayer pattern, also known as a Color Filter Array (CFA) or a mosaic pattern, is made up of a repeating array of red, green, and blue filter material deposited on top of each spatial location in the array (figure 1). These tiny filters enable what is normally a black-and-white sensor to create color images.

R G R G
G B G B
R G R G
G B G B

Figure 1 Typical Bayer filter pattern showing the alternate sampling of red, green and blue pixels.

By using 2 green filtered pixels for every red or blue, the Bayer pattern is designed to maximize perceived sharpness in the luminance channel, composed mostly of green information.
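The layout in Figure 1 of the excerpt above can be sketched in a few lines of Python. This is only an illustration, assuming the R G / G B tile starts at the top-left corner as drawn in the figure; the function names `bayer_color`, `bayer_tile`, and `color_counts` are hypothetical and do not come from the paper.

```python
def bayer_color(row, col):
    """Return the filter color at (row, col) for the Figure 1 layout.

    Assumption: even rows alternate R, G and odd rows alternate G, B,
    with the pattern anchored at the top-left corner.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def bayer_tile(height, width):
    """Build a height x width grid of filter colors."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


def color_counts(tile):
    """Count how many sensor locations sit under each filter color."""
    counts = {"R": 0, "G": 0, "B": 0}
    for row in tile:
        for color in row:
            counts[color] += 1
    return counts


if __name__ == "__main__":
    tile = bayer_tile(4, 4)
    for row in tile:
        print(" ".join(row))
    print(color_counts(tile))
```

For the 4 × 4 grid of Figure 1 the counts come out as 4 red, 8 green, and 4 blue locations, which is the "2 green filtered pixels for every red or blue" ratio described in the text.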

Comparison of Color Demosaicing Methods

Comparison of color demosaicing methods. Olivier Losson, Ludovic Macaire, Yanqin Yang.

To cite this version: Olivier Losson, Ludovic Macaire, Yanqin Yang. Comparison of color demosaicing methods. Advances in Imaging and Electron Physics, Elsevier, 2010, 162, pp.173-265. 10.1016/S1076-5670(10)62005-8. hal-00683233. HAL Id: hal-00683233, https://hal.archives-ouvertes.fr/hal-00683233, submitted on 28 Mar 2012.

O. Losson, L. Macaire, Y. Yang. Laboratoire LAGIS UMR CNRS 8146 – Bâtiment P2, Université Lille1 – Sciences et Technologies, 59655 Villeneuve d’Ascq Cedex, France.

Keywords: Demosaicing, Color image, Quality evaluation, Comparison criteria

1. Introduction

Today, the majority of color cameras are equipped with a single CCD (Charge-Coupled Device) sensor. The surface of such a sensor is covered by a color filter array (CFA), which consists of a mosaic of spectrally selective filters, so that each CCD element samples only one of the three color components Red (R), Green (G) or Blue (B). The Bayer CFA is the most widely used one to provide the CFA image where each pixel is characterized by only one single color component.

How Digital Cameras Capture Light

Open Your Shutters: How Digital Cameras Capture Light. Avery CirincioneLynch, Eighth Grade Project Paper, Hilltown Cooperative Charter School, June 2014. Cover image courtesy of tumblr.com.

How Can I Develop This: Introduction

Imagine you are walking into a dark room; at first you can't see anything, but as your pupils begin to dilate, you can start to see the details and colors of the room. Now imagine you are holding a film camera in a field of flowers on a sunny day; you focus on a beautiful flower and snap a picture. Once you develop it, it comes out just how you wanted it to, so you hang it on your wall. Lastly, imagine you are standing over that same flower, but this time, after snapping the photograph, instead of having to develop it, you can just download it to a computer and print it out. All three of these scenarios are examples of capturing light. Our eyes use an optic nerve in the back of the eye that converts the image it senses into a set of electric signals and transmits it to the brain (Wikipedia, Eye). Film cameras use film; once the image is projected through the lens and onto the film, a chemical reaction occurs, recording the light. Digital cameras use electronic sensors in the back of the camera to capture the light. Currently, there are two main types of sensors used for digital photography: the charge-coupled device (CCD) and complementary metal-oxide semiconductor (CMOS) sensors. Both of these methods convert the intensity of the light at each pixel into binary form, so the picture can be displayed and saved as a digital file (Wikipedia, Image Sensor).

Raw Image Processing System and Method

(11) EP 3 416 128 A1
(12) EUROPEAN PATENT APPLICATION
(43) Date of publication: 19.12.2018 Bulletin 2018/51
(51) Int Cl.: G06T 3/40 (2006.01), H04N 9/04 (2006.01), H04N 9/64 (2006.01)
(21) Application number: 18178109.7
(22) Date of filing: 15.06.2018
(84) Designated Contracting States: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR. Designated Extension States: BA ME. Designated Validation States: KH MA MD TN
(30) Priority: 15.06.2017 AU 2017902284
(71) Applicant: Blackmagic Design Pty Ltd, Port Melbourne, Victoria 3207 (AU)
(72) Inventor: BUETTNER, Carsten, Port Melbourne, Victoria 3207 (AU)
(74) Representative: Kramer, Dani et al, Mathys & Squire LLP, The Shard, 32 London Bridge Street, London SE1 9SG (GB)
(54) RAW IMAGE PROCESSING SYSTEM AND METHOD
(57) A method (100) of processing raw image data in a camera (10) includes computing (108) a luminance image from the raw image data (102); and computing (112) a chrominance image corresponding to at least one of the sensor’s image colours from the raw image data. The luminance image and chrominance image(s) can represent the same range of colours able to be represented in the raw image data. The chrominance image can have a lower resolution than that of the luminance image. A camera (10) for performing the method is also disclosed.

Description

Field of the invention

[0001] The invention relates to methods of image processing in a camera.

A Low Complexity Image Compression Algorithm for Bayer Color Filter Array

A LOW COMPLEXITY IMAGE COMPRESSION ALGORITHM FOR BAYER COLOR FILTER ARRAY. A Thesis Submitted to the College of Graduate and Postdoctoral Studies In Partial Fulfillment of the Requirements For the Degree of Master of Science In the Department of Electrical and Computer Engineering, University of Saskatchewan, Saskatoon. By K M Mafijur Rahman. © Copyright K M Mafijur Rahman, May 2018. All rights reserved.

PERMISSION TO USE

In presenting this thesis in partial fulfillment of the requirements for a Postgraduate degree from the University of Saskatchewan, I agree that the Libraries of this University may make it freely available for inspection. I further agree that permission for copying of this thesis in any manner, in whole or in part, for scholarly purposes may be granted by the professor or professors who supervised my thesis work or, in their absence, by the Head of the Department or the Dean of the College in which my thesis work was done. It is understood that any copying or publication or use of this thesis or parts thereof for financial gain shall not be allowed without my written permission. It is also understood that due recognition shall be given to me and to the University of Saskatchewan in any scholarly use which may be made of any material in my thesis/dissertation. Requests for permission to copy or to make other use of material in this thesis in whole or part should be addressed to: Head of the Department of Electrical and Computer Engineering, 57 Campus Drive, University of Saskatchewan, Saskatoon, Saskatchewan, S7N 5A9, Canada; OR Dean, College of Graduate and Postdoctoral Studies, University of Saskatchewan, 116 Thorvaldson Building, 110 Science Place, Saskatoon, Saskatchewan S7N 5C9, Canada.

ABSTRACT

Digital images in their raw form require an excessive amount of storage capacity.

Image Compression Before Bayer Demosaicing

Technical Disclosure Commons, Defensive Publications Series, July 13, 2016. IMAGE COMPRESSION BEFORE BAYER DEMOSAICING. David Martin. Follow this and additional works at: http://www.tdcommons.org/dpubs_series

Recommended Citation: Martin, David, "IMAGE COMPRESSION BEFORE BAYER DEMOSAICING", Technical Disclosure Commons, (July 13, 2016) http://www.tdcommons.org/dpubs_series/222

This work is licensed under a Creative Commons Attribution 4.0 License. This Article is brought to you for free and open access by Technical Disclosure Commons. It has been accepted for inclusion in Defensive Publications Series by an authorized administrator of Technical Disclosure Commons.

BACKGROUND

In the typical image processing pipeline in a camera system, a raw Bayer image is first demosaiced to RGB and then afterwards processed and compressed. The resultant file may be, for example, a JPEG file. Image processing may introduce loss, and so when one is interested in the original raw pixels, it may be desirable to move image processing off the camera for post-processing. Post-processing may be performed on a more powerful processor, for example, in a PC. In professional cameras, this may be accomplished by storing the totally raw Bayer image data, and doing all image processing in post-processing. The raw (Bayer) files tend to be relatively large. For example, raw files may be ten times larger than average JPEG files. In many domains, such as most commercial cameras, image compression is not optional. However, compression chips that are used in these domains, such as those used for H.264/AVC or H.265/HEVC, often require raw data to be highly processed before inputting into the compression chip.

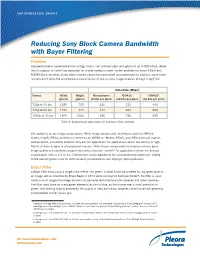

Reducing Sony Block Camera Bandwidth with Bayer Filtering

INFORMATION SHEET: Reducing Sony Block Camera Bandwidth with Bayer Filtering

Problem

High-performance implementations of GigE Vision® can achieve video throughput of up to 980 Mbps. While this throughput is more than adequate for a wide variety of video modes available on Sony FCB-EH and FCB-EV block cameras, some video formats cannot be transmitted uncompressed. In addition, some video formats don’t allow the simultaneous transmission of two or more image streams through a GigE link.

| Format | Width (pixels) | Height (pixels) | Monochrome, 8 bits per pixel (Mbps) | YUV411, 12 bits per pixel (Mbps) | YUV422, 16 bits per pixel (Mbps) |
|---|---|---|---|---|---|
| 720p @ 30 fps | 1280 | 720 | 221 | 332 | 442 |
| 720p @ 60 fps | 1280 | 720 | 442 | 664 | 885 |
| 1080p @ 30 fps | 1920 | 1080 | 498 | 746 | 995 |

Table 1: Approximate data rates for common video formats

One option is to use image compression. While image compression techniques such as MPEG-4, H.264, H.265, JPEG (sometimes referred to as MJPEG or “Motion JPEG”), and JPEG 2000 are mature, well-accepted, and widely adopted, they are not appropriate for applications where low latency or high fidelity of video images is of paramount concern. While these compression techniques achieve good image quality and excellent compression ratios, they are “overkill” for applications where the desired compression ratio is 2:1 or 3:1. Furthermore, these algorithms are computationally expensive, adding to the overall system cost for both cameras (compression) and displays (decompression).

Bayer Filter

A Bayer filter allows only a single color (either red, green, or blue) to be transmitted for any given pixel on an image sensor.
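The figures in Table 1 of the excerpt above follow from multiplying width, height, bits per pixel, and frame rate. The sketch below reproduces them under the assumption that 1 Mbps means 10^6 bits per second and that no protocol overhead is counted; the names `data_rate_mbps`, `fits_link`, and `GIGE_LIMIT_MBPS` are hypothetical and not part of the information sheet.

```python
GIGE_LIMIT_MBPS = 980  # throughput ceiling quoted in the excerpt

# (label, width, height, frames per second), taken from Table 1
FORMATS = [
    ("720p @ 30 fps", 1280, 720, 30),
    ("720p @ 60 fps", 1280, 720, 60),
    ("1080p @ 30 fps", 1920, 1080, 30),
]

# Bits per pixel for each pixel format listed in Table 1
BITS_PER_PIXEL = {"Monochrome": 8, "YUV411": 12, "YUV422": 16}


def data_rate_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed video data rate in Mbps (assuming 1 Mbps = 10**6 bit/s)."""
    return width * height * bits_per_pixel * fps / 1_000_000


def fits_link(rate_mbps, streams=1, limit_mbps=GIGE_LIMIT_MBPS):
    """True if `streams` copies of the stream fit within the link limit."""
    return rate_mbps * streams <= limit_mbps


if __name__ == "__main__":
    for label, width, height, fps in FORMATS:
        for pixel_format, bpp in BITS_PER_PIXEL.items():
            rate = data_rate_mbps(width, height, bpp, fps)
            print(f"{label:15s} {pixel_format:10s} {rate:7.0f} Mbps "
                  f"one stream: {fits_link(rate)}, two streams: {fits_link(rate, 2)}")
```

Under these assumptions, 1080p at 30 fps in YUV422 is the one entry that exceeds 980 Mbps for a single stream, while several others fit once but not twice, which matches the two problems the sheet describes.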

A Review on Various Color Filter Array Techniques

International Journal of Scientific & Engineering Research, Volume 6, Issue 4, April-2015, ISSN 2229-5518. A review on various color filter array techniques. Vikramjeet Singh Goraya, Sharanjit Singh.

Abstract

Most modern digital cameras acquire images using a single image sensor overlaid with a CFA, so demosaicing is part of the processing pipeline required to render these images into a viewable format. Many modern digital cameras can save images in a raw format, allowing the user to demosaic them using software rather than the camera's built-in firmware. Demosaicing has thus become a major area of research in vision processing applications. This paper reviews various demosaicing techniques that have been proposed by various authors. It has been found that most existing techniques suffer from various issues. The paper therefore ends with a suitable future scope to overcome these issues.

Keywords – Artifact, Bayer layer, Demosaicing, Color filter array, Visual sensor

1. INTRODUCTION

Demosaicing is a digital image process used to reconstruct a full color image from the incomplete color samples output from an image sensor overlaid with a color filter array (CFA). It is also known as CFA interpolation. Access to digital cameras is spreading widely since they are simple image input devices. The increasing popularity of digital cameras provides motivation to improve all elements of the digital camera signal chain. To reduce cost, digital color cameras typically use a single image detector. Color imaging with a single detector requires the use of a color filter array (CFA) which covers the detector array.

[Figure: Bayer Filter Samples]

A Study on Various Color Filter Array Based Techniques

International Journal of Computer Applications (0975 – 8887), Volume 114 – No. 4, March 2015. A Study on Various Color Filter Array based Techniques. Simranpreet Kaur, Computer Science and Engineering, Global Institute Of Management and Emerging Technologies, Amritsar; Richa Sharma, Assistant professor, Global Institute Of Management and Emerging Technologies, Amritsar.

ABSTRACT

A demosaicing algorithm is a digital image process used to reconstruct a full color image from the incomplete color samples output from an image sensor overlaid with a color filter array (CFA). It is also known as CFA interpolation or color reconstruction. Most contemporary digital camera models acquire images using a single image sensor overlaid with a CFA, so demosaicing is part of the processing pipeline required to render these images into a viewable format. Many modern digital camera models can save images in a raw format, allowing the user to demosaic them using software, as opposed to utilizing the camera's built-in firmware. Demosaicing has thus become a major area of research in vision processing applications. The key objective of the paper is to examine and analyze various image demosaicing techniques. The overall aim is to explore various limitations of the earlier techniques. The paper ends with the gaps identified in earlier techniques.

Keywords: Demosaicing, CFA, Bayer Layer, Smart Cameras.

1. INTRODUCTION

Demosaicing [1]-[4] is a digital image process used to reconstruct a full color image from incomplete color samples output from an image sensor overlaid with a color filter array

Figure 1: Bayer Filter Sample (adapted from [12]); panels: Red, Green, Blue

A digital camera typically has means to reconstruct a whole RGB image using the above information. The resulting image could be something like this:

Figure 2: Reconstructed Image (adapted from [12]); panels: Original, Reconstructed

Image Acquisition and Representation

Image Acquisition and Representation: Some Details. Brent M. Dingle, Ph.D., 2015. Game Design and Development Program, Mathematics, Statistics and Computer Science, University of Wisconsin - Stout.

Lecture Objectives
• Previously
– Image Acquisition and Generation
– Image Display and Image Perception
– HTML5 and Canvas
• Today
– Brief summary of direction of study
– What is a Digital Image?

What we will Study
• Implicitly: Visual Perception
– Light and EM Spectrum, Image Acquisition, Sampling, Quantization
• Image Compression
– General Understanding
• Image Manipulation/Enhancement
– in the Spatial Domain: noise models, noise filtering, image sharpening…
– in the Frequency Domain: Fourier transform, filtering, restoration…
• Image Analysis
– Object Identification,
– Image Recognition,
– Edge/corner detection,
– Circle/line/ellipse detection…

What’s useful in this?
• Reasons for Compression
– Image data needs to be accessed at different time or location
– Limited storage space and transmission bandwidth
• Reasons for Manipulation
– Image data acquisition was non-ideal, transmission was corrupted, or display device is less than optimal: reasons for restoration, enhancement, interpolation…
– Image data may contain sensitive content: hide copyright, prevent counterfeit and forgery
• Reasons for Analysis
– Reduce burden and error of human operators via automation
– Allow a computer to “see” for various AI tasks

What we will Discover
• Digital Image Processing connects many dots
– Linear Algebra, Matrix Theory and Statistics
– Calculus and Fourier