Ultraviolet Imaging with Low Cost Smartphone Sensors: Development and Application of a Raspberry Pi-Based UV Camera

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Advanced Color Machine Vision and Applications

Advanced Color Machine Vision and Applications

Dr. Romik Chatterjee, V.P. Business Development, Graftek Imaging. 2016 V04 - for tutorial 5/4/2016

Outline

• What is color vision? Why is it useful?
• Comparing human and machine color vision
• Physics of color imaging
• A model of color image formation
• Human color vision and measurement
• Color machine vision systems
• Basic color machine vision algorithms
• Advanced algorithms & applications
• Human vision Machine Vision
• Case study

Important terms

What is Color?

• Our perception of wavelengths of light
  • Photometric (neural computed) measures
  • About 360 nm to 780 nm (indigo to deep red)
  • “…the rays are not coloured…” - Isaac Newton
• Machine vision (MV) measures energy at different wavelengths (Radiometric)

nm : nanometer = 1 billionth of a meter

Color Image

• Color image values, Pi, are a function of:
  • Illumination spectrum, E(λ) (lighting), where λ = wavelength
  • Object’s spectral reflectance, R(λ) (object “color”)
  • Sensor responses, Si(λ) (observer, viewer, camera)
  • Processing
• Human <~> MV
  • Eye <~> Camera
  • Brain <~> Processor

Color Vision Estimates Object Color

• The formation of color image values, Pi, has too many unknowns (E(λ) = lighting spectrum, etc.) to directly estimate object color.
• Hard to “factor out” these unknowns to get an estimate of object color
  • In machine vision, we often don’t bother to!
• Use knowledge, constraints, and computation to solve for object color estimates
  • Not perfect, but usually works…
• If color vision is so hard, why use it?

Material Property …
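The slide text says only that the color image values Pi are a function of the illumination spectrum E(λ), the object's spectral reflectance R(λ) and the sensor responses Si(λ). A minimal sketch of that dependence follows, assuming the common textbook form in which the three spectra are multiplied and summed over wavelength; that product-and-sum form, the function name `channel_value` and the three-sample spectra are illustrative assumptions, not taken from the slides.

```python
def channel_value(illumination, reflectance, sensitivity, step_nm):
    """Approximate P_i as step * sum(E * R * S_i) over sampled wavelengths.

    Assumption: a simple product-and-sum model; all three spectra are
    sampled at the same, evenly spaced wavelengths.
    """
    if not (len(illumination) == len(reflectance) == len(sensitivity)):
        raise ValueError("all spectra must be sampled at the same wavelengths")
    total = sum(e * r * s for e, r, s in zip(illumination, reflectance, sensitivity))
    return step_nm * total


# Hypothetical spectra sampled at 450, 550 and 650 nm (100 nm step).
flat_light = [1.0, 1.0, 1.0]          # E: equal energy at each sample
reddish_object = [0.1, 0.2, 0.9]      # R: reflects mostly long wavelengths
sensors = {                           # S_i: made-up R, G, B sensor responses
    "R": [0.0, 0.1, 1.0],
    "G": [0.1, 1.0, 0.1],
    "B": [1.0, 0.1, 0.0],
}

pixel = {name: channel_value(flat_light, reddish_object, s, 100.0)
         for name, s in sensors.items()}
print(pixel)
```

With these made-up numbers the R value comes out largest and the B value smallest. Changing `flat_light` while keeping the object fixed changes all three values, which is the "too many unknowns" problem the slides describe.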

Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images

Characteristics of Digital Fundus Camera Systems Affecting Tonal Resolution in Color Retinal Images

ORIGINAL ARTICLE

Marshall E Tyler, CRA, FOPS (Wake Forest University, Medical Center Boulevard, Winston-Salem, NC 27157-1033, [email protected]); Larry D Hubbard, MAT (University of Wisconsin, Madison, WI); Ken Boydston (Megavision, Inc., Santa Barbara, CA); Anthony J Pugliese (Choices for Service in Imaging, Inc., Glen Rock, NJ)

INTRODUCTION

This article examines the impact of digital fundus camera system technology on color retinal image quality, specifically as it affects tonal resolution (brightness, contrast, and color balance). We explore three topics: (1) the nature and characteristics of the silicon-based photo sensor, (2) the manner in which the digital fundus camera incorporates the sensor as part of a complete system, and (3) some considerations as to how to select a digital camera system to satisfy your tonal resolution needs, and how to accept delivery and training on a new system.1

… of exposure, a smaller or larger number of photons strikes each sensel, depending upon the brightness of the incident light in the scene. Within the dynamic range of the sensor, it can differentiate specific levels of illumination on a grayscale rather than just black or white. However, that range of detection is not infinite; too dark and no light is detected to provide pictorial detail; too bright and the sensor “maxes out”, thus failing to record detail.

This latter point requires further explanation. Sensels have a fixed capacity to hold electrons generated by incident photons.

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3

Paul M. Hubel, John Liu and Rudolph J. Guttosch, Foveon, Inc., Santa Clara, California

Abstract

We compared the Spatial Frequency Response (SFR) of image sensors that use the Bayer color filter pattern and Foveon X3 technology for color image capture. Sensors for both consumer and professional cameras were tested. The results show that the SFR for Foveon X3 sensors is up to 2.4x better. In addition to the standard SFR method, we also applied the SFR method using a red/blue edge. In this case, the X3 SFR was 3–5x higher than that for Bayer filter pattern devices.

Introduction

In their native state, the image sensors used in digital image capture devices are black-and-white. To enable color capture, small color filters are placed on top of each photodiode. The filter pattern most often used is derived in some way from the Bayer pattern [1], a repeating array of red, green, and blue pixels that lie …

Bayer Background

The Bayer pattern, also known as a Color Filter Array (CFA) or a mosaic pattern, is made up of a repeating array of red, green, and blue filter material deposited on top of each spatial location in the array (figure 1). These tiny filters enable what is normally a black-and-white sensor to create color images.

Figure 1. Typical Bayer filter pattern showing the alternate sampling of red, green and blue pixels. (Filter layout, row by row: R G R G / G B G B / R G R G / G B G B.)

By using 2 green filtered pixels for every red or blue, the Bayer pattern is designed to maximize perceived sharpness in the luminance channel, composed mostly of green information.
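The Bayer layout described in this excerpt (rows alternating R G R G and G B G B, with two green sites for every red or blue) can be sketched in a few lines of Python. The function names `bayer_filter` and `to_mosaic` are hypothetical, and placing red at the top-left corner follows Figure 1 of the excerpt; real sensors may start the pattern on a different corner.

```python
def bayer_filter(row, col):
    """Return the filter color at (row, col) for the R G / G B layout."""
    if row % 2 == 0 and col % 2 == 0:
        return "R"
    if row % 2 == 1 and col % 2 == 1:
        return "B"
    return "G"  # the two remaining sites in every 2x2 cell are green


def to_mosaic(rgb_image):
    """Keep only the filtered component at each pixel of a full RGB image.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    The result holds a single value per pixel, as a Bayer sensor would.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_filter(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


# Print the 4x4 layout shown in Figure 1 of the excerpt.
layout = [[bayer_filter(r, c) for c in range(4)] for r in range(4)]
for row in layout:
    print(" ".join(row))
```

Because each site records only one component, the other two must later be estimated from neighboring sites, which is the step that costs the spatial resolution the paper measures.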

Comparison of Color Demosaicing Methods

Comparison of color demosaicing methods

Olivier Losson, Ludovic Macaire, Yanqin Yang

To cite this version: Olivier Losson, Ludovic Macaire, Yanqin Yang. Comparison of color demosaicing methods. Advances in Imaging and Electron Physics, Elsevier, 2010, 162, pp.173-265. 10.1016/S1076-5670(10)62005-8. hal-00683233

HAL Id: hal-00683233, https://hal.archives-ouvertes.fr/hal-00683233. Submitted on 28 Mar 2012.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Comparison of color demosaicing methods

O. Losson (a,∗), L. Macaire (a), Y. Yang (a)

(a) Laboratoire LAGIS UMR CNRS 8146 – Bâtiment P2, Université Lille1 – Sciences et Technologies, 59655 Villeneuve d’Ascq Cedex, France

Keywords: Demosaicing, Color image, Quality evaluation, Comparison criteria

1. Introduction

Today, the majority of color cameras are equipped with a single CCD (Charge-Coupled Device) sensor. The surface of such a sensor is covered by a color filter array (CFA), which consists of a mosaic of spectrally selective filters, so that each CCD element samples only one of the three color components Red (R), Green (G) or Blue (B). The Bayer CFA is the most widely used one to provide the CFA image, where each pixel is characterized by only one single color component.

Photographing Fluorescent Minerals with Mineralight and Blak-Ray

Application Bulletin UVP-AB-120

Corporate Headquarters: UVP, Inc., 2066 W. 11th Street, Upland, CA 91786 USA. Telephone: (800)452-6788 or (909)946-3197. E-Mail: [email protected]

European Operations: Ultra-Violet Products Limited, Unit 1, Trinity Hall Farm Estate, Nuffield Road, Cambridge CB4 1TG UK. Telephone: 44(0)1223-420022. E-Mail: [email protected]

PHOTOGRAPHING FLUORESCENT MINERALS WITH MINERALIGHT AND BLAK-RAY LAMPS

Taking pictures in ultraviolet light of fluorescent minerals is a relatively simple operation if one follows certain basic requirements and understands the several variables that control what is obtained on the picture. There are several factors that control obtaining the best picture, and among these are the size or power of the ultraviolet light source, the distance of the light source to the subject, the type of film used, the fluorescent color of the mineral specimen, the type of filter on the camera, and the final exposure of the picture. These subjects are taken up below in order.

The size of the light source will make a difference as far as the picture is concerned because the more powerful the light source, the shorter the exposure.

The distance of the ultraviolet light to the subject is also of great importance, for the closer the light source is to the subject being photographed, the brighter the fluorescence will be and the shorter the exposure required. While tubular light sources do not vary according to the so-called square law, nevertheless, moving the light source in to one-half of its original distance will more than double the light intensity, with a corresponding increase in fluorescent brightness.

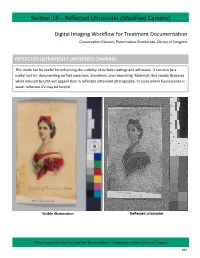

Reflected Ultraviolet (Modified Camera)

Section 18: Reflected Ultraviolet (Modified Camera)

Digital Imaging Workflow for Treatment Documentation, Conservation Division, Preservation Directorate, Library of Congress

REFLECTED ULTRAVIOLET (MODIFIED CAMERA)

This mode can be useful for enhancing the visibility of surface coatings and adhesives. It can also be a useful tool for documenting surface abrasions, accretions, and retouching. Materials that visibly fluoresce when induced by UVA will appear dark in reflected ultraviolet photography. In cases where fluorescence is weak, reflected UV may be helpful.

[Example images: visible illumination; reflected ultraviolet. Figures 18.01, 18.02 and 18.03.]

Capture

Preliminary

If not done already, capture a visible illumination image with the modified camera following instructions in Section 14. Wear UV goggles when the UV lights are on.

Set Up

1. Use the Nikon D700 modified camera and the CoastalOpt lens.
2. The CoastalOpt lens MUST be set at the minimum aperture setting of f/45 to utilize the electronic aperture function (otherwise the camera will display FEE).
3. The PECA 900 and X-Nite BP1 filters are used for reflected ultraviolet photography with the modified camera (fig 18.01). The filters are stored in the studio cabinet. Very carefully, screw both filters onto the end of the camera lens. The order in which the filters are screwed on does not matter.
4. Plug in the UV lamps. It is important to plug in both cords (two for each lamp) for at least 20 seconds before switching on the lamps.

Introduction to Photography

Introduction to Photography

Study Material for Students: Introduction to Photography

CAREER OPPORTUNITIES IN MEDIA WORLD

Mass communication and Journalism is institutionalized and source specific. It functions through well-organized professionals and has an ever increasing interlace. Mass media has a global availability and it has converted the whole world into a global village. A qualified journalism professional can take up a job of educating, entertaining, informing, persuading, interpreting, and guiding. Working in print media offers the opportunities to be a news reporter, news presenter, an editor, a feature writer, a photojournalist, etc. Electronic media offers great opportunities of being a news reporter, news editor, newsreader, programme host, interviewer, cameraman, producer, director, etc.

Other titles of Mass Communication and Journalism professionals are script writer, production assistant, technical director, floor manager, lighting director, scenic director, coordinator, creative director, advertiser, media planner, media consultant, public relation officer, counselor, front office executive, event manager and others.

INTRODUCTION

The book will introduce the student to the techniques of photography. The book deals with the basic steps in photography. Students will also learn the different types of photography. The book also focuses on the various parts of a photographic camera and the various tools of photography. Students will learn the art of taking a good picture. The book also has an introduction to photojournalism and the basic steps of film development in photography.

How Digital Cameras Capture Light

Open Your Shutters: How Digital Cameras Capture Light

Avery CirincioneLynch. Eighth Grade Project Paper, Hilltown Cooperative Charter School, June 2014. Cover image courtesy of tumblr.com

How Can I Develop This: Introduction

Imagine you are walking into a dark room; at first you can't see anything, but as your pupils begin to dilate, you can start to see the details and colors of the room. Now imagine you are holding a film camera in a field of flowers on a sunny day; you focus on a beautiful flower and snap a picture. Once you develop it, it comes out just how you wanted it to, so you hang it on your wall. Lastly, imagine you are standing over that same flower, but this time, after snapping the photograph, instead of having to develop it, you can just download it to a computer and print it out.

All three of these scenarios are examples of capturing light. Our eyes use an optic nerve in the back of the eye that converts the image it senses into a set of electric signals and transmits it to the brain (Wikipedia, Eye). Film cameras use film; once the image is projected through the lens and onto the film, a chemical reaction occurs, recording the light. Digital cameras use electronic sensors in the back of the camera to capture the light. Currently, there are two main types of sensors used for digital photography: the charge-coupled device (CCD) and complementary metal-oxide semiconductor (CMOS) sensors. Both of these methods convert the intensity of the light at each pixel into binary form, so the picture can be displayed and saved as a digital file (Wikipedia, Image Sensor).

Raw Image Processing System and Method

(11) EP 3 416 128 A1

(12) EUROPEAN PATENT APPLICATION

(43) Date of publication: 19.12.2018 Bulletin 2018/51

(51) Int Cl.: G06T 3/40 (2006.01), H04N 9/04 (2006.01), H04N 9/64 (2006.01)

(21) Application number: 18178109.7

(22) Date of filing: 15.06.2018

(84) Designated Contracting States: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR. Designated Extension States: BA ME. Designated Validation States: KH MA MD TN

(30) Priority: 15.06.2017 AU 2017902284

(71) Applicant: Blackmagic Design Pty Ltd, Port Melbourne, Victoria 3207 (AU)

(72) Inventor: BUETTNER, Carsten, Port Melbourne, Victoria 3207 (AU)

(74) Representative: Kramer, Dani et al, Mathys & Squire LLP, The Shard, 32 London Bridge Street, London SE1 9SG (GB)

(54) RAW IMAGE PROCESSING SYSTEM AND METHOD

(57) A method (100) of processing raw image data in a camera (10) includes computing (108) a luminance image from the raw image data (102); and computing (112) a chrominance image corresponding to at least one of the sensor’s image colours from the raw image data. The luminance image and chrominance image(s) can represent the same range of colours able to be represented in the raw image data. The chrominance image can have a lower resolution than that of the luminance image. A camera (10) for performing the method is also disclosed.

Printed by Jouve, 75001 PARIS (FR)

Description

Field of the invention

[0001] The invention relates to methods of image processing in a camera.

Redalyc.Technical Photography for Mural Paintings: the Newly

Conservar Património, E-ISSN: 2182-9942, [email protected]. Associação Profissional de Conservadores Restauradores de Portugal, Portugal

Cosentino, A.; Gil, M.; Ribeiro, M.; Di Mauro, R. Technical photography for mural paintings: the newly discovered frescoes in Aci Sant’Antonio (Sicily, Italy). Conservar Património, núm. 20, diciembre, 2014, pp. 23-33. Associação Profissional de Conservadores Restauradores de Portugal, Lisboa, Portugal

Available in: http://www.redalyc.org/articulo.oa?id=513651365003

Article / Artigo

Technical photography for mural paintings: the newly discovered frescoes in Aci Sant’Antonio (Sicily, Italy)

A. Cosentino (1,*), M. Gil (2), M. Ribeiro (3), R. Di Mauro (4)

1 Cultural Heritage Science Open Source, Piazza Cantarella, 11 Aci Sant’Antonio, 95025, Italy
2 Hercules Laboratory, Évora University, Palácio do Vimioso 8, 7000-809 Évora, Portugal
3 Professional photographer (freelancer)
4 Professional architect (freelancer)
* [email protected]

Abstract

A cycle of 18th century frescoes, depicting the last days of Christ on earth, were recently discovered in Aci Sant’Antonio (Sicily, Italy). The paintings survive along the corners of an originally square chapel that was altered in the early 20th century, acquiring the current octagonal plan. This paper presents the results of the technical photography documentation of these wall paintings and illustrates the methodological challenges that were posed during their examination. Raking light photography was used to reveal the paintings’ state of conservation, details of the plaster work and painting techniques.

Keywords: Panoramic photography; Infrared photography; Ultraviolet photography; Raking light photography; Infrared false color; Mural paintings

Effects of Light on Materials in Collections

Effects of Light on Materials in Collections: Data on Photoflash and Related Sources

Terry T. Schaeffer. Research in Conservation, The Getty Conservation Institute, 2001

Dinah Berland: Editorial Project Manager. Elizabeth Maggio: Manuscript Editor. Anita Keys: Production Coordinator. Hespenheide Design: Designer

Printed in the United States of America. © 2001 The J. Paul Getty Trust. Second printing 2002. Getty Publications, 1200 Getty Center Drive, Suite 500, Los Angeles, California 90049–1682, www.getty.edu

The Getty Conservation Institute

The Getty Conservation Institute works internationally to advance conservation in the visual arts. The Institute serves the conservation community through scientific research, education and training, model field projects, and the dissemination of information. The Institute is a program of the J. Paul Getty Trust, an international cultural and philanthropic institution devoted to the visual arts and the humanities.

Research in Conservation

The Research in Conservation reference series presents the findings of research conducted by the Getty Conservation Institute and its individual and institutional research partners, as well as state-of-the-art reviews of conservation literature. Each volume covers a topic of current interest to conservators and conservation scientists. Other volumes in the Research in Conservation series include Biodeterioration of Stone in Tropical Environments: An Overview (Kumar and Kumar 1999); Inert Gases in the Control of Museum Insect Pests (Selwitz and Maekawa 1998); Oxygen-Free Museum Cases (Maekawa 1998); Accelerated Aging: Photochemical and Thermal Aspects (Feller 1994); Airborne Particles in Museums (Nazaroff, Ligoki, et al. …

UV and IR Photography

UV & IR PHOTOGRAPHY IN FORENSIC SCIENCE

INTRODUCTION

• Ultraviolet and infrared have valuable application in law enforcement photography.
• Photography utilizing these radiations may provide information about an object or material which cannot be obtained by other photographic methods. Either or both could be considered as part of a physical examination in cases where visual examination or photography by normal processes fail.
• The visible spectrum ranges from blue at 400 nm to red at 700 nm. Ultraviolet wavelengths are shorter than 400 nm and infrared wavelengths are longer than 700 nm.
• They provide us with no visual response and therefore are not considered to be light. Certain narrow bands of this invisible radiation, however, have practical photographic applications.
• All standard photographic papers and photographic films are sensitive to UV. They are not, however, sensitive to infrared. Special film, sensitized to infrared radiations, is required for infrared photography. This infrared film is also sensitive to blue-violet and to ultraviolet.
• Developing and printing techniques are similar to the normal processes for both ultraviolet and infrared photography.
• The good thing is that both processes are non-destructive to inanimate objects. For life forms, the usual precautions regarding exposure to the eyes should be heeded. In cases of uncertainty as to which technique might produce the best results, you may have to try all.

ULTRAVIOLET PHOTOGRAPHY

• Ultraviolet Radiation
• For practical photographic purposes we use only the long wave ultraviolet band.
• Long wave ultraviolet: 320 to 400 nanometres.
• This band is transmitted by regular optical glass, of which most photographic lenses are made and, therefore, is of most practical value in ultraviolet photography.
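The band limits quoted in this excerpt (visible from 400 nm to 700 nm, ultraviolet below, infrared above, long wave ultraviolet from 320 to 400 nm) can be written as a small lookup. This is only a sketch: the function name `classify_band` and the label strings are hypothetical, and treating exactly 400 nm as visible is an assumption, since the excerpt gives 400 nm as the edge of both bands.

```python
def classify_band(wavelength_nm):
    """Name the spectral band for a wavelength, using the excerpt's limits."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    if wavelength_nm < 320:
        return "ultraviolet (shorter than the long-wave band)"
    if wavelength_nm < 400:   # assumption: 400 nm itself counts as visible
        return "long-wave ultraviolet"
    if wavelength_nm <= 700:
        return "visible"
    return "infrared"


# Hypothetical example wavelengths, in nanometres.
for nm in (254, 365, 550, 850):
    print(nm, "nm:", classify_band(nm))
```

Only the long-wave ultraviolet result corresponds to the band the excerpt describes as transmitted by regular optical glass and therefore usable with ordinary lenses.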