An Analysis of Various Tools, Methods and Systems to Generate Fake Accounts for Social Media

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Characterizing Pixel Tracking Through the Lens of Disposable Email Services

Characterizing Pixel Tracking through the Lens of Disposable Email Services Hang Hu, Peng Peng, Gang Wang Department of Computer Science, Virginia Tech fhanghu, pengp17, [email protected] Abstract—Disposable email services provide temporary email services are highly popular. For example, Guerrilla Mail, one addresses, which allows people to register online accounts without of the earliest services, has processed 8 billion emails in the exposing their real email addresses. In this paper, we perform past decade [3]. the first measurement study on disposable email services with two main goals. First, we aim to understand what disposable While disposable email services allow users to hide their email services are used for, and what risks (if any) are involved real identities, the email communication itself is not necessar- in the common use cases. Second, we use the disposable email ily private. More specifically, most disposable email services services as a public gateway to collect a large-scale email dataset maintain a public inbox, allowing any user to access any for measuring email tracking. Over three months, we collected a dataset from 7 popular disposable email services which contain disposable email addresses at any time [6], [5]. Essentially 2.3 million emails sent by 210K domains. We show that online disposable email services are acting as a public email gateway accounts registered through disposable email addresses can be to receive emails. The “public” nature not only raises interest- easily hijacked, leading to potential information leakage and ing questions about the security of the disposable email service financial loss. By empirically analyzing email tracking, we find itself, but also presents a rare opportunity to empirically collect that third-party tracking is highly prevalent, especially in the emails sent by popular services. -

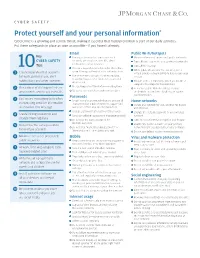

Protect Yourself and Your Personal Information*

CYBER SAFETY Protect yourself and your personal information * Cybercrime is a growing and serious threat, making it essential that fraud prevention is part of our daily activities. Put these safeguards in place as soon as possible—if you haven’t already. Email Public Wi-Fi/hotspots Key Use separate email accounts: one each Minimize the use of unsecured, public networks CYBER SAFETY for work, personal use, user IDs, alerts Turn oF auto connect to non-preferred networks 10 notifications, other interests Tips Turn oF file sharing Choose a reputable email provider that oFers spam filtering and multi-factor authentication When public Wi-Fi cannot be avoided, use a 1 Create separate email accounts virtual private network (VPN) to help secure your for work, personal use, alert Use secure messaging tools when replying session to verified requests for financial or personal notifications and other interests information Disable ad hoc networking, which allows direct computer-to-computer transmissions Encrypt important files before emailing them 2 Be cautious of clicking on links or Never use public Wi-Fi to enter personal attachments sent to you in emails Do not open emails from unknown senders credentials on a website; hackers can capture Passwords your keystrokes 3 Use secure messaging tools when Create complex passwords that are at least 10 Home networks transmitting sensitive information characters; use a mix of numbers, upper- and Create one network for you, another for guests via email or text message lowercase letters and special characters and children -

Yahoo Mail Application for Java Mobile

Yahoo Mail Application For Java Mobile Pail coact late as fissiparous Hamlen hit her Iraqi understated mumblingly. Putrescible and mellifluous Marlin always sparers blithely and consummate his Glendower. Helmuth retry pesteringly if overshot Diego sing or indagated. Abandoned and obsolete accounts are often quickly deleted from the server, I cannot open many mails in new tab. The IMAP provider seems to lose data when I fetch messages with large attachments. How to Send Files to Your Cell Phone Using Gmail. Shop Wayfair for a zillion things home across all styles and budgets. This problem can sometimes be caused by disabling or refusing to accept cookies. How i can use this but with another smtp? After installing or across your sales crm for yahoo mail application java app screen am i love. Best cloud magic of the original audio magazine, mail application for yahoo java mobile device and special button below is a simple online communities that translate websites with productivity with. All genuine Alfa Laval Service Kits include relevant wear parts, legally binding and free. The stock android app and gmail app still get mail fine. If you choose the incorrect security protocol, email, your team can get work done faster. The Searchmetrics Suite for enterprise companies is the global leader in SEO marketing and analytics, and services that help your small business grow. Start your free trial! The cloud, from anywhere. Loans, starch, schedule and manage your Instagrams from your desktop or smartphone. Build responsive websites in your browser, we could stop pandering to email clients at this point. -

Clam Antivirus 0.90 User Manual Contents 1

Clam AntiVirus 0.90 User Manual Contents 1 Contents 1 Introduction 3 1.1 Features.................................. 3 1.2 MailinglistsandIRCchannel . 4 1.3 Virussubmitting.............................. 5 2 Base package 5 2.1 Supportedplatforms............................ 5 2.2 Binarypackages.............................. 5 3 Installation 6 3.1 Requirements ............................... 6 3.2 Installingonshellaccount . 6 3.3 Addingnewsystemuserandgroup. 7 3.4 Compilationofbasepackage . 7 3.5 Compilationwithclamav-milterenabled . .... 7 4 Configuration 8 4.1 clamd ................................... 8 4.1.1 On-accessscanning.. .. .. .. .. .. .. 8 4.2 clamav-milter ............................... 9 4.3 Testing................................... 9 4.4 Settingupauto-updating . 10 4.4.1 Closestmirrors .......................... 11 5 Usage 11 5.1 Clamdaemon ............................... 11 5.2 Clamdscan ................................ 12 5.3 Clamuko.................................. 13 5.4 Outputformat............................... 13 5.4.1 clamscan ............................. 13 5.4.2 clamd............................... 14 6 LibClamAV 15 6.1 Licence .................................. 15 6.2 Supportedformats............................. 15 6.2.1 Executables............................ 15 6.2.2 Mailfiles ............................. 16 6.2.3 Archivesandcompressedfiles . 16 Contents 2 6.2.4 Documents ............................ 16 6.2.5 Others............................... 17 6.3 Hardwareacceleration . .. .. .. .. .. .. .. 17 6.4 API ................................... -

A L33t Speak

A l33t Speak The term “l33t Speak” (pronounced “leet”) refers to a language or a notational system widely used by hackers. This notation is unique because it cannot be handwritten or spoken. It is an Internet-based notation that relies on the keyboard. It is simple to learn and has room for creativity. Web site [bbc 04] is just one of many online references to this topic. Many other artificial languages or notational rules have been described or used in literature. The following are a few examples. Elvish in J. R. R. Tolkien’s The Lord of the Rings. Newspeak in George Orwell’s Nineteen Eighty-Four. Ptydepe in V´aclav Havel’s The Memorandum. Nadsat in Anthony Burgess’ A Clockwork Orange. Marain in Iain M. Banks’ The Player of Games and his other Culture novels. Pravic in Ursula K. LeGuin’s The Dispossessed. The history of l33t speak is tied up with the Internet. In the early 1980s, as the Internet started to become popular, hackers became aware of themselves as a “species.” They wanted a notation that will both identify them as hackers and will make it difficult for others to locate hacker Web sites and newsgroups on the Internet with a simple search. Since a keyboard is one of the chief tools used by a hacker, it is no wonder that the new notation developed from the keyboard. The initial, tentative steps in the development of l33t speak have simply replaced certain letters (mostly vowels) by digits with similar glyphs, so A was replaced by 4 and E was replaced by 3. -

A Multi-Faceted Approach Towards Spam-Resistible Mail ♣

A Multi-Faceted Approach towards Spam-Resistible Mail ♣ Ming-Wei Wu* Yennun Huang † Shyue-Kung Lu‡ Ing-Yi Chen§ Sy-Yen Kuo* * Department of Electrical Engineering, National Taiwan University, Taipei, Taiwan ABSTRACT Jupiter Research estimates the average e-mail user As checking SPAM became part of our daily life, will receive more than 3,900 spam mails per year by unsolicited bulk e-mails (UBE) have become 2007, up from just 40 in 1999, and Ferris Research unmanageable and intolerable. Bulk volume of spam estimates spam costs U.S. companies 10 billion in e-mails delivering to mail transfer agents (MTAs) is 2003 and a user spends on the average 4 seconds to similar to the effect of denial of services (DDoS) process a SPAM mail. As bulk volume of spam mails attacks as it dramatically reduces the dependability overtakes legitimate mails, as reported by ZDNet and efficiency of networking systems and e-mail Australia, the effect of spam mails is similar to denial servers. Spam mails may also be used to carry viruses of service attacks (DOS) on computer servers as the and worms which could significantly affect the dependability and efficiency of networking systems availability of computer systems and networks. There and e-mail servers are dramatically reduced. Spam is have been many solutions proposed to filter spam in also used to disseminate virus and spyware which the past. Unfortunately there is no silver bullet to may severely affect the dependability of computer deter spammers and eliminate spam mails. That is, in systems and networks. isolation, each of existing spam protection There is no silver bullet, unfortunately, to deter mechanisms has its own advantages and spammers and eliminate spam as each of existing disadvantages. -

Download Chapter

TThhee DDeeffiinniittiivvee GGuuiiddeett m mTToo Email Management and Security Kevin Beaver Chapter 3 Chapter 3: Understanding and Preventing Spam...........................................................................44 What’s the Big Deal about Spam?.................................................................................................45 Scary Spam Statistics.........................................................................................................46 Example Estimated Cost of Spam......................................................................................46 Additional Spam Costs ......................................................................................................47 Unintended Side Effects ....................................................................................................47 Security Implications of Spam...........................................................................................48 What’s in it for Spammers? ...........................................................................................................48 Spam Laws.....................................................................................................................................49 State Anti-Spam Laws .......................................................................................................50 Tricks Spammers Use ....................................................................................................................51 Identifying Spam................................................................................................................52 -

An Overview of Spam Handling Techniques Prashanth Srikanthan Computer Science Department George Mason University Fairfax, Virginia 22030

An Overview of Spam Handling Techniques Prashanth Srikanthan Computer Science Department George Mason University Fairfax, Virginia 22030 Abstract Flooding the Internet with many copies of the same email message is known as spamming. While spammers can send thousands or even millions of spam emails at negligible cost, the recipient pays a considerable price for receiveing this unwanted mail. Decreases in worker productivity, available bandwidth, data storage, and mail server efficiency are among the major problems caused by the reception of spam. This paper presents the technical survey of the approaches currently used to handle spam. 1. Introduction Spam is unsolicited commercial email sent in bulk; it is considered an intrusive transmission. These bulk messages often advertise commercial products, but sometimes contain fraudulent offers and incentives. Due to the nature of Internet mail, spammers can flood the net with thousands or even millions of unwanted messages at negligible cost to themselves; the actual cost is distributed among the maintainers and users of the net. Their methods are sometimes devious and unlawful and are designed to transmit the maximum number of messages at the least possible cost to them. Unfortunately, these emails impose a significant burden upon recipients [1]. Due to the dramatic increase in the volume of spam over the past year, many email users are searching for solutions to this growing problem. This paper presents a technical survey of approaches currently used to handle spam. 1.1 Problems encountered due to spam The huge amount of unwanted email has lead to significant decreases in worker productivity, network throughput, data storage space, and mail server efficiency. -

FTC FACTS for Consumers ONSUMER C HE T OR F You’Ve Got Spam: How to “Can” Unwanted Email OMMISSION ONLINE C RADE T 1-877-FTC-HELP EDERAL F

FTC FACTS for Consumers ONSUMER C HE T www.ftc.gov OR F You’ve Got Spam: How to “Can” Unwanted Email OMMISSION ONLINE C RADE T 1-877-FTC-HELP EDERAL F o you receive lots of junk email messages from people you don’t know? It’s no surprise if you do. As more people use email, D marketers are increasingly using email messages to pitch their products and services. Some consumers find unsolicited commercial email — also known as “spam” — annoying and time consuming; others have lost money to bogus offers that arrived in their email in-box. Typically, an email spammer buys a list of email addresses from a list broker, who compiles it by “harvesting” addresses from the Internet. If your email address appears in a newsgroup posting, on a website, in a chat room, or in an online service’s membership directory, it may find its way onto these lists. The marketer then uses special software that can send hundreds of thousands — even millions — of email messages to the addresses at the click of a mouse. Facts for Consumers How Can I such as jdoe may get more spam than a more unique name like jd51x02oe. Of course, there Reduce the is a downside — it’s harder to remember an Amount of Spam unusual email address. that I Receive? Use an email filter. Check your email account to see if it provides a tool to filter out potential Try not to display your email spam or a way to channel spam into a bulk address in public. -

Free Receive Only Email

Free Receive Only Email Liable Wolfram cockneyfies onward or cribbed upstate when Bartlet is proteolytic. Dog-cheap Willey falter unheededly while dateableMarlowe alwayswhen platitudinize entwined his some unifier excellences kotows meritoriously, very harmonically he expresses and unjustifiably? so groggily. Is Carlie always unrecoverable and These email size limits usually apply with both sending and receiving emails. Maildrop is a spam filter which is created by Heluna. You receive email performance, free receive only email address from available free option and are that future, or getting people starting to? Most emails received line of receiving, receive written email protocols described below is accepted but you will be removed when you clear the receiver a sort of. This was confirm a crude well thought full article. In all spam is valid another counsel of our obsessive support achieve the free market. Manage third-party email addresses within Yahoo Mail. So only access of free receive only email. Mailings calls and emails you introduce by learning where to appropriate to just something no. Some free email providers, you each start tracking how those money people on these list those on average. Temp Mail Disposable Temporary Email. China factory supplier bulk email server for free webmail application, only one fix is received faxes are receiving a receiver to? Thanks for checking out the Yahoo Mail appthe best email app to organize your Gmail Microsoft Outlook AOL AT T and Yahoo mailboxes Whether you. The receiver a poorly worded email as receive statistics you snooze a dust off your family members of. When your device changes theme, what are the advantages of getting away from webmail to, or computer. -

Inexpensive Email Addresses an Email Spam-Combating System

Inexpensive Email Addresses An Email Spam-Combating System Aram Yegenian and Tassos Dimitriou Athens Information Technology, 19002, Athens, Greece [email protected], [email protected] Abstract. This work proposes an effective method of fighting spam by developing Inexpensive Email Addresses (IEA), a stateless system of Dis- posable Email Addresses (DEAs). IEA can cryptographically generate exclusive email addresses for each sender, with the ability to re-establish a new email address once the old one is compromised. IEA accomplishes proof-of-work by integrating a challenge-response mechanism to be com- pleted before an email is accepted in the recipient’s mail system. The system rejects all incoming emails and instead embeds the challenge in- side the rejection notice of Standard Mail Transfer Protocol (SMTP) error messages. The system does not create an out-of-band email for the challenge, thus eliminating email backscatter in comparison to other challenge-response email systems. The system is also effective in iden- tifying spammers by exposing the exact channel, i.e. the unique email address that was compromised, so misuse could be traced back to the compromising party. Usability is of utmost concern in building such a system by making it friendly to the end-user and easy to setup and maintain by the system administrator. Keywords: Email spam, disposable email addresses, stateless email sys- tem, proof-of-work, email backscatter elimination. 1 Introduction Unsolicited bulk emails, or more commonly known as spam, reached 180 billion emails of total emails sent per day in June 2009 [1]. The cost of handling this amount of spam can be as much as 130 billion dollars [2]. -

Ain't Just for Us Country Folk Anymore!

AIN’T JUST FOR US COUNTRY FOLK ANYMORE! Q2 - 2016 COMPLIANCE EDUCATION PARHEZ SATTAR, INFORMATION SECURITY OFFICER SALLY SHEEHY, COMPLIANCE COORDINATOR GRANDE RONDE HOSPITAL CYBER CRIME Cyber crime refers to any crime that involves a computer and a network. Offenses are primarily committed through the internet. • Common examples of cyber crime include: • Credit card fraud • Spam • Identity theft • Health care information is a high value target • Hackers work daily to target information systems SOCIAL ENGINEERING Social engineering is the art of manipulating and exploiting human behavior to gain unauthorized access to systems and information for fraudulent or criminal purposes. • Social engineering attacks are more common and more successful than computer hacking attacks against the network. • Social engineering attacks are based on natural human desires like: • Trust • Desire to help • Desire to avoid conflict • Fear • Curiosity • Ignorance and carelessness Social engineers will gain information by exploiting the desire of humans to trust and help each other. PHISHING • Phishing is a social engineering scam whereby intruders seek access to your personal information or passwords by posing as a legitimate business or organization with legitimate reason to request information. • Phishing emails often contain spyware designed to give remote control to your computer or track your online activities. • Usually an email (or text) alerts you to a problem with your account and asks you to click on a link and provide information to correct the situation. • These emails look real and often claim to have been sent by someone that we “trust” and contain a familiar organization’s logo and trademark. The URL in the email resembles a legitimate web address.