Knowledge Spaces Applications in Education Knowledge Spaces

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Knowledge Spaces Applications in Education Knowledge Spaces

Knowledge Spaces: Applications in Education. Editors: Jean-Claude Falmagne (School of Social Sciences, Dept. Cognitive Sciences, University of California, Irvine, Irvine, CA, USA); Dietrich Albert (Department of Psychology, University of Graz, Graz, Austria); Christopher Doble (ALEKS Corporation, Irvine, CA, USA); David Eppstein (Donald Bren School of Information & Computer Sciences, University of California, Irvine, Irvine, CA, USA); Xiangen Hu (Department of Psychology, University of Memphis, Memphis, TN, USA). ISBN 978-3-642-35328-4; ISBN 978-3-642-35329-1 (eBook); DOI 10.1007/978-3-642-35329-1. Springer Heidelberg New York Dordrecht London. Library of Congress Control Number: 2013942001. © Springer-Verlag Berlin Heidelberg 2013. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Exempted from this legal reservation are brief excerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright Law of the Publisher’s location, in its current version, and permission for use must always be obtained from Springer.

Drawing Graphs and Maps with Curves

Report from Dagstuhl Seminar 13151: Drawing Graphs and Maps with Curves. Edited by Stephen Kobourov (University of Arizona, Tucson, US), Martin Nöllenburg (KIT, Karlsruhe Institute of Technology, DE), and Monique Teillaud (INRIA Sophia Antipolis Méditerranée, FR). Abstract: This report documents the program and the outcomes of Dagstuhl Seminar 13151 “Drawing Graphs and Maps with Curves”. The seminar brought together 34 researchers from different areas such as graph drawing, information visualization, computational geometry, and cartography. During the seminar we started with seven overview talks on the use of curves in the different communities represented in the seminar. Abstracts of these talks are collected in this report. Six working groups formed around open research problems related to the seminar topic and we report about their findings. Finally, the seminar was accompanied by the art exhibition Bending Reality: Where Arc and Science Meet with 40 exhibits contributed by the seminar participants. Seminar: 07.–12. April, 2013, www.dagstuhl.de/13151. 1998 ACM Subject Classification: I.3.5 Computational Geometry and Object Modeling, G.2.2 Graph Theory, F.2.2 Nonnumerical Algorithms and Problems. Keywords and phrases: graph drawing, information visualization, computational cartography, computational geometry. Digital Object Identifier: 10.4230/DagRep.3.4.34. Edited in cooperation with Benjamin Niedermann. 1 Executive Summary. Stephen Kobourov, Martin Nöllenburg, Monique Teillaud. License: Creative Commons BY 3.0 Unported license © Stephen Kobourov, Martin Nöllenburg, and Monique Teillaud. Graphs and networks, maps and schematic map representations are frequently used in many fields of science, humanities and the arts.

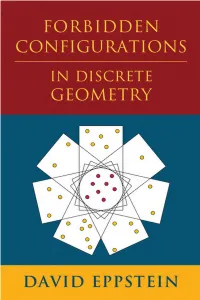

Forbidden Configurations in Discrete Geometry

FORBIDDEN CONFIGURATIONS IN DISCRETE GEOMETRY. This book surveys the mathematical and computational properties of finite sets of points in the plane, covering recent breakthroughs on important problems in discrete geometry and listing many open problems. It unifies these mathematical and computational views using forbidden configurations, which are patterns that cannot appear in sets with a given property, and explores the implications of this unified view. Written with minimal prerequisites and featuring plenty of figures, this engaging book will be of interest to undergraduate students and researchers in mathematics and computer science. Most topics are introduced with a related puzzle or brain-teaser. The topics range from abstract issues of collinearity, convexity, and general position to more applied areas including robust statistical estimation and network visualization, with connections to related areas of mathematics including number theory, graph theory, and the theory of permutation patterns. Pseudocode is included for many algorithms that compute properties of point sets. David Eppstein is Chancellor’s Professor of Computer Science at the University of California, Irvine. He has more than 350 publications on subjects including discrete and computational geometry, graph theory, graph algorithms, data structures, robust statistics, social network analysis and visualization, mesh generation, biosequence comparison, exponential algorithms, and recreational mathematics. He has been the moderator for data structures and algorithms

Graphs in Nature

Graphs in Nature. David Eppstein, University of California, Irvine. Symposium on Geometry Processing, July 2019. Inspiration: Steinitz's theorem. Purely combinatorial characterization of geometric objects: graphs of convex polyhedra are exactly the 3-vertex-connected planar graphs. Image: Kluka [2006]. Overview: Cracked surfaces, bubble foams, and crumpled paper also form natural graph-like structures. What properties do these graphs have? How can we recognize and synthesize them? I. Cracks and Needles. Motorcycle graphs: canonical quad mesh partitioning. Paper at SGP'08 [Eppstein et al. 2008]. Problem: partition irregular quad-mesh into regular submeshes. Inspiration: light cycle game from TRON movies. Mesh partitioning method: Grow cut paths outwards from each irregular (non-degree-4) vertex. Cut paths continue straight across regular (degree-4) vertices. They stop when they run into another path. Result: approximation to optimal partition (exact optimum is NP-complete). Mesh-free motorcycle graphs. Earlier... Motorcycles move from initial points with given velocities. When they hit trails of other motorcycles, they crash [Eppstein and Erickson 1999]. Application of mesh-free motorcycle graphs. Initially: a simplified model of the inward movement of reflex vertices in straight skeletons, a rectilinear variant of medial axes with applications including building roof construction, folding and cutting problems, surface interpolation, geographic analysis, and mesh construction. Later: subroutine for constructing straight skeletons of simple polygons [Cheng and Vigneron 2007; Huber and Held 2012]. Image: Huber [2012]. Construction of mesh-free motorcycle graphs. Main ideas: Define asymmetric distance: time when one motorcycle would crash into another's trail. Repeatedly find closest pair and eliminate crashed motorcycle. Image: Dancede [2011]. O(n^{17/11+ε}) [Eppstein and Erickson 1999]. Improved to O(n^{4/3+ε}) [Vigneron and Yan 2014]. Additional log speedup using mutual nearest neighbors instead of closest pairs [Mamano et al.

Sparsification–A Technique for Speeding up Dynamic Graph Algorithms

Sparsification–A Technique for Speeding Up Dynamic Graph Algorithms. DAVID EPPSTEIN, University of California, Irvine, Irvine, California; ZVI GALIL, Columbia University, New York, New York; GIUSEPPE F. ITALIANO, Università “Ca’ Foscari” di Venezia, Venice, Italy; AND AMNON NISSENZWEIG, Tel-Aviv University, Tel-Aviv, Israel. Abstract. We provide data structures that maintain a graph as edges are inserted and deleted, and keep track of the following properties with the following times: minimum spanning forests, graph connectivity, graph 2-edge connectivity, and bipartiteness in time O(n^{1/2}) per change; 3-edge connectivity, in time O(n^{2/3}) per change; 4-edge connectivity, in time O(nα(n)) per change; k-edge connectivity for constant k, in time O(n log n) per change; 2-vertex connectivity, and 3-vertex connectivity, in time O(n) per change; and 4-vertex connectivity, in time O(nα(n)) per change. A preliminary version of this paper was presented at the 33rd Annual IEEE Symposium on Foundations of Computer Science (Pittsburgh, Pa.), 1992. D. Eppstein was supported in part by National Science Foundation (NSF) Grant CCR 92-58355. Z. Galil was supported in part by NSF Grants CCR 90-14605 and CDA 90-24735. G. F. Italiano was supported in part by the CEE Project No. 20244 (ALCOM-IT) and by a research grant from Università “Ca’ Foscari” di Venezia; most of his work was done while at IBM T. J. Watson Research Center. Authors’ present addresses: D. Eppstein, Department of Information and Computer Science, University of California, Irvine, CA 92717, internet: http://www.ics.

Dynamic Generators of Topologically Embedded Graphs David Eppstein

Dynamic Generators of Topologically Embedded Graphs. David Eppstein, Univ. of California, Irvine, School of Information and Computer Science. D. Eppstein, UC Irvine, SODA 2003. Outline: New results and related work. Review of topological graph theory. Solution technique: tree-cotree decomposition. New Results: Given cellular embedding of graph on surface, construct and maintain generators of fundamental group. Speed up other dynamic graph algorithms for topologically embedded graphs (connectivity, MST). Improve constant in separator theorem for low-genus graphs. Construct low-treewidth tree-decompositions of low-genus low-diameter graphs. New Results: Fundamental Group Generators. Fundamental group is formed by loops on surface. Provides important topological information about surface, used as basis for all our other algorithms. Can be described by a system of generators (independent loops) and relations (concatenations of loops that bound disks). Time to construct this system: O(n). Related work: Canonical schema of Vegter and Yap [SoCG 90] (set of generators satisfying prespecified relations). Time to construct canonical schema: O(gn).

Listing K-Cliques in Sparse Real-World Graphs Maximilien Danisch, Oana Balalau, Mauro Sozio

Listing k-cliques in Sparse Real-World Graphs. Maximilien Danisch, Oana Balalau, Mauro Sozio. To cite this version: Maximilien Danisch, Oana Balalau, Mauro Sozio. Listing k-cliques in Sparse Real-World Graphs. 2018 World Wide Web Conference, Apr 2018, Lyon, France. pp.589-598, 10.1145/3178876.3186125. hal-02085353. HAL Id: hal-02085353, https://hal.archives-ouvertes.fr/hal-02085353. Submitted on 8 Apr 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Listing k-cliques in Sparse Real-World Graphs. Maximilien Danisch (Sorbonne Université, CNRS, Laboratoire d’Informatique de Paris 6, LIP6, F-75005 Paris, France), Oana Balalau (Max Planck Institute for Informatics, Saarbrücken, Germany), Mauro Sozio (LTCI, Télécom ParisTech University, Paris, France). ABSTRACT: Motivated by recent studies in the data mining community which require to efficiently list all k-cliques, we revisit the iconic algorithm of Chiba and Nishizeki and develop the most efficient parallel algorithm for such a problem. [From the introduction:] of edges [36, 40, 49] within a few hours. In contrast, listing all k-cliques is often deemed not feasible with most of the works focusing on approximately counting cliques [31, 42]. Recent works in the data mining and database community call
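To make the k-clique listing task in the excerpt above concrete, here is a minimal sequential sketch in Python. It is not the parallel algorithm of the paper, nor a faithful rendering of Chiba and Nishizeki's method. It only illustrates one common idea, assumed here for illustration: orient each edge from a lower-ranked to a higher-ranked endpoint (ranking by degree) and grow cliques along that orientation so each clique is reported once. The function name and the adjacency-dictionary input format are hypothetical.

```python
from typing import Dict, Iterator, List, Set, Tuple


def list_k_cliques(adj: Dict[int, Set[int]], k: int) -> Iterator[Tuple[int, ...]]:
    """Yield every k-clique of an undirected graph exactly once.

    adj maps each vertex to the set of its neighbours (symmetric, no loops).
    Illustrative sketch only; the ranking rule is an assumption.
    """
    if k < 1:
        return
    # Rank vertices by (degree, id) and keep only edges to higher-ranked
    # neighbours, which turns the graph into an acyclic orientation.
    order = sorted(adj, key=lambda v: (len(adj[v]), v))
    rank = {v: i for i, v in enumerate(order)}
    out = {v: {u for u in adj[v] if rank[u] > rank[v]} for v in adj}

    def extend(clique: List[int], candidates: Set[int]) -> Iterator[Tuple[int, ...]]:
        if len(clique) == k:
            yield tuple(clique)
            return
        for v in sorted(candidates, key=rank.__getitem__):
            # Only higher-ranked common neighbours may extend the clique,
            # so each clique is generated in increasing rank order only.
            yield from extend(clique + [v], candidates & out[v])

    yield from extend([], set(adj))
```

For example, on a 4-vertex complete graph with one extra pendant vertex, the sketch reports four triangles and a single 4-clique.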

R, 44, 177 Abu-Khzam, Faisal N., 51 Agarwal, Pankaj K., 61, 190

Cambridge University Press, 978-1-108-42391-5, Forbidden Configurations in Discrete Geometry, David Eppstein. Index: ∃R, 44, 177; Abu-Khzam, Faisal N., 51; Agarwal, Pankaj K., 61, 190; Aichholzer, Oswin, 10, 34, 131; Ailon, Nir, 59; algorithm, 33; Alimonti, Paola, 45; Alon, Noga, 71; alphabet, 88; Anderson, David Brent, 73; Anning, Norman H., 142; anti-symmetry, 20; antichain, 21, 22, 66, 123, 128, 135, 175, 201; antimatroid, 164; Apollonian network, 185, 186; Appel, Kenneth, 45; approximation ratio, 45, 67, 68, 70, 71, 75, 81–83, 98, 100, 118, 119, 130, 172, 196, 197, 209, 210; APX, 45, 67, 75, 119, 172, 209; area, 35; Arkin, Esther M., 106, 117, 118, 130; Aronov, Boris, 128; arrangement of lines, 59, 62, 116, 177–179, 191, 193; arrow symbol, 88, 90; Aurenhammer, Franz, 10; avoids, 27–29, 31, 39, 48, 49, 52, 55, 72, 75, 106, 119, 120, 126, 171, 172; Balko, Martin, 10; Balogh, Jozsef, 93; Bannister, Michael J., 151, 179, 181, 182; Baran, Ilya, 60; Barnett, Vic, 129; Beck, József, 89; betweenness, 14; Bézout’s theorem, 74; binary relation, 20; binary search, 139; binomial, 107–109; binomial coefficient, 2, 107; bipartite graph, 168, 173–175; bitangent, 16, 17; Björner, Anders, 11; Borwein, Peter, 53; bounded obstacles, 25; bounding box, 198; Brahmagupta, 142; Brandenburg, Franz J., 181, 182; Bremner, David, 136; Brinkmann, Gunnar, 184; brute force search, 44, 51, 62, 77, 172, 184; Bukh, Boris, 151, 157; Burr, Michael A., 140; Burr, Stefan A., 54, 55; Cabello, Sergio, 184; cap, 108; cap-set, 91, 92; Carathéodory, Constantin, 106.

Quasiconvex Programming

Combinatorial and Computational Geometry, MSRI Publications, Volume 52, 2005. Quasiconvex Programming. DAVID EPPSTEIN. This research was supported in part by NSF grant CCR-9912338. Abstract. We define quasiconvex programming, a form of generalized linear programming in which one seeks the point minimizing the pointwise maximum of a collection of quasiconvex functions. We survey algorithms for solving quasiconvex programs either numerically or via generalizations of the dual simplex method from linear programming, and describe varied applications of this geometric optimization technique in meshing, scientific computation, information visualization, automated algorithm analysis, and robust statistics. 1. Introduction. Quasiconvex programming is a form of geometric optimization, introduced in [Amenta et al. 1999] in the context of mesh improvement techniques and since applied to other problems in meshing, scientific computation, information visualization, automated algorithm analysis, and robust statistics [Bern and Eppstein 2001; 2003; Chan 2004; Eppstein 2004]. If a problem can be formulated as a quasiconvex program of bounded dimension, it can be solved algorithmically in a linear number of constant-complexity primitive operations by generalized linear programming techniques, or numerically by generalized gradient descent techniques. In this paper we survey quasiconvex programming algorithms and applications. 1.1. Quasiconvex functions. Let Y be a totally ordered set, for instance the real numbers R or integers Z ordered numerically. For any function f : X → Y, and any value λ ∈ Y, we define the lower level set f^{≤λ} = {x ∈ X | f(x) ≤ λ}. A function q : X → Y, where X is a convex subset of R^d, is called quasiconvex [Dharmadhikari and Joag-Dev 1988] when its lower level sets are all convex. A one-dimensional quasiconvex function is more commonly called unimodal, and
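The definition above can be illustrated in one dimension. The pointwise maximum of quasiconvex functions is again quasiconvex, because its lower level set is the intersection of the individual (convex) lower level sets. The Python sketch below minimizes such a maximum on an interval by ternary search. It is not one of the algorithms surveyed in the paper; it additionally assumes the objective is strictly unimodal (no flat stretches away from the minimum), and the function name and parameters are hypothetical.

```python
from typing import Callable, Sequence


def minimize_max_1d(
    fs: Sequence[Callable[[float], float]],
    lo: float,
    hi: float,
    iters: int = 200,
) -> float:
    """Approximate the minimizer of max_i f_i(x) over [lo, hi].

    Assumption (not from the source): the pointwise maximum is strictly
    unimodal on [lo, hi], so ternary search can discard a third of the
    interval at every step.
    """

    def objective(x: float) -> float:
        return max(f(x) for f in fs)

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) <= objective(m2):
            hi = m2  # a minimizer lies in [lo, m2]
        else:
            lo = m1  # a minimizer lies in [m1, hi]
    return (lo + hi) / 2.0
```

With the three functions |x - 1|, |x - 4| and |x - 9| on the interval [0, 20], the maximum is minimized at the midpoint of the two extreme centers, x = 5.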

Graph-Theoretic Solutions to Computational Geometry Problems

Graph-Theoretic Solutions to Computational Geometry Problems. David Eppstein, Univ. of California, Irvine, Computer Science Department. D. Eppstein, UC Irvine, 2009. Historically, many connections from graph-theoretic algorithms to computational geometry... 1. Geometric analogues of classical graph algorithm problems. Typical issue: using geometric information to speed up naive application of graph algorithms. E.g., Euclidean minimum spanning tree = spanning tree of complete graph with Euclidean distances. Solved in O(n log n) time by Delaunay triangulation [Shamos 1978]. 2. Geometric approaches to graph-theoretic problems. How many different minimum spanning trees can a graph with linearly varying edge weights form? O(m n^{1/3}) via crossing number inequality [Dey, DCG 1998]. Ω(m α(n)) via lower envelopes of line segments [E., DCG 1998]. Today: 3. Graph-theoretic approaches to geometric problems. Geometry leads to auxiliary graph. Special properties of auxiliary graph lead to algorithm. Algorithm on auxiliary graph leads to solution. Minimum-diameter clustering via maximum independent sets in bipartite graphs (more detail later in talk). Outline: Art gallery theorems. Partition into rectangles. Minimum diameter clustering. Bend minimization. Mesh stripification. Angle optimization of tilings. Metric embedding into stars.
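The "naive application of graph algorithms" mentioned in the slides above can be sketched as follows: treat the points as a complete graph with Euclidean edge weights and run Prim's algorithm, which takes quadratic time. This is the baseline that the Delaunay-based O(n log n) method improves on, not that method itself. The function name is hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from typing import List, Sequence, Tuple


def euclidean_mst(points: Sequence[Tuple[float, float]]) -> List[Tuple[int, int]]:
    """Return MST edges (as index pairs) of the complete Euclidean graph.

    Naive O(n^2) Prim's algorithm; an illustrative baseline only.
    """
    n = len(points)
    if n == 0:
        return []
    in_tree = [False] * n
    best = [math.inf] * n   # cheapest known connection cost to the tree
    parent = [-1] * n       # tree endpoint realising that cost
    best[0] = 0.0
    edges: List[Tuple[int, int]] = []
    for _ in range(n):
        # Pick the cheapest vertex not yet in the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=best.__getitem__)
        in_tree[u] = True
        if parent[u] != -1:
            edges.append((parent[u], u))
        # Relax the implicit complete-graph edges leaving u.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v] = d
                    parent[v] = u
    return edges
```

The design choice here is to never materialize the complete graph: distances are computed on demand, so memory stays linear even though time is quadratic.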

Reactive Proximity Data Structures for Graphs

Reactive Proximity Data Structures for Graphs. David Eppstein, Michael T. Goodrich, and Nil Mamano. Department of Computer Science, University of California, Irvine, USA. Abstract. We consider data structures for graphs where we maintain a subset of the nodes called sites, and allow proximity queries, such as asking for the closest site to a query node, and update operations that enable or disable nodes as sites. We refer to a data structure that can efficiently react to such updates as reactive. We present novel reactive proximity data structures for graphs of polynomial expansion, i.e., the class of graphs with small separators, such as planar graphs and road networks. Our data structures can be used directly in several logistical problems and geographic information systems dealing with real-time data, such as emergency dispatching. We experimentally compare our data structure to Dijkstra's algorithm in a system emulating random queries in a real road network. 1 Introduction. Proximity data structures maintain a set of objects of interest, called sites, and support queries concerned with minimizing some distance involving the sites, such as nearest-neighbor or closest-pair queries. They are well known in computational geometry [10], where sites are points in space and distance is measured by Euclidean distance or some other metric (e.g., see [1, 6, 7, 35, 38]). In this chapter, we are interested in proximity data structures that deal with nodes in a graph rather than points in space. We consider that there is an underlying, fixed graph G, such as a road network for a geographic region, and sites are a distinguished subset P of the vertices of G.
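The interface described above (enable or disable a node as a site, then ask for the closest site to a query node) can be sketched with the Dijkstra baseline that the abstract mentions as a comparison point. The Python class below is only that baseline, under the assumption of non-negative edge weights. It is not the separator-based structure of the paper, and the class and method names are hypothetical.

```python
import heapq
import math
from typing import Dict, Hashable, List, Optional, Set, Tuple

Graph = Dict[Hashable, List[Tuple[Hashable, float]]]


class DijkstraSiteIndex:
    """Baseline: updates are O(1), each query runs Dijkstra from the query node.

    Assumes non-negative weights and mutually comparable node labels
    (labels are used to break ties inside the heap).
    """

    def __init__(self, graph: Graph) -> None:
        self.graph = graph
        self.sites: Set[Hashable] = set()

    def enable(self, v: Hashable) -> None:
        self.sites.add(v)

    def disable(self, v: Hashable) -> None:
        self.sites.discard(v)

    def nearest_site(self, q: Hashable) -> Optional[Tuple[Hashable, float]]:
        dist = {q: 0.0}
        heap = [(0.0, q)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > dist.get(u, math.inf):
                continue  # stale heap entry
            if u in self.sites:
                return u, d  # first settled site is a closest one
            for v, w in self.graph.get(u, []):
                nd = d + w
                if nd < dist.get(v, math.inf):
                    dist[v] = nd
                    heapq.heappush(heap, (nd, v))
        return None  # no enabled site is reachable
```

This baseline makes the trade-off visible: updates are trivial, but a query may explore a large part of the graph when sites are sparse, which is the cost a reactive structure aims to reduce.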

Finding the K Shortest Paths

Finding the k Shortest Paths. David Eppstein. March 31, 1997. Abstract. We give algorithms for finding the k shortest paths (not required to be simple) connecting a pair of vertices in a digraph. Our algorithms output an implicit representation of these paths in a digraph with n vertices and m edges, in time O(m + n log n + k). We can also find the k shortest paths from a given source s to each vertex in the graph, in total time O(m + n log n + kn). We describe applications to dynamic programming problems including the knapsack problem, sequence alignment, maximum inscribed polygons, and genealogical relationship discovery. 1 Introduction. We consider a long-studied generalization of the shortest path problem, in which not one but several short paths must be produced. The k shortest paths problem is to list the k paths connecting a given source-destination pair in the digraph with minimum total length. Our techniques also apply to the problem of listing all paths shorter than some given threshold length. In the version of these problems studied here, cycles of repeated vertices are allowed. We first present a basic version of our algorithm, which is simple enough to be suitable for practical implementation while losing only a logarithmic factor in time complexity. We then show how to achieve optimal time (constant time per path once a shortest path tree has been computed) by applying Frederickson's [26] algorithm for finding the minimum k elements in a heap-ordered tree. 1.1 Applications. The applications of shortest path computations are too numerous to cite in detail.
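To illustrate the problem statement above, where paths may repeat vertices, here is a small Python sketch that returns the lengths of the k shortest source-to-destination paths. It is deliberately not the algorithm of the paper and does not achieve its time bounds. It uses a simpler, well-known best-first search that settles each vertex at most k times, and it assumes non-negative edge lengths. The function name and graph format are hypothetical.

```python
import heapq
from typing import Dict, Hashable, List, Tuple

Digraph = Dict[Hashable, List[Tuple[Hashable, float]]]


def k_shortest_path_lengths(graph: Digraph, s: Hashable, t: Hashable, k: int) -> List[float]:
    """Lengths of the k shortest s-t paths, repeated vertices allowed.

    Assumes non-negative edge lengths and mutually comparable vertex labels.
    Each vertex is expanded at most k times: the i-th time a vertex leaves
    the heap, the popped value is the length of its i-th shortest path from s.
    """
    settled: Dict[Hashable, int] = {}
    found: List[float] = []
    heap = [(0, s)]
    while heap and len(found) < k:
        d, u = heapq.heappop(heap)
        count = settled.get(u, 0)
        if count >= k:
            continue  # u already produced its k shortest path lengths
        settled[u] = count + 1
        if u == t:
            found.append(d)
        for v, w in graph.get(u, []):
            heapq.heappush(heap, (d + w, v))
    return found
```

In the small digraph with edges s→a (length 1), a→a (length 1), a→t (length 1) and s→t (length 3), the four shortest path lengths are 2, 3, 3 and 4; the self-loop at a accounts for the repeated-vertex paths.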