Computing Science

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ACM's FY14 Annual Report

ACM’s annual report for FY14. DOI:10.1145/2691599

ACM Council. President: Vinton G. Cerf. Vice President: Alexander L. Wolf. Secretary/Treasurer: Vicki L. Hanson. Past President: Alain Chesnais. SIG Governing Board Chair: Erik Altman. Publications Board Co-Chairs: Jack Davidson, Joseph A. Konstan. Members-at-Large: Eric Allman, Ricardo Baeza-Yates, Cherri Pancake, Radia Perlman, Mary Lou Soffa, Eugene Spafford, Salil Vadhan. SGB Council Representatives: Brent Hailpern

ACM’s FY14 Annual Report. As I write this letter, ACM has just issued a landmark announcement revealing the monetary level for the ACM A.M. Turing Award will be raised this year to $1 million, with all funding provided by Google Inc. The news made the global rounds in record time, bringing worldwide visibility to the award and the Association. Long recognized as the equivalent to the Nobel Prize for computer science, ACM’s Turing Award now carries the financial clout to stand on the same playing field with the most esteemed scientific and cultural prizes honoring game changers whose contributions have transformed the world. This really is an extraordinary time to be part of the world’s largest educational and scientific society in computing. ... computer science and mathematics. I was honored to be a participant in this inaugural event; I found it an inspirational gathering of innovators and understudies who will be the next award recipients. This year also marked the publication of highly anticipated ACM-IEEE-CS collaboration Computer Science Curricula 2013 and the acclaimed Rebooting the Pathway to Success: Preparing Students for Computer Workforce Needs in the United States, an exhaustive report from ACM’s Education Policy Committee that focused on IT -

Software Technical Report

A Technical History of the SEI Larry Druffel January 2017 SPECIAL REPORT CMU/SEI-2016-SR-027 SEI Director’s Office Distribution Statement A: Approved for Public Release; Distribution is Unlimited http://www.sei.cmu.edu Copyright 2016 Carnegie Mellon University This material is based upon work funded and supported by the Department of Defense under Contract No. FA8721-05-C-0003 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the United States Department of Defense. References herein to any specific commercial product, process, or service by trade name, trade mark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by Carnegie Mellon University or its Software Engineering Institute. This report was prepared for the SEI Administrative Agent AFLCMC/PZM 20 Schilling Circle, Bldg 1305, 3rd floor Hanscom AFB, MA 01731-2125 NO WARRANTY. THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN “AS-IS” BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT. [Distribution Statement A] This material has been approved for public release and unlimited distribution. -

Computer Viruses and Malware Advances in Information Security

Computer Viruses and Malware Advances in Information Security Sushil Jajodia Consulting Editor Center for Secure Information Systems George Mason University Fairfax, VA 22030-4444 email: [email protected] The goals of the Springer International Series on ADVANCES IN INFORMATION SECURITY are, one, to establish the state of the art of, and set the course for future research in information security and, two, to serve as a central reference source for advanced and timely topics in information security research and development. The scope of this series includes all aspects of computer and network security and related areas such as fault tolerance and software assurance. ADVANCES IN INFORMATION SECURITY aims to publish thorough and cohesive overviews of specific topics in information security, as well as works that are larger in scope or that contain more detailed background information than can be accommodated in shorter survey articles. The series also serves as a forum for topics that may not have reached a level of maturity to warrant a comprehensive textbook treatment. Researchers, as well as developers, are encouraged to contact Professor Sushil Jajodia with ideas for books under this series. Additional titles in the series: HOP INTEGRITY IN THE INTERNET by Chin-Tser Huang and Mohamed G. Gouda; ISBN-10: 0-387-22426-3 PRIVACY PRESERVING DATA MINING by Jaideep Vaidya, Chris Clifton and Michael Zhu; ISBN-10: 0-387-25886-8 BIOMETRIC USER AUTHENTICATION FOR IT SECURITY: From Fundamentals to Handwriting by Claus Vielhauer; ISBN-10: 0-387-26194-X IMPACTS AND RISK ASSESSMENT OF TECHNOLOGY FOR INTERNET SECURITY: Enabled Information Small-Medium Enterprises (TEISMES) by Charles A. -

An Interview With

An Interview with LANCE HOFFMAN OH 451 Conducted by Rebecca Slayton on 1 July 2014 George Washington University Washington, D.C. Charles Babbage Institute Center for the History of Information Technology University of Minnesota, Minneapolis Copyright, Charles Babbage Institute Lance Hoffman Interview 1 July 2014 Oral History 451 Abstract This interview with security pioneer Lance Hoffman discusses his entrance into the field of computer security and privacy—including earning a B.S. in math at the Carnegie Institute of Technology, interning at SDC, and earning a PhD at Stanford University—before turning to his research on computer security risk management as a Professor at the University of California–Berkeley and George Washington University. He also discusses the relationship between his PhD research on access control models and the political climate of the late 1960s, and entrepreneurial activities ranging from the creation of a computerized dating service to the starting of a company based upon the development of a decision support tool, RiskCalc. Hoffman also discusses his work with the Association for Computing Machinery and IEEE Computer Society, including his role in helping to institutionalize the ACM Conference on Computers, Freedom, and Privacy. The interview concludes with some reflections on the current state of the field of cybersecurity and the work of his graduate students. This interview is part of a project conducted by Rebecca Slayton and funded by an ACM History Committee fellowship on “Measuring Security: ACM and the History of Computer Security Metrics.” Slayton: So to start, please tell us a little bit about where you were born, where you grew up. -

BBST Course 3 -- Test Design

BLACK BOX SOFTWARE TESTING: INTRODUCTION TO TEST DESIGN: A SURVEY OF TEST TECHNIQUES CEM KANER, J.D., PH.D. PROFESSOR OF SOFTWARE ENGINEERING: FLORIDA TECH REBECCA L. FIEDLER, M.B.A., PH.D. PRESIDENT: KANER, FIEDLER & ASSOCIATES This work is licensed under the Creative Commons Attribution License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/2.0/ or send a letter to Creative Commons, 559 Nathan Abbott Way, Stanford, California 94305, USA. These notes are partially based on research that was supported by NSF Grants EIA-0113539 ITR/SY+PE: “Improving the Education of Software Testers” and CCLI-0717613 “Adaptation & Implementation of an Activity-Based Online or Hybrid Course in Software Testing.” Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. BBST Test Design Copyright (c) Kaner & Fiedler (2011)

NOTICE: The practices recommended and discussed in this course are useful for an introduction to testing, but more experienced testers will adopt additional practices. I am writing this course with the mass-market software development industry in mind.

AND THANKS: The BBST lectures evolved out of practitioner-focused courses co-authored by Kaner & Hung Quoc Nguyen and by Kaner & Doug Hoffman (now President of the Association for Software Testing), which then merged with James Bach’s and Michael Bolton’s Rapid Software Testing (RST) courses. The online adaptation of BBST was designed primarily by Rebecca L. Fiedler. Starting in 2000, the course evolved from a practitioner-focused course -

Software Studies: a Lexicon, Edited by Matthew Fuller, 2008

new media/cultural studies. software studies\ a lexicon, edited by matthew fuller. This collection of short expository, critical, and speculative texts offers a field guide to the cultural, political, social, and aesthetic impact of software. Computing and digital media are essential to the way we work and live, and much has been said ... Matthew Fuller is David Gee Reader in Digital Media at the Centre for Cultural Studies, Goldsmiths College, University of London. He is the author of Media Ecologies: Materialist Energies in Art and Technoculture (MIT Press, 2005) and Behind the Blip: Essays on the Culture of -

United States Patent No. US 7,092,914 B1, Shear et al.

US007092914B1 (12) United States Patent, Shear et al. (10) Patent No.: US 7,092,914 B1 (45) Date of Patent: *Aug. 15, 2006 (54) METHODS FOR MATCHING, SELECTING, NARROWCASTING, AND/OR CLASSIFYING BASED ON RIGHTS MANAGEMENT AND/OR OTHER INFORMATION (75) Inventors: Victor H. Shear, Bethesda, MD (US); David M. Van Wie, Sunnyvale, CA (US); Robert P. Weber, Menlo Park, CA (US) (73) Assignee: Intertrust Technologies Corporation, Sunnyvale, CA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. This patent is subject to a terminal disclaimer. (21) Appl. No.: 09/498,369 (22) Filed: Feb. 4, 2000 Related U.S. Application Data (63) Continuation of application No. 08/965,185, filed on Nov. 6, 1997, now Pat. No. 6,112,181. (51) Int.

References cited: 3,806,874 A 4/1974 Ehrat (Continued) FOREIGN PATENT DOCUMENTS AU A-36815/97 2/1998 (Continued) OTHER PUBLICATIONS David Arneke and Donna Cunningham, Document from the Internet: AT&T encryption system protects information services, (News Release), Jan. 9, 1995, 1 page. (Continued) Primary Examiner Thomas A. Dixon (74) Attorney, Agent, or Firm—Finnegan, Henderson, Farabow, Garrett & Dunner, LLP

(57) ABSTRACT Rights management information is used at least in part in a matching, narrowcasting, classifying and/or selecting process. A matching and classification utility system comprising a kind of Commerce Utility System is used to perform the matching, narrowcasting, classifying and/or selecting. The matching and classification utility system may match, narrowcast, classify and/or select people and/or things, non -

Annual Report for Fiscal Year 2015

ACM Annual Report for Fiscal Year 2015. Letter from the President (Alexander L. Wolf, ACM President)

As I think back over the last year, I find it a fantastic time to be a member of the world’s largest professional and student membership society in computing. We continue to build on the services and resources our volunteers and staff provide to the worldwide community, from the foremost collection of computing literature to the forefront of computing education and professional development, from expert advice on public policy and professional ethics to the advancement of a balanced and representative work force. Also as I think back over the last year, I note it was a year of important firsts. ACM presented its first million-dollar A.M. Turing Award, bringing the financial clout of this renowned honor in computing in line with the world’s most prestigious cultural and scientific prizes. Thanks to the generous support of Google, this new monetary level raises the Turing Award’s visibility as the premier recognition of computer scientists and engineers who have made contributions of enduring technical importance to the computing community. ACM hosted its first conference targeted specifically at the computing practitioner community. Indeed, the Applicative conference was so successful we expect its successor, slated for June, to double in attendance. In addition, ACM recently introduced its first acmqueue app—an interactive, socially networked, electronic magazine designed to more easily reach where its audience now lives and works. ACM established its first award to recognize the computing talents of high school seniors.

ACM, the Association for Computing Machinery, is an international scientific and -

Improving the Dependability of Distributed Systems Through AIR Software Upgrades

Carnegie Mellon University, Carnegie Institute of Technology. Thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Title: Improving the Dependability of Distributed Systems through AIR Software Upgrades. Presented by Tudor A. Dumitraș. Accepted by the Department of Electrical & Computer Engineering (Advisor, Major Professor; Department Head). Approved by the College Council (Dean).

Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical & Computer Engineering. Tudor A. Dumitraș: B.S., Computer Science, “Politehnica” University, Bucharest, Romania; Diplôme d’Ingénieur, Ecole Polytechnique, Paris, France; M.S., Electrical & Computer Engineering, Carnegie Mellon University. Carnegie Mellon University, Pittsburgh, PA, December, 2010.

To my parents and my teachers, who showed me the way. To my friends, who gave me a place to stand on. For Aunt Lola.

Abstract. Traditional fault-tolerance mechanisms concentrate almost entirely on responding to, avoiding, or tolerating unexpected faults or security violations. However, scheduled events, such as software upgrades, account for most of the system unavailability and often introduce data corruption or latent errors. Through -

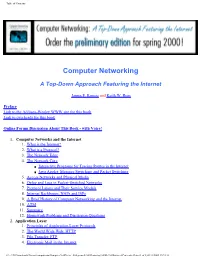

Table of Contents

Computer Networking: A Top-Down Approach Featuring the Internet, James F. Kurose and Keith W. Ross

- Preface
- Link to the Addison-Wesley WWW site for this book
- Link to overheads for this book
- Online Forum Discussion About This Book - with Voice!

1. Computer Networks and the Internet
   1. What is the Internet?
   2. What is a Protocol?
   3. The Network Edge
   4. The Network Core
      - Interactive Programs for Tracing Routes in the Internet
      - Java Applet: Message Switching and Packet Switching
   5. Access Networks and Physical Media
   6. Delay and Loss in Packet-Switched Networks
   7. Protocol Layers and Their Service Models
   8. Internet Backbones, NAPs and ISPs
   9. A Brief History of Computer Networking and the Internet
   10. ATM
   11. Summary
   12. Homework Problems and Discussion Questions
2. Application Layer
   1. Principles of Application-Layer Protocols
   2. The World Wide Web: HTTP
   3. File Transfer: FTP
   4. Electronic Mail in the Internet
   5. The Internet's Directory Service: DNS
      - Interactive Programs for Exploring DNS
   6. Socket Programming with TCP
   7. Socket Programming with UDP
   8. Building a Simple Web Server
   9. Summary
   10. Homework Problems and Discussion Questions
3. Transport Layer
   1. Transport-Layer Services and Principles
   2. Multiplexing and Demultiplexing Applications
   3. Connectionless Transport: UDP
   4. Principles of Reliable Data Transfer
      - Java Applet: Flow Control in Action
   5. Connection-Oriented Transport: TCP
   6. Principles of Congestion Control
   7. TCP Congestion Control
   8. -

Case 1:17-Cr-00130-JTN ECF No. 53 Filed 02/23/18 Pageid.2039 Page 1 of 10

Case 1:17-cr-00130-JTN ECF No. 53 filed 02/23/18 PageID.2039 Page 1 of 10

UNITED STATES DISTRICT COURT, WESTERN DISTRICT OF MICHIGAN, SOUTHERN DIVISION. UNITED STATES OF AMERICA, Plaintiff, v. DANIEL GISSANTANER, Defendant. Case no. 1:17-cr-130. Hon. Janet T. Neff, United States District Judge. Hon. Ray Kent, United States Magistrate Judge.

DEFENDANT’S REPLY TO THE GOVERNMENT’S RESPONSE TO MOTION TO EXCLUDE DNA EVIDENCE

NOW COMES, the defendant, Daniel Gissantaner, by and through his attorney, Joanna C. Kloet, Assistant Federal Public Defender, and hereby requests to file this Reply to the Government’s Response to the Defendant’s Motion to Exclude DNA Evidence, as authorized by Federal Rule of Criminal Procedure 12 and Local Criminal Rules 47.1 and 47.2. This case was filed in Federal Court after the defendant was acquitted of the charge of felon in possession of a firearm after a July 8, 2016, hearing before the Michigan Department of Corrections, where the only apparent difference between the evidence available to the respective authorities is the DNA likelihood ratio (“LR”) now proffered by the Federal Government. Accordingly, the defense submitted the underlying Motion to Exclude DNA Evidence as a dispositive pre-trial motion. Local Criminal Rule 47.1 allows the Court to permit or require further briefing on dispositive motions following a response. Alternatively, if the Court determines the underlying Motion is non-dispositive, the defense requests leave to file this Reply under Local Criminal Rule 47.2. -

Software on the Witness Stand: What Should It Take for Us to Trust It?

Software on the Witness Stand: What Should It Take for Us to Trust It? Sergey Bratus (1), Ashlyn Lembree (2), Anna Shubina (1). (1) Institute for Security, Technology, and Society, Dartmouth College, Hanover, NH. (2) Franklin Pierce Law Center, Concord, NH.

1 Motivation. We discuss the growing trend of electronic evidence, created automatically by autonomously running software, being used in both civil and criminal court cases. We discuss trustworthiness requirements that we believe should be applied to such software and platforms it runs on. We show that courts tend to regard computer-generated materials as inherently trustworthy evidence, ignoring many software and platform trustworthiness problems well known to computer security researchers. We outline the technical challenges in making evidence-generating software trustworthy and the role Trusted Computing can play in addressing them. This paper is structured as follows: Part I is a case study of electronic evidence in a “file sharing” copyright infringement case, potential trustworthiness issues involved, and ways we believe they should be addressed with state-of-the-art computing practices. Part II is a legal analysis of issues and practices surrounding the use of software-generated evidence by courts.

Part I: The Case Study and Technical Challenges. 2 Introduction. Recently the first author was asked to serve as an expert witness in a civil lawsuit, in which the plaintiffs alleged violation of their copyrights by the defendant by way of a peer-to-peer network. Mavis Roy, of Hudson, New Hampshire, had been charged by four record labels with downloading and distributing hundreds of songs from the Internet. The principal kind of evidence that the plaintiffs provided to the defendant's counsel (the second author), and which, judging by their expert witness' report, they planned to use in court to prove their version of events that implied the defendant's guilt, was a long print-out of a computer program.