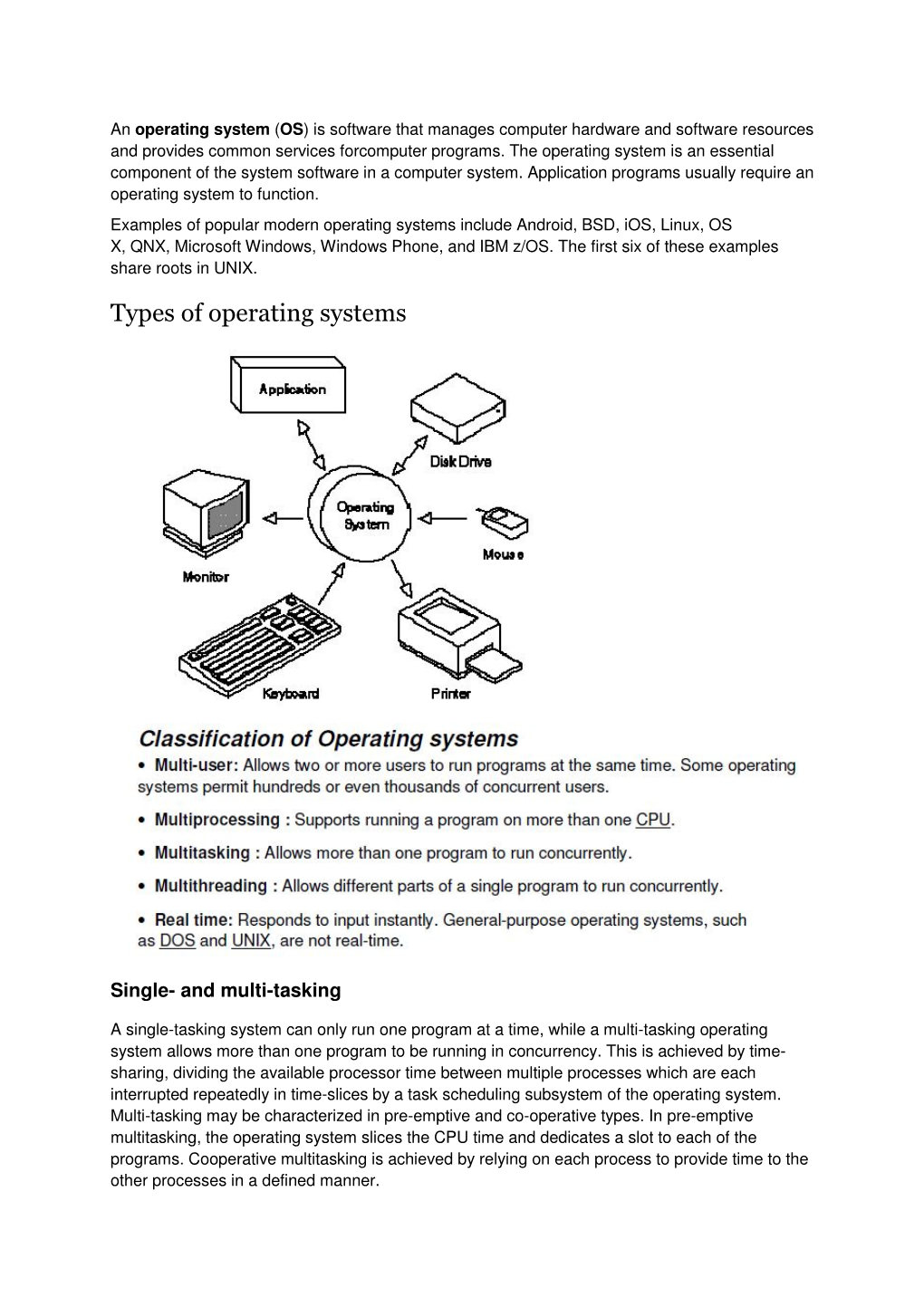

Types of Operating Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol syncronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

Multiboot Guide Booting Fedora and Other Operating Systems

Fedora 23 Multiboot Guide Booting Fedora and other operating systems. Fedora Documentation Project Copyright © 2013 Fedora Project Contributors. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https:// fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners. -

Formation Debian GNU/Linux

Formation Debian GNU/Linux Alexis de Lattre [email protected] Formation Debian GNU/Linux par Alexis de Lattre Copyright © 2002, 2003 par Alexis de Lattre Ce document est disponible aux formats : • HTML en ligne (http://people.via.ecp.fr/~alexis/formation-linux/) ou HTML zippé (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-html.zip) (3,6 Mo), • PDF zippé (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-pdf.zip) (4 Mo), • RTF zippé (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-rtf.zip) (3,5 Mo), • Texte zippé (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-txt.zip) (215 Ko). La version la plus récente de ce document se trouve à l’adresse http://people.via.ecp.fr/~alexis/formation-linux/. Vous avez le droit de copier, distribuer et/ou modifier ce document selon les termes de la GNU Free Documentation License, version 1.2 ou n’importe quelle version ultérieure, telle que publiée par la Free Software Foundation. Le texte de la licence se trouve dans l’annexe GNU Free Documentation License. Table des matières A propos de ce document ....................................................................................................................................................... i I. Installation de Debian GNU/Linux.................................................................................................................................... i 1. Linux, GNU, logiciels libres,... c’est quoi ?................................................................................................................1 -

Copyrighted Material

Index alias command, 57–58 SYMBOLs alsamixer, 109–110 #! syntax, shells and, 64 AMD-V virtualization support ... (ellipses), omitted information in (svm), 298–299 code, 10 apropos command, 12–13 [ ] (brackets), testing shell scripts APT (Advanced Package Tool). See and, 65 also software, managing with APT ~. (tilde and period), exiting ssh vs. aptitude, 25 sessions and, 262 basics of, 21 > (greater-than sign) commands for security, 295 appending files and, 55 apt-get command to direct output to files, 55 update command, 35, 37 . (dots), in PATH environment clean option, 29–30 variables, 65 installing packages and, 28–29 ! (exclamation points), negating KVMs and, 301 search criterion with, 85 remove option, 29 / (forward slash), IRC commands and, aptitude. See also software, managing 253 with aptitude ; (semicolons), vi and, 315 vs. APT, 25 basics of, 21 A Aptitude Survival Guide, 35 -a option, (debsums), 42 Aptitude User’s Manual, 35 adding apt-key command, 70 content to scripts, 65–68 archives. See also backups to files to archives, 160 compressed archives groups, 286 backing up with SSH, 161–162 passwords, 281 ARP (Address Resolution Protocol), software, virtualization hosts and, 301 checking, 229–230 software collections, 24–25 ASCII art, viewing movies and, 123 text, 91, 93 ASCII text, reading, 99 user accounts,COPYRIGHTED 280–281 at command, MATERIAL 186 Address Resolution Protocol (ARP), Atheros, 225 checking, 229–230 audio, 107–117 administration of remote systems. See adjusting levels, 109–110 remote systems administration encoding, 111–114 Advanced Linux Sound Architecture files, converting, 116–117 (ALSA), 109 playing music, 107–109 Advanced Package Tool. -

Security by Virtualization

Security by virtualization A novel antivirus for personal computers Balduzzi Marco, 2 nd of March 2007 Master Thesis in Computer Engineering, University of Bergamo 1 This page has been left intentionally blank. 2 Content 1. Introduction .............................................................................................................. 5 1.1. Motivations....................................................................................................... 6 2. Security by virtualization........................................................................................ 10 2.1. Introduction to computer virtualization.......................................................... 10 2.2. Virtualization approach to security ................................................................ 16 3. Virus protection ...................................................................................................... 20 4. Image scan .............................................................................................................. 25 4.1. File repair........................................................................................................ 32 5. On-access scan........................................................................................................ 39 5.1. File organization............................................................................................. 48 5.2. Cache design................................................................................................... 54 5.3. Antivirus -

NTFS - Wikipedia, the Free Encyclopedia File:///Data/Doccd/Specs/Filesystems/NTFS.Html

NTFS - Wikipedia, the free encyclopedia file:///data/doccd/specs/filesystems/NTFS.html NTFS From Wikipedia, the free encyclopedia NTFS Developer Microsoft Full name New Technology File System Introduced July 1993 (Windows NT 3.1) Partition 0x07 (MBR) identifier EBD0A0A2-B9E5-4433-87C0-68B6B72699C7 (GPT) Structures Directory B+ tree contents File Bitmap/Extents allocation Bad blocks Bitmap/Extents Limits Max file size 16 TiB with current implementation (16 EiB architecturally) Max number 4,294,967,295 (232-1) of files Max 255 characters filename size Max volume 256 TiB with current implementation (16 size EiB architecturally) Allowed Unicode (UTF-16), any character except "/" characters in filenames Features Dates Creation, modification, POSIX change, recorded access Date range January 1, 1601 - May 28, 60056 Forks Yes Attributes Read-only, hidden, system, archive File system ACLs permissions Transparent Per-file, LZ77 (Windows NT 3.51 onward) compression Transparent Per-file, encryption DESX (Windows 2000 onward), Triple DES (Windows XP onward), AES (Windows XP Service Pack 1, Windows 2003 onward) Supported Windows NT family (Windows NT 3.1 to operating Windows NT 4.0, Windows 2000, Windows systems XP, Windows Server 2003, Windows Vista) NTFS or New Technology File System is the standard file system of Windows NT and its descendants: Windows 2000, Windows XP, Windows Server 2003 and Windows Vista. NTFS replaced Microsoft's previous FAT file system, used in MS-DOS and early versions of Windows. NTFS has several improvements over FAT such as improved support for metadata and the use of advanced data structures to improve performance, reliability and disk space utilization plus additional extensions such as security access control lists and file system journaling. -

Linux Professional Institute Tutorials LPI Exam 101 Prep: Hardware and Architecture Junior Level Administration (LPIC-1) Topic 101

Linux Professional Institute Tutorials LPI exam 101 prep: Hardware and architecture Junior Level Administration (LPIC-1) topic 101 Skill Level: Introductory Ian Shields ([email protected]) Senior Programmer IBM 08 Aug 2005 In this tutorial, Ian Shields begins preparing you to take the Linux Professional Institute® Junior Level Administration (LPIC-1) Exam 101. In this first of five tutorials, Ian introduces you to configuring your system hardware with Linux™. By the end of this tutorial, you will know how Linux configures the hardware found on a modern PC and where to look if you have problems. Section 1. Before you start Learn what these tutorials can teach you and how you can get the most from them. About this series The Linux Professional Institute (LPI) certifies Linux system administrators at two levels: junior level (also called "certification level 1") and intermediate level (also called "certification level 2"). To attain certification level 1, you must pass exams 101 and 102; to attain certification level 2, you must pass exams 201 and 202. developerWorks offers tutorials to help you prepare for each of the four exams. Each exam covers several topics, and each topic has a corresponding self-study tutorial on developerWorks. For LPI exam 101, the five topics and corresponding developerWorks tutorials are: Hardware and architecture © Copyright IBM Corporation 1994, 2008. All rights reserved. Page 1 of 43 developerWorks® ibm.com/developerWorks Table 1. LPI exam 101: Tutorials and topics LPI exam 101 topic developerWorks tutorial Tutorial summary Topic 101 LPI exam 101 prep (topic (This tutorial). Learn to 101): configure your system Hardware and architecture hardware with Linux. -

Solaris X86 FAQ

Solaris x86 FAQ Dan E. Anderson Solaris x86 FAQ Table of Contents r r r r Solaris x86 FAQr r...................................................................................................................................1 r r r Detailed Contentsr r..........................................................................................................................2 r r r r Solaris x86 FAQr r...............................................................................................................................116 r r r Detailed Contentsr r......................................................................................................................117 i r r r r r Solaris x86 FAQr r r r r r r r ENGLISH r r r / r r r r [IPv6-only r r r r r r r / r Japaneser mirrorr RUSSIANr r r (below)rr [Translation]r [Another FAQ]r of this FAQ]r r r r r r r Search Solaris x86 FAQ:r r r r r r r r r r r r r r r r r r r r 9. Interoperability With Other r r r r r Operating Systemsr r r r r r r r r r r r 2. Introductionr r r r r r r r r Complete FAQ:r r r r r r r 3. Resourcesr r r r r r r r r r r 4. Pre-installationr r r r r r ♦ HTML Formatr r r r r r 5. Installationr r r r r ♦ Plain Text Formatr r ♦ Gzip-compressed Text r r r r r 6. Post-installation (Customization)r Formatr ♦ Translation:r r r r r r r r r r 7. Troubleshootingr r r r r r RUSSKAYA / RUSSIAN r r r r Versionr by Dubna r r r r r 8. X Windowsr International Universityr (Version 2.4, 10/1999)r r r r r r r r r r r r Solaris x86 FAQr r 1 Solaris x86 FAQ r r r r r r r Detailed Contentsr r r r r r Read the FAQ as an ebook.r r Upload Palm DOC, Plucker, Text,r r PDF & HTML formats.r r See "Ebook formats" for links.r r r r r r r r r NEXT -> r r r r r r r r r r r r r r r r r r r r r r r r r This web page is not associated with Sun Microsystems.r Copyright © 1997-2010 Dan Anderson. -

NAME OVERVIEW Examples

NTFSPROGS(8) NTFSPROGS(8) NAME libntfs-gnomevfs − Module for GNOME VFS that allows access to NTFS filesystems. OVERVIEW The GNOME virtual filesystem (VFS) provides universal access to different filesystems. The libntfs- gnomevfs module enables GNOME VFS aware clients to seamlessly utilize the NTFS library libntfs. So you can access an NTFS filesystem without needing to use the NTFS utilities themselves (at least in theory anyway). In practice this is probably more useful for programs and programmers to make using libntfs easier, more generic, and to allow easier debugging of libntfs. Examples Prerequisites To be able to follow these examples you will need to have installed the test utilities from the gnome- vfs-2.4.x package. The easiest way to do this is to download and compile the gnome-vfs-2 package, e.g. download from: http://ftp.gnome.org/pub/GNOME/desktop/2.4/2.4.0/sources/gnome-vfs-2.4.0.tar.gz Then run ./configure followed by make and make install (as root). This will install it into /usr/local so it should not conflict with your existing installation from rpm or deb packages which will be in /usr. Note you may also need to add /usr/local/lib to /etc/ld.so.conf and then run ldconfig (as root) to let your system see the installed gnome-vfs-2.4.x libraries. Then run ./configure followed by make and make install (as root) in the main ntfsprogs directory to build and install the libntfs-gnomevfs module and libntfs library which is used by the module. -

Guía De (Auto) Aprendizaje En Software Libre. Currículo Para La Formación Profesional

Guía de (auto) aprendizaje en Software Libre. Currículo para la formación profesional Diego Saravia1 Primera versión: 1 abril del 20002 Índice 1. Introducción 3 1.1. Filosofía ............................................ 4 1.2. Independencia ......................................... 4 1.3. Valores, prácticas y principios del currículo ......................... 4 2. Organización de módulos 5 3. Contenido de los Módulos 6 3.1. Software Libre, sus comunidades, filosofía y prácticas .................... 6 3.2. Ingreso y exploración del sistema ............................... 7 3.3. Ayuda y cooperación ..................................... 7 3.4. Archivos, directorios y programas .............................. 7 3.5. Procesadores de texto, Planillas de Cálculo, Presentaciones ................. 7 3.6. Dibujando ........................................... 7 3.7. Encriptación .......................................... 8 3.8. Editando y mirando páginas web ............................... 8 3.9. Programas varios ....................................... 8 3.10. Instalación con HGA ..................................... 8 3.11. Instalación de software .................................... 8 3.12. Administración elemental ................................... 8 3.13. Periféricos ........................................... 8 3.14. Conectarse a Internet ..................................... 8 3.15. Usar Internet ......................................... 9 3.16. Migración ........................................... 9 3.17. Internet y consola ...................................... -

O'reilly Knoppix Hacks (2Nd Edition).Pdf

SECOND EDITION KNOPPIX HACKSTM Kyle Rankin Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Knoppix Hacks,™ Second Edition by Kyle Rankin Copyright © 2008 O’Reilly Media, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (safari.oreilly.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Brian Jepson Cover Designer: Karen Montgomery Production Editor: Adam Witwer Interior Designer: David Futato Production Services: Octal Publishing, Inc. Illustrators: Robert Romano and Jessamyn Read Printing History: October 2004: First Edition. November 2007: Second Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. The Hacks series designations, Knoppix Hacks, the image of a pocket knife, “Hacks 100 Industrial-Strength Tips and Tools,” and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. Small print: The technologies discussed in this publication, the limitations on these technologies that technology and content owners seek to impose, and the laws actually limiting the use of these technologies are constantly changing. -

Debian Linux on an Averatec 3200 Series Laptop

Debian Linux on an Averatec 3200 Series laptop http://www.ccs.neu.edu/home/gene/averatec-linux.html Debian Linux on an Averatec 3200 Series laptop (version 1.16) Gene Cooperman I am using an Averatec 3220 notebook (3200 series). I also installed Debian Sarge on a 3270. Since then, I upgraded to Etch. First, I describe the current issues, followed by a listing of what I did to configure each component of the laptop. As of summer, 2007, the Averatec battery could not be charged. (The battery light would blink very intermittently.) This site gives instructions for disassembling and re-soldering. This other site gives information for the Averatec 3200 . WARNING: As of my current Linux 2.6.17 kernel, this problem has gone away. I will keep this message here a little longer for those with older kernels. After I had upgraded to the 2.6.12 kernel, the Synaptics touchpad now occasionally freezes. (Don't know if this happens with later kernels.) Tracing comments on the web, I found I had to add append="i8042.nomux" to a stanza in lilo.conf, due to a bug in the i8042 device. If using grub instead of lilo, then add i8042.nomux to the end of the kernel line. WARNING: The vendor of the Smartlink modem appears no longer to support a Linux driver for Linux kernel 2.6.12 or later. See the modem section below for details of how to use either the open source ALSA mode modem (built into some kernels) with the kernel module, snd_via82xx_modem.