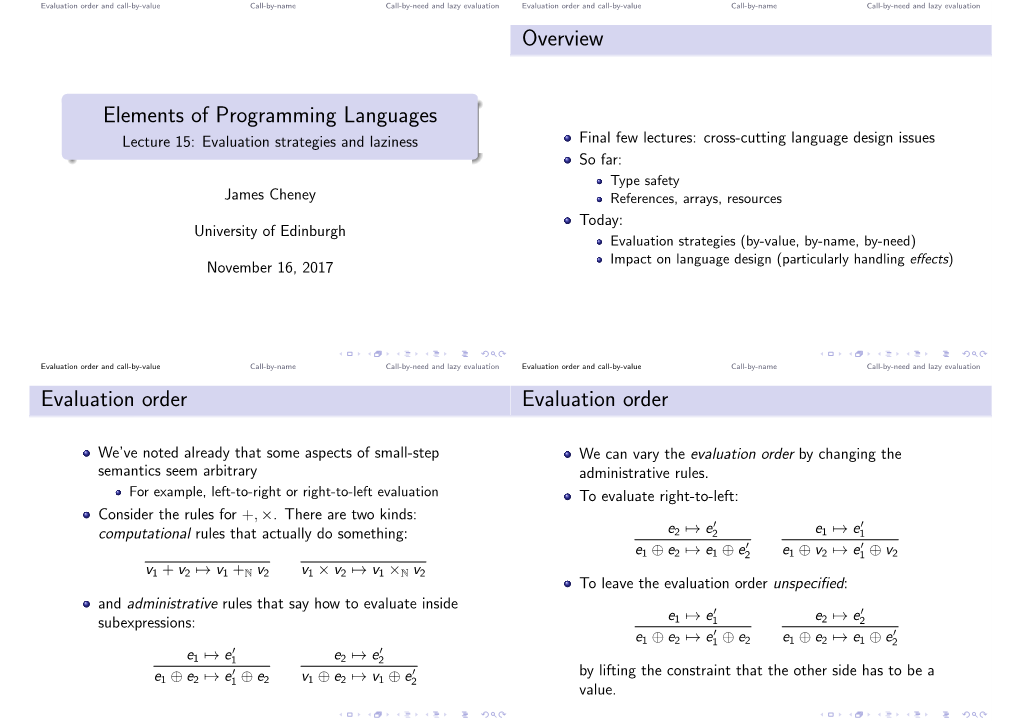

Elements of Programming Languages Overview Evaluation Order

Recommended publications

A Scalable Provenance Evaluation Strategy

Debugging Large-scale Datalog: A Scalable Provenance Evaluation Strategy. DAVID ZHAO, University of Sydney; PAVLE SUBOTIĆ, Amazon; BERNHARD SCHOLZ, University of Sydney. Logic programming languages such as Datalog have become popular as Domain Specific Languages (DSLs) for solving large-scale, real-world problems, in particular, static program analysis and network analysis. The logic specifications that model analysis problems process millions of tuples of data and contain hundreds of highly recursive rules. As a result, they are notoriously difficult to debug. While the database community has proposed several data provenance techniques that address the Declarative Debugging Challenge for Databases, in the cases of analysis problems, these state-of-the-art techniques do not scale. In this article, we introduce a novel bottom-up Datalog evaluation strategy for debugging: Our provenance evaluation strategy relies on a new provenance lattice that includes proof annotations and a new fixed-point semantics for semi-naïve evaluation. A debugging query mechanism allows arbitrary provenance queries, constructing partial proof trees of tuples with minimal height. We integrate our technique into Soufflé, a Datalog engine that synthesizes C++ code, and achieve high performance by using specialized parallel data structures. Experiments are conducted with Doop/DaCapo, producing proof annotations for tens of millions of output tuples. We show that our method has a runtime overhead of 1.31× on average while being more flexible than existing state-of-the-art techniques. CCS Concepts: • Software and its engineering → Constraint and logic languages; Software testing and debugging. Additional Key Words and Phrases: Static analysis, datalog, provenance. ACM Reference format: David Zhao, Pavle Subotić, and Bernhard Scholz.

Implementing a Vau-Based Language with Multiple Evaluation Strategies

Implementing a Vau-based Language With Multiple Evaluation Strategies Logan Kearsley Brigham Young University Introduction Vau expressions can be simply defined as functions which do not evaluate their argument expressions when called, but instead provide access to a reification of the calling environment to explicitly evaluate arguments later, and are a form of statically-scoped fexpr (Shutt, 2010). Control over when and if arguments are evaluated allows vau expressions to be used to simulate any evaluation strategy. Additionally, the ability to choose not to evaluate certain arguments to inspect the syntactic structure of unevaluated arguments allows vau expressions to simulate macros at run-time and to replace many syntactic constructs that otherwise have to be built-in to a language implementation. In principle, this allows for significant simplification of the interpreter for vau expressions; compared to McCarthy's original LISP definition (McCarthy, 1960), vau's eval function removes all references to built-in functions or syntactic forms, and alters function calls to skip argument evaluation and add an implicit env parameter (in real implementations, the name of the environment parameter is customizable in a function definition, rather than being a keyword built-in to the language). 
McCarthy's Eval Function (transformed from the original M-expressions into more familiar s-expressions): (define (eval e a) (cond ((atom e) (assoc e a)) ((atom (car e)) (cond ((eq (car e) 'quote) (cadr e)) ((eq (car e) 'atom) (atom (eval (cadr e) a))) ((eq (car e) 'eq) (eq (eval (cadr e) a) (eval (caddr e) a))) ((eq (car e) 'car) (car (eval (cadr e) a))) ((eq (car e) 'cdr) (cdr (eval (cadr e) a))) ((eq (car e) 'cons) (cons (eval (cadr e) a) (eval (caddr e) a))) ((eq (car e) 'cond) (evcon.
Vau Eval Function: (define (eval e a) (cond ((atom e) (assoc e a)) ((atom (car e)) (eval (cons (assoc (car e) a) (cdr e)) a)) ((eq (caar e) 'vau) (eval (caddar e) (cons (cons (cadar e) 'env) (append (pair (cadar e) (cdr e)) a))))))

Comparative Studies of Programming Languages; Course Lecture Notes

Comparative Studies of Programming Languages, COMP6411 Lecture Notes, Revision 1.9. Joey Paquet, Serguei A. Mokhov (Eds.). August 5, 2010. arXiv:1007.2123v6 [cs.PL] 4 Aug 2010. Preface: Lecture notes for the Comparative Studies of Programming Languages course, COMP6411, taught at the Department of Computer Science and Software Engineering, Faculty of Engineering and Computer Science, Concordia University, Montreal, QC, Canada. These notes include a compiled book of primarily related articles from Wikipedia, the Free Encyclopedia [24], as well as the Comparative Programming Languages book [7] and other resources, including our own. The original notes were compiled by Dr. Paquet [14]. Contents. 1 Brief History and Genealogy of Programming Languages: 1.1 Introduction; 1.1.1 Subreferences; 1.2 History; 1.2.1 Pre-computer era; 1.2.2 Subreferences; 1.2.3 Early computer era; 1.2.4 Subreferences; 1.2.5 Modern/Structured programming languages; 1.3 References. 2 Programming Paradigms: 2.1 Introduction; 2.2 History; 2.2.1 Low-level: binary, assembly; 2.2.2 Procedural programming; 2.2.3 Object-oriented programming; 2.2.4 Declarative programming. 3 Program Evaluation: 3.1 Program analysis and translation phases; 3.1.1 Front end; 3.1.2 Back end; 3.2 Compilation vs. interpretation; 3.2.1 Compilation; 3.2.2 Interpretation; 3.2.3 Subreferences; 3.3 Type System; 3.3.1 Type checking; 3.4 Memory management.

Computer Performance Evaluation Users Group (CPEUG)

COMPUTER SCIENCE & TECHNOLOGY: COMPUTER PERFORMANCE EVALUATION USERS GROUP (CPEUG), 13th Meeting. NBS Special Publication 500-18. U.S. DEPARTMENT OF COMMERCE, National Bureau of Standards. NATIONAL BUREAU OF STANDARDS: The National Bureau of Standards was established by an act of Congress March 3, 1901. The Bureau's overall goal is to strengthen and advance the Nation's science and technology and facilitate their effective application for public benefit. To this end, the Bureau conducts research and provides: (1) a basis for the Nation's physical measurement system, (2) scientific and technological services for industry and government, (3) a technical basis for equity in trade, and (4) technical services to promote public safety. The Bureau consists of the Institute for Basic Standards, the Institute for Materials Research, the Institute for Applied Technology, the Institute for Computer Sciences and Technology, the Office for Information Programs, and the Office of Experimental Technology Incentives Program. THE INSTITUTE FOR BASIC STANDARDS provides the central basis within the United States of a complete and consistent system of physical measurement; coordinates that system with measurement systems of other nations; and furnishes essential services leading to accurate and uniform physical measurements throughout the Nation's scientific community, industry, and commerce. The Institute consists of the Office of Measurement Services, and the following

Needed Reduction and Spine Strategies for the Lambda Calculus

INFORMATION AND COMPUTATION 75, 191-231 (1987). Needed Reduction and Spine Strategies for the Lambda Calculus. H. P. BARENDREGT*, University of Nijmegen, Nijmegen, The Netherlands; J. R. KENNAWAY~, University of East Anglia, Norwich, United Kingdom; J. W. KLOP~, Centre for Mathematics and Computer Science, Amsterdam, The Netherlands; AND M. R. SLEEP+, University of East Anglia, Norwich, United Kingdom. A redex R in a lambda-term M is called needed if in every reduction of M to normal form (some residual of) R is contracted. Among others the following results are proved: 1. R is needed in M iff R is contracted in the leftmost reduction path of M. 2. Let $\mathcal{R}: M_0 \to M_1 \to M_2 \to \cdots$ reduce redexes $R_i: M_i \to M_{i+1}$, and have the property that $\forall i.\,\exists j \ge i.\, R_j$ is needed in $M_j$. Then $\mathcal{R}$ is normalising, i.e., if $M_0$ has a normal form, then $\mathcal{R}$ is finite and terminates at that normal form. 3. Neededness is an undecidable property, but has several efficiently decidable approximations, various versions of the so-called spine redexes. © 1987 Academic Press, Inc. 1. INTRODUCTION A number of practical programming languages are based on some sugared form of the lambda calculus. Early examples are LISP, McCarthy et al. (1961) and ISWIM, Landin (1966). More recent exemplars include ML (Gordon et al., 1981), Miranda (Turner, 1985), and HOPE (Burstall * Partially supported by the Dutch Parallel Reduction Machine project. t Partially supported by the British ALVEY project.

Typology of Programming Languages: Evaluation Strategy

Typology of programming languages: Evaluation strategy. Argument passing: From a naive point of view (and for strict evaluation), three possible modes: in, out, in-out. But there are different flavors. Val ValConst RefConst Res Ref ValRes Name ALGOL 60 * * Fortran ? ? PL/1 ? ? ALGOL 68 * * Pascal * * C*?? Modula 2 * ? Ada (simple types) * * * Ada (others) ? ? ? ? ? Alphard * * * Table of Contents: 1 Call by Value 2 Call by Reference 3 Call by Value-Result 4 Call by Name 5 Call by Need 6 Summary 7 Notes on Call-by-sharing. Call by Value – definition: Passing arguments to a function copies the actual value of an argument into the formal parameter of the function. In this case, changes made to the parameter inside the function have no effect on the argument. def foo(val): val = 1 i = 12 foo(i) print (i) Call by value in Python – output: 12. Pros & Cons: Safer: variables cannot be accidentally modified. Copy: variables are copied into formal parameter even for huge data. Evaluation before call: resolution of formal parameters must be done before a call. Left-to-right: Java, Common Lisp, Eiffel, C#, Forth. Right-to-left: Caml, Pascal. Unspecified: C, C++, Delphi, , Ruby. Call by Reference – definition: Passing arguments to a function copies the actual address of an argument into the formal parameter.
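As a rough illustration of the excerpt above, the following Python sketch contrasts two cases. The function names `rebind` and `mutate` are hypothetical and do not come from the slides. Rebinding a parameter inside a function leaves the caller's variable untouched, which is what the slide's `foo` example shows; mutating an object that the parameter refers to is visible to the caller, which is the behaviour usually discussed under call-by-sharing (item 7 of the slides' table of contents).

```python
def rebind(val):
    # Rebinding the local name only changes what `val` refers to
    # inside this function; the caller's variable is unaffected.
    val = 1
    return val


def mutate(items):
    # Mutating the object the parameter refers to is visible to the
    # caller, because both names refer to the same list.
    items.append(1)


i = 12
rebind(i)
print(i)  # prints 12, matching the slide's example

data = [12]
mutate(data)
print(data)  # prints [12, 1]
```

The first half reproduces the slide's output of 12; the second half is an added contrast, not something the excerpt itself claims.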

Hospital Preparedness Program (HPP) Cooperative Agreement

Hospital Preparedness Program (HPP) Cooperative Agreement, FY 12 Budget Period. Hospital Preparedness Program (HPP) Performance Measure Manual: Guidance for Using the New HPP Performance Measures. July 1, 2012 to June 30, 2013. Version: 1.0. U.S. DEPARTMENT OF HEALTH AND HUMAN SERVICES, ASSISTANT SECRETARY FOR PREPAREDNESS AND RESPONSE. The Hospital Preparedness Program (HPP) Performance Measure Manual, Guidance for Using the New HPP Performance Measures (hereafter referred to as Performance Measure Manual) is a highly iterative document. Subsequent versions will be subject to ongoing updates and changes as reflected in HPP policies and direction. Contents. Preface: How to Use This Manual; Document Organization; Measures Structure: HPP-PHEP Performance Measures; Definitions: HPP Performance Measures; Data Element Responses: HPP Performance Measures

Design Patterns for Functional Strategic Programming

Design Patterns for Functional Strategic Programming. Ralf Lämmel, Vrije Universiteit, De Boelelaan 1081a, NL-1081 HV Amsterdam, The Netherlands; Joost Visser, CWI, P.O. Box 94079, 1090 GB Amsterdam, The Netherlands. Abstract: We believe that design patterns can be an effective means of consolidating and communicating program construction expertise for functional programming, just as they have proven to be in object-oriented programming. The emergence of combinator libraries that develop a specific domain or programming idiom has intensified, rather than reduced, the need for design patterns. In previous work, we introduced the fundamentals and a supporting combinator library for functional strategic programming. This is an idiom for (general purpose) generic programming based on the notion of a functional strategy: a first-class generic function that can not only be applied to terms of any type, but which also allows generic traversal into subterms and can be customised with type-specific behaviour. 1 Introduction: The notion of a design pattern is well-established in object-oriented programming. A pattern systematically names, motivates, and explains a common design structure that addresses a certain group of recurring program construction problems. Essential ingredients in a pattern are its applicability conditions, the trade-offs of applying it, and sample code that demonstrates its application. We contend that design patterns can be an effective means of consolidating and communicating program construction expertise for functional programming just as they have proven to be in object-oriented programming. One might suppose that the powerful abstraction mechanisms of functional programming obviate the need for design patterns, since many pieces of program construction

A Guide to Evaluation in Health Research

A Guide to Evaluation in Health Research. Prepared by: Sarah Bowen, PhD, Associate Professor, Department of Public Health Sciences, School of Public Health, University of Alberta. INTRODUCTION. Purpose and Objectives of Module; Scope and Limitations of the Module; How this Module is Organized; Case Studies Used in the Module. SECTION 1: EVALUATION: A BRIEF OVERVIEW. Similarities and Differences between Research and Evaluation; Why is it Important that Researchers Understand How to Conduct an Evaluation?; Defining Evaluation; Common Misconceptions about Evaluation; Evaluation Approaches. SECTION 2: GETTING STARTED. Step 1: Consider the Purpose(s) of the Evaluation

Swapping Evaluation: a Memory-Scalable Solution for Answer-On-Demand Tabling

Under consideration for publication in Theory and Practice of Logic Programming. Swapping Evaluation: A Memory-Scalable Solution for Answer-On-Demand Tabling. Pablo Chico de Guzmán (U. Politécnica de Madrid), Manuel Carro (U. Politécnica de Madrid, mcarro@fi.upm.es), David S. Warren (State University of New York at Stony Brook). Abstract: One of the differences among the various approaches to suspension-based tabled evaluation is the scheduling strategy. The two most popular strategies are local and batched evaluation. The former collects all the solutions to a tabled predicate before making any one of them available outside the tabled computation. The latter returns answers one by one before computing them all, which in principle is better if only one answer (or a subset of the answers) is desired. Batched evaluation is closer to SLD evaluation in that it computes solutions lazily as they are demanded, but it may need arbitrarily more memory than local evaluation, which is able to reclaim memory sooner. Some programs which in practice can be executed under the local strategy quickly run out of memory under batched evaluation. This has led to the general adoption of local evaluation at the expense of the more depth-first batched strategy. In this paper we study the reasons for the high memory consumption of batched evaluation and propose a new scheduling strategy which we have termed swapping evaluation. Swapping evaluation also returns answers one by one before completing a tabled call, but its memory usage can be orders of magnitude less than batched evaluation.

Refining Expression Evaluation Order for Idiomatic C++ (Revision 2)

P0145R1, 2016-02-12. Refining Expression Evaluation Order for Idiomatic C++ (Revision 2). Gabriel Dos Reis, Herb Sutter, Jonathan Caves. Abstract: This paper proposes an order of evaluation of operands in expressions, directly supporting decades-old established and recommended C++ idioms. The result is the removal of embarrassing traps for novices and experts alike, increased confidence and safety of popular programming practices and facilities, hallmarks of modern C++. 1. INTRODUCTION Order of expression evaluation is a recurring discussion topic in the C++ community. In a nutshell, given an expression such as f(a, b, c), the order in which the sub-expressions f, a, b, c (which are of arbitrary shapes) are evaluated is left unspecified by the standard. If any two of these sub-expressions happen to modify the same object without intervening sequence points, the behavior of the program is undefined. For instance, the expression f(i++, i) where i is an integer variable leads to undefined behavior, as does v[i] = i++. Even when the behavior is not undefined, the result of evaluating an expression can still be anybody’s guess. Consider the following program fragment: #include <map> int main() { std::map<int, int> m; m[0] = m.size(); // #1 } What should the map object m look like after evaluation of the statement marked #1? {{0, 0}} or {{0, 1}}? 1.1. CHANGES FROM PREVIOUS VERSIONS a. The original version of this proposal (Dos Reis, et al., 2014) received unanimous support from the Evolution Working Group (EWG) at the Fall 2014 meeting in Urbana, IL, as approved direction, and also strong support for inclusion in C++17.
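The excerpt above concerns C++, where the order was left unspecified at the time of the proposal. For contrast only, here is a small Python sketch that makes evaluation order observable by logging side effects; the helper `trace` and the function `f` are hypothetical names chosen for illustration. Python's language reference specifies left-to-right evaluation of call arguments, and evaluates the right-hand side of an assignment before the subscript target, so the Python analogue of the statement marked #1 stores 0. This says nothing about what a particular C++ compiler does with the original fragment.

```python
log = []


def trace(name, value):
    # Record the moment this operand is evaluated, then pass it through.
    log.append(name)
    return value


def f(a, b, c):
    return (a, b, c)


# Call arguments are evaluated left to right in Python.
result = f(trace("a", 1), trace("b", 2), trace("c", 3))
print(log)  # prints ['a', 'b', 'c']

# Analogue of `m[0] = m.size()`: the right-hand side is evaluated
# first, while the dictionary is still empty.
m = {}
m[0] = len(m)
print(m)  # prints {0: 0}
```

Logging from inside the operands is the simplest way to observe ordering without relying on any debugger or implementation detail.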

Software Reengineering: A Case Study and Lessons Learned

Computer Systems Technology. NIST Special Publication 500-193. Software Reengineering: A Case Study and Lessons Learned. Mary K. Ruhl and Mary T. Gunn, Computer Systems Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899. September 1991. U.S. DEPARTMENT OF COMMERCE, Robert A. Mosbacher, Secretary. NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY, John W. Lyons, Director. Reports on Computer Systems Technology: The National Institute of Standards and Technology (NIST) has a unique responsibility for computer systems technology within the Federal government. NIST's Computer Systems Laboratory (CSL) develops standards and guidelines, provides technical assistance, and conducts research for computers and related telecommunications systems to achieve more effective utilization of Federal information technology resources. CSL's responsibilities include development of technical, management, physical, and administrative standards and guidelines for the cost-effective security and privacy of sensitive unclassified information processed in Federal computers. CSL assists agencies in developing security plans and in improving computer security awareness training. This Special Publication 500 series reports CSL research and guidelines to Federal agencies as well as to organizations in industry, government, and academia. National Institute of Standards and Technology Special Publication 500-193, Natl. Inst. Stand. Technol. Spec. Publ. 500-193, 39 pages (Sept. 1991), CODEN: NSPUE2. U.S. GOVERNMENT PRINTING OFFICE, WASHINGTON: 1991. For sale by the Superintendent of Documents, U.S.