Right-Wing Extremists' Persistent Online Presence: History And

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


New Hate and Old: The Changing Face of American White Supremacy

A report from the Center on Extremism 09 18. New Hate and Old: The Changing Face of American White Supremacy. Our Mission: To stop the defamation of the Jewish people and to secure justice and fair treatment for all.

ABOUT THE CENTER ON EXTREMISM. The ADL Center on Extremism (COE) is one of the world’s foremost authorities on extremism, terrorism, anti-Semitism and all forms of hate. For decades, COE’s staff of seasoned investigators, analysts and researchers have tracked extremist activity and hate in the U.S. and abroad – online and on the ground. The staff, which represent a combined total of substantially more than 100 years of experience in this arena, routinely assist law enforcement with extremist-related investigations, provide tech companies with critical data and expertise, and respond to wide-ranging media requests. As ADL’s research and investigative arm, COE is a clearinghouse of real-time information about extremism and hate of all types. COE staff regularly serve as expert witnesses, provide congressional testimony and speak to national and international conference audiences about the threats posed by extremism and anti-Semitism. You can find the full complement of COE’s research and publications at ADL.org.

ADL (Anti-Defamation League) fights anti-Semitism and promotes justice for all. Join ADL to give a voice to those without one and to protect our civil rights. Learn more: adl.org

Cover: White supremacists exchange insults with counter-protesters as they attempt to guard the entrance to Emancipation Park during the ‘Unite the Right’ rally August 12, 2017 in Charlottesville, Virginia.

Post-Truth Protest: How 4Chan Cooked Up...Zagate Bullshit | Tuters

Post-Truth Protest: How 4chan Cooked Up the Pizzagate Bullshit. Marc Tuters, Emilija Jokubauskaitė, Daniel Bach. M/C Journal, Vol 21, No 3 (2018). About the authors: Marc Tuters, University of Amsterdam, https://oilab.eu

Introduction. On 4 December 2016, a man entered a Washington, D.C., pizza parlor armed with an AR-15 assault rifle in an attempt to save the victims of an alleged satanic pedophilia ring run by prominent members of the Democratic Party. While the story had already been discredited (LaCapria), at the time of the incident, nearly half of Trump voters were found to give a measure of credence to the same rumors that had apparently inspired the gunman (Frankovic). As we will discuss here, the bizarre conspiracy theory known as "Pizzagate" had in fact originated a month earlier on 4chan/pol/, a message forum whose very raison d’être is to protest against “political correctness” of the liberal establishment, and which had recently become a hub for “loose coordination” amongst members of the insurgent US ‘alt-right’ movement (Hawley 48). Over a period of 25 hours beginning on 3 November 2016, contributors to the /pol/ forum combed through a cache of private emails belonging to Hillary Clinton’s campaign manager John Podesta, obtained by Russian hackers (Franceschi-Bicchierai) and leaked by Julian Assange (Wikileaks).

COVID-19: How Hateful Extremists Are Exploiting the Pandemic

COVID-19: How hateful extremists are exploiting the pandemic. July 2020. Commission for Countering Extremism.

Contents: Introduction; Summary; Findings and recommendations; Beliefs and attitudes; Behaviours and activities; Harms; Conclusion and recommendations.

Introduction. Since the outbreak of the coronavirus (COVID-19) pandemic, the Commission for Countering Extremism has heard increasing reports of extremists exploiting the crisis to sow division and undermine the social fabric of our country. We have heard reports of British Far Right activists and Neo-Nazi groups promoting anti-minority narratives by encouraging users to deliberately infect groups, including Jewish communities[1] and of Islamists propagating anti-democratic and anti-Western narratives, claiming that COVID-19 is divine punishment [...]

[...] that COVID-19 is punishment on China for their treatment of Uighurs Muslims.[3] Other conspiracy theories suggest the virus is part of a Jewish plot[4] or that 5G is to blame.[5] The latter has led to attacks on 5G masts and telecoms engineers.[6] We are seeing many of these same narratives reoccur across a wide range of different ideologies. Fake news about minority communities has circulated on social media in an attempt to whip up hatred. These include false claims that mosques have remained open during lockdown.[7] Evidence has also shown that ‘Far Right politicians and news agencies [...] capitalis[ed] on the virus to push forward their anti-immigrant and populist message’.[8] Content such as this normalises Far Right attitudes and helps to reinforce intolerant and hateful views towards ethnic, racial or religious communities. Practitioners have told us how some Islamist activists may be exploiting legitimate concerns regarding securitisation to deliberately drive a wedge between communities and the British state.[9]

A Large Open Dataset from the Parler Social Network

Proceedings of the Fifteenth International AAAI Conference on Web and Social Media (ICWSM 2021). A Large Open Dataset from the Parler Social Network. Max Aliapoulios (New York University), Emmi Bevensee (SMAT), Jeremy Blackburn (Binghamton University), Barry Bradlyn (University of Illinois at Urbana-Champaign), Emiliano De Cristofaro (University College London), Gianluca Stringhini (Boston University), Savvas Zannettou (Max Planck Institute for Informatics).

Abstract. Parler is an “alternative” social network promoting itself as a service that allows users to “speak freely and express yourself openly, without fear of being deplatformed for your views.” Because of this promise, the platform became popular among users who were suspended on mainstream social networks for violating their terms of service, as well as those fearing censorship. In particular, the service was endorsed by several conservative public figures, encouraging people to migrate from traditional social networks. After the storming of the US Capitol on January 6, 2021, Parler has been progressively de-

[...] feasible in technical terms to create a new social media platform, but marketing the platform towards specific polarized communities is an extremely successful strategy to bootstrap a user base. In other words, there is a subset of users on Twitter, Facebook, Reddit, etc., that will happily migrate to a new platform, especially if it advertises moderation policies that do not restrict the growth and spread of political polarization, conspiracy theories, extremist ideology, hateful and violent speech, and mis- and dis-information. Parler.

Identity & In-Group Critique in James Mason's Siege

A Paler Shade of White: Identity & In-group Critique in James Mason’s Siege. J.M. Berger. RESOLVE NETWORK | April 2021. Racially and Ethnically Motivated Violent Extremism Series. https://doi.org/10.37805/remve2021.1 The views expressed in this publication are those of the author. They do not necessarily reflect the views of the RESOLVE Network, the U.S. Institute of Peace, or any entity of the U.S. government.

CONTENTS: EXECUTIVE SUMMARY; INTRODUCTION; HISTORY AND CONTEXT; METHODOLOGY: LINKAGE-BASED ANALYSIS; OVERVIEW OF CONTENT; IN-GROUP CRISIS: A PALER SHADE OF WHITE; IN-GROUPS IN CRISIS; THE OUT-GROUP IN THE IN-GROUP; CONCLUSION: INSIGHTS & RECOMMENDATIONS; BIBLIOGRAPHY.

EXECUTIVE SUMMARY. Discussions of extremist ideologies naturally focus on how in-groups criticize and attack out-groups. But

The Radical Roots of the Alt-Right

Gale Primary Sources: Start at the source. The Radical Roots of the Alt-Right. Josh Vandiver, Ball State University. Various source media, Political Extremism and Radicalism in the Twentieth Century. EMPOWER™ RESEARCH

The radical political movement known as the Alt-Right is, without question, a twenty-first century American phenomenon.[1] As the hipster-esque ‘alt’ prefix suggests, the movement aspires to offer a youthful alternative to conservatism or the Establishment Right, a clean break and a fresh start for the new century and the Millennial and ‘Z’ generations.[2]

Unlike earlier radical right movements, the Alt-Right operates natively within the political medium of late modernity – cyberspace – because it emerged within that medium and has been continuously shaped by its ongoing development. This operational innovation will continue to have far-reaching and unpredictable effects, but researchers should take care to precisely delineate the Alt-Right’s broader uniqueness. Investigating the Alt-Right’s incipient ideology – the ferment of political discourses, images, and ideas with which it seeks to define itself – one finds numerous [...]

[...] Revolution, and Evolian Traditionalism – for an audience. 3. A refined and intensified gender politics, a form of ‘ultra-masculinism.’

While the first has long been a feature of American political life (albeit a highly marginal one), and the second has been paralleled elsewhere on the transnational right, together the three make for an unusual fusion.

Seminal Alt-Right figures, such as Andrew Anglin,[4] Richard Spencer,[5] and Greg Johnson,[6] have been active for less than a decade. While none has continuously designated the movement as ‘Alt-Right’ (including Spencer, who coined the term), each has consistently returned to it as demarcating the ideological territory they share.

Name of Registered Political Party Or Independent Total

Final Results. 2016 GLA ELECTIONS: ELECTION OF THE LONDON ASSEMBLY MEMBERS. Declaration of Results of Poll.

I hereby give notice as Greater London Returning Officer at the election of the London Wide Assembly Members held on 5th May 2016 that the number of votes recorded at the election is as follows:

| Name of Registered Political Party or Independent | Total Votes |
|---|---|
| Animal Welfare Party | 25810 |
| Britain First - Putting British people first | 39071 |
| British National Party | 15833 |
| Caroline Pidgeon's London Liberal Democrats | 165580 |
| Christian Peoples Alliance | 27172 |
| Conservative Party | 764230 |
| Green Party - "vote Green on orange" | 207959 |
| Labour Party | 1054801 |
| Respect (George Galloway) | 41324 |
| The House Party - Homes for Londoners | 11055 |
| UK Independence Party (UKIP) | 171069 |
| Women's Equality Party | 91772 |
| Total number of good votes | 2615676 |

The number of ballot papers rejected was as follows:

| Reason for rejection | Ballot papers |
|---|---|
| (a) Unmarked | 18842 |
| (b) Uncertain | 1127 |
| (c) Voting for too many | 9613 |
| (d) Writing identifying voter | 145 |
| (e) Want of official mark | 6 |
| Total | 29733 |

And I do hereby declare that on the basis of the total number of London votes cast for each party and number of constituency seats they have gained, the eleven London Member seats have been allocated and filled as follows.

| Seat Number | Name of Registered Political Party or Independent |
|---|---|
| 1 | Green Party - "vote Green on orange" |
| 2 | UK Independence Party (UKIP) |
| 3 | Caroline Pidgeon's London Liberal Democrats |
| 4 | Conservative Party |
| 5 | Conservative Party |
| 6 | Labour Party |
| 7 | Green Party - "vote Green on orange" |
| 8 | Labour Party |
| 9 | Conservative Party |
| 10 | Labour Party |

What Is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?

What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber? Savvas Zannettou (Cyprus University of Technology), Barry Bradlyn (Princeton Center for Theoretical Science), Emiliano De Cristofaro (University College London), Haewoon Kwak (Qatar Computing Research Institute & Hamad Bin Khalifa University), Michael Sirivianos (Cyprus University of Technology), Gianluca Stringhini (University College London), Jeremy Blackburn (University of Alabama at Birmingham).

ABSTRACT. Over the past few years, a number of new “fringe” communities, like 4chan or certain subreddits, have gained traction on the Web at a rapid pace. However, more often than not, little is known about how they evolve or what kind of activities they attract, even though recent research has shown that they influence how false information reaches mainstream communities. This motivates the need to monitor these communities and analyze their impact on the Web’s information ecosystem. In August 2016, a new social network called Gab was created as an alternative to Twitter.

ACM Reference Format: Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, and Jeremy Blackburn. 2018. What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?. In WWW ’18 Companion: The 2018 Web Conference Companion, April 23–27, 2018, Lyon, France. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3184558.3191531

1 INTRODUCTION. The Web’s information ecosystem is composed of multiple com-

S.C.R.A.M. Gazette

S.C.R.A.M. Gazette. MARCH EDITION. 2017, Volume 4, Issue 3. Chief of Police Dennis J. Mc Enerney.

FBI, Sheriff's Office warn of scam artists who take aim at lonely hearts

DOWNTOWN - The FBI is warning of "romance scams" that can cost victims thousands of dollars for Valentine's Day weekend.

A romance scam typically starts on a dating or social media website, said FBI spokesman Garrett Croon. A victim will talk to someone online for weeks or months and develop a relationship with them, and the other person sometimes even sends gifts like flowers.

The victim and the other person are never able to meet, with the scammer saying they live or work out of the country or canceling [...]

[...] Croon said. Victims can be bilked for hundreds or thousands of dollars this way, and Croon said the most common victims are women who are 40-60 years old who might be widowed, di-

[...] and report that they have sent thousands of dollars to someone they met online,” Croon said. “And [they] have never even met that person, thinking they were in a relationship with that person.”

If you meet someone online and it seems "too good to be true" and every effort you make to meet that person fails, "watch out," Croon warned. Scammers might send photos from magazines and claim the photo is of them, say they're in love with the victim or claim to be unable to meet because they're a U.S. citizen who is traveling or working out of the country, Croon said.

Printed Covers Sailing Through the Air As Their Tables Are Flipped: REVIEWS and REFUTATIONS

A FIELD GUIDE TO STRAW MEN: Sadie and Exile, Esoteric Fascism, and Olympias Little White Lies. 1312 committee // February 2016

RECOMMENDED READING

Articles: Apoliteic music: Neo-Folk, Martial Industrial and metapolitical fascism by Anton Shekhovtsov; Former ELF/Green Scare Prisoner Exile Now a Fascist by NYC Antifa.

Books: The Nature of Fascism by Roger Griffin; Modernism and Fascism: The Sense of a Beginning under Mussolini and Hitler by Roger Griffin; Fascism edited by Roger Griffin; Black Sun: Aryan Cults, Esoteric Nazism, and the Politics of Identity by Nicholas Goodrick-Clarke; Gods of the Blood: The Pagan Revival and White Separatism by Mattias Gardell; Modernity and the Holocaust by Zygmunt Bauman; Exterminate All the Brutes by Sven Lindqvist; Confronting Fascism: Discussion Documents for a Militant Movement by Don Hamerquist, J. Sakai, Anti-Racist Action Chicago, and Mark Salotte; Baedan: A Journal of Queer Nihilism; Baedan 2: A Queer Journal of Heresy; Baedan 3: A Journal of Queer Time Travel; Against His-story, Against Leviathan! by Fredy Perlman; Caliban and the Witch: Women, the Body, and Primitive Accumulation by Silvia Federici; Witchcraft and the Gay Counterculture by Arthur Evans; The Many-Headed Hydra: Sailors, Slaves, Commoners, and the Hidden History of the Revolutionary Atlantic by Peter Linebaugh and Marcus Rediker; Gone to Croatan: Origins of North American Dropout Culture edited by Ron Sakolsky and James Koehnline; The Subversion of Politics: European Autonomous Social Movements and the Decolonization of Everyday Life by Georgy Katsiaficas; How the Irish Became White by Noel Ignatiev; How Deep is Deep Ecology? by George Bradford; Against the Megamachine: Essays on Empire and Its Enemies by David Watson; Elements of Refusal by John Zerzan; Against Civilization edited by John Zerzan; This World We Must Leave and Other Essays by Jacques Camatte.

[...] total resistance mounted to the advent of industrial society.

Far-Right Anthology

COUNTERING THE FAR RIGHT: AN ANTHOLOGY. EDITED BY DR RAKIB EHSAN AND DR PAUL STOTT. DEMOCRACY | FREEDOM | HUMAN RIGHTS. April 2020.

Published in 2020 by The Henry Jackson Society. The Henry Jackson Society, Millbank Tower, 21-24 Millbank, London SW1P 4QP. Registered charity no. 1140489. Tel: +44 (0)20 7340 4520. www.henryjacksonsociety.org © The Henry Jackson Society, 2020. All rights reserved. The views expressed in this publication are those of the author and are not necessarily indicative of those of The Henry Jackson Society or its Trustees. Title: “COUNTERING THE FAR RIGHT: AN ANTHOLOGY”. Edited by Dr Rakib Ehsan and Dr Paul Stott.

Front Cover: Edinburgh, Scotland, 23rd March 2019. Demonstration by the Scottish Defence League (SDL), with supporters of National Front and white pride, and a counter demonstration by Unite Against Fascism demonstrators, outside the Scottish Parliament, in Edinburgh. The Scottish Defence League claim their protest was against the sexual abuse of minors, but the opposition claim the rally masks the SDL’s racist beliefs. Credit: Jeremy Sutton-Hibbert/Alamy Live News.

About the Editors. Dr Paul Stott joined the Henry Jackson Society’s Centre on Radicalisation and Terrorism as a Research Fellow in January 2019. An experienced academic, he received an MSc in Terrorism Studies (Distinction) from the University of East London in 2007, and his PhD in 2015 from the University of East Anglia for the research “British Jihadism: The Detail and the Denial”.

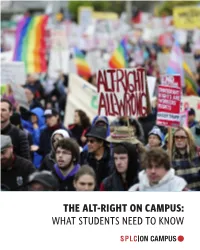

The Alt-Right on Campus: What Students Need to Know

THE ALT-RIGHT ON CAMPUS: WHAT STUDENTS NEED TO KNOW

About the Southern Poverty Law Center. The Southern Poverty Law Center is dedicated to fighting hate and bigotry and to seeking justice for the most vulnerable members of our society. Using litigation, education, and other forms of advocacy, the SPLC works toward the day when the ideals of equal justice and equal opportunity will become a reality. For more information about the Southern Poverty Law Center or to obtain additional copies of this guidebook, visit www.splconcampus.org. @splcenter, facebook/SPLCenter, facebook/SPLConcampus. © 2017 Southern Poverty Law Center

RICHARD SPENCER IS A LEADING ALT-RIGHT SPEAKER.

The Alt-Right and Extremism on Campus. An old and familiar poison is being spread on college campuses these days: the idea that America should be a country for white people. Under the banner of the Alternative Right – or “alt-right” – extremist speakers are touring colleges and universities across the country to recruit students to their brand of bigotry, often igniting protests and making national headlines. Their appearances have inspired a fierce debate over free speech and the direction of the country.

[...]ocratic ideals. They claim that “white identity” is under attack by multicultural forces using “political correctness” and “social justice” to undermine white people and “their” civilization. Characterized by heavy use of social media and memes, they eschew establishment conservatism and promote the goal of a white ethnostate, or homeland. As student activists, you can counter this movement. In this brochure, the Southern Poverty Law Center examines the alt-right, profiles its key figures