The History of Communications and Its Implications for the Internet

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Digital Subscriber Line (DSL) Technologies

CHAPTER21 Chapter Goals • Identify and discuss different types of digital subscriber line (DSL) technologies. • Discuss the benefits of using xDSL technologies. • Explain how ASDL works. • Explain the basic concepts of signaling and modulation. • Discuss additional DSL technologies (SDSL, HDSL, HDSL-2, G.SHDSL, IDSL, and VDSL). Digital Subscriber Line Introduction Digital Subscriber Line (DSL) technology is a modem technology that uses existing twisted-pair telephone lines to transport high-bandwidth data, such as multimedia and video, to service subscribers. The term xDSL covers a number of similar yet competing forms of DSL technologies, including ADSL, SDSL, HDSL, HDSL-2, G.SHDL, IDSL, and VDSL. xDSL is drawing significant attention from implementers and service providers because it promises to deliver high-bandwidth data rates to dispersed locations with relatively small changes to the existing telco infrastructure. xDSL services are dedicated, point-to-point, public network access over twisted-pair copper wire on the local loop (last mile) between a network service provider’s (NSP) central office and the customer site, or on local loops created either intrabuilding or intracampus. Currently, most DSL deployments are ADSL, mainly delivered to residential customers. This chapter focus mainly on defining ADSL. Asymmetric Digital Subscriber Line Asymmetric Digital Subscriber Line (ADSL) technology is asymmetric. It allows more bandwidth downstream—from an NSP’s central office to the customer site—than upstream from the subscriber to the central office. This asymmetry, combined with always-on access (which eliminates call setup), makes ADSL ideal for Internet/intranet surfing, video-on-demand, and remote LAN access. Users of these applications typically download much more information than they send. -

A Technology Comparison Adopting Ultra-Wideband for Memsen’S File Sharing and Wireless Marketing Platform

A Technology Comparison Adopting Ultra-Wideband for Memsen’s file sharing and wireless marketing platform What is Ultra-Wideband Technology? Memsen Corporation 1 of 8 • Ultra-Wideband is a proposed standard for short-range wireless communications that aims to replace Bluetooth technology in near future. • It is an ideal solution for wireless connectivity in the range of 10 to 20 meters between consumer electronics (CE), mobile devices, and PC peripheral devices which provides very high data-rate while consuming very little battery power. It offers the best solution for bandwidth, cost, power consumption, and physical size requirements for next generation consumer electronic devices. • UWB radios can use frequencies from 3.1 GHz to 10.6 GHz, a band more than 7 GHz wide. Each radio channel can have a bandwidth of more than 500 MHz depending upon its center frequency. Due to such a large signal bandwidth, FCC has put severe broadcast power restrictions. By doing so UWB devices can make use of extremely wide frequency band while emitting very less amount of energy to get detected by other narrower band devices. Hence, a UWB device signal can not interfere with other narrower band device signals and because of this reason a UWB device can co-exist with other wireless devices. • UWB is considered as Wireless USB – replacement of standard USB and fire wire (IEEE 1394) solutions due to its higher data-rate compared to USB and fire wire. • UWB signals can co-exists with other short/large range wireless communications signals due to its own nature of being detected as noise to other signals. -

Fax, Modem, and Text Support Over IP Configuration Guide, Cisco IOS Release 15M&T

Fax, Modem, and Text Support over IP Configuration Guide, Cisco IOS Release 15M&T Last Modified: 2015-07-31 Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

Communication & Implementation for Social Change

Communication & Implementation for Social Change: Mobilizing knowledge across geographic and academic borders Krystle van Hoof Communication for Development One-year master 15 Credits Spring/2016 Supervisor: Helen Hambly Odame ABSTRACT In many academic disciplines, there are promising discoveries and valuable information, which have the potential to improve lives but have not been transferred to or taken up in ‘real world’ practice. There are multiple, complex reasons for this divide between theory and practice—sometimes referred to as the ‘know-do’ gap— and there are a number of disciplines and research fields that have grown out of the perceived need to close these gaps. In the field of health, Knowledge Translation (KT) and its related research field, Implementation Science (IS) aim to shorten the time between discovery and implementation to save and improve lives. In the field of humanitarian development, the discipline of Communication for Development (ComDev) arose from a belief that communication methods could help close the perceived gap in development between high- and low-income societies. While Implementation Science and Communication for Development share some historical roots and key characteristics and IS is being increasingly applied in development contexts, there has been limited knowledge exchange between these fields. The aim of this paper is to provide an overview of the characteristics of IS and ComDev, analyze some key similarities and differences between them and discuss how knowledge from each could help inform the other to more effectively achieve their common goals. Keywords: Communication for development and social change, Diffusion of Innovations, Implementation Science, Knowledge Translation 1 TABLE OF CONTENTS 1. -

The History of the Telephone

STUDENT VERSION THE HISTORY OF THE TELEPHONE Activity Items There are no separate items for this activity. Student Learning Objectives • I will be able to name who invented the telephone and say why that invention is important. • I will be able to explain how phones have changed over time. THE HISTORY OF THE TELEPHONE STUDENT VERSION NAME: DATE: The telephone is one of the most important inventions. It lets people talk to each other at the same time across long distances, changing the way we communicate today. Alexander Graham Bell, the inventor of the telephone CENSUS.GOV/SCHOOLS HISTORY | PAGE 1 THE HISTORY OF THE TELEPHONE STUDENT VERSION 1. Like many inventions, the telephone was likely thought of many years before it was invented, and by many people. But it wasn’t until 1876 when a man named Alexander Graham Bell, pictured on the previous page, patented the telephone and was allowed to start selling it. Can you guess what “patented” means? CENSUS.GOV/SCHOOLS HISTORY | PAGE 2 THE HISTORY OF THE TELEPHONE STUDENT VERSION 2. The picture below, from over 100 years ago, shows Alexander Graham Bell using one of his first telephones to make a call from New York to Chicago. Alexander Graham Bell making a telephone call from New York to Chicago in 1892 Why do you think it was important that someone in New York could use the telephone to talk to someone in Chicago? CENSUS.GOV/SCHOOLS HISTORY | PAGE 3 THE HISTORY OF THE TELEPHONE STUDENT VERSION 3. Today, millions of people make phone calls each day, and many people have a cellphone. -

Title: Communicating with Light: from Telephony to Cell Phones Revision

Title: Communicating with Light: From Telephony to Cell Phones Revision: February 1, 2006 Authors: Jim Overhiser, Luat Vuong Appropriate Physics, Grades 9-12 Level: Abstract: This series of six station activities introduces the physics of transmitting "voice" information using electromagnetic signals or light. Students explore how light can be modulated to encode voice information using a simple version of Bell's original photophone. They observe the decrease of the intensity of open-air signals by increasing the distance between source and receiver, and learn the advantage of using materials with different indices of refraction to manipulate and guide light signals. Finally, students are introduced to the concept of bandwidth by using two different wavelengths of light to send two signals at the same time. Special Kit available on loan from CIPT lending library. Equipment: Time Required: Two 80-minute periods NY Standards 4.1b Energy may be converted among mechanical, electromagnetic, Met: nuclear, and thermal forms 4.1j Energy may be stored in electric or magnetic fields. This energy may be transferred through conductors or space and may be converted to other forms of energy. 4.3b Waves carry energy and information without transferring mass. This energy may be carried by pulses or periodic waves. 4.3i When a wave moves from one medium into another, the waves may refract due a change in speed. The angle of refraction depends on the angle of incidence and the property of the medium. 4.3h When a wave strikes a boundary between two media, reflection, transmission, and absorption occur. A transmitted wave may be refracted. -

Fast Detection of Forgery Image Using Discrete Cosine Transform Four Step Search Algorithm

Journal of Korea Multimedia Society Vol. 22, No. 5, May 2019(pp. 527-534) https://doi.org/10.9717/kmms.2019.22.5.527 Fast Detection of Forgery Image using Discrete Cosine Transform Four Step Search Algorithm Yong-Dal Shin†, Yong-Suk Cho†† ABSTRACT Recently, Photo editing softwares such as digital cameras, Paintshop Pro, and Photoshop digital can create counterfeit images easily. Various techniques for detection of tamper images or forgery images have been proposed in the literature. A form of digital forgery is copy-move image forgery. Copy-move is one of the forgeries and is used wherever you need to cover a part of the image to add or remove information. Copy-move image forgery refers to copying a specific area of an image itself and pasting it into another area of the same image. The purpose of copy-move image forgery detection is to detect the same or very similar region image within the original image. In this paper, we proposed fast detection of forgery image using four step search based on discrete cosine transform and a four step search algorithm using discrete cosine transform (FSSDCT). The computational complexity of our algorithm reduced 34.23 % than conventional DCT three step search algorithm (DCTTSS). Key words: Duplicated Forgery Image, Discrete Cosine Transform, Four Step Search Algorithm 1. INTRODUCTION and Fig. 1(b) shows an original image and a copy- moved forged image respectively. Digital cameras and smart phones, and digital J. Fridrich [1] proposed an exacting match image processing software such as Photoshop, method for the forged image detection of a copy- Paintshop Pro, you can duplicate digital counterfeit move forged image. -

IP Telephony Fundamentals: What You Need to Know to “Go to Market”

IP Telephony Fundamentals: What You Need to Know to “Go To Market” Ken Agress Senior Consultant PlanNet Consulting What Will Be Covered • What is Voice over IP? • VoIP Technology Basics • How Do I Know if We’re Ready? • What “Real” Cost Savings Should I Expect? • Putting it All Together • Conclusion, Q&A 2 What is Voice Over IP? • The Simple Answer – It’s your “traditional” voice services transported across a common IP infrastructure. • The Real Answer – It’s the convergence of numerous protocols, components, and requirements that must be balanced to provide a quality voice experience. 3 Recognize the Reality of IP Telephony • IP is the catalyst for convergence of technology and organizations • There are few plan templates for convergence projects • Everybody seems to have a strong opinion • Requires an educational investment in the technology (learning curve) – Requires an up-front investment in the technology that can be leveraged for subsequent deployments • Surveys indicate deployment is usually more difficult than anticipated • Most implementations are event driven (that means there is a broader plan) 4 IP Telephony vs. VoIP • Voice over IP – A broad technology that encompasses many, many facets. • IP Telephony – What you’re going to implement to actually deliver services across your network – Focuses more on features than possibilities – Narrows focus to specific implementations and requirements – Sets appropriate context for discussions 5 Why Does Convergence Matter? • Converged networks provide a means to simplify support structures and staffing. • Converged networks create new opportunities for a “richer” communications environment – Improved Unified Messaging – Unified Communications – The Promise of Video • Converged networks provide methods to reduce costs (if you do things right) 6 The Basics – TDM (vs. -

Fact Sheet: Information and Communication Technology

Fact Sheet: Information and Communication Technology • Approximately one billion youth live in the world today. This means that approximately one person in five is between the age of 15 to 24 years; • The number of youth living in developing countries will grow by 2025, to 89.5%: • Therefore, it is a must to take youth issues into considerations in the ICT development agenda and ICT policies of each country. • For people who live in the 32 countries where broadband is least affordable – most of them UN-designated Least Developed Countries – a fixed broadband subscription costs over half the average monthly income. • For the majority of countries, over half the Internet users log on at least once a day. • There are more ICT users than ever before, with over five billion mobile phone subscriptions worldwide, and more than two billion Internet users Information and communication technologies have become a significant factor in development, having a profound impact on the political, economic and social sectors of many countries. ICTs can be differentiated from more traditional communication means such as telephone, TV, and radio and are used for the creation, storage, use and exchange of information. ICTs enable real time communication amongst people, allowing them immediate access to new information. ICTs play an important role in enhancing dialogue and understanding amongst youth and between the generations. The proliferation of information and communication technologies presents both opportunities and challenges in terms of the social development and inclusion of youth. There is an increasing emphasis on using information and communication technologies in the context of global youth priorities, such as access to education, employment and poverty eradication. -

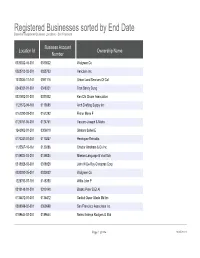

Registered Businesses Sorted by End Date Based on Registered Business Locations - San Francisco

Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco Business Account Location Id Ownership Name Number 0030032-46-001 0030032 Walgreen Co 0028703-02-001 0028703 Vericlaim Inc 1012834-11-141 0091116 Urban Land Services Of Cal 0348331-01-001 0348331 Tran Sandy Dung 0331802-01-001 0331802 Ken Chi Chuan Association 1121572-09-161 0113585 Arch Drafting Supply Inc 0161292-03-001 0161292 Fisher Marie F 0124761-06-001 0124761 Vaccaro Joseph & Maria 1243902-01-201 0306019 Shatara Suheil E 0170247-01-001 0170247 Henriquez Reinaldo 1125567-10-161 0130286 Chador Abraham & Co Inc 0189884-03-001 0189884 Mission Language & Vocl Sch 0318928-03-001 0318928 John W De Roy Chiroprac Corp 0030032-35-001 0030032 Walgreen Co 1228793-07-191 0148350 Willis John P 0310148-01-001 0310148 Blasko Peter B Et Al 0135472-01-001 0135472 Saddul Oscar Allado Md Inc 0369698-02-001 0369698 San Francisco Associates Inc 0189644-02-001 0189644 Neirro Erainya Rodgers G Etal Page 1 of 984 10/05/2021 Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco DBA Name Street Address City State Source Zipcode Walgreens #15567 845 Market St San Francisco CA 94103 Vericlaim Inc 500 Sansome St Ste 614 San Francisco CA 94111 Urban Land Services Of Cal 1170 Sacramento St 5d San Francisco CA 94108 Elizabeth Hair Studio 672 Geary St San Francisco CA 94102 Ken Chi Chuan Association 3626 Taraval St Apt 3 San Francisco CA 94116 Arch Drafting Supply Inc 10 Carolina St San Francisco CA 94103 Marie Fisher Interior -

Growth of the Internet

Growth of the Internet K. G. Coffman and A. M. Odlyzko AT&T Labs - Research [email protected], [email protected] Preliminary version, July 6, 2001 Abstract The Internet is the main cause of the recent explosion of activity in optical fiber telecommunica- tions. The high growth rates observed on the Internet, and the popular perception that growth rates were even higher, led to an upsurge in research, development, and investment in telecommunications. The telecom crash of 2000 occurred when investors realized that transmission capacity in place and under construction greatly exceeded actual traffic demand. This chapter discusses the growth of the Internet and compares it with that of other communication services. Internet traffic is growing, approximately doubling each year. There are reasonable arguments that it will continue to grow at this rate for the rest of this decade. If this happens, then in a few years, we may have a rough balance between supply and demand. Growth of the Internet K. G. Coffman and A. M. Odlyzko AT&T Labs - Research [email protected], [email protected] 1. Introduction Optical fiber communications was initially developed for the voice phone system. The feverish level of activity that we have experienced since the late 1990s, though, was caused primarily by the rapidly rising demand for Internet connectivity. The Internet has been growing at unprecedented rates. Moreover, because it is versatile and penetrates deeply into the economy, it is affecting all of society, and therefore has attracted inordinate amounts of public attention. The aim of this chapter is to summarize the current state of knowledge about the growth rates of the Internet, with special attention paid to the implications for fiber optic transmission. -

HISTORYOF COMMUNICATION in MALAYSIA (1940-2008) Sevia Mahdaliza Khairil Amree Zainol

1 HISTORYOF COMMUNICATION IN MALAYSIA (1940-2008) Sevia Mahdaliza Khairil Amree Zainol 1.1 INTRODUCTION The Second World War was, in some ways, one of the lowest points in Malaysia's history. Japanese forces landed on the north- east border of Malaya on 8 December 194 1 and, in one month, succeeded in establishing their control of both Peninsula Malaya and Sabah and Sarawak. On 15 March 1942, Singapore surrendered. Singapore was renamed Shonan and became the centre of a regional administrative headquarters that incorporated the Straits Settlements, and the Federated Malay States and Sumatra. Much like the British who had installed residents in the Malay ruling houses fifty years earlier, the Japanese appointed local governors to each state. The only difference was that this time, it was the Sultans who were placed in the positions of advisors. The Unfederated Malay States, Perlis, Kedah, Kelantan and Terengganu found themselves back under the sovereignty of Thailand in 1942, when Thailand declared war on Britain and the USA. Most large scale economic activities grounded to a halt during the period of the War. The production of tin which was already falling before the War stopped almost completely. People turned their occupation away from the cultivation of commercial crops, concentrating instead on planting rice and vegetables to ensure they did not go hungry. [1] 2 Wireless Communication Technology in Malaysia 1.2 HISTORY BEGAN For the telecommunication industry, all activity not specifically related to the war effort came to a stand still. A young telegraph operator identified only as E.R. joined what was then the Post and Telecoms Department in 1941.