A Critical Role Dungeons and Dragons Dataset

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Identifying Critical Roles, Easier Said Than Done! a Best Practice Approach to Addressing the Missing Link in Talent Management

Whitepaper Identifying Critical Roles, Easier Said Than Done! A best practice approach to addressing the missing link in talent management “ Colin Beames is a global thought leader in SWP. His content is more advanced By Colin Beames than typically what has existed on this subject.” BA (Hons) Qld, BEng (Hons), MBA, MAPS Corporate Psychologist Mike Haffenden, CEO, Corporate Research Forum, UK 2 Summary The Business Case for Identifying contribute to strategic impact. Critical Roles The business case for identifying Critical Roles A Critical Role Model Best Practice is compelling. By investing disproportionally Approach in the people and resources associated with such roles, this will have maximum leverage We present the Advanced Workforce on enhancing organisational performance, Strategies (AWS) Skills-Based Workforce contribute to the achievement of strategic and Segmentation Model, adapted from the work business objectives, and provide longer-term of Lepak and Snell (1999), as a “best practice” competitive advantage. approach to the identification of Critical Roles. Role differentiation (e.g., “make” versus “buy” This model serves as a basis for identifying roles) constitutes the essence of developing an various role types (Critical Roles, “make” roles, effective workforce strategy, starting with the “buy” roles, roles suitable for outsourcing). It identification of Critical Roles. is based on analyzing roles according to two dimensions of skills: (1) skills value and (2) skills However, definitions of what constitutes Critical uniqueness. Critical Roles are defined as having Roles abound, many of which are piecemeal higher skills value (i.e., impact on business or ad hoc, lacking in rigour, and/or of limited outcomes) and higher skills uniqueness (i.e., utility. -

The Critical Role of Youtube and Twitch in D&D's

The Critical Role of YouTube and Twitch in D&D’s Resurgence Premeet Sidhu The University of Sydney Camperdown, NSW, 2006 Australia [email protected] Marcus Carter The University of Sydney Camperdown, NSW, 2006 Australia [email protected] Keywords critical role, twitch, youtube, D&D, resurgence, convergence culture INTRODUCTION Over the last five years Dungeons and Dragons [D&D] (Arneson & Gygax, 1974) has risen in prominence and popularity, with a broadening of its player demographic. Though there are many factors motivating renewed and engaged play of D&D, in this presentation we draw on our study of D&D players in 2019 to discuss the impact that representation of D&D play on YouTube, Twitch, and in podcasts, has on the game’s play, experience, and resurgence. We argue that our results stress the impact of convergence culture (Jenkins, 2006) in understanding D&D play. METHODOLOGY This presentation draws on a qualitative study of 20 D&D (7F, 13M, aged 18 – 34) players across 4 player groups, involving pre-play interviews, observations of play sessions, and follow-up post-play interviews. Interviews interrogated player histories, motivations to play D&D, experiences playing, and engagement with D&D paratexts. THE CRITICAL ROLE OF CONVERGENCE CULTURE In alignment with Gerbner’s (1969) media cultivation theory, Duck et al. (2000) argue that media representations “have the potential to play an active part in shaping and framing our perceptions” (p. 11), which has similarly been applied in the study of game paratexts (Consalvo, 2007). The participants in our study were very cognisant of how media representation of D&D has changed over time; from overt stigmatisation to more positively skewed portrayals. -

ARCHITECTURE & Other Topics

JUNE | 2020 ARCHITECTURE & other topics WORLD SHOWCASE vbwyrde’s Elthos EXLUSIVE INTERVIEW with Chris Lockey STORMING THE BASTILLE Analysis | Art | Interviews A Community Project Prompts | Stories | Theory Issue 3 | 2020 ARCHITECTURE LETTER FROM THE EDITOR CONTENTS You can quickly recognize Paris by the presence of the 4 World Showcase: vbwyrde’s Elthos Eiffel Tower. Athens by the Parthenon. Sydney by its 10 Architecture & Cultural Opera House. Beyond single buildings, unique patterns 23 Assimilation in architecture denote different cultures across the A BOY IN THE WOODS 16 Exclusive Interview: globe and throughout time. In this issue, we delve into Chris Lockey the most visible components of civilization. It is my 23 A Boy in the Woods great pride to present to you the culmination of more than two months of hard work by the Worldbuilding 36 Red Sky at Night, Builder’s Delight Magazine team and community: the Architecture issue. 44 Words of Worldbuilding World Anvil Contest Results On a personal note, this issue is bittersweet for me, as 36 51 Community Art Features it is my last issue as Editor-in-Chief of Worldbuilding RED SKY AT NIGHT, 59 Storming the Bastille BUILDER’S DELIGHT Magazine. I’m not leaving, though. I am stepping down 65 33 Tales of War to a more focused role so that I can more effectively Stories 17-20 accomplish long-term goals. I’m very thankful to the ADDITIONAL CONTENT team for everything we’ve accomplished thus far, and 54 Resource Column 56 Artist Corner I look forward to the future as we continue to serve 76 Ask Us Anything the worldbuilding community. -

The Critical Role of Youth in Global Development

Issue Brief December 2001 The Critical Role of Youth in Global Development Nearly half of all people in the world today are under the age of 25. Effectively addressing the special needs of these youth is a critical challenge for the future. Youth, individuals between the ages of 15 and 24, make up over one-sixth of the world’s population, but are seldom recognized as a distinct group for the important role they will play in shaping the future. Defining Youth The meaning of the terms “youth,” “adolescents,” More than any other group, today’s young women and “young people” varies in different societies, as and men will impact how people in rich and poor do the different roles and responsibilities ascribed to countries live in the 21st century. Unfortunately, members of each group. In Egypt, for example, the hundreds of millions of youth—especially young average male enters the workforce at the age of 15, women—lack education, skills and job training, and in Niger about 75% of women give birth before employment opportunities, and health services their 20th birthday. This fact sheet uses the United effectively limiting their futures at a very early age. Nations’ definitions: As a result, youth may react by unleashing risky or ! Adolescents: 10-19 years of age (early harmful behavior against themselves or society. adolescence 10-14; late adolescence 15-19) Although youth may often be perceived as contributing ! Youth: 15-24 years of age to society’s problems, they are, in fact, important ! Young People: 10-24 years of age assets for the economic, political, and social life of their communities. -

City of Splendors: Waterdeep

™ 6620_88162_Ch1.indd20_88162_Ch1.indd 1 44/22/05/22/05 99:42:19:42:19 AAMM DESIGNER: Eric L. Boyd ADDITIONAL DESIGN: Ed Greenwood, James Jacobs, Steven E. Schend, Sean K Reynolds DEVELOPER: Richard Baker EDITORS: Cindi Rice, Gary Sarli EDITING MANAGER: Kim Mohan DESIGN MANAGER: Christopher Perkins DEVELOPMENT MANAGER: Jesse Decker SENIOR ART DIRECTOR RPG R&D: Stacy Longstreet DIRECTOR OF RPG R&D: Bill Slavicsek PRODUCTION MANAGERS: Josh Fischer, Randall Crews FORGOTTEN REALMS ART DIRECTOR: Mari Kolkowsky COVER ARTIST: Scott M. Fischer INTERIOR ARTISTS: Steve Belledin, Steve Ellis, Wayne England, Ralph Horsley, William O’Connor, Lucio Parrillo, Vinod Rams, Rick Sardinha GRAPHIC DESIGNER: Dee Barnett CARTOGRAPHER: Dennis Kauth, Robert Lazzaretti GRAPHIC PRODUCTION SPECIALIST: Angelika Lokotz IMAGE TECHNICIAN: Jason Wiley SPECIAL THANKS: Thomas M. Costa, Elaine Cunningham, George Krashos, Alex Roberts Major sources include Forgotten Realms Campaign Setting by Ed Greenwood, Sean Reynolds, Skip Williams, and Rob Heinsoo; Ruins of Undermountain II by Donald Bingle, Jean Rabe, and Norm Ritchie; Songs and Swords novels by Elaine Cunningham; Waterdeep novel by Richard Awlinson (Troy Denning); “Realmslore,” Ruins of Undermountain, Seven Sisters, Volo’s Guide to Waterdeep, Waterdeep, and Waterdeep and the North by Ed Greenwood; City System and Advanced Dungeons & Dragons comic by Jeff Grubb; Dragon #307 – “Monsters in the Alley” by James Jacobs, City of Splendors, The Lost Level, Maddgoth’s Castle, and Stardock by Steven E. Schend; and Skullport by Joseph C. Wolf. Based on the original DUNGEONS & DRAGONS® rules created by Gary Gygax and Dave Arneson and the new DUNGEONS & DRAGONS game designed by Jonathan Tweet, Monte Cook, Skip Williams, Richard Baker, and Peter Adkison. -

![Dec5387 [Pdf] the World of Critical Role: the History Behind the Epic](https://docslib.b-cdn.net/cover/6158/dec5387-pdf-the-world-of-critical-role-the-history-behind-the-epic-4666158.webp)

Dec5387 [Pdf] the World of Critical Role: the History Behind the Epic

[Pdf] The World Of Critical Role: The History Behind The Epic Fantasy Liz Marsham - pdf free book Download Online The World of Critical Role: The History Behind the Epic Fantasy Book, The World of Critical Role: The History Behind the Epic Fantasy Books Online, Download Online The World of Critical Role: The History Behind the Epic Fantasy Book, The World of Critical Role: The History Behind the Epic Fantasy Free Read Online, Read Best Book The World of Critical Role: The History Behind the Epic Fantasy Online, The World of Critical Role: The History Behind the Epic Fantasy PDF read online, PDF Download The World of Critical Role: The History Behind the Epic Fantasy Free Collection, Read The World of Critical Role: The History Behind the Epic Fantasy Full Collection Liz Marsham, The World of Critical Role: The History Behind the Epic Fantasy Full Collection, read online free The World of Critical Role: The History Behind the Epic Fantasy, Read The World of Critical Role: The History Behind the Epic Fantasy Books Online Free, book pdf The World of Critical Role: The History Behind the Epic Fantasy, The World of Critical Role: The History Behind the Epic Fantasy Books Online, Free Download The World of Critical Role: The History Behind the Epic Fantasy Books [E-BOOK] The World of Critical Role: The History Behind the Epic Fantasy Full eBook, The World of Critical Role: The History Behind the Epic Fantasy Free PDF Online, Read The World of Critical Role: The History Behind the Epic Fantasy Book Free, Download The World of Critical Role: The History Behind the Epic Fantasy E-Books, PDF The World of Critical Role: The History Behind the Epic Fantasy Full Collection, Free Download The World of Critical Role: The History Behind the Epic Fantasy Books [E-BOOK] The World of Critical Role: The History Behind the Epic Fantasy Full eBook, full book The World of Critical Role: The History Behind the Epic Fantasy, CLICK HERE TO DOWNLOAD pdf, epub, azw, mobi Description: The whole thing was very interesting, so when you do go back through that piece.. -

The Critical Role of Media Representations, Reduced Stigma and Increased Access in D&D's Resurgence

The Critical Role of Media Representations, Reduced Stigma and Increased Access in D&D’s Resurgence Premeet Sidhu The University of Sydney Camperdown, NSW, 2006 Australia [email protected] Marcus Carter The University of Sydney Camperdown, NSW, 2006 Australia [email protected] ABSTRACT Over the last five years Dungeons and Dragons [D&D] (Arneson & Gygax, 1974) has risen in prominence and popularity with a broadening of its player demographic. Though the game’s resurgence has been widely discussed in non-academic outlets, it has been neglected in academic literature. While there are many factors motivating renewed and engaged play of D&D, in this paper we draw on our 2019 study of contemporary D&D players to present key contextual factors of the game’s resurgence. Through discussion of our results, we argue that the influence of representations and trends in popular media, reduction of associated stigma, and impact of convergence culture (Jenkins, 2006) on increased game access, have led to the resurgence of D&D according to our participants and shed light on some key reasons for its success in recent years. Keywords D&D, resurgence, Critical Role, Twitch, YouTube, convergence culture, non-digital game, media, stigma, access INTRODUCTION Over the last five years, the tabletop role-playing game (RPG) Dungeons and Dragons [D&D] (Arneson & Gygax, 1974) has risen in prominence and popularity with a broadening of its player demographic. Though there are many internal and external factors motivating renewed and engaged play of the game, in this paper we draw on our 2019 study of 20 Australian D&D players, both experienced and new to the game, to discuss contextual factors that have shaped how current players position themselves regarding the changing nature of D&D play, its culture and current social perception. -

Boundary Negotiation and Critical Role Robyn Hope a Thesis in The

Play, Performance, and Participation: Boundary Negotiation and Critical Role Robyn Hope A Thesis in The Department Of Communication Studies Presented in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Media Studies) at Concordia University Montreal, Quebec, Canada November 2017 © Robyn Hope, 2017 CONCORDIA UNIVERSITY School of Graduate Studies This is to certify that the thesis prepared By: Robyn Hope Entitled: Play, Performance, and Participation: Boundary Negotiation on Critical Role and submitted in partial fulfillment of the requirements for the degree of Master of Arts (Media Studies) complies with the regulations of the University and meets the accepted standards with respect to originality and quality. Signed by the final Examining Committee: Owen Chapman______________ Chair Chair’s Name Bart Simon_________________ Examiner Examiner’s Name Matt Soar___________________ Examiner Examiner’s Name Mia Consalvo______________ Supervisor Supervisor’s Name Approved by _____________________________________________________ Chair of Department or Graduate Program Director _____________ 2017 ________________________________________________ Dean of Faculty iii Abstract Play, Performance and Participation: Boundary Negotiation on Critical Role Robyn Hope Critical Role is a livestreamed spectacle of play, in which eight professional voice actors come together once a week for a session of the tabletop roleplaying game Dungeons and Dragons. This show first launched in 2015, and, after one hundred and fifteen episodes spanning nearly four hundred and fifty hours of content, reached the conclusion of its first major narrative arc in October 2017. In this time, the show has attracted a dedicated fanbase of thousands. These fans, known as “Critters”, not only produce creative fanworks, but also undertake massive projects of archiving, timekeeping, transcribing and curating Critical Role. -

U UNSEELIE COURT THEATRE

169 OPENING NIGHT: FEBRUARY 23, 2018 u UNSEELIE COURT THEATRE UNDER THE DIRECTION OF WILL CROSSWAIT AND ANGELA MCCAIN CRITTERS AROUND THE WORLD AND THE THEATRE PRESENT Vox M chinA AN EXANDRIAN MUSICAL BOOK AND LYRICS BY THE CANTATA PANSOPHICAL INSPIRED BY THE MUSICAL HAMILTON BY LIN-MANUEL MIRANDA WITH Grog, Keyleth, Percy, Pike, Scanlan, VAx, Vex AND Raishan, Thordak, Umbrasyl, Vorugal SCENIC DESIGN COSTUME DESIGN LIGHTING DESIGN SOUND DESIGN PIT CREW PHOTOSHOP THOSE TORCH THINGS Limited HAIR AND WIG DESIGN MUSIC COORDINATION PRESS REPRESENTATIVE tALIESIN jAFFE MY LIFE A TWITTER BOT TECHNICAL SUPERVISION PRODUCTION STAGE MANAGER COMPANY MANAGER Buggy Spreadsheets Dadlan Discord CASTING ARRANGEMENTS GENERAL MANAGEMENT Will Crosswait Were made at the last minute WILL CROSSWAIT ANGELA MCCAIN MUSIC DIRECTION AND ORCHESTRATIONS BY Logic (pro, not the rapper) CHOREOGRAPHY BY OTTO VOICE DIRECTED BY WILL CROSSWAIT, Angela McCain THE WORLD PREMERE OF VOX MACHINA: AN EXANDRIAN MUSICAL WAS PRESENTED ON YOUTUBE IN FEBRUARY 2018 BY THE THEATRE ANGELA MCCAIN, ARTISTIC DIRECTOR WILL CROSSWAIT, EXECUTIVE DIRECTOR 170 Scott Danita Maitland Gilbert Joe Jesse Collins Theo Will John Crosswait MacMillan Shannon Randolph Baker 171 CAST Grog Strongjaw . Scott Maitland Keyleth of the Air Ashari . Danita Gilbert Percival Fredrickstein Von Mussel Kowalski De Rollo III . Joe Collins Pike Trickfoot . Jesse Theo Scanlan Shorthalt . Will Crosswait Vax’ildan . John MacMillan Vex’ahlia . Shannon Randolph Baker Matthew Mercer . James Coates Gilmore . Eric Peterson Allura . Angela McCain Kima . Kit Harroun Raishan . Angela McCain Thordak . Scott Maitland Vorugal . CriticalScroll Umbrasyl . VioletLegacy Kashaw . Robert Taylor Zahra . Liisa Lee Uriel . Andrew Goehring Earthbreaker Groon . Matthew A. Seibert Craven Edge . -

Living Pedagogies of a Game-Master: an Autoethnographic Education of Liminal Moments

Living Pedagogies of a Game-Master: An Autoethnographic Education of Liminal Moments Graeme Lachance Thesis Submitted to the Faculty of Graduate and Postdoctoral Studies in partial fulfillment of the degree of Master of Arts in Education Faculty of Education University of Ottawa © Graeme Lachance, Ottawa, Canada, 2016 PEDAGOGIES OF A GAME-MASTER ii Acknowledgements If a thesis were a monster, it would be hideous, with at least a dozen eyes, oozing, slime- filled pores, and terrible, unnatural strength. It would be talked about as the one creature capable of defeating even the most skilled and powerful—whose ranks I am not yet worthy of joining. This monograph was not an adversary taken down alone. Special thanks are extended to the members of the groups in which I have acted as game-master, for providing the fun and fodder on which this research stands. This thesis would not have been possible if it were not for the love, support, and encouragement of my family and friends, especially Catia, who kept an encouraging hand on my shoulder even through the toughest moments. I am appreciative my of thesis committee members, Dr. Awad Ibrahim and Dr. Nicholas Ng-A-Fook, whose advice, ideas, and edits shaped this into a fully-formed project worthy of publication. Without their insight, expertise, and support I surely would not have finished. Lastly, I would especially like to recognize the time and support of my thesis supervisor, Dr. Patricia Palulis. Her guidance, craft, and belief in the non-traditional allowed this idea to flourish. Thank you. PEDAGOGIES OF A GAME-MASTER iii Abstract This study presents the concept of the pedagogy of the game-master. -

The Critical Role of Oral Language in Reading Instruction and Assessment Elizabeth Brooke, Ph.D., CCC-SLP Chief Education Officer, Lexia Learning and Rosetta Stone

The Critical Role of Oral Language in Reading Instruction and Assessment Elizabeth Brooke, Ph.D., CCC-SLP Chief Education Officer, Lexia Learning and Rosetta Stone Unlike mathematics or science, reading is the only academic area in which we expect children to arrive as kindergarteners with a basic skill level. Research has shown that oral language—the foundations of which are developed by age four—has a profound impact on children’s preparedness for kindergarten and on their success throughout their academic career. Children typically enter school with a wide range of background knowledge and oral language ability, attributable in part to factors such as children’s experiences in the home and their socioeconomic status (SES) (Hart & Risley, 1995; Fernald Marchman, & Weisleder, 2013). The resulting gap in academic ability tends to persist or grow throughout their school experience (Fielding, Kerr, & Rosier, 2007; Juel, Biancarosa, Coker & Deffes, 2003), which is why a strong focus on oral language development in the early years is imperative for future academic success. This white paper will examine the critical role of oral language in reading instruction and assessment and the implications for classroom teachers. What is Oral Language? Oral language is often associated with vocabulary as the main component. However, in the broadest definition, oral language consists of phonology, grammar, morphology, vocabulary, discourse, and pragmatics. The acquisition of these skills often begins at a young age, before students begin focusing on print-based concepts such as sound-symbol correspondence and decoding. Because these skills are often developed early in life, children with limited oral language ability at the time they enter kindergarten are typically at a distinct disadvantage (Fielding et al., 2007). -

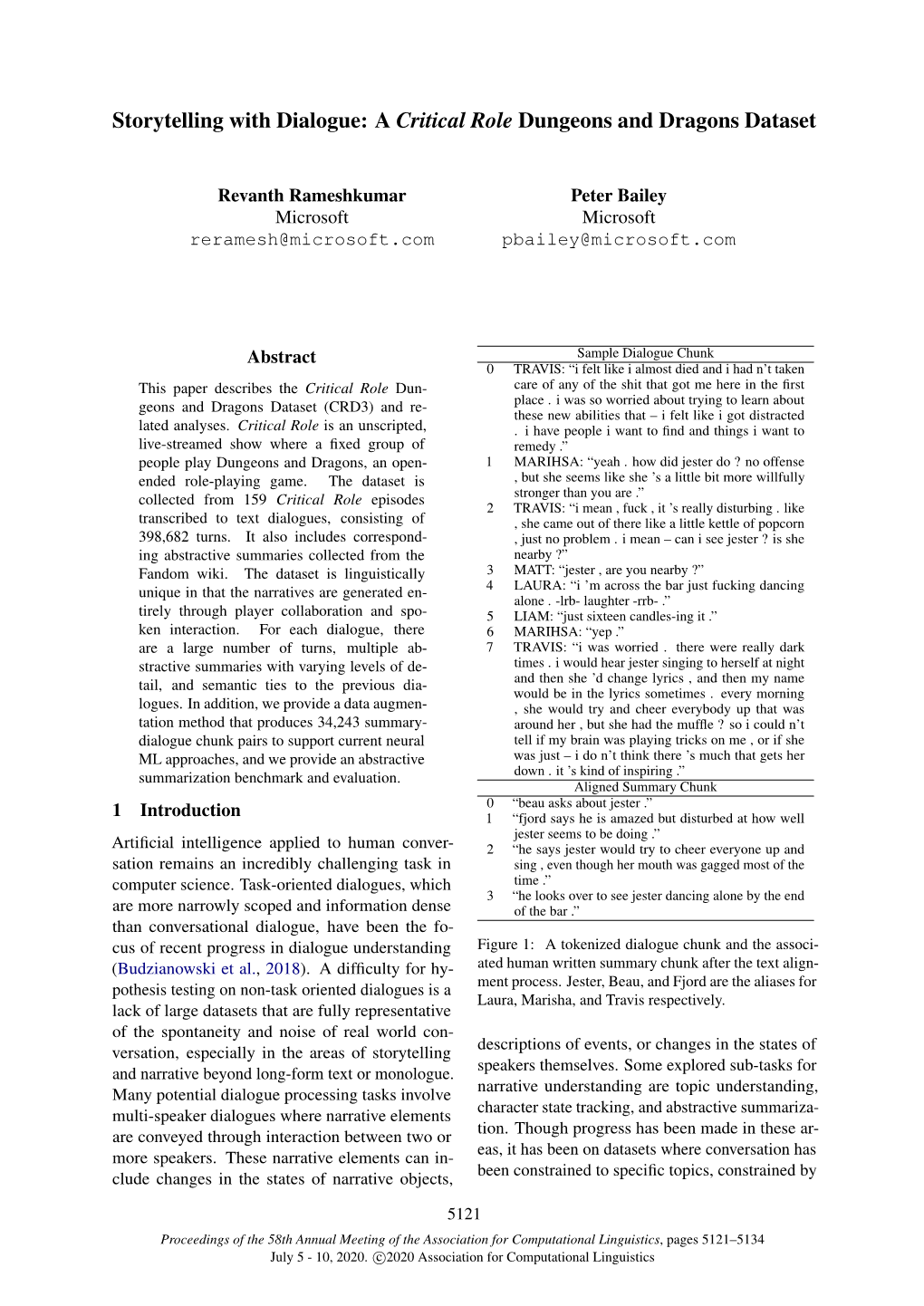

Storytelling with Dialogue: a Critical Role Dungeons and Dragons Dataset

Storytelling with Dialogue: A Critical Role Dungeons and Dragons Dataset Revanth Rameshkumar Peter Bailey Microsoft Microsoft [email protected] [email protected] Abstract Sample Dialogue Chunk 0 TRAVIS: “i felt like i almost died and i had n’t taken This paper describes the Critical Role Dun- care of any of the shit that got me here in the first geons and Dragons Dataset (CRD3) and re- place . i was so worried about trying to learn about these new abilities that – i felt like i got distracted lated analyses. Critical Role is an unscripted, . i have people i want to find and things i want to live-streamed show where a fixed group of remedy .” people play Dungeons and Dragons, an open- 1 MARIHSA: “yeah . how did jester do ? no offense ended role-playing game. The dataset is , but she seems like she ’s a little bit more willfully collected from 159 Critical Role episodes stronger than you are .” 2 TRAVIS: “i mean , fuck , it ’s really disturbing . like transcribed to text dialogues, consisting of , she came out of there like a little kettle of popcorn 398,682 turns. It also includes correspond- , just no problem . i mean – can i see jester ? is she ing abstractive summaries collected from the nearby ?” Fandom wiki. The dataset is linguistically 3 MATT: “jester , are you nearby ?” unique in that the narratives are generated en- 4 LAURA: “i ’m across the bar just fucking dancing alone . -lrb- laughter -rrb- .” tirely through player collaboration and spo- 5 LIAM: “just sixteen candles-ing it .” ken interaction.