Generating Fuzzy Queries from Weighted Fuzzy Classifier Rules

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The State of the Art in Developing Fuzzy Ontologies: a Survey

The State of the Art in Developing Fuzzy Ontologies: A Survey Zahra Riahi Samani1, Mehrnoush Shamsfard2, 1Faculty of Computer Science and Engineering, Shahid Beheshti University Email:[email protected] 1Faculty of Computer Science and Engineering, Shahid Beheshti University Email:[email protected] Abstract Conceptual formalism supported by typical ontologies may not be sufficient to represent uncertainty information which is caused due to the lack of clear cut boundaries between concepts of a domain. Fuzzy ontologies are proposed to offer a way to deal with this uncertainty. This paper describes the state of the art in developing fuzzy ontologies. The survey is produced by studying about 35 works on developing fuzzy ontologies from a batch of 100 articles in the field of fuzzy ontologies. 1. Introduction Ontology is an explicit, formal specification of a shared conceptualization in a human understandable, machine- readable format. Ontologies are the knowledge backbone for many intelligent and knowledge based systems [1, 2]. However, in some domains, real world knowledge is imprecise or vague. For example in a search engine one may be interested in ”an extremely speedy, small size, not expensive car”. Classical ontologies based on crisp logic are not capable of handling this kind of knowledge. Fuzzy ontologies were proposed as a combination of fuzzy theory and ontologies to tackle such problems. On the topic of fuzzy ontology, we studied about 100 research articles which can be categorized into four main categories. The first category includes the research works on applying fuzzy ontologies in a specific domain- application to improve the performance of the application such as group decision making systems [3] or visual video content analysis and indexing [4].The ontology development parts of the works in this category were done manually or were not of much concentration. -

Zerohack Zer0pwn Youranonnews Yevgeniy Anikin Yes Men

Zerohack Zer0Pwn YourAnonNews Yevgeniy Anikin Yes Men YamaTough Xtreme x-Leader xenu xen0nymous www.oem.com.mx www.nytimes.com/pages/world/asia/index.html www.informador.com.mx www.futuregov.asia www.cronica.com.mx www.asiapacificsecuritymagazine.com Worm Wolfy Withdrawal* WillyFoReal Wikileaks IRC 88.80.16.13/9999 IRC Channel WikiLeaks WiiSpellWhy whitekidney Wells Fargo weed WallRoad w0rmware Vulnerability Vladislav Khorokhorin Visa Inc. Virus Virgin Islands "Viewpointe Archive Services, LLC" Versability Verizon Venezuela Vegas Vatican City USB US Trust US Bankcorp Uruguay Uran0n unusedcrayon United Kingdom UnicormCr3w unfittoprint unelected.org UndisclosedAnon Ukraine UGNazi ua_musti_1905 U.S. Bankcorp TYLER Turkey trosec113 Trojan Horse Trojan Trivette TriCk Tribalzer0 Transnistria transaction Traitor traffic court Tradecraft Trade Secrets "Total System Services, Inc." Topiary Top Secret Tom Stracener TibitXimer Thumb Drive Thomson Reuters TheWikiBoat thepeoplescause the_infecti0n The Unknowns The UnderTaker The Syrian electronic army The Jokerhack Thailand ThaCosmo th3j35t3r testeux1 TEST Telecomix TehWongZ Teddy Bigglesworth TeaMp0isoN TeamHav0k Team Ghost Shell Team Digi7al tdl4 taxes TARP tango down Tampa Tammy Shapiro Taiwan Tabu T0x1c t0wN T.A.R.P. Syrian Electronic Army syndiv Symantec Corporation Switzerland Swingers Club SWIFT Sweden Swan SwaggSec Swagg Security "SunGard Data Systems, Inc." Stuxnet Stringer Streamroller Stole* Sterlok SteelAnne st0rm SQLi Spyware Spying Spydevilz Spy Camera Sposed Spook Spoofing Splendide -

A Fuzzy-Rule Based Ontology for Urban Object Recognition

A Fuzzy-rule Based Ontology for Urban Object Recognition Stella Marc-Zwecker, Khalid Asnoune and Cedric´ Wemmert ICube Laboratory, BFO team, University of Strasbourg, CNRS, Illkirch Cedex, Strasbourg, France Keywords: Ontologies, OWL, SWRL, Fuzzy Logic, Urban Object Recognition, Satellite Images. Abstract: In this paper we outline the principles of a methodology for semi-automatic recognition of urban objects from satellite images. The methodology aims to provide a framework for bridging the semantic gap problem. Its principle consists in linking abstract geographical domain concepts with image segments, by the means of ontologies use. The imprecision of image data and of qualitative rules formulated by experts geographers are handled by fuzzy logic mechanisms. We have defined fuzzy rules, implemented in SWRL (Semantic Web Rule Language), which allow classification of image segments in the ontology. We propose some fuzzy classification strategies, which are compared and evaluated through an experimentation performed on a VHR image of Strasbourg region. 1 INTRODUCTION of cognitive vision. Their approach relies on an on- tology of visual concepts, such as colour and texture, In the domain of knowledge representation for image which can be seen as an intermediate layer between recognition, we outline the principles of a method- domain knowledge and image processing procedures. ology for semi-automatic extraction of urban objects However, this kind of learning system requires that from Very High Resolution (VHR) satellite images. the expert produces examples for each of the concepts This methodology relies on the design and implemen- he is looking for.(Athanasiadis et al., 2007) present a tation of ontologies, which are an effective tool for framework for both image segmentation and object domain’s knowledge formalization and exploitation labeling using an ontology in the domain of multime- (Gruber, 1993) and for the implementation of reason- dia analysis. -

Fuzzy Sets, Fuzzy Logic and Their Applications • Michael Gr

Fuzzy Sets, Fuzzy Logic and Their Applications • Michael Gr. Voskoglou • Michael Gr. Fuzzy Sets, Fuzzy Logic and Their Applications Edited by Michael Gr. Voskoglou Printed Edition of the Special Issue Published in Mathematics www.mdpi.com/journal/mathematics Fuzzy Sets, Fuzzy Logic and Their Applications Fuzzy Sets, Fuzzy Logic and Their Applications Special Issue Editor Michael Gr. Voskoglou MDPI • Basel • Beijing • Wuhan • Barcelona • Belgrade • Manchester • Tokyo • Cluj • Tianjin Special Issue Editor Michael Gr. Voskoglou Graduate Technological Educational Institute of Western Greece Greece Editorial Office MDPI St. Alban-Anlage 66 4052 Basel, Switzerland This is a reprint of articles from the Special Issue published online in the open access journal Mathematics (ISSN 2227-7390) (available at: https://www.mdpi.com/journal/mathematics/special issues/Fuzzy Sets). For citation purposes, cite each article independently as indicated on the article page online and as indicated below: LastName, A.A.; LastName, B.B.; LastName, C.C. Article Title. Journal Name Year, Article Number, Page Range. ISBN 978-3-03928-520-4 (Pbk) ISBN 978-3-03928-521-1 (PDF) c 2020 by the authors. Articles in this book are Open Access and distributed under the Creative Commons Attribution (CC BY) license, which allows users to download, copy and build upon published articles, as long as the author and publisher are properly credited, which ensures maximum dissemination and a wider impact of our publications. The book as a whole is distributed by MDPI under the terms and conditions of the Creative Commons license CC BY-NC-ND. Contents About the Special Issue Editor ...................................... vii Preface to ”Fuzzy Sets, Fuzzy Logic and Their Applications” ................... -

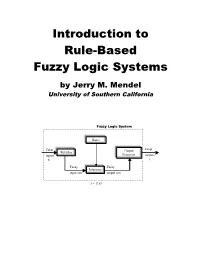

Introduction to Rule-Based Fuzzy Logic Systems

Introduction to Rule-Based Fuzzy Logic Systems by Jerry M. Mendel University of Southern California Fuzzy Logic System Rules Crisp Crisp Output Fuzzifier inputs Processor outputs x y Fuzzy Fuzzy Inference input sets output sets y = f( x) CONTENTS A Self-Study Course (Introduction) Lesson 1 Introduction and Overview Lesson 2 Fuzzy Sets–Part 1 Lesson 3 Fuzzy Sets–Part 2 Lesson 4 Fuzzy Sets–Part 3 Lesson 5 Fuzzy Logic Lesson 6 Case Studies Lesson 7 Singleton Type-1 Fuzzy Logic Systems–Part 1 Lesson 8 Singleton Type-1 Fuzzy Logic Systems–Part 2 Lesson 9 Singleton Type-1 Fuzzy Logic Systems–Part 3 Lesson 10 Singleton Type-1 Fuzzy Logic Systems–Part 4 Lesson 11 Non-Singleton Type-1 Fuzzy Logic Systems Lesson 12 TSK Fuzzy Logic Systems Lesson 13 Applications of Type-1 FLSs Lesson 14 Computation Lesson 15 Open Issues With Type-1 FLSs Solutions “Profile of Lotfi Zadeh,” IEEE Spectrum, June 1995, pp. 32-35. Final Exam Solution to Final Exam Introduction to Rule-Based Fuzzy Logic Systems A Self-Study Course This course was designed around Chapters 1, 2, 4–6, 13 and 14 of Uncertain Rule-Based Fuzzy Logic Systems: Introduction and new Directions by Jerry M. Mendel, Prentice-Hall 2001. The goal of this self- study course is to provide training in the field of rule-based fuzzy logic systems. In this course, which is the first of two self-study courses, the participant will focus on rule-based fuzzy logic systems when no uncertainties are present. This is analogous to first studying deterministic systems before studying random systems. -

Patterns of Fuzzy Rule-Based Inference Valerie Cross Systems Analysis Department, Miami University, Oxford, Ohio

Patterns of Fuzzy Rule-Based Inference Valerie Cross Systems Analysis Department, Miami University, Oxford, Ohio Thomas Sudkamp Department of Computer Science, Wright State University, Dayton, Ohio ABSTRACT Processing information in fuzzy rule-based systems generally employs one of two patterns of inference: composition or compatibility modification. Composition origi- nated as a generalization of binary logical deduction to fuzzy logic, while compatibility modification was developed to facilitate the evaluation of rules by separating the evaluation of the input from the generation of the output. The first step in compatibility modification inference is to assess the degree to which the input matches the antecedent of a rule. The result of this assessment is then combined with the consequent of the rule to produce the output. This paper examines the relationships between these two patterns of inference and establishes conditions under which they produce equivalent results. The separation of the evaluation of input from the generation of output permits a flexibility in the methods used to compare the input with the antecedent of a rule with multiple clauses. In this case, the degree to which the input and the rule antecedent match is determined by the application of a compatibility measure and an aggregation operator. The order in which these operations are applied may affect the assessment of the degree of matching, which in turn may cause the production of different results. Separability properties are introduced to define conditions under which compatibility modification inference is independent of the input evaluation strategy. KEYWORDS: fuz~ inference, compatibility measures, approximate analogi- cal reasoning, fuzzy if-then rules 1. -

Fuzzy Logic, Probability, and Measurement for CWW L

Tutorial Boris Kovalerchuk Dept. of Computer Science Central Washington University, USA [email protected] June 10, 2012, WCCI 2012, Brisbane 3 PM‐5 PM Agenda at glance Background Approaches Advantages and disadvantages Open questions for the future research Conclusion Tutorial Topics Links of Fuzzy and Probability concepts with the Representative Measurement theory from the mathematical psychology Probabilistic Interpretation of Fuzzy sets for CWW Interpretation of fuzzy sets and probabilities in a linguistic context spaces Interpretation of fuzzy sets with the concept of irrational agents Agenda Topics Motivation Computing with words as “human logic” modeling Tutorial Motivation Fuzzy Logic, Probability, and Measurement for CWW L. Zadeh initiated Computing with Words (CWW) studies with fuzzy logic and probability combined. He proposed a set of CWW test tasks and asked whether probability can solve them. CWW and mathematical principles for modeling human logic under uncertainty comparing probabilistic, stochastic and fuzzy logic approaches. The most recent discussion on this topic started at the BISC group with a “naïve” question from a student: “What is the difference between fuzzy and probability?” that generated multiple answers and a very active discussion. Numerous debates can be found in the literature [e.g., Cheeseman; Dubous, Prade; Hisdal; Kovalerchuk,] and BISC 2009‐2012 about relations between fuzzy logic and probability theory. Panel on CWW This tutorial can be a starting “warming up” point for the round table on -

Tesis Doctoral Autor: Ángel Laureano Garrido Bullón Título: Filosofía Y Matemáticas De La Vaguedad Y De La Incertidumbre

TESIS DOCTORAL AUTOR: ÁNGEL LAUREANO GARRIDO BULLÓN LICENCIADO EN CIENCIAS MATEMÁTICAS, ESPECIALIDAD DE MATEMÁTICA PURA TÍTULO: FILOSOFÍA Y MATEMÁTICAS DE LA VAGUEDAD Y DE LA INCERTIDUMBRE (PHILOSOPHY AND MATHEMATICS OF VAGUENESS AND UNCERTAINTY) DEPARTAMENTO DE FILOSOFÍA, FACULTAD DE FILOSOFÍA DE LA UNED ELABORACIÓN, REDACCIÓN Y PRESENTACIÓN: EN CASTELLANO 2013 1 2 TESIS DOCTORAL AUTOR: ÁNGEL LAUREANO GARRIDO BULLÓN LICENCIADO EN CIENCIAS MATEMÁTICAS, ESPECIALIDAD DE MATEMÁTICA PURA TÍTULO: FILOSOFÍA Y MATEMÁTICAS DE LA VAGUEDAD Y DE LA INCERTIDUMBRE (PHILOSOPHY AND MATHEMATICS OF VAGUENESS AND UNCERTAINTY) DEPARTAMENTO DE FILOSOFÍA, FACULTAD DE FILOSOFÍA DE LA UNED ELABORACIÓN, REDACCIÓN Y PRESENTACIÓN: EN CASTELLANO DIRECTOR: Dr. D. DIEGO SANCHEZ MECA Facultad/Escuela/Instituto: FACULTAD DE FILOSOFIA Departamento: FILOSOFIA CODIRECTORA: Dra. Dª MARIA PIEDAD YUSTE LECIÑENA Facultad/Escuela/Instituto: FACULTAD DE FILOSOFIA Departamento: FILOSOFIA 3 AGRADECIMIENTOS. Éste trabajo se empezó a encauzar bajo los auspicios del Proyecto de Investigación denominado “El papel de las controversias en la producción de las prácticas teóricas y en el fortalecimiento de la sociedad civil”, cuyo investigador principal fuera en su día el tristemente desaparecido profesor Quintín Racionero Carmona, por lo que ahora está siendo dirigido por la profesora Cristina De Peretti Peñaranda. La institución convocante de dicho Proyecto (el FF2011-29623) fue el MICINN del Estado Español. Agradezco públicamente la ayuda y el siempre sabio consejo de mi primer mentor, -

Fuzzy Temporal Predicate Logic for Incomplete Information

EasyChair Preprint № 3558 Modeling Fuzzy Temporal Predicate Logic and Fuzzy Temporal Agent Venkata Subba Reddy Poli EasyChair preprints are intended for rapid dissemination of research results and are integrated with the rest of EasyChair. June 5, 2020 Manuscript Click here to download Manuscript: IJFSifuzzy14ftpl.pdf ModelingFuzzy Fuzzy Temporal Temporal Predicate Logic Logic and Fuzzy for TemporalIncomplete Agent 1 2 Information 3 4 5 P.Venkata Subba Reddy 6 Computer Science and Engineering 7 Sri Venkateswara University College of Engineering 8 Tirupati-517502, India 9 [email protected] 10 11 12 13 Abstract—The fuzzy temporal logic is fuzzy logic to deal time 14 constraints of incomplete information. The Fuzzy Temporal II. FUZZY LOGIC 15 logic is to solve independent time constraints problems of Zadeh [11] introduced fuzzy set as model to deal with 16 incomplete information in AI. The Knowledge Representation imprecise, inconsistent and inexact information. The fuzzy set (KR) is the main component to solve the problems in Artificial 17 A of X is defined by its membership function µ (x) and take 18 Intelligence (AI). In this paper, fuzzy predicate temporal logic is A the values in the unit interval [0, 1] 19 studied for incomplete information with time constraints. Fuzzy µ (x) →[0, 1], where x єX is in some Universe of 20 Temporal Agent is discussed for Fuzzy Automated Reasoning. A discourse. 21 The Logic Programming Prolog is discussed for Fuzzy Temporal Predicate Logic For example, 22 Consider the fuzzy proposition “x is tall” and the fuzzy set 23 Keywords— fuzzy logic; fuzzy reasoning; fuzzy agent; fuzzy „tall” is defined as 24 modules; temporal logic fuzzy temporal predicate logic, logic µ (x)→[0, 1], x Є X 25 tall programming tall= 0.5/x1 +0.6/x2 + 0.7/x3 +0.8/x4 +0.9/x5 26 . -

The Paradoxical Success of Fuzzy Logic Charles Elkan, University of California, San Diego

I The Paradoxical Success of Fuzzy Logic Charles Elkan, University of California, San Diego Fuzzy logic methods have been used suc- Definition 1: Let A and B be arbitrary as- itively, and it is natural to apply it in rea- cessfully in many real-world applications, sertions. Then soning about a set of fuzzy rules, since but the foundations of fuzzy logic remain 7(A A 4)and B v (4A 4)are both t(A A B) = min [ t(A),t(B)) under attack. Taken together, these two reexpressions of the classical implication t(A v B) = max { t(A),t(B)] facts constitute a paradox. A second para- 4 4 B. It was chosen for this reason, but t(4)= 1 - t(A) dox is that almost all of the successful the same result can also be proved using t(A)= t(B)if A and B are logically fuzzy logic applications are embedded con- many other ostensibly reasonable logical equivalent. trollers, while most of the theoretical pa- aquivalences. pers on fuzzy methods deal with knowl- Depending how the phrase “logically equiv- It is important to be clear on what ex- edge representation and reasoning. I hope alent” is understood, Definition 1 yields actly Theorem 1 says, and what it does not here to resolve these paradoxes by identify- different formal systems. A fuzzy logic sys- say. On the one hand, the theorem applies ing which aspects of fuzzy logic render it tem is intended to allow an indefinite variety to any more general formal system that useful in practice, and which aspects are of numerical truth values. -

Fuzzy Reasoning

Fuzzy Reasoning Outline ●Introduction ●Bivalent & Multivalent Logics ●Fundamental fuzzy concepts ●Fuzzification ●Defuzzification ●Fuzzy Expert System ●Neuro-fuzzy System Introduction ●Fuzzy concept first introduced by Lotfi Zadeh in the 1965 ●Form of many-valued logic; it deals with reasoning that is approximate rather than fixed and exact. Compared to traditional binary sets, fuzzy logic variables may have a truth value that ranges in degree between 0 and 1 ●Resembles human reasoning in its use of imprecise information to generate decisions, unlike classical logic which requires a deep understanding of a system, exact equations, and precise numeric values Bivalent Logics ●Classical logic, often described as Aristotelian logic – True or false ●Bayesian Reasoning and probabilistic models – Each fact is either True or false – Often unclear whether a given fact is true or false ●Probability – A particular expression will turn out to be true Multivalent Logics ●Three-valued logic – True , false, and undetermined – 1 represents true, 0 represents false, and real numbers between 0 and 1 represent degree of truth Bivalent Logic vs. Multivalent Logic ●A fact has a probability value of 0.5, means it is as likely to be true as it is to be false, or it will be either true or false – There is Uncertainty , (at the moment we don’t know whether the proposition will be true or false, but it will definitely either be true or false—not both, not neither, and not something in between) ●A proposition has a logical value of 0.5, means it is about the degree to which that statement is true – We are Certain of the truth value of the proposition, it is just vague (it is neither true nor false, or it is both true and false) Linguistic Variables ●Often used to facilitate the expression of rules and facts ●A linguistic variable such as “height” may have a value from a range of fuzzy values including “tall” “short” and “medium.” ●It may be defined over the Universe of discourse from 2 feet up to 8 feet. -

Design Considerations of Time in Fuzzy Systems Applied Optimization

Design Considerations of Time in Fuzzy Systems Applied Optimization Volume 35 Series Editors: Panos M. Pardalos University of Florida, U.S.A. Donald Hearn University of Florida, U.S.A. Design Considerations of TilTIe in Fuzzy Systellls by Jemej Virant Faculty of Computer and Information Science. University of Ljubljana. Slovenia .... "Springer-Science+Business Media, B.V. A C.I.P. Catalogue record for this book is available from the Library of Congress. ISBN 978-1-4613-7115-1 ISBN 978-1-4615-4673-3 (eBook) DOI 10.1007/978-1-4615-4673-3 Printed on acid-free paper All Rights Reserved © 2000 by Springer Science+Business Media Dordrecht Originally published by Kluwer Academic Publishers in 2000 Softcover reprint of the hardcover lst edition 2000 No part of the material protected by this copyright notice may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording or by any information storage and retrieval system, without written permission from the copyright owner. This book is dedicated to understanding the meaning of time in fuzzy structures and systems. Contents List of Figures Xlll List of Tables xxv Foreword xxvii Preface XXIX Acknowledgments xxxv Part I THE FUNDAMENTALS OF FUZZY AND POSSIBILISTIC LOGIC 1. FUZZINESS AND ITS MEASURE 3 1. FUZZY VALUES AND FUZZY VARIABLES 3 2. MEASURES OF FUZZINESS 9 2. FUZZY OPERATIONS AND RELATIONS 19 1. INTRODUCTION 19 2. FUZZY APPROACH TO REASONING 19 3. PARAMETERS, CLASSES AND NORMS 23 4. EXTENDED OPERATIONS 28 5. FUZZY RELATIONS 40 3. FUZZY FUNCTIONS 47 1. INTRODUCTION 47 2.