Collaborative Computer Personalities in the Game of Chess

Recommended publications

Chess Review

MARCH 1968 • MEDIEVAL MANIKINS • 65 CENTS. Subscription Rate: ONE YEAR $7.50. 789 PAGES: 7 1/2 by 9 inches, clothbound. 221 diagrams, 493 idea variations, 1704 practical variations, 463 supplementary variations, 3894 notes to all variations and 439 COMPLETE GAMES! BY I. A. HOROWITZ in collaboration with Former World Champion Dr. Max Euwe, Ernest Gruenfeld, Hans Kmoch, and many other noted authorities. This latest and immense work, the most exhaustive of its kind, explains in encyclopedic detail the fine points of all openings. It carries the reader well into the middle game, evaluates the prospects there and often gives complete exemplary games so that he is not left hanging in mid-position with the query: What happens now? A logical sequence binds the continuity in each opening. First come the moves with footnotes leading to the key position. Then follow pertinent observations, illustrated by "Idea Variations." Finally, Practical and Supplementary Variations, well annotated, exemplify the effective possibilities. Each line is appraised: +, - or =. The large format, 7 1/2 x 9 inches, is designed for ease of reading and playing. It eliminates much tiresome shuffling of pages between the principal lines and the respective comments. Clear, legible type, a wide margin for inserting notes and variation-identifying diagrams are other plus features. BIBLIOPHILES! Glossy paper, handsome print, spacious paging and all the other appurtenances of exquisite book-making combine to make this the handsomest of chess books! In addition to all else, this book contains 439 complete games, a golden treasury in itself! ORDER FROM CHESS REVIEW. Please send me Chess Openings: Theory and Practice at $12.50. Name ... -

All's Well That Ends Well

America's Chess Newspaper. Copyright 1959 by United States Chess Federation. Vol. XIV, No. 6. Friday, November 20, 1959. 15 Cents. Tal Wins Candidates' Tournament: KERES SECOND, SMYSLOV THIRD, PETROSIAN FOURTH, FISCHER TIES GLIGORIC FOR FIFTH. Mikhail Tal, 24 year old Latvian grandmaster, 1958 and 1959 USSR champion, won 16, lost 4, and drew 8 games, to win the Candidates' tournament in Yugoslavia with a 20-8 score. The veteran Paul Keres took second place with 18½-9½. Petrosian was third with 15½-12½ and Smyslov was fourth with 15-13. Fischer and Gligoric tied for fifth with 12½-15½ ahead of Olafsson, 10-18, and Benko, 8-20. Fischer thrilled the experts with a final-round win against ex-world champion Smyslov, winning with the Black pieces in a 44 move Sicilian. MEMBERSHIPS AS GIFTS AND PRIZES: A USCF membership has outstanding advantages as a gift or as a prize, both for the giver and for the recipient. ALL'S WELL THAT ENDS WELL: Mastering the End Game, by WALTER KORN, Editor of MCO. THE DIDACTIC REHASH OF THE OLD HAT! For quite some time, our lament has been the lack of interest and stimulus in End Game Study and our eye was, therefore, caught with curiosity by a book just published, Modern End Game Studies for the Chess-player (the suffix "for the Chess-player" is superfluous), by Hans Bouwmeester, a Dutch master of considerable practical playing strength; translated from the Dutch and edited by H. Golombek of England. Any printed promotion in this artistic field is an asset and, especially at the low price offered, the book should be purchased by all -

2014-03-11 Randi Malcuit Who Some of You May Remember Used to Sell

2014-03-11 Randi Malcuit, who some of you may remember used to sell chess books at the club a few years ago, is planning to be at the club tonight to sell books. Below is a letter he sent me. Contact information: Randi Malcuit, 22 Ponemah Hill Rd Unit 10, Milford NH 03055. Put these pieces together to make the email address. Replace At with @: StateLineChess At Hotmail.com (PayPal payment address also). (603)303-0222. PayPal, Check and Cash accepted. No credit cards. I am helping a chess collector to sell his collection. I was trying to find some certain items so I contacted him and we got talking and he said it was time to sell some stuff. If you are interested in any items make an offer. Let me know if you need pics of any sets etc or the really collectible books. I have the pics all ready to go. Flower Design Mammoth Ivory Set, which I am taking offers on: In 1835, Nathaniel Cooke designed new chessmen dedicated to one of the strongest chess players of the day: Howard Staunton. Today, Oleg Raikis, a famous Russian sculptor, continues this tradition. "Chess of the 21st Century", inspired by the balance and simplicity of the Staunton design, is dedicated to today's strongest chess players: Former World Champion Garry Kasparov, World Champion Vladimir Kramnik, and Women's World Champion Xie Jun. -

Jan-Feb 1949

THE NEW ZEALAND CHESSPLAYER. Vol. 2, No. 5. January-February 1949. NEW ZEALAND CHAMPIONSHIP. HASTINGS AND BEVERWIJK. WADE IN EUROPE. A. E. NIELD, Champion of New Zealand. TWO SHILLINGS. NEWS IN PRINT AND PICTURE. READERS' VIEWS. EQUAL RIGHTS FOR EQUAL SUBSCRIPTIONS: [...] him a previous issue of THE NEW ZEALAND CHESSPLAYER, so he is becoming another subscriber to OUR magazine. M. WALKER (Mrs.) MORE PROBLEMS WANTED: Sir, Enclosed is a Meredith two-mover which may be of use to you in your section of THE NEW ZEALAND CHESSPLAYER. I would like to endorse the tributes already pa[...] magazine, but with a problemist [...] your section is very cramped. [...] few inches is all one can expect in a che[...] should be a page [...] nothing. [...] for yourself and THE NEW ZEALAND CHESSPLAYER. (Margate, England) [...] telegraphic [...] GAME NO. 1?6 is not very [...] engaging the honour [...] essentials. As a New Zealander, Mr. Johnstone is fully entitled to criticise New Zealand chess administra[...] becoming a little stale. ALWYN JONES (Ngaruawahia) THE RIGHT SPIRIT: Sir, Congratulations on the last issue of THE NEW ZEALAND CHESSPLAYER. Games, problems and "Announce the Mate" are splendid. We found working out [...] really good practice. [...] getting another player [...] NEXT PUBLICATION DATE: The next issue of [...] on April 15, and copy [...] than March 15. We [...] of anything received [...] some club news? WELLINGTON CHESS CLUB, WELLINGTON SPORTS CENTRE, WAKEFIELD STREET. R. -

CHESS OPENINGS· Ever Appeared

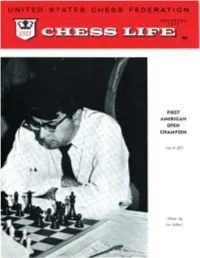

FIRST AMERICAN OPEN CHAMPION (See p. 257) (Photo by Art Zeller). UNITED STATES CHESS FEDERATION. Volume XX, Number 12, December, 1965. EDITOR: J. F. Reinhardt. CONTENTS: U.S. Championship, 255; Benko Wins First American Open, 257; Bait or Booty, by Robert Byrne, 258; Games by USCF Members, by John W. Collins, 260; Chess Life Here & There, 262; Index of Players, 1965, 267; Tournament Life, 269. PRESIDENT: Lt. Col. E. B. Edmondson. VICE-PRESIDENT: David Hoffmann. REGIONAL VICE-PRESIDENTS: NEW ENGLAND: Stanley King, Harold Dondis, Eli Bourdon. EASTERN: Donald Schultz, Lewis E. Wood, Robert LaBelle. MID-ATLANTIC: William Bragg, Earl Clary, Edward D. Strehle. SOUTHERN: Dr. Robert Froemke, Peter Lahde, Carroll M. Crull. GREAT LAKES: Norbert Matthews, Donald W. Hilding, Dr. Harvey McClellan. NORTH CENTRAL: Robert Lerner, John Osness, Ken Rykken. SOUTHWESTERN: W. W. Crew, Kenneth Smith, Park Bishop. PACIFIC: Kenneth Jones, Gordon Barrett, Col. Paul L. Webb. SECRETARY: Marshall Rohland. THERE'S A USCF TOURNAMENT IN YOUR AREA. SEE THE "TOURNAMENT LIFE" LISTINGS! JUNIORS: If your rating is 2100 or higher and you shall not have reached your 21st birthday before July 1, 1966, send your name and address to Lt. Col. E. B. Edmondson, President U.S. Chess Federation, 210 Britton Way, Mather AFB, [...] NATIONAL CHAIRMEN and OFFICERS: ARMED FORCES CHESS: Robert Karch. BUSINESS MANAGER: J. F. Reinhardt. COLLEGE CHESS: Paul. -

Archbishop to Dedicate New St. John's on Dec. 2

Archbishop to Dedicate New St. John’s on Dec. 2. Third Major Church Dedication in Year. DENVER CATHOLIC REGISTER. Member of Audit Bureau of Circulations. Contents Copyright by the Catholic Press Society, Inc., 1953. Permission to Reproduce, Except on Articles Otherwise Marked, Given After 12 M. Friday Following Issue. When Archbishop Urban J. Vehr dedicates the new St. John the Evangelist’s Church Wednesday, Dec. 2, it will be the third major church on principal Denver thoroughfares that he has blessed in a little more than a year. The ceremonies at St. John’s will begin at 10:30, and will be followed immediately by a Solemn Mass to be offered in the presence of the Archbishop by the Rt. Rev. Monsignor John P. Moran, pastor. The two other major Denver churches dedicated in the past year are St. Catherine of Siena’s, fronting on North Federal Boulevard at 42nd Avenue, dedicated on Thanksgiving day, Nov. 27, 1952; and St. Vincent de Paul’s, facing S. University Boulevard at E. Arizona Avenue, which was dedicated Feb. 11, 1953. The new St. John's faces beautiful East Seventh Avenue Parkway at Elizabeth Street. The three new edifices give Denver an array of magnificent Catholic churches of which it may well be proud, and which are unexcelled by any city of comparable size in the nation. John K. Monroe of Denver was architect for the new building, which was erected by the F. J. Kirchhof Construction Co. New St. John’s Is Handsome Addition on Seventh Avenue Parkway. -

Saul Sorrin: Oral History Transcript

Saul Sorrin: Oral History Transcript. www.wisconsinhistory.org/HolocaustSurvivors/Sorrin.asp Name: Saul Sorrin (1919–1995). Birth Place: New York City. Arrived in Wisconsin: 1962, Milwaukee. Project Name: Oral Histories: Wisconsin Survivors of the Holocaust. Biography: Saul Sorrin was an American witness to the Holocaust. He aided survivors at displaced persons camps in Germany as an administrator from 1945 to 1950. Saul was born on July 6, 1919, in New York City, where he attended the City College of New York. In 1940, he applied for a position with the United Nations Relief and Rehabilitation Administration (UNRRA). At the end of 1945, Saul was sent to Neu Freimann Siedlung, a displaced persons camp near Munich, Germany, to help Holocaust survivors. In 1946, he hosted General Dwight D. Eisenhower on an inspection tour of Neu Freimann. At Eisenhower's recommendation, Saul was appointed permanent area director at the Wolfratshausen camp in Bad Kissingen. He served there until March 1950. Saul became deeply committed to the survivors he was helping. He ignored many illegal actions, violated countless rules and regulations, and helped refugees alter their documents to sidestep a variety of restrictions. Saul also aided those who wanted to immigrate illegally to countries with quotas. He even permitted refugees to hold secret military training for the newly formed state of Israel. Saul helped set up cultural institutions in the displaced persons camps he supervised. He began schools and synagogues and organized sporting events and entertainment. He was also able to bring in famous performers. After the war, Saul returned to the U.S. -

Chess Quiz Positions, Mueh Here Is Another Set of Teasln, Posltiona, All from Prac Yield Or Course to the Sen· the Same Can Be Sald-----All Ucal Play

OCTOBER 1969. MESSAGE OF PROGRESS (See page 291). 85 CENTS. Subscription Rate: ONE YEAR $8.50. QUELL THE QUIZ QUAVERS. Here is another set of teasing positions, all from practical play. If you find all ten (correct) solutions, you'll be equaling the efforts of ten worthies (though without their tournament pressure) and can fairly lay credit to the rating of excellent, or for eight true answers to that of good, or for six to that of fair. For our solutions (don't look now!), turn to the table of contents on the facing leaf. 1 White to move and win: All of these positions, no matter how teasing or in another word tantalizing, do yield of course to the sensible application of logic, chess logic, that is, in some sense what the great Emanuel meant when he wrote "Common Sense in Chess." You need no other clue! 2 Black to move and win: In each and every single instance here and in other chess quiz positions, much the same can be said (all right, we'll say it: exactly the same can be said): apply your chessic talent for classic commonsense. Do not ask us to supply the winning idea! 3 White to move and win: Low and high, wide and far, wherever you look for [...] 4 Black to move and win: Chess, after all, is like that and must be so consid[...] 5 White to move and win: Haul and tug at the verities in the position. 6 Black to move and win: Every solution will yield -

GM Square Auction No. 15, 23-25 March 2006

GM Square Auction No. 15, 23-25 March 2006 Chess Magazines Lot 1. The Chess Player. Volumes 1-4 – a complete run. Weekly British chess magazine, edited by Kling and Horwitz, published from 19 July 1851. Contents: chess news, games, articles, problems and studies. Volume 1: 192 pages, 24 issues (complete). Index. Half-bound in brown calf with gilt spine and marbled boards. Stamp and signs on paste-down endpaper, very clean throughout, condition is very good. Volume 2: 218 + 8 pages, 52 issues (complete). Index. Half-bound in brown calf with gilt spine and marbled boards. The spine is slightly chipped top and bottom, stamp and signs on paste-down endpaper, occasional pencil comments, otherwise very clean throughout, condition is very good. Volume 3: 12 pages (first issue) + 330 pages (complete). Index. The first four issues bear the name “The New Chess Player” – there was a short break in this publication in July-August 1852. After the 4th issue the publication reverted to its original name and also discontinued weekly issues. Half-bound in dark-brown calf with gilt spine and marbled boards. Stamp and signs on paste-down endpaper, very clean throughout, condition is very good. Volume 4: 188 pages (complete). Index. Half-bound in dark-brown calf with gilt spine and marbled boards. Stamp and signs on paste-down endpaper, very clean throughout, condition is very good. Sold for €1,929.24 Lot 2. La Strategie, volume 2 (1868) French chess magazine published from 1867 till 1940. L/N 6020. 288 pages. Quarter-bound in leather with gilt spine and marbled boards. -

White Knight Review Chess E-Magazine

White Knight Review Chess E-Magazine. Interactive E-Magazine. Volume 2 • Issue 6. November/December 2011. Other Occupations of Famous Chess Players. Chess Clocks & Timers. Pal Benko. Simultaneous and Blindfold Displays. © Seraphim Press. Table of Contents: EDITORIAL: “My Move” (3). FEATURE: Russian Chess (4). BIOGRAPHY: Pal Benko (12). ARTICLE: Chess Program 4. (14). ARTICLE: Chess Clocks, Time Honored Tradition (16). FEATURE: Occupations of Famous Players (18). ARTICLE: Math and Chess (22). ARTICLE: News Around the World (23). FEATURE: Simultaneous/Blindfold Displays (24). ARTICLE: Pandolfini’s Advice (27). BOOK REVIEW: Karpov’s Strategic Wins 1, 1961-1985: The Making of a Champion, by Tibor Karolyi (28). ANNOTATED GAME: Hampyuk - Anatoly Karpov (29). COMMENTARY: “Ask Bill” (31). INTERACTIVE CONTENT: • Click on title in Table of Contents to move directly to page. • Click on “White Knight Review” on the top of each page to return to Table of Contents. • Click on red type to continue to next page. • Click on ads to go to their websites. • Click on email to open up email program. • Click on URLs to go to websites. My Move, editorial, by Jerry Wall ([email protected]): Well it has been over a year now since we started this publication. It is not easy putting together a 32 page magazine on chess every couple of months but it certainly has been rewarding (maybe not so much financially but then that really never was the goal). We wanted to put together a different kind of chess publication that wasn’t just diagrams, problems, analytical moves and such. -

Oscar 75 Cents

MAY 1968. OSCAR (See page 132). 75 CENTS. Subscription Rate: ONE YEAR $7.50. 789 PAGES: 7 1/2 by 9 inches, clothbound. 211 diagrams, 493 idea variations, 1704 practical variations, 463 supplementary variations, 3894 notes to all variations and 439 COMPLETE GAMES! BY I. A. HOROWITZ in collaboration with Former World Champion Dr. Max Euwe, Ernest Gruenfeld, Hans Kmoch, and many other noted authorities. This latest and immense work, the most exhaustive of its kind, explains in encyclopedic detail the fine points of all openings. It carries the reader well into the middle game, evaluates the prospects there and often gives complete exemplary games so that he is not left hanging in mid-position with the query: What happens now? A logical sequence binds the continuity in each opening. First come the moves with footnotes leading to the key position. Then follow pertinent observations, illustrated by "Idea Variations." Finally, Practical and Supplementary Variations, well annotated, exemplify the effective possibilities. Each line is appraised: +, - or =. The large format, 7 1/2 x 9 inches, is designed for ease of reading and playing. It eliminates much tiresome shuffling of pages between the principal lines and the respective comments. Clear, legible type, a wide margin for inserting notes and variation-identifying diagrams are other plus features. BIBLIOPHILES! Glossy paper, handsome print, spacious paging and all the other appurtenances of exquisite book-making combine to make this the handsomest of chess books! In addition to all else, this book contains 439 complete games, a golden treasury in itself! ORDER FROM CHESS REVIEW. Please send me Chess Openings: Theory and Practice at $12.50. Name ... -

World Champion Petrosian

World Champion Petrosian • Volume XXI, Number 1, February, 1966. EDITOR: J. F. Reinhardt. CHESS FEDERATION. CONTENTS: Erevan, 44-46; From the Spassky-Tal Match, 46; Zinnowitz, 1965, 49; Chigorin Memorial, 50; Asztalos Memorial, 51; Here & There, 52; Upset of a Champion, by Edmar Mednis, 54; Tournament Life, 55. PRESIDENT: Lt. Col. E. B. Edmondson. VICE-PRESIDENT: David Hoffmann. REGIONAL VICE-PRESIDENTS: NEW ENGLAND: Stanley King, Harold Dondis, Eli Bourdon. EASTERN: Donald Schultz, Lewis E. Wood, Robert LaBelle. MID-ATLANTIC: William Bragg, Earl Clary, Edward D. Strehle. SOUTHERN: Dr. Robert Froemke, Peter Lahde, Carroll M. Crull. GREAT LAKES: Norbert Matthews, Donald W. Hilding, Dr. Harvey McClellan. NORTH CENTRAL: Robert Lerner, John Osness, Ken Rykken. SOUTHWESTERN: W. W. Crew, Kenneth Smith, Park Bishop. PACIFIC: Kenneth Jones, Gordon Barrett, Col. Paul L. Webb. THERE'S A USCF TOURNAMENT IN YOUR AREA. SECRETARY: Marshall