Texture Shaders

Sébastien Dominé and John Spitzer, NVIDIA Corporation

Session Agenda

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

GLSL 4.50 Spec

The OpenGL® Shading Language Language Version: 4.50 Document Revision: 7 09-May-2017 Editor: John Kessenich, Google Version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost Copyright (c) 2008-2017 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions. -

First Person Shooting (FPS) Game

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 05 Issue: 04 | Apr-2018 www.irjet.net p-ISSN: 2395-0072 Thunder Force - First Person Shooting (FPS) Game Swati Nadkarni1, Panjab Mane2, Prathamesh Raikar3, Saurabh Sawant4, Prasad Sawant5, Nitesh Kuwalekar6 1 Head of Department, Department of Information Technology, Shah & Anchor Kutchhi Engineering College 2 Assistant Professor, Department of Information Technology, Shah & Anchor Kutchhi Engineering College 3,4,5,6 B.E. student, Department of Information Technology, Shah & Anchor Kutchhi Engineering College ----------------------------------------------------------------***----------------------------------------------------------------- Abstract— It has been found in researches that there is an have challenged hardware development, and multiplayer association between playing first-person shooter video games gaming has been integral. First-person shooters are a type of and having superior mental flexibility. It was found that three-dimensional shooter game featuring a first-person people playing such games require a significantly shorter point of view with which the player sees the action through reaction time for switching between complex tasks, mainly the eyes of the player character. They are unlike third- because when playing fps games they require to rapidly react person shooters in which the player can see (usually from to fast moving visuals by developing a more responsive mind behind) the character they are controlling. The primary set and to shift back and forth between different sub-duties. design element is combat, mainly involving firearms. First person-shooter games are also of ten categorized as being The successful design of the FPS game with correct distinct from light gun shooters, a similar genre with a first- direction, attractive graphics and models will give the best person perspective which uses light gun peripherals, in experience to play the game. 
-

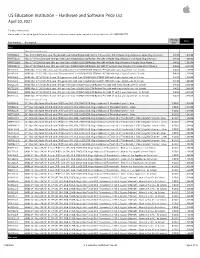

Apple US Education Price List

US Education Institution – Hardware and Software Price List April 30, 2021 For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775. Pricing Price Part Number Description Date iMac iMac with Intel processor MHK03LL/A iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 8/4/20 1,049.00 MXWT2LL/A iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 8/4/20 1,699.00 MXWU2LL/A iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 1,899.00 MXWV2LL/A iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 8/4/20 2,099.00 BR332LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools 8/4/20 1,168.00 BR342LL/A BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools 8/4/20 1,218.00 BR2P2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 3-year AppleCare+ for Schools 8/4/20 1,818.00 BR2S2LL/A BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 4-year AppleCare+ for Schools 8/4/20 1,868.00 BR2Q2LL/A BNDL iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB -

Managing Research with the Ipad

Managing Research with the iPad Center for Innovation in Teaching and Research Presenter: Sara Settles Instructional Technology Systems Manager Email: [email protected] ©Copyright 2013 Center for Innovation in Teaching and Research Western Illinois University * Contact S. Settles for Permission to Reprint or Copy (V1.2) 1 Overview iPads have brought new abilities to research within the education field adding mobility and ease of use. With the mobility of the iPad along with the development of many apps there are numerous ways the iPad can be used for research. Researchers can now take the iPad into the field and record data and information in a timelier manner. The iPad is being used to record audio, movies, and enter statistics as a means of collecting and analyzing data. Below are iPad apps which can easily be used in research. Dragon Dictation (Free) – Voice recognition uses Dragon® NaturallySpeaking® technology. This application records the users voice and turns into text allowing editing and the ability to share with others via email or SMS. Google Forms – Use Google Docs > Forms to create forms on a laptop or desktop computer. Information can then be entered on the mobile iPad. Login to Google Docs and use the Create > Form button. Create the form using the settings and save. The information can then be accessed using the iPad. Center for Innovation in Teaching & Research • Malpass Library 637 • Phone: 309.298.2434 2 Grafio Lite – Diagrams & Ideas (Formerly - iDesk Lite) (Free) Mind Mapping – Create diagrams with this application. Users can brainstorm creating mind mapping diagrams and flow charts. -

Advanced Computer Graphics to Do Motivation Real-Time Rendering

To Do Advanced Computer Graphics § Assignment 2 due Feb 19 § Should already be well on way. CSE 190 [Winter 2016], Lecture 12 § Contact us for difficulties etc. Ravi Ramamoorthi http://www.cs.ucsd.edu/~ravir Motivation Real-Time Rendering § Today, create photorealistic computer graphics § Goal: interactive rendering. Critical in many apps § Complex geometry, lighting, materials, shadows § Games, visualization, computer-aided design, … § Computer-generated movies/special effects (difficult or impossible to tell real from rendered…) § Until 10-15 years ago, focus on complex geometry § CSE 168 images from rendering competition (2011) § § But algorithms are very slow (hours to days) Chasm between interactivity, realism Evolution of 3D graphics rendering Offline 3D Graphics Rendering Interactive 3D graphics pipeline as in OpenGL Ray tracing, radiosity, photon mapping § Earliest SGI machines (Clark 82) to today § High realism (global illum, shadows, refraction, lighting,..) § Most of focus on more geometry, texture mapping § But historically very slow techniques § Some tweaks for realism (shadow mapping, accum. buffer) “So, while you and your children’s children are waiting for ray tracing to take over the world, what do you do in the meantime?” Real-Time Rendering SGI Reality Engine 93 (Kurt Akeley) Pictures courtesy Henrik Wann Jensen 1 New Trend: Acquired Data 15 years ago § Image-Based Rendering: Real/precomputed images as input § High quality rendering: ray tracing, global illumination § Little change in CSE 168 syllabus, from 2003 to -

Developer Tools Showcase

Developer Tools Showcase Randy Fernando Developer Tools Product Manager NVISION 2008 Software Content Creation Performance Education Development FX Composer Shader PerfKit Conference Presentations Debugger mental mill PerfHUD Whitepapers Artist Edition Direct3D SDK PerfSDK GPU Programming Guide NVIDIA OpenGL SDK Shader Library GLExpert Videos CUDA SDK NV PIX Plug‐in Photoshop Plug‐ins Books Cg Toolkit gDEBugger GPU Gems 3 Texture Tools NVSG GPU Gems 2 Melody PhysX SDK ShaderPerf GPU Gems PhysX Plug‐Ins PhysX VRD PhysX Tools The Cg Tutorial NVIDIA FX Composer 2.5 The World’s Most Advanced Shader Authoring Environment DirectX 10 Support NVIDIA Shader Debugger Support ShaderPerf 2.0 Integration Visual Models & Styles Particle Systems Improved User Interface Particle Systems All-New Start Page 350Z Sample Project Visual Models & Styles Other Major Features Shader Creation Wizard Code Editor Quickly create common shaders Full editor with assisted Shader Library code generation Hundreds of samples Properties Panel Texture Viewer HDR Color Picker Materials Panel View, organize, and apply textures Even More Features Automatic Light Binding Complete Scripting Support Support for DirectX 10 (Geometry Shaders, Stream Out, Texture Arrays) Support for COLLADA, .FBX, .OBJ, .3DS, .X Extensible Plug‐in Architecture with SDK Customizable Layouts Semantic and Annotation Remapping Vertex Attribute Packing Remote Control Capability New Sample Projects 350Z Visual Styles Atmospheric Scattering DirectX 10 PCSS Soft Shadows Materials Post‐Processing Simple Shadows -

Apple Buys Digital Magazine Subscription Service 12 March 2018

Apple buys digital magazine subscription service 12 March 2018 producing beautifully designed and engaging stories for users." The Texture application launched in 2012, the product of a joint venture created two years earlier. "We could not imagine a better home or future for the service," Texture chief executive John Loughlin said. Despite Apple's spectacular trajectory in the decade since the introduction of the iPhone, the California technology titan is facing challenges on whether it can continue growth. Apple is buying digital magazine subscription service While iPhone sales are at the heart of Apple's Texture, adding to the side of its business aimed at money-making machine, the company has taken to making money from online content or services spotlighting revenue from the App Store, iCloud, Apple Music, iTunes and other content and services people tap into using its devices. Apple announced Monday it is buying digital Texture could add digital magazine subscription magazine subscription service Texture, adding to revenue to that lineup. the side of its business aimed at making money from online content or services. Apple reported that it finished last year with cash reserves of $285 billion—much of that stashed The iPhone maker did not disclose financial terms overseas. of the deal to buy Texture from its owners—publishers Conde Nast, Hearst, Meredith, The company said it would bring back most of its Rogers Media and global investment firm KKR. profits from abroad to take advantage of a favorable tax rate in legislation approved by Texture gives subscribers unlimited access to Congress last year. more than 200 magazines, such as Forbes, Esquire, GQ, Wired, People, The Atlantic and The repatriation will result in a tax bill of about $38 National Geographic for a $10 monthly fee. -

Real-Time Rendering Techniques with Hardware Tessellation

Volume 34 (2015), Number x pp. 0–24 COMPUTER GRAPHICS forum Real-time Rendering Techniques with Hardware Tessellation M. Nießner1 and B. Keinert2 and M. Fisher1 and M. Stamminger2 and C. Loop3 and H. Schäfer2 1Stanford University 2University of Erlangen-Nuremberg 3Microsoft Research Abstract Graphics hardware has been progressively optimized to render more triangles with increasingly flexible shading. For highly detailed geometry, interactive applications restricted themselves to performing transforms on fixed geometry, since they could not incur the cost required to generate and transfer smooth or displaced geometry to the GPU at render time. As a result of recent advances in graphics hardware, in particular the GPU tessellation unit, complex geometry can now be generated on-the-fly within the GPU’s rendering pipeline. This has enabled the generation and displacement of smooth parametric surfaces in real-time applications. However, many well- established approaches in offline rendering are not directly transferable due to the limited tessellation patterns or the parallel execution model of the tessellation stage. In this survey, we provide an overview of recent work and challenges in this topic by summarizing, discussing, and comparing methods for the rendering of smooth and highly-detailed surfaces in real-time. 1. Introduction Hardware tessellation has attained widespread use in computer games for displaying highly-detailed, possibly an- Graphics hardware originated with the goal of efficiently imated, objects. In the animation industry, where displaced rendering geometric surfaces. GPUs achieve high perfor- subdivision surfaces are the typical modeling and rendering mance by using a pipeline where large components are per- primitive, hardware tessellation has also been identified as a formed independently and in parallel. -

For Immediate Release

FOR IMMEDIATE RELEASE THE JIM HENSON COMPANY AND REHAB ENTERTAINMENT PARTNER WITH AUTHOR/ILLUSTRATOR KAREN KATZ ON LENA!, A NEW ANIMATED PRESCHOOL SERIES Original Series Inspired by Katz’s Award-Winning Title “The Colors of Us” and the Upcoming “My America” Hollywood, CA (July 28, 2020) Rehab Entertainment and The Jim Henson Company are partnering with award- winning author and illustrator Karen Katz to develop Lena!, an original 2D animated preschool series inspired by Katz’s acclaimed picture book The Colors of Us and her upcoming release My America, a new book from MacMillan Publishers (spring, 2021) that celebrates the experiences and stories of U.S. immigrants. Created by Katz and Sidney Clifton, Lena! follows a 7-year-old Guatemalan girl who uses her unique and imaginative way of thinking to explore her culturally diverse neighborhood, identifying ways to help her friends and neighbors along the way. There’s no problem too big or too unusual for Lena's whimsical approach to creative problem-solving in this series all about connection, and she draws from the rich diversity in her community for the resources, support, and inspiration she needs to succeed. For more than twenty years, prolific author and illustrator Karen Katz has released over 85 picture books and board books for children that have been recognized and embraced by critics and parents. Her award-winning titles, including the number-one best-selling Where is Baby’s Bellybutton? and Counting Kisses, have sold 16 million copies worldwide, in over a dozen countries. Katz and Clifton will executive produce Lena! along with John W. -

Music 5 September 2020 Content

St. Michael-Albertville Middle School Music 5 Teacher: Michael Hilden September 2020 Content Skills Learning Targets Assessment Resources & Technology CEQ: A. Tone Color A. Tone Color A. Tone Color A. Tone Color WHAT ARE THE A1-2. "Tone Color ELEMENTS OF A1. Identify tone Digital Video with student MUSIC? color aurally 1. I can identify the CFA A1-2. Tone Color UEQ: "phones" by what they are study guide A1. Identify tone made of and how they Quiz. What do tone colors color producers visually. produce their sound. A1-2. Notebook/SM sound like? ART board World A1. Identify the 2. I can organize Instrument file What instruments create characteristics of tone instruments into their tone colors and what do color correct families. A1-2. GarageBand they look like? computer program A2. Identify tone How do you produce color aurally A1-2. Pachelbel's tone colors? "Canon" MIDI piece on A2. Identify tone A. Tone Color color producers visually. GarageBand A1. Vocal A1-2. Mac computer A2. Identify the characteristics of tone with MIDI keyboard A2. Instrument color controller A2. Choose A1-2. "Bach's Fight appropriate tone colors for For Freedom" DVD. a MIDI piece www.curriculummapper.com 114 Music 5 St. Michael-Albertville Middle Schools October Content Skills Learning Targets Assessment Resources & Technology ACEQ: A. Tone Color 1. I can identify the A. Tone Color A. Tone Color "phones" by what they are WHAT ARE THE made of and how they A1-2. Britten's ELEMENTS OF A1. Identify tone produce their sound. "Young Person's Guide to A1-2. -

NVIDIA Quadro P620

UNMATCHED POWER. UNMATCHED CREATIVE FREEDOM. NVIDIA® QUADRO® P620 Powerful Professional Graphics with FEATURES > Four Mini DisplayPort 1.4 Expansive 4K Visual Workspace Connectors1 > DisplayPort with Audio The NVIDIA Quadro P620 combines a 512 CUDA core > NVIDIA nView® Desktop Pascal GPU, large on-board memory and advanced Management Software display technologies to deliver amazing performance > HDCP 2.2 Support for a range of professional workflows. 2 GB of ultra- > NVIDIA Mosaic2 fast GPU memory enables the creation of complex 2D > Dedicated hardware video encode and decode engines3 and 3D models and a flexible single-slot, low-profile SPECIFICATIONS form factor makes it compatible with even the most GPU Memory 2 GB GDDR5 space and power-constrained chassis. Support for Memory Interface 128-bit up to four 4K displays (4096x2160 @ 60 Hz) with HDR Memory Bandwidth Up to 80 GB/s color gives you an expansive visual workspace to view NVIDIA CUDA® Cores 512 your creations in stunning detail. System Interface PCI Express 3.0 x16 Quadro cards are certified with a broad range of Max Power Consumption 40 W sophisticated professional applications, tested by Thermal Solution Active leading workstation manufacturers, and backed by Form Factor 2.713” H x 5.7” L, a global team of support specialists, giving you the Single Slot, Low Profile peace of mind to focus on doing your best work. Display Connectors 4x Mini DisplayPort 1.4 Whether you’re developing revolutionary products or Max Simultaneous 4 direct, 4x DisplayPort telling spectacularly vivid visual stories, Quadro gives Displays 1.4 Multi-Stream you the performance to do it brilliantly. -

3Aa7da6d3554e07e7abc52fb2b

The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLI-B7, 2016 XXIII ISPRS Congress, 12–19 July 2016, Prague, Czech Republic SCENE CLASSFICATION BASED ON THE SEMANTIC-FEATURE FUSION FULLY SPARSE TOPIC MODEL FOR HIGH SPATIAL RESOLUTION REMOTE SENSING IMAGERY Qiqi Zhua,b,Yanfei Zhonga,b,* , Liangpei Zhanga,b a State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing, Wuhan University, Wuhan 430079, China – [email protected], (zhongyanfei, zlp62)@whu.edu.cn b Collaborative Innovation Center of Geospatial Technology, Wuhan University, Wuhan 430079, China Commission VII, WG VII/4 KEY WORDS: Scene classification, Fully sparse topic model, Semantic-feature, Fusion, Limited training samples, High spatial resolution ABSTRACT: Topic modeling has been an increasingly mature method to bridge the semantic gap between the low-level features and high-level semantic information. However, with more and more high spatial resolution (HSR) images to deal with, conventional probabilistic topic model (PTM) usually presents the images with a dense semantic representation. This consumes more time and requires more storage space. In addition, due to the complex spectral and spatial information, a combination of multiple complementary features is proved to be an effective strategy to improve the performance for HSR image scene classification. But it should be noticed that how the distinct features are fused to fully describe the challenging HSR images, which is a critical factor for scene classification. In this paper, a semantic-feature fusion fully sparse topic model (SFF-FSTM) is proposed for HSR imagery scene classification. In SFF- FSTM, three heterogeneous features—the mean and standard deviation based spectral feature, wavelet based texture feature, and dense scale-invariant feature transform (SIFT) based structural feature are effectively fused at the latent semantic level.