
Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Apple US Education Price List

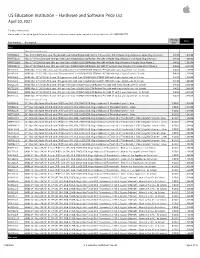

US Education Institution – Hardware and Software Price List, April 30, 2021

For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775.

iMac: iMac with Intel processor

| Part Number | Description | Pricing Date | Price |
|---|---|---|---|
| MHK03LL/A | iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/256GB SSD/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 | 8/4/20 | 1,049.00 |
| MXWT2LL/A | iMac 27" 5K/3.1GHz 6-core 10th-gen Intel Core i5/8GB/256GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard and Apple Magic Mouse 2 | 8/4/20 | 1,699.00 |
| MXWU2LL/A | iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB SSD/Radeon Pro 5300 w/Apple Magic Keyboard & Apple Magic Mouse 2 | 8/4/20 | 1,899.00 |
| MXWV2LL/A | iMac 27" 5K/3.8GHz 8-core 10th-gen Intel Core i7/8GB/512GB SSD/Radeon Pro 5500 XT w/Apple Magic Keyboard & Apple Magic Mouse 2 | 8/4/20 | 2,099.00 |
| BR332LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 3-year AppleCare+ for Schools | 8/4/20 | 1,168.00 |
| BR342LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/256GB SSD/Intel IPG 640 with 4-year AppleCare+ for Schools | 8/4/20 | 1,218.00 |
| BR2P2LL/A | BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 3-year AppleCare+ for Schools | 8/4/20 | 1,818.00 |
| BR2S2LL/A | BNDL iMac 27" 5K/3.1GHz 6-core 10th-generation Intel Core i5/8GB/256GB SSD/RP 5300 with 4-year AppleCare+ for Schools | 8/4/20 | 1,868.00 |

BR2Q2LL/A BNDL iMac 27" 5K/3.3GHz 6-core 10th-gen Intel Core i5/8GB/512GB

Managing Research with the iPad

Managing Research with the iPad. Center for Innovation in Teaching and Research, Western Illinois University (Malpass Library 637, Phone: 309.298.2434). Presenter: Sara Settles, Instructional Technology Systems Manager. Email: [email protected]. ©Copyright 2013 Center for Innovation in Teaching and Research, Western Illinois University. Contact S. Settles for permission to reprint or copy (V1.2).

Overview: iPads have brought new abilities to research within the education field, adding mobility and ease of use. With the mobility of the iPad and the development of many apps, there are numerous ways the iPad can be used for research. Researchers can now take the iPad into the field and record data and information in a timelier manner. The iPad is being used to record audio and movies and to enter statistics as a means of collecting and analyzing data. Below are iPad apps which can easily be used in research.

Dragon Dictation (Free): Voice recognition that uses Dragon® NaturallySpeaking® technology. This application records the user's voice and turns it into text, allowing editing and the ability to share with others via email or SMS.

Google Forms: Use Google Docs > Forms to create forms on a laptop or desktop computer. Information can then be entered on the mobile iPad. Log in to Google Docs and use the Create > Form button. Create the form using the settings and save. The information can then be accessed using the iPad.

Grafio Lite – Diagrams & Ideas (formerly iDesk Lite) (Free): Mind mapping. Create diagrams with this application. Users can brainstorm by creating mind-mapping diagrams and flow charts.

Apple Buys Digital Magazine Subscription Service 12 March 2018

Apple buys digital magazine subscription service, 12 March 2018. Apple is buying digital magazine subscription service Texture, adding to the side of its business aimed at making money from online content or services. Apple announced Monday it is buying digital magazine subscription service Texture, adding to the side of its business aimed at making money from online content or services. The iPhone maker did not disclose financial terms of the deal to buy Texture from its owners: publishers Conde Nast, Hearst, Meredith, Rogers Media and global investment firm KKR. Texture gives subscribers unlimited access to more than 200 magazines, such as Forbes, Esquire, GQ, Wired, People, The Atlantic and National Geographic for a $10 monthly fee. producing beautifully designed and engaging stories for users." The Texture application launched in 2012, the product of a joint venture created two years earlier. "We could not imagine a better home or future for the service," Texture chief executive John Loughlin said. Despite Apple's spectacular trajectory in the decade since the introduction of the iPhone, the California technology titan is facing challenges on whether it can continue growth. While iPhone sales are at the heart of Apple's money-making machine, the company has taken to spotlighting revenue from the App Store, iCloud, Apple Music, iTunes and other content and services people tap into using its devices. Texture could add digital magazine subscription revenue to that lineup. Apple reported that it finished last year with cash reserves of $285 billion, much of that stashed overseas. The company said it would bring back most of its profits from abroad to take advantage of a favorable tax rate in legislation approved by Congress last year. The repatriation will result in a tax bill of about $38

For Immediate Release

FOR IMMEDIATE RELEASE

THE JIM HENSON COMPANY AND REHAB ENTERTAINMENT PARTNER WITH AUTHOR/ILLUSTRATOR KAREN KATZ ON LENA!, A NEW ANIMATED PRESCHOOL SERIES

Original Series Inspired by Katz’s Award-Winning Title “The Colors of Us” and the Upcoming “My America”

Hollywood, CA (July 28, 2020) Rehab Entertainment and The Jim Henson Company are partnering with award-winning author and illustrator Karen Katz to develop Lena!, an original 2D animated preschool series inspired by Katz’s acclaimed picture book The Colors of Us and her upcoming release My America, a new book from MacMillan Publishers (spring, 2021) that celebrates the experiences and stories of U.S. immigrants.

Created by Katz and Sidney Clifton, Lena! follows a 7-year-old Guatemalan girl who uses her unique and imaginative way of thinking to explore her culturally diverse neighborhood, identifying ways to help her friends and neighbors along the way. There’s no problem too big or too unusual for Lena's whimsical approach to creative problem-solving in this series all about connection, and she draws from the rich diversity in her community for the resources, support, and inspiration she needs to succeed.

For more than twenty years, prolific author and illustrator Karen Katz has released over 85 picture books and board books for children that have been recognized and embraced by critics and parents. Her award-winning titles, including the number-one best-selling Where is Baby’s Bellybutton? and Counting Kisses, have sold 16 million copies worldwide, in over a dozen countries. Katz and Clifton will executive produce Lena! along with John W.

Music 5 September 2020 Content

St. Michael-Albertville Middle School, Music 5. Teacher: Michael Hilden. Source: www.curriculummapper.com

September 2020

Content: CEQ: WHAT ARE THE ELEMENTS OF MUSIC? UEQ: What do tone colors sound like? What instruments create tone colors and what do they look like? How do you produce tone colors? A. Tone Color: A1. Vocal; A2. Instrument.

Skills: A. Tone Color. A1. Identify tone color aurally. A1. Identify tone color producers visually. A1. Identify the characteristics of tone color. A2. Identify tone color aurally. A2. Identify tone color producers visually. A2. Identify the characteristics of tone color. A2. Choose appropriate tone colors for a MIDI piece.

Learning Targets: A. Tone Color. 1. I can identify the "phones" by what they are made of and how they produce their sound. 2. I can organize instruments into their correct families.

Assessment: A. Tone Color. CFA A1-2. Tone Color Quiz.

Resources & Technology: A. Tone Color. A1-2. "Tone Color" Digital Video with student study guide. A1-2. Notebook/SMART board World Instrument file. A1-2. GarageBand computer program. A1-2. Pachelbel's "Canon" MIDI piece on GarageBand. A1-2. Mac computer with MIDI keyboard controller. A1-2. "Bach's Fight For Freedom" DVD.

October (columns Content, Skills, Learning Targets, Assessment, Resources & Technology; the excerpt is cut off and is left as extracted): ACEQ: A. Tone Color 1. I can identify the A. Tone Color A. Tone Color "phones" by what they are WHAT ARE THE made of and how they A1-2. Britten's ELEMENTS OF A1. Identify tone produce their sound. "Young Person's Guide to A1-2.

6 Universal Texture Compression Format Mar17

Universal Texture Compression Format
WebGL/WebVR/glTF Meetup, Stephanie Hurlburt

Introduction
I work at Binomial, a company building a texture compressor called Basis. I’m currently fully focused on building this up with my business partner, Rich Geldreich. Since Basis uniquely solves a texture compression problem, especially in the WebGL/WebVR pipelines, we are more than happy to donate our texture file format specification and transcoder as a standard to the Khronos Group. We are standardizing this as a part of glTF.

Using JPEG for textures
A common way to handle texture data is to compress to JPEG and store it uncompressed on the GPU. It has decent reasoning behind it CPU-side (JPEGs are small!), but truly limits your graphical capacity. GPU formats were made to improve upon this pipeline! We should use them! (Side note: You don’t want to compress to JPEG and then turn that into a GPU format. That gives great CPU storage, but heavy runtime processing, double artifacts, and poor GPU compression.)

Using GPU formats for textures
This is what we want to do, but there is a problem in the existing pipeline. Right now web developers have to store a copy of every GPU format they want to support (at least ETC for Android/Metal iOS, PVRTC for non-Metal (older) iOS, and DXT for desktop, and more if you want better quality!). To give some perspective: even if you’re only storing one GPU format, GPU formats are not meant for CPU storage and are still very big.

This is where Basis comes in
One format, the size of JPEG, that turns into the GPU format you need when you need it.
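The storage problem described in the excerpt above can be sketched in a few lines of Python. This is only an illustration of the platform-to-format list given in the talk (ETC for Android and Metal iOS, PVRTC for older non-Metal iOS, DXT for desktop). The names `Platform`, `GPU_FORMAT`, `formats_to_store`, and `target_format` are hypothetical, and nothing here calls the actual Basis transcoder, whose API the excerpt does not show.

```python
from enum import Enum


class Platform(Enum):
    """Hypothetical platform labels, named after the groups in the talk."""
    ANDROID = "android"
    IOS_METAL = "ios_metal"
    IOS_LEGACY = "ios_legacy"   # non-Metal (older) iOS
    DESKTOP = "desktop"


# Platform-to-format mapping as listed in the excerpt (minimum set only).
GPU_FORMAT = {
    Platform.ANDROID: "ETC",
    Platform.IOS_METAL: "ETC",
    Platform.IOS_LEGACY: "PVRTC",
    Platform.DESKTOP: "DXT",
}


def formats_to_store(platforms):
    """Distinct GPU formats that must be stored per texture when every
    supported platform needs its own pre-compressed copy."""
    return sorted({GPU_FORMAT[p] for p in platforms})


def target_format(platform):
    """GPU format a single universal file would be turned into at load time
    for the given platform (the transcoding step itself is not modeled)."""
    return GPU_FORMAT[platform]


if __name__ == "__main__":
    # Without a universal format: one stored copy per distinct GPU format.
    print(formats_to_store(list(Platform)))
    # With a universal format: one stored file, format chosen when needed.
    print(target_format(Platform.IOS_LEGACY))
```

Under these assumptions, supporting all four platform groups means storing three copies of each texture, whereas the universal-format approach stores one file and picks the target format per device.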

iTunes App Store Promotional Design Specifications

iTunes App Store Promotional Design Specifications

The following specifications are required when submitting design elements for promotion of an App on iTunes.
• Acceptable file types are: layered .psd, transparent .tif, vector .ai, or .eps files.
• The following assets are required if an App is selected to be promoted on the iTunes Store. Submission of assets does not guarantee promotion on iTunes.

Required Assets
Title treatment: Include a title treatment / logo for your application (vector .eps, .ai or minimum 600 x 600 px transparent .psd or .tif). The title treatment should be simple and easy to read when scaled down to a small size. Please limit tag or bylines if they are not legible at small scale.
Background image: Include a background image, texture, color or gradient that corresponds to your application, or is complementary to your title treatment.

App Store “Main”
“Splash” Graphic: links to individual Application or other location (as programmed by iTunes). Required assets: title treatment, background image.
“Brick” Graphic: links to individual Application or other location (as programmed by iTunes). Required assets: title treatment, background image.
“Icon” Graphic: links to individual Application. This image is delivered to iTunes via iTunes Connect.

Additional Considerations:
• Assets that do not follow the above guidelines may be rejected and/or delay potential promotion of application.
• "Tags" will be added by iTunes when appropriate, to communicate promotion (e.g. “Application Just Added”).

iTunes may feature your application with a customized page design. If your app is to be featured in this manner, you will be contacted for additional assets. The following assets are required if an App is selected for a custom page design on the iTunes Store:

Customized Application Page
“Background image”: The background image, texture, color or gradient should correspond to your application or complement the title treatment.

Apple US Education Institution Price List

US Education Institution – Hardware and Software Price List, December 10, 2019

For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775.

iMac

| Part Number | Description | Pricing Date | Price |
|---|---|---|---|
| MMQA2LL/A | iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/1TB hard drive/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 | 6/5/17 | 1,049.00 |
| MRT32LL/A | iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/1TB hard drive/Radeon Pro 555X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,249.00 |
| MRT42LL/A | iMac 21.5" 4K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 560X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,399.00 |
| MRQY2LL/A | iMac 27" 5K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 570X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,699.00 |
| MRR02LL/A | iMac 27" 5K/3.1GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 575X w/Apple Magic Keyboard & Apple Magic Mouse 2 | 3/19/19 | 1,899.00 |
| MRR12LL/A | iMac 27" 5K/3.7GHz 6-core 8th-gen Intel Core i5/8GB/2TB Fusion drive/Radeon Pro 580X w/Apple Magic Keyboard & Apple Magic Mouse 2 | 3/19/19 | 2,099.00 |
| BMPP2LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/1TB hard drive/Intel IPG 640 with AppleCare+ for Mac | 6/5/17 | 1,168.00 |
| BNR82LL/A | BNDL iMac 21.5" 4K/3.6GHz quad-core 8th-generation Intel Core i3/8GB/1TB hard drive/RP 555X with AppleCare+ for Mac | 3/19/19 | 1,368.00 |

BNR92LL/A BNDL iMac 21.5" 4K/3.0GHz 6-core

CN-LBP: Complex Networks-Based Local Binary Patterns for Texture Classification

CN-LBP: Complex Networks-based Local Binary Patterns for Texture Classification. Zhengrui Huang.

Abstract: To overcome the limitations of original local binary patterns (LBP), this article proposes a new texture descriptor aided by complex networks (CN) and LBP, named CN-LBP. Specifically, we first abstract a texture image (TI) as directed graphs over different bands with the help of pixel distance, intensity, and gradient (magnitude and angle). Second, several CN-based feature measurements (including clustering coefficient, in-degree centrality, out-degree centrality, and eigenvector centrality) are selected to further decipher the texture features, which generates four feature images that can retain the image information as much as possible. Third, given the original TIs, gradient images (GI), and generated feature images, we can obtain the discriminative representation of texture images based on uniform LBP (ULBP). Finally, the feature vector is obtained by

generative adversarial networks (GAN) [20]), etc. Compared with other approaches, statistic-based descriptors (LBP) are used most widely. Original LBP was first proposed for rotation invariant texture classification in [10]. Based on the idea of local encoding patterns, some efficient LBP-based variants were introduced. For instance, in [21], Zhang et al. proposed a high-order information-based local derivative pattern (LDP) for face recognition and classification. To reduce the sensitivity of imaging conditions, a local energy pattern (LEP)-based descriptor was proposed in [22]. Following [22], a local directional number (LDN)-based descriptor was designed to distinguish similar structural patterns [23]. To reduce the impact of illumination and pose, Ding et al.
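For readers unfamiliar with the baseline that the excerpt above builds on, here is a minimal pure-Python sketch of the basic 3x3 local binary pattern and its histogram. It is not the paper's CN-LBP or uniform LBP (ULBP). The function names, the clockwise bit order starting at the top-left neighbor, and the `>=` threshold convention are assumptions chosen for illustration, since conventions vary between implementations.

```python
# Neighbor offsets (row, col) within a 3x3 patch, clockwise from top-left.
# The starting point and direction are an assumed convention.
NEIGHBORS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def lbp_code(patch):
    """Return the 8-bit LBP code of a 3x3 patch (list of three 3-item rows).

    Each neighbor contributes a 1 bit when its value is >= the center value.
    """
    center = patch[1][1]
    code = 0
    for bit, (r, c) in enumerate(NEIGHBORS):
        if patch[r][c] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """Return a 256-bin histogram of LBP codes over all interior pixels.

    `image` is a list of equal-length rows of grey levels; border pixels are
    skipped because they lack a full 3x3 neighborhood.
    """
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist


if __name__ == "__main__":
    corners_bright = [[9, 1, 9],
                      [1, 5, 1],
                      [9, 1, 9]]
    print(lbp_code(corners_bright))  # bits 0, 2, 4, 6 set -> 85
```

The histogram of codes is what serves as the texture feature vector in this basic form; descriptors such as the one in the excerpt replace or augment the input images before this encoding step.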

Amadine User Manual for iOS.pdf

Contents

Introduction: Brief Description; Technical Support; Useful Web Resources; BeLight Software Privacy Statement
Workspace and Program Interface: The Main Window; The Tools Panel; The Control Panel; Using Controls with Numeric Input; Program Settings; The Main Menu
Working with Documents: Creating Documents; Opening Documents; The Document Browser; Saving Documents; Document Settings
Layout: Layout Basics; Sheets; The Grid; Layout Guides
Selecting and Arranging Objects: Select Objects; Delete or Hide an Object; Move, Resize and Rotate Objects; The Geometry Panel; Align and Distribute Objects; Lock an Object; Group and Expand Objects; Align Objects to the Pixel Grid
Working with Layers: Introduction to Layers and Objects; The Layers Panel; Creating and Deleting Layers; Arranging Layers and Objects; Blending Layers
Drawing Vector Graphics: Path and its Features; Draw a Path with the Pen Tool; Create a Path with the Draw Tool; Draw a Path with the Pencil Tool; Draw a Path with the Brush Tool; Edit a Path; Simplify a Path; Draw Lines and Shapes; Stroke; Brush; The Appearance Panel
Color, Gradient and Image Fill: Introduction to Colors; Fill an Object with a Color, Gradient or Image; The Color Panel; Selecting Colors; Gradients; Image Fill; The Recolor Panel
Working with Text: Introduction to Text Objects; Text in Rectangular Boundary; Distortable Text; Text Along a Path; Text Inside a Shape; Properties of Text
Creating Effects: The Shadow Effect; The Glow Effect; The Blur Effect
Changing Object's Shape: Introduction to the Object Shape Transformation; The Transform Panel; Transforming Objects; The Free Transform Tool; The Path Panel; Combining Objects; Compound Path; Using a Clipping Mask; Cut an Object with the Knife Tool; Cut a Path with the Scissors Tool; Erase a Part of an Object
Importing: Introduction to Importing; Importing Graphics
Exporting and Printing: Exporting Documents; The Export Panel; Printing
The Library: Introduction to the Library; The Object Library Panel; Exporting and Importing Libraries

Brief Description
The Amadine app is designed for creating and editing vector graphics.

The American Express® Card Membership Rewards® 2021 Catalogue

INCENTIVO
The American Express® Card Membership Rewards® 2021 Catalogue

WHAT'S INSIDE
• The Membership Rewards® Program
• Frequent Traveler Option: Frequent Guest Program, Frequent Flyer Program
• Non-Frequent Traveler Option: Gadgets & Entertainment, Home & Kitchen, Travel Essentials, Health & Wellness, Dining Vouchers, Health & Wellness Vouchers, Luxury Hotel Vouchers, Shopping Vouchers
• Financial Rewards
• Terms & Conditions

THE MEMBERSHIP REWARDS PROGRAM TAKES YOU FURTHER
1. NON-EXPIRING MEMBERSHIP REWARDS® POINTS: Your Membership Rewards® Points do not expire, allowing you to save your Membership Rewards® Points for higher value rewards.
2. ACCELERATED REWARDS REDEMPTION: Membership Rewards® Points earned from your Basic and Supplementary Cards are automatically pooled to enable accelerated redemption.
3. COMPREHENSIVE REWARDS PROGRAM: The Membership Rewards® Points can be redeemed for a wide selection of shopping gift certificates, dining vouchers, gadgets, travel essential items and more. The Membership Rewards® Points can also be transferred to other loyalty programs such as Airline Frequent Flyer and Hotel Frequent Guest Rewards programs which will allow you to enjoy complimentary flights or hotel stays.

PROGRAM OPTIONS AND STRUCTURE
Earn one (1) Membership Rewards® Point for every

Apple US Education Institution Price List

US Education Institution – Hardware and Software Price List, April 15, 2020

For More Information: Please refer to the online Apple Store for Education Institutions: www.apple.com/education/pricelists or call 1-800-800-2775.

iMac

| Part Number | Description | Pricing Date | Price |
|---|---|---|---|
| MMQA2LL/A | iMac 21.5"/2.3GHz dual-core 7th-gen Intel Core i5/8GB/1TB hard drive/Intel Iris Plus Graphics 640 w/Apple Magic Keyboard, Apple Magic Mouse 2 | 6/5/17 | 1,049.00 |
| MRT32LL/A | iMac 21.5" 4K/3.6GHz quad-core 8th-gen Intel Core i3/8GB/1TB hard drive/Radeon Pro 555X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,249.00 |
| MRT42LL/A | iMac 21.5" 4K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 560X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,399.00 |
| MRQY2LL/A | iMac 27" 5K/3.0GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 570X w/Apple Magic Keyboard and Apple Magic Mouse 2 | 3/19/19 | 1,699.00 |
| MRR02LL/A | iMac 27" 5K/3.1GHz 6-core 8th-gen Intel Core i5/8GB/1TB Fusion drive/Radeon Pro 575X w/Apple Magic Keyboard & Apple Magic Mouse 2 | 3/19/19 | 1,899.00 |
| MRR12LL/A | iMac 27" 5K/3.7GHz 6-core 8th-gen Intel Core i5/8GB/2TB Fusion drive/Radeon Pro 580X w/Apple Magic Keyboard & Apple Magic Mouse 2 | 3/19/19 | 2,099.00 |
| BPPZ2LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/1TB hard drive/Intel IPG 640 with 3-year AppleCare+ for Schools | 2/7/20 | 1,168.00 |
| BPPY2LL/A | BNDL iMac 21.5"/2.3GHz dual-core 7th-generation Core i5/8GB/1TB hard drive/Intel IPG 640 with 4-year AppleCare+ for Schools | 2/7/20 | 1,218.00 |

BPQ92LL/A BNDL iMac 21.5"