KERNEL-BASED CLUSTERING of BIG DATA by Radha Chitta A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


No-Limit Texas Hold'em

Book review: No-limit Texas hold’em: A complete course. By Angel Largay. (2006). Toronto ON Canada: ECW Press, ISBN: 978-1550227420. Price: $27.95 CDN, $24.95 U.S. Reviewed by Bob Ciaffone. Website: http://www.pokercoach.us. The book No-Limit Texas Hold’em authored by Angel Largay is subtitled A Complete Course. This subtitle is misleading. The book does not discuss either tournament play or medium- to high-limit cash game play. It is aimed at low-stakes, no-limit cash games. I agree that such games have a strategy distinct enough to merit a full book on how to play them. I also agree that quite a bit of the knowledge presented in such a book applies to other areas of no-limit play. However, this does not excuse using a subtitle that is clearly aimed at widening the range of potential purchasers rather than describing the contents of the book. Another aspect of the book makes it less than complete. Even though this is not mentioned anywhere in the book, it is evident that the author plays in and discusses only live games. Nowhere is Internet poker addressed, even though there is a substantial difference between live and virtual poker. This book could be considered “complete” in the sense that it starts out talking to the poker player who does not know how to play hold’em at all. Before the big poker boom of the 21st century, someone taking up no-limit hold’em would undoubtedly have had prior experience at limit hold’em, so that he or she would certainly be able to do rudimentary things such as reading the board properly.

Straight Free Ebook

FREE STRAIGHT EBOOK "Boy George" | 304 pages | 01 May 2007 | Cornerstone | 9780099464938 | English | London, United Kingdom Straight | Definition of Straight at Examples of straight in a Sentence. Adjective: She has long, straight hair. The flagpole is perfectly straight. The picture isn't quite straight. We sat in the airport for five straight hours. She walked straight up to him and slapped him in the face. The tunnel goes straight through the mountain. The library is straight ahead. He was so drunk he couldn't walk straight. She sat with her legs straight out. The tree fell straight down. The car went straight off the road. She told him straight to his face that she hated him. Pine trees stood straight along the path. Sit up straight and don't slouch. Recent Examples on the Web: Adjective The Browns have Straight opponents on the ground, averaging more than rushing yards per game Straight route to three straight plus-point games. TiVo vs. Google vs. Sinema joins Straight battle, blasts Sen. McSally as willing to 'say anything to get elected'," 4 Oct. First Known Use of straight: Adjective, 14th century, in the meaning defined at sense 1a; Adverb, 14th century, in the meaning defined above; Verb, 15th century, in the meaning defined above; Noun, in the meaning defined at sense 1. History and Etymology for straight: Adjective, Middle English streght, straight, from past participle of strecchen to stretch.

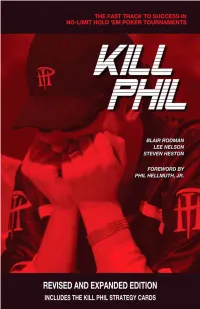

Kill Phil Provides an Ultra-Aggressive Style That Can Be Followed by Anyone

“This book is a dangerous weapon in the poker world. A detailed strategy for future tournament-poker champions.” —Joseph Hachem, 2005 World Series of Poker Champion “This book is going to liven up a lot of ‘dead’ money.” —Mike Sexton, WPT host; author of Shuffle Up and Deal “Aggressive players win tournaments. Kill Phil provides an ultra-aggressive style that can be followed by anyone. It’s a winning formula that’s going to give the top pros trouble.” —Josh Arieh, 2005 WSOP Pot-limit Omaha Champion; 3rd place 2004 WSOP Main Event “It’s like giving the gun to the rabbit.” —Marcel Luske, winner of WPT and Hall of Fame titles “Kill Phil is not a poker strategy book, but rather a tournament strategy book … And this book provides a wonderful approach for exactly that.” —Steve Rosenbloom, ESPN.com commentator, Chicago Sun-Times columnist, and author of The Best Hand I Ever Played “As an old-school guy, I found a lot of the concepts discussed in Kill Phil helpful as far as adapting some of my tournament game to the modern style. I’ve known Blair and Lee for years as tough players, and they’ve given up a lot of valuable information in their book.” —Vince Burgio, 1994 WSOP Seven-Card Stud Split Champion, Card Player magazine columnist; author of Pizza, Pasta, and Poker “Kill Phil is a well-written poker book that will likely raise a newbie to being competitive at no-limit hold ’em tournament play faster than any other method.” —Bob Ciaffone, author of Improve Your Poker; co-author of Pot-Limit and No-Limit Poker; Card Player columnist “Kill Phil teaches the inside secrets that the pros don’t want you to know.” —Mel Judah, 1989 and 1997 WSOP Seven-Card Stud Champion, 2003 WPT Legends of Poker Champion “Tournament poker has changed immensely since I won the WSOP and it will continue to evolve.

An Investigation Into Tournament Poker Strategy Using Evolutionary Algorithms

An Investigation into Tournament Poker Strategy using Evolutionary Algorithms Richard G. Carter Doctor of Philosophy Centre for Intelligent Systems and their Applications School of Informatics University of Edinburgh 2007 Abstract Poker has become the subject of an increasing amount of study in the computational intelligence community. The element of imperfect information presents new and greater challenges than those previously posed by games such as checkers and chess. Advances in computer poker have great potential, since reasoning under conditions of uncertainty is typical of many real world problems. To date the focus of computer poker research has centred on the development of ring game players for limit Texas hold’em. For a computer to compete in the most prestigious poker events, however, it will be required to play in a tournament setting with a no-limit betting structure. This thesis is the first academic attempt to investigate the underlying dynamics of successful no-limit tournament poker play. Professional players have proffered advice in the non-academic poker literature on correct strategies for tournament poker play. This study seeks to empirically validate their suggestions on a simplified no-limit Texas hold’em tournament framework. Starting by using exhaustive simulations, we first assess the hypothesis that a strategy including information related to game-specific factors performs better than one founded on hand strength knowledge alone. Specifically, we demonstrate that the use of information pertaining to one’s seating position, the opponents’ prior actions, the stage of the tournament, and one’s chip stack size all contribute towards a statistically significant improvement in the number of tournaments won.

Beware of Risk Everywhere: An Important Lesson from the Current Credit Crisis

Hastings Business Law Journal Volume 5 Article 2 Number 2 Spring 2009 Spring 1-1-2009 Beware of Risk Everywhere: An Important Lesson from the Current Credit Crisis Michael C. Macchiarola Follow this and additional works at: https://repository.uchastings.edu/hastings_business_law_journal Part of the Business Organizations Law Commons Recommended Citation Michael C. Macchiarola, Beware of Risk Everywhere: An Important Lesson from the Current Credit Crisis, 5 Hastings Bus. L.J. 267 (2009). Available at: https://repository.uchastings.edu/hastings_business_law_journal/vol5/iss2/2 This Article is brought to you for free and open access by the Law Journals at UC Hastings Scholarship Repository. It has been accepted for inclusion in Hastings Business Law Journal by an authorized editor of UC Hastings Scholarship Repository. BEWARE OF RISK EVERYWHERE: AN IMPORTANT LESSON FROM THE CURRENT CREDIT CRISIS Michael C. Macchiarola "I saw the best minds of my generation destroyed by madness." - Allen Ginsburg in "Howl" I. INTRODUCTION As the credit crisis continues to spiral through the world's economies, there has been no shortage of pundits and commentators offering their spin on the problems and proposing solutions. While the entire episode will take some time to sort itself out, its effects are being felt widely and some lessons about its cause can already be gleaned. As a result of this increased attention, a real "teaching moment" has begun to take shape for law school professors training future lawyers in course material dealing with financial issues. This Article attempts to highlight a lesson that should be incorporated into the curricula following recent market events.

Alumni History and Hall of Fame Project

Los Angeles Unified School District Alumni History and Hall of Fame Project Written and Edited by Bob and Sandy Collins All publication, duplication and distribution rights are donated to the Los Angeles Unified School District by the authors First Edition August 2016 Published in the United States Alumni History and Hall of Fame Project Founding Committee and Contributors Sincere appreciation is extended to Ray Cortines, former LAUSD Superintendent of Schools, Michelle King, LAUSD Superintendent, and Nicole Elam, Chief of Staff for their ongoing support of this project. Appreciation is extended to the following members of the Founding Committee of the Alumni History and Hall of Fame Project for their expertise, insight and support. Jacob Aguilar, Roosevelt High School, Alumni Association Bob Collins, Chief Instructional Officer, Secondary, LAUSD (Retired) Sandy Collins, Principal, Columbus Middle School (Retired) Art Duardo, Principal, El Sereno Middle School (Retired) Nicole Elam, Chief of Staff Grant Francis, Venice High School (Retired) Shannon Haber, Director of Communication and Media Relations, LAUSD Bud Jacobs, Director, LAUSD High Schools and Principal, Venice High School (Retired) Michelle King, Superintendent Joyce Kleifeld, Los Angeles High School, Alumni Association, Harrison Trust Cynthia Lim, LAUSD, Director of Assessment Robin Lithgow, Theater Arts Advisor, LAUSD (Retired) Ellen Morgan, Public Information Officer Kenn Phillips, Business Community Carl J. Piper, LAUSD Legal Department Rory Pullens, Executive Director, LAUSD Arts Education Branch Belinda Stith, LAUSD Legal Department Tony White, Visual and Performing Arts Coordinator, LAUSD Beyond the Bell Branch Appreciation is also extended to the following schools, principals, assistant principals, staffs and alumni organizations for their support and contributions to this project.

Kelly Axe Mfg. Co. / Kelly Axe & Tool

NUMBER 148 September 2007 A Journal of Tool Collecting published by CRAFTS of New Jersey Kelly Axe Mfg. Co. / Kelly Axe & Tool Co. By Tom Lamond © LOUISVILLE, KENTUCKY 1874-1897+/- ALEXANDRIA, INDIANA (Kelly Axe Mfg. Co.) 1897-1904+/- CHARLESTON, WEST VIRGINIA 1904-1930 NEW YORK, NEW YORK 1904-1930 (Business Offices as Kelly Axe & Tool Co.) KELLY’S EARLY ENTERPRISES William C. Kelly was born in Pittsburgh, Pa., on Aug. 21, 1811. He studied metallurgy at the Western University of Pennsylvania and initially became involved in making engines. He is reputed to have made a water wheel capable of providing some type of propulsion as well as a rotary steam engine. Those activities apparently tied in with his interests in steamboats as he also became involved with those. He established a commission business in Pittsburgh whereby he ... Sometime in late 1845 or early 1846 William and his brother John relocated to Eddyville, Kentucky where William married and started a family. William and his brother John then purchased the Eddyville Iron Works that included the Suwanee Furnace and the Union Forge. They renamed the business Kelly & Co. Some of the items the business produced were kettles for processing sugar and pig iron blooms that were then supplied to other manufacturers. It wasn't long before the Kelly brothers discovered there was an insufficient supply of charcoal readily available which in turn increased the costs of purifying the pig iron. That, and his education in metallurgy, led William to start conducting experiments in refining iron and developing more efficient foundry and forging methods. Apparently he was not the only one conducting similar experiments around that time.

Holdem Wisdom for All Players Free

FREE HOLDEM WISDOM FOR ALL PLAYERS PDF Daniel Negreanu | 172 pages | 09 Jan 2007 | Cardoza Publishing, U.S. | 9781580422109 | English | Brooklyn, NY, United States Hold'em Wisdom For All Players. Daniel Negreanu. The book is designed for those players who want to learn 'right now' and enjoy instant success at the tables. Fifty quick sections focus on key winning concepts, making learning both easy and fast. More Hold'em Wisdom for all Players. Daniel Negreanu. Built on 50 concepts and strategies covered in his first book, Hold'em Wisdom for All Players, Daniel Negreanu offers 50 new and powerful tips to help you win money at Holdem Wisdom for All Players cash and Holdem Wisdom for All Players games! If you love playing poker, you owe it to yourself to explore new ideas, learn more ways to polish your skills, and get the most enjoyment you can from the game. See you at the felt! Hold'em Wisdom for all Players: Simple and Easy Strategies to Win Money by Daniel Negreanu.

Radical Constructivism: a Way of Knowing and Learning. Studies in Mathematics Education Series: 6

DOCUMENT RESUME ED 381 352 SE 056 049 AUTHOR von Glasersfeld, Ernst TITLE Radical Constructivism: A Way of Knowing and Learning. Studies in Mathematics Education Series: 6. REPORT NO ISBN-0-7507-0387-3 PUB DATE 95 NOTE 231p. AVAILABLE FROM Falmer Press, Taylor & Francis Inc., 1900 Frost Road, Suite 101, Bristol, PA 19007. PUB TYPE Books (010) Viewpoints (Opinion/Position Papers, Essays, etc.) (120) EDRS PRICE MF01/PC10 Plus Postage. DESCRIPTORS *Concept Formation; *Constructivism (Learning); Elementary Secondary Education; Foreign Countries; *History; Language Role; *Mathematics Education IDENTIFIERS *Piaget (Jean); Radical Constructivism ABSTRACT This book gives a definitive theoretical account of radical constructivism, a theory of knowing that provides a pragmatic approach to questions about reality, truth, language and human understanding. The chapters are: (1) "Growing up Constructivist: Languages and Thoughtful People," (2) "Unpopular Philosophical Ideas: A History in Quotations," (3) "Piaget's Constructivist Theory of Knowing," (4) "The Construction of Concepts," (5) "Reflection and Abstraction," (6) "Constructing Agents: The Self and Others," (7) "On Language, Meaning, and Communication," (8) "The Cybernetic Connection," (9) "Units, Plurality and Number," and (10) "To Encourage Students' Conceptual Constructing." Each chapter contains notes. (Contains 218 references.) (MKR) Reproductions supplied by EDRS are the best that can be made from the original document.

Gamblertm ©2005, Conjelco LLC

The Intelligent Gambler™ ©2005, ConJelCo LLC. All Rights Reserved Number 23, Spring/Summer 2005 Publisher’s Corner Chuck Weinstock Welcome to the Intelligent Gambler, ConJelCo’s free newsletter which we attempt to send twice a year to our customers and anyone else who would like a copy. The more time aware among you will have noticed that this edition is nearly a month late. We delayed it so that we could announce the brand new, third edition of Lee Jones’s Winning Low-Limit Hold’em (see page 5 for more infor- ... wad of cash in his pocket. It would have been a real stretch to call him The Kid at this point, though from a distance his Beatle haircut and boyish frame still gave the impression of youth. Up close, he looked like what he was; a longtime drug addict whose excesses were now written in his face. The ravaged nose was the most disturbing feature, one side of it deflated like a bad flat tire. Still Stuey was excited to be making his first foray into the Las Vegas’ newest and most spectacular hotel. ... It was pure, classic Stuey bravado. Seidel laughed, thinking that even though Stuey had lost some of the spark he’d once had, there was a hopeful feeling seeing him there that day. “Like maybe he could give up the drugs. Like maybe he really was back and this could be another chance.” In the end, Seidel decided he didn’t want to get up from the game he was in just yet. But as soon as he walked off, Stuey and Sexton looked around and saw Mel- ...

Mike Matusow: Check-Raising the Devil

Book review: Mike Matusow: Check-Raising the Devil. By Mike Matusow, Amy Calistri, and Tim Lavalli. (2009). Cardoza Publishing: Las Vegas, NV. 288 pp., ISBN: 9781580422611. $24.95 USD (hardcover). Reviewed by: N. Will Shead, PhD, International Centre for Youth Gambling Problems and High-Risk Behaviours, McGill University, Montreal, Quebec, Canada The world of professional poker is filled with colourful characters. There are the grizzled veterans who learned their chops as road gamblers and have been staples in Las Vegas cardrooms since the early days of the Strip. Then there are the fresh-faced Internet poker phenoms, often barely old enough to play in brick-and-mortar casinos. Yet another generation of poker professionals rose through the ranks long after the veterans had established themselves and well before the Internet poker boom of the early 2000s — players like Phil Hellmuth, Daniel Negreanu, and Phil Ivey. Mike Matusow, nicknamed “The Mouth” for his loud and boorish personality, belongs to this latter pedigree and is perhaps the most colourful character of all. While there are numerous other players who have enjoyed more success in terms of poker accomplishments, certainly few, if any, have life stories that warrant a full-length autobiography. Matusow’s story certainly does. His publicly erratic behaviours, often documented in radio interviews and television broadcasts, have made him an intriguing figure, and his story has been long awaited by those interested in the world of poker. Check-Raising the Devil chronicles Matusow’s gambling career up to present day, covering approximately 15 years.

Texas Holdem Odds and Probabilities Pdf, Epub, Ebook

TEXAS HOLDEM ODDS AND PROBABILITIES PDF, EPUB, EBOOK Matthew Hilger | 254 pages | 28 Feb 2016 | Dimat Enterprises | 9780974150222 | English | Suwanee, United States This is where true strategy and comparing pot odds to the actual odds of hitting a better hand come into play. This is the generic formula. The Dealer Lays Out:. If you are playing against a single opponent those events will occur very rarely. Remember, your calculated odds were , meaning the poker gods say you will lose four times for every time you win. There are 6 ways to deal pocket aces preflop and the probability is 0. If you're holding on a A-K board, and your only saving grace is a third 7. Perhaps surprisingly, this is fewer than the number of 5-card poker hands from 5 cards because some 5-card hands are impossible with 7 cards e. The 4 missed straight flushes become flushes and the 1, missed straights become no pair. A formula to estimate the probability for this to happen at a 9 player table is. There are 19, possible flops in total. You'll flop a flush draw around one in ten times, though. At the same time, realize that many players overvalue random suited cards, which are dealt relatively frequently. How many starting hands are there in Texas Holdem? In most variants of lowball, the ace is counted as the lowest card and straights and flushes don't count against a low hand, so the lowest hand is the five-high hand A , also called a wheel.
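
Several of the counts mentioned in the excerpt above can be checked with binomial coefficients. The following is a minimal sketch, not taken from the book: the variable and function names are hypothetical, the flop count assumes your own two hole cards are already known, and the pot-odds rule is the standard break-even comparison rather than any formula quoted in the excerpt.

```python
from math import comb

# Ways to be dealt pocket aces: choose 2 of the 4 aces in the deck.
pocket_aces_combos = comb(4, 2)

# Distinct two-card starting hands from a 52-card deck (suits distinguished).
starting_hands = comb(52, 2)

# Probability of being dealt pocket aces preflop.
p_pocket_aces = pocket_aces_combos / starting_hands

# Possible flops once your two hole cards are known: choose 3 of the other 50 cards.
possible_flops = comb(50, 3)


def win_probability(losses: int, wins: int) -> float:
    """Convert odds against (losses-to-wins) into a win probability."""
    return wins / (wins + losses)


def call_is_profitable(pot: float, call: float, win_prob: float) -> bool:
    """Compare pot odds with hand odds.

    `pot` is the amount already in the pot (including the bet being faced),
    `call` is the amount you must put in. Calling breaks even when
    win_prob == call / (pot + call); above that it shows a long-run profit.
    """
    return win_prob > call / (pot + call)


if __name__ == "__main__":
    print(pocket_aces_combos, starting_hands, possible_flops)
    print(f"{p_pocket_aces:.4%}")
    # Losing four times for every win corresponds to a 20% win probability.
    print(win_probability(losses=4, wins=1))
```

With a win probability of 0.2, `call_is_profitable(100, 20, 0.2)` compares 0.2 against a break-even threshold of 20/120, so the call is worthwhile, while `call_is_profitable(30, 10, 0.2)` compares it against 10/40 and is not.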