Parallel Programming in Intel Cilk Plus with Autotuning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Other Apis What’S Wrong with Openmp?

Threaded Programming Other APIs What’s wrong with OpenMP? • OpenMP is designed for programs where you want a fixed number of threads, and you always want the threads to be consuming CPU cycles. – cannot arbitrarily start/stop threads – cannot put threads to sleep and wake them up later • OpenMP is good for programs where each thread is doing (more-or-less) the same thing. • Although OpenMP supports C++, it’s not especially OO friendly – though it is gradually getting better. • OpenMP doesn’t support other popular base languages – e.g. Java, Python What’s wrong with OpenMP? (cont.) Can do this Can do this Can’t do this Threaded programming APIs • Essential features – a way to create threads – a way to wait for a thread to finish its work – a mechanism to support thread private data – some basic synchronisation methods – at least a mutex lock, or atomic operations • Optional features – support for tasks – more synchronisation methods – e.g. condition variables, barriers,... – higher levels of abstraction – e.g. parallel loops, reductions What are the alternatives? • POSIX threads • C++ threads • Intel TBB • Cilk • OpenCL • Java (not an exhaustive list!) POSIX threads • POSIX threads (or Pthreads) is a standard library for shared memory programming without directives. – Part of the ANSI/IEEE 1003.1 standard (1996) • Interface is a C library – no standard Fortran interface – can be used with C++, but not OO friendly • Widely available – even for Windows – typically installed as part of OS – code is pretty portable • Lots of low-level control over behaviour of threads • Lacks a proper memory consistency model Thread forking #include <pthread.h> int pthread_create( pthread_t *thread, const pthread_attr_t *attr, void*(*start_routine, void*), void *arg) • Creates a new thread: – first argument returns a pointer to a thread descriptor. -

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64 Journal Of Harmonized Research (JOHR) Journal Of Harmonized Research in Engineering 1(2), 2013, 54-64 ISSN 2347 – 7393 Original Research Article High Performance Computing through Parallel and Distributed Processing Shikha Yadav, Preeti Dhanda, Nisha Yadav Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India Abstract : There is a very high need of High Performance Computing (HPC) in many applications like space science to Artificial Intelligence. HPC shall be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. The Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC. Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture Introduction time to solve large problems like weather Computer Architecture and Programming play forecasting, Tsunami, Remote Sensing, a significant role for High Performance National calamities, Defence, Mineral computing (HPC) in large applications Space exploration, Finite-element, Cloud science to Artificial Intelligence. The Computing, and Expert Systems etc. The Algorithms are problem solving procedures Algorithms are Non-Recursive Algorithms, and later these algorithms transform in to Recursive Algorithms, Parallel Algorithms particular Programming language for HPC. and Distributed Algorithms. There is need to study algorithms for High The Algorithms must be supported the Performance Computing. These Algorithms Computer Architecture. The Computer are to be designed to computer in reasonable Architecture is characterized with Flynn’s Classification SISD, SIMD, MIMD, and For Correspondence: MISD. Most of the Computer Architectures preeti.dhanda01ATgmail.com are supported with SIMD (Single Instruction Received on: October 2013 Multiple Data Streams). -

Technical Report Aaron Councilman

Extensible Parallel Programming in ableC Aaron Councilman Department of Computer Science and Engineering University of Minnesota, Twin Cities May 23, 2019 1 Introduction There are many different manners of parallelizing code, and many different languages that provide such features. Different types of computations are best suited by different types of parallelism. Simply whether a computation is compute bound or I/O bound determines whether the computation will benefit from being run with more threads than the machine has cores, and other properties of a computation will similarly affect how it performs when run in parallel. Thus, to provide parallel programmers the ability to deliver the best performance for their programs, the ability to choose the parallel programming abstractions they use is important. The ability to combine these abstractions however they need is also important, since different parts of a program will have different performance properties, and therefore may perform best using different abstractions. Unfortunately, parallel programming languages are often designed monolithically, built as an entire language with a specific set of features. Because of this, programmer's choice of parallel programming abstractions is generally limited to the choice of the language to use. Beyond limiting the available abstracts, this also means, that the choice of abstractions must be made ahead of time, since any attempt to change the parallel programming language at a later time is likely to be be prohibitive as it may require rewriting large portions of the codebase, if not the entire codebase. Extensible programming languages can offer a solution to these problems. With an extensible compiler, the programmer chooses a base programming language and can then select the set of \extensions" for that language that best fit their needs. -

Operating Systems and Applications for Embedded Systems >>> Toolchains

>>> Operating Systems And Applications For Embedded Systems >>> Toolchains Name: Mariusz Naumowicz Date: 31 sierpnia 2018 [~]$ _ [1/19] >>> Plan 1. Toolchain Toolchain Main component of GNU toolchain C library Finding a toolchain 2. crosstool-NG crosstool-NG Installing Anatomy of a toolchain Information about cross-compiler Configruation Most interesting features Sysroot Other tools POSIX functions AP [~]$ _ [2/19] >>> Toolchain A toolchain is the set of tools that compiles source code into executables that can run on your target device, and includes a compiler, a linker, and run-time libraries. [1. Toolchain]$ _ [3/19] >>> Main component of GNU toolchain * Binutils: A set of binary utilities including the assembler, and the linker, ld. It is available at http://www.gnu.org/software/binutils/. * GNU Compiler Collection (GCC): These are the compilers for C and other languages which, depending on the version of GCC, include C++, Objective-C, Objective-C++, Java, Fortran, Ada, and Go. They all use a common back-end which produces assembler code which is fed to the GNU assembler. It is available at http://gcc.gnu.org/. * C library: A standardized API based on the POSIX specification which is the principle interface to the operating system kernel from applications. There are several C libraries to consider, see the following section. [1. Toolchain]$ _ [4/19] >>> C library * glibc: Available at http://www.gnu.org/software/libc. It is the standard GNU C library. It is big and, until recently, not very configurable, but it is the most complete implementation of the POSIX API. * eglibc: Available at http://www.eglibc.org/home. -

Concurrent Cilk: Lazy Promotion from Tasks to Threads in C/C++

Concurrent Cilk: Lazy Promotion from Tasks to Threads in C/C++ Christopher S. Zakian, Timothy A. K. Zakian Abhishek Kulkarni, Buddhika Chamith, and Ryan R. Newton Indiana University - Bloomington, fczakian, tzakian, adkulkar, budkahaw, [email protected] Abstract. Library and language support for scheduling non-blocking tasks has greatly improved, as have lightweight (user) threading packages. How- ever, there is a significant gap between the two developments. In previous work|and in today's software packages|lightweight thread creation incurs much larger overheads than tasking libraries, even on tasks that end up never blocking. This limitation can be removed. To that end, we describe an extension to the Intel Cilk Plus runtime system, Concurrent Cilk, where tasks are lazily promoted to threads. Concurrent Cilk removes the overhead of thread creation on threads which end up calling no blocking operations, and is the first system to do so for C/C++ with legacy support (standard calling conventions and stack representations). We demonstrate that Concurrent Cilk adds negligible overhead to existing Cilk programs, while its promoted threads remain more efficient than OS threads in terms of context-switch overhead and blocking communication. Further, it enables development of blocking data structures that create non-fork-join dependence graphs|which can expose more parallelism, and better supports data-driven computations waiting on results from remote devices. 1 Introduction Both task-parallelism [1, 11, 13, 15] and lightweight threading [20] libraries have become popular for different kinds of applications. The key difference between a task and a thread is that threads may block|for example when performing IO|and then resume again. -

Intel® Cilk™ Plus

Overview: Programming Environment for Intel® Xeon Phi™ Coprocessor One Source Base, Tuned to many Targets Source Compilers, Libraries, Parallel Models Multicore Many-core Cluster Multicore Multicore CPU CPU Intel® MIC Multicore Multicore and Architecture Cluster Many-core Cluster Copyright© 2014, Intel Corporation. All rights reserved. *Other brands and names are the property of their respective owners. Intel® Parallel Studio XE 2013 and Intel® Cluster Studio XE 2013 Phase Product Feature Benefit Intel® Threading design assistant • Simplifies, demystifies, and speeds Advisor XE (Studio products only) parallel application design • C/C++ and Fortran compilers • Intel® Threading Building Blocks • Enabling solution to achieve the Intel® • Intel® Cilk™ Plus application performance and Composer XE • Intel® Integrated Performance scalability benefits of multicore and Build Primitives forward scale to many-core • Intel® Math Kernel Library • Enabling High Performance Scalability, Interconnect Intel® High Performance Message Independence, Runtime Fabric † MPI Library Passing (MPI) Library Selection, and Application Tuning Capability ® Intel Performance Profiler for • Remove guesswork, saves time, VTune™ optimizing application makes it easier to find performance Amplifier XE performance and scalability and scalability bottlenecks Memory & threading dynamic • Increased productivity, code quality, ® Intel analysis for code quality and lowers cost, finds memory, Verify Inspector XE threading , and security defects & Tune Static Analysis for code quality -

XL C/C++: Language Reference About This Document

IBM XL C/C++ for Linux, V16.1.1 IBM Language Reference Version 16.1.1 SC27-8045-01 IBM XL C/C++ for Linux, V16.1.1 IBM Language Reference Version 16.1.1 SC27-8045-01 Note Before using this information and the product it supports, read the information in “Notices” on page 63. First edition This edition applies to IBM XL C/C++ for Linux, V16.1.1 (Program 5765-J13, 5725-C73) and to all subsequent releases and modifications until otherwise indicated in new editions. Make sure you are using the correct edition for the level of the product. © Copyright IBM Corporation 1998, 2018. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents About this document ......... v Chapter 4. IBM extension features ... 11 Who should read this document........ v IBM extension features for both C and C++.... 11 How to use this document.......... v General IBM extensions ......... 11 How this document is organized ....... v Extensions for GNU C compatibility ..... 15 Conventions .............. v Extensions for vector processing support ... 47 Related information ........... viii IBM extension features for C only ....... 56 Available help information ........ ix Extensions for GNU C compatibility ..... 56 Standards and specifications ........ x Extensions for vector processing support ... 58 Technical support ............ xi IBM extension features for C++ only ...... 59 How to send your comments ........ xi Extensions for C99 compatibility ...... 59 Extensions for C11 compatibility ...... 59 Chapter 1. Standards and specifications 1 Extensions for GNU C++ compatibility .... 60 Chapter 2. Language levels and Notices .............. 63 language extensions ......... 3 Trademarks ............. -

Section “Common Predefined Macros” in the C Preprocessor

The C Preprocessor For gcc version 12.0.0 (pre-release) (GCC) Richard M. Stallman, Zachary Weinberg Copyright c 1987-2021 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation. A copy of the license is included in the section entitled \GNU Free Documentation License". This manual contains no Invariant Sections. The Front-Cover Texts are (a) (see below), and the Back-Cover Texts are (b) (see below). (a) The FSF's Front-Cover Text is: A GNU Manual (b) The FSF's Back-Cover Text is: You have freedom to copy and modify this GNU Manual, like GNU software. Copies published by the Free Software Foundation raise funds for GNU development. i Table of Contents 1 Overview :::::::::::::::::::::::::::::::::::::::: 1 1.1 Character sets:::::::::::::::::::::::::::::::::::::::::::::::::: 1 1.2 Initial processing ::::::::::::::::::::::::::::::::::::::::::::::: 2 1.3 Tokenization ::::::::::::::::::::::::::::::::::::::::::::::::::: 4 1.4 The preprocessing language :::::::::::::::::::::::::::::::::::: 6 2 Header Files::::::::::::::::::::::::::::::::::::: 7 2.1 Include Syntax ::::::::::::::::::::::::::::::::::::::::::::::::: 7 2.2 Include Operation :::::::::::::::::::::::::::::::::::::::::::::: 8 2.3 Search Path :::::::::::::::::::::::::::::::::::::::::::::::::::: 9 2.4 Once-Only Headers::::::::::::::::::::::::::::::::::::::::::::: 9 2.5 Alternatives to Wrapper #ifndef :::::::::::::::::::::::::::::: -

Parallelism in Cilk Plus

Cilk Plus: Language Support for Thread and Vector Parallelism Arch D. Robison Intel Sr. Principal Engineer Outline Motivation for Intel® Cilk Plus SIMD notations Fork-Join notations Karatsuba multiplication example GCC branch Copyright© 2012, Intel Corporation. All rights reserved. 2 *Other brands and names are the property of their respective owners. Multi-Threading and Vectorization are Essential to Performance Latest Intel® Xeon® chip: 8 cores 2 independent threads per core 8-lane (single prec.) vector unit per thread = 128-fold potential for single socket Intel® Many Integrated Core Architecture >50 cores (KNC) ? independent threads per core 16-lane (single prec.) vector unit per thread = parallel heaven Copyright© 2012, Intel Corporation. All rights reserved. 3 *Other brands and names are the property of their respective owners. Importance of Abstraction Software outlives hardware. Recompiling is easier than rewriting. Coding too closely to hardware du jour makes moving to new hardware expensive. C++ philosophy: abstraction with minimal penalty Do not expect compiler to be clever. But let it do tedious bookkeeping. Copyright© 2012, Intel Corporation. All rights reserved. 4 *Other brands and names are the property of their respective owners. “Three Layer Cake” Abstraction Message Passing exploit multiple nodes Fork-Join exploit multiple cores exploit parallelism at multiple algorithmic levels SIMD exploit vector hardware Copyright© 2012, Intel Corporation. All rights reserved. 5 *Other brands and names are the property of their respective owners. Composition Message Driven compose via send/receive Fork-Join compose via call/return SIMD compose sequentially Copyright© 2012, Intel Corporation. All rights reserved. 6 *Other brands and names are the property of their respective owners. -

Cheat Sheets of the C Standard Library

Cheat Sheets of the C standard library Version 1.06 Last updated: 2012-11-28 About This document is a set of quick reference sheets (or ‘cheat sheets’) of the ANSI C standard library. It contains function and macro declarations in every header of the library, as well as notes about their usage. This document covers C++, but does not cover the C99 or C11 standard. A few non-ANSI-standard functions, if they are interesting enough, are also included. Style used in this document Function names, prototypes and their parameters are in monospace. Remarks of functions and parameters are marked italic and enclosed in ‘/*’ and ‘*/’ like C comments. Data types, whether they are built-in types or provided by the C standard library, are also marked in monospace. Types of parameters and return types are in bold. Type modifiers, like ‘const’ and ‘unsigned’, have smaller font sizes in order to save space. Macro constants are marked using proportional typeface, uppercase, and no italics, LIKE_THIS_ONE. One exception is L_tmpnum, which is the constant that uses lowercase letters. Example: int system ( const char * command ); /* system: The value returned depends on the running environment. Usually 0 when executing successfully. If command == NULL, system returns whether the command processor exists (0 if not). */ License Copyright © 2011 Kang-Che “Explorer” Sung (宋岡哲 <explorer09 @ gmail.com>) This work is licensed under the Creative Commons Attribution 3.0 Unported License. http://creativecommons.org/licenses/by/3.0/ . When copying and distributing this document, I advise you to keep this notice and the references section below with your copies, as a way to acknowledging all the sources I referenced. -

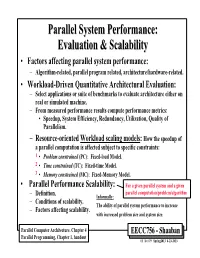

Parallel System Performance: Evaluation & Scalability

ParallelParallel SystemSystem Performance:Performance: EvaluationEvaluation && ScalabilityScalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1 • Problem constrained (PC): Fixed-load Model. 2 • Time constrained (TC): Fixed-time Model. 3 • Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: – Conditions of scalability. The ability of parallel system performance to increase – Factors affecting scalability. with increased problem size and system size. Parallel Computer Architecture, Chapter 4 EECC756 - Shaaban Parallel Programming, Chapter 1, handout #1 lec # 9 Spring2013 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: Time(1) Sequential Work Speedup = < Time(p) Max (Work + Synch Wait Time + Comm Cost + Extra Work) Fixed Problem Size Speedup Max for any processor Parallelizing Overheads • By: 1 – Balancing computations/overheads (workload) on processors -

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By James Scott Dinan, M.S. Graduate Program in Computer Science and Engineering The Ohio State University 2010 Dissertation Committee: P. Sadayappan, Advisor Atanas Rountev Paolo Sivilotti c Copyright by James Scott Dinan 2010 ABSTRACT Applications that exhibit irregular, dynamic, and unbalanced parallelism are grow- ing in number and importance in the computational science and engineering commu- nities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may be depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor.