An Evaluation of the North East of England Pilot of the Gatsby Benchmarks of Good Career Guidance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Index Educational Opportunities.Pdf

_Index Educational Opportunities.pdf _Joint SMAa-KNMA Educational Opportunities WR.pdf Deprivation, Unemployment, The educational benefits of the scheme, STEM Education, Careers and Industry Involvement, a) schools, b) post 16 Educational opportunities and training will enable local people to achieve the qualifications and skills they need to be employable at a reopened airport. Appendix 1.pdf Appendix 10.pdf Appendix 11.pdf Appendix 12.pdf Appendix 13.pdf Appendix 14.pdf Appendix 15.pdf Appendix 2.pdf Appendix 3.pdf Appendix 4.pdf Appendix 5.pdf Appendix 6.pdf Appendix 7.pdf Appendix 8.pdf Appendix 9.pdf TR020002 – Manston Airport - Written Representation Ref:20011948 Educational Opportunities – Socio-economic issues SE ii, SE iii, SE v, SE vii, SE viii Introduction We are writing as Vice Chair of Kent Needs Manston Airport and Vice Chair of Save Manston Airport Association, which both support the RSP DCO application in full, and as such agree with all aspects of the application [APP-001 to APP-087]. Although they are separate organisations, they share a common goal to see local people benefit from gaining the necessary skills and qualifications, through the appropriate provision of education, training and apprenticeships, to enable as many of them as possible to gain employment. We have been working with the applicant to find the best ways to bring this about. This Written Representation will concentrate on the Educational Opportunities and we will make further submissions on Jobs, Training and Apprenticeships throughout the Examination Process. Data, methodology and assumptions We have tried to access information/data from reputable sources and quoted relevant passages using quotation marks. -

Title of Report

Item 4 Cabinet Date: 21 February 2018 Pupil Admission Arrangements for Primary and Secondary Schools – September 2019 Report of the Corporate Director Children, Adults and Health Cabinet Portfolio/Lead Member: Cllr Joan Atkinson, Children, Young People and Families Purpose of Report 1. This report presents the outcome of the 2017/18 consultation exercise with Governing Boards of Local Authority (LA) Schools and others in respect of proposed pupil admission arrangements for September 2019, and specifically seeks the Council’s determination of the pupil admission arrangements for those schools for which it is the admission authority i.e. Community and Voluntary Controlled Schools, and agreement to the operation of the Co-ordinated Admission Scheme. 2. The report also takes into consideration the most recent version of the School Admissions Code issued by the Department for Education (DfE) which came into force on 19 December 2014. Contact Officer: Christine Henderson, Governor Support and School Admissions Manager (4247847) Introduction 3. All Admission Authorities have a duty to publish details of their own admission arrangements. Governors of all Roman Catholic schools in the Borough are their own Admission Authorities, as are St Oswald’s C of E Primary School and Jarrow School and as such, determine their own admission policies. The relevant Academy Trust determines the admission policy(ies) for its Academy(ies). The LA is the Admission Authority for all Community and Voluntary Controlled schools. 4. The Education (School Information) (England) Regulations 2008 require the LA to publish information annually about admission arrangements for the following year for all maintained schools in its area, and to publish for each school year a ‘composite prospectus’ giving summary details of admission information. -

Open PDF 715KB

LBP0018 Written evidence submitted by The Northern Powerhouse Education Consortium Education Select Committee Left behind white pupils from disadvantaged backgrounds Inquiry SUBMISSION FROM THE NORTHERN POWERHOUSE EDUCATION CONSORTIUM Introduction and summary of recommendations Northern Powerhouse Education Consortium are a group of organisations with focus on education and disadvantage campaigning in the North of England, including SHINE, Northern Powerhouse Partnership (NPP) and Tutor Trust. This is a joint submission to the inquiry, acting together as ‘The Northern Powerhouse Education Consortium’. We make the case that ethnicity is a major factor in the long term disadvantage gap, in particular white working class girls and boys. These issues are highly concentrated in left behind towns and the most deprived communities across the North of England. In the submission, we recommend strong actions for Government in particular: o New smart Opportunity Areas across the North of England. o An Emergency Pupil Premium distribution arrangement for 2020-21, including reform to better tackle long-term disadvantage. o A Catch-up Premium for the return to school. o Support to Northern Universities to provide additional temporary capacity for tutoring, including a key role for recent graduates and students to take part in accredited training. About the Organisations in our consortium SHINE (Support and Help IN Education) are a charity based in Leeds that help to raise the attainment of disadvantaged children across the Northern Powerhouse. Trustees include Lord Jim O’Neill, also a co-founder of SHINE, and Raksha Pattni. The Northern Powerhouse Partnership’s Education Committee works as part of the Northern Powerhouse Partnership (NPP) focusing on the Education and Skills agenda in the North of England. -

Name Liam Malcolm Main Subject Physical Education Second Subject Science My Academic Qualifications

Personal Information: Name: Liam Malcolm. Main Subject: Physical Education. Second Subject: Science.

My academic qualifications:
• Mortimer Community College (2007-2012): GCSE: English (C), Maths (C), Physical Education (A); BTEC Level 2: Sport (D*), IT (D*), Triple Science (Pass)
• Gateshead College Academy of Sport (2012-2014): Level 3 Extended Diploma: Sports Development, Coaching and Fitness (DDM)
• University of Sunderland (2014-2018): BSc (Hons): Sports Coaching (First Class); MSc: Sport and Exercise Sciences (Merit)
• North East Partnership SCITT (2018-2019): PGCE and QTS: Secondary Physical Education (Pending)

The experience I have had in schools:
• PGCE Placements: Benfield School, Newcastle (September-December 2018); St Mary’s Catholic School, Newcastle (January-June 2019)
• Undergraduate Placements: Mortimer Community College, South Shields (2015-2018); Red House Academy, Sunderland (May 2017, 2 days); Kelvin Grove Primary School, Gateshead (March 2016, 2 weeks)
• Other Placements and/or Employment in Schools: Harton Academy, South Shields (June 2018, 6 weeks). Master’s Degree Research

The strengths I have within my main subject area:

Personal Strengths
• Played numerous sports for many years both within competitive and social environments
• Member of school football (Captain), basketball (Captain) and rugby teams
• Played football at club level from the age of 6 to 16
• Played county and regional basketball from the age of 12 to 16

NGB/Other Coaching Awards
• FA Level 1 Football Coaching (2014)
• VTCT Level 3 Sports Massage Therapist (2015)
• BAFCA Level 1 American Football Coaching (2016)
• Site Certified School Rock Climbing Instructor (2016)
• Teachers Trampolining Award Level 1 & 2 (2018)

Teaching Strengths
• I possess a vast array of both theoretical and practical sporting knowledge from playing sport, completing a range of NGB qualifications and from my university and PGCE studies
• I place a huge emphasis on student engagement and inclusion during lessons. -

Newsletter Your Termly Update

NEWSLETTER YOUR TERMLY UPDATE EASTER 2019 ISSUE 5

SUMMARY
• Teaching Internship in Physics or Maths
• Year 7 Paris Trip
• Year 12 Visit - The House of Commons
• Alnwick Mob Football Story
• Nepal and Vietnam Teams Update
• Lunchtime Arrangements
• Girls’ U15 Rugby County Cup Winners
• Vegas Acro Cup Gold medal winner
• North East Sports Disability Awards Evening

WELCOME

Welcome to the Easter edition of Harton News, the termly newsletter for parents, students, staff, governors and members of the community.

The academic term leading up to the Easter holidays, as always, has been very busy this year with a huge number of activities continuing to be a welcome feature of school life.

The very important examination period commences immediately after we return to school, making this free time an ideal opportunity for students to organise and commence a comprehensive revision schedule.

We break up at 3:15pm on Friday 5th April and all students return to school after the Easter holiday on Tuesday 23rd April 2019. Please refer to our ‘Pastoral’ pages for further information relating to the start of the new summer term 2019.

I would like to take this opportunity to express my sincere thanks to everyone for your tremendous support which continues to make Harton the ‘outstanding’ school that it is.

Finally, I would like to wish you all a fantastic Easter break with your families and I look forward to welcoming our students back to school on Tuesday 23rd April 2019.

Best wishes
Sir Ken Gibson
Executive Head Teacher -

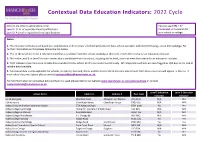

Education Indicators: 2022 Cycle

Contextual Data Education Indicators: 2022 Cycle Schools are listed in alphabetical order. You can use CTRL + F/Command + F to search for your school or college. Level 2: GCSE or equivalent level qualifications. Level 3: A Level or equivalent level qualifications. Notes: 1. The education indicators are based on a combination of three years of school performance data, where available, and combined using z-score methodology. For further information on this please follow the link below. 2. 'Yes' in the Level 2 or Level 3 column means that a candidate from this school, studying at this level, meets the criteria for an education indicator. 3. 'No' in the Level 2 or Level 3 column means that a candidate from this school, studying at this level, does not meet the criteria for an education indicator. 4. 'N/A' indicates that there is no reliable data available for this school for this particular level of study. All independent schools are also flagged as N/A due to the lack of reliable data available. 5. Contextual data is only applicable for schools in England, Scotland, Wales and Northern Ireland meaning only schools from these countries will appear in this list. If your school does not appear please contact [email protected]. For full information on contextual data and how it is used please refer to our website www.manchester.ac.uk/contextualdata or contact [email protected].

School Name | Address 1 | Address 2 | Post Code | Level 2 Education Indicator | Level 3 Education Indicator
16-19 Abingdon | Wootton Road | Abingdon-on-Thames -

School Name POSTCODE AUCL Eligible If Taken GCSE's at This

| School Name | POSTCODE | AUCL Eligible if taken GCSE's at this school | AUCL Eligible if taken A-levels at this school |
| --- | --- | --- | --- |
| City of London School for Girls | EC2Y 8BB | No | No |
| City of London School | EC4V 3AL | No | No |
| Haverstock School | NW3 2BQ | Yes | Yes |
| Parliament Hill School | NW5 1RL | No | Yes |
| Regent High School | NW1 1RX | Yes | Yes |
| Hampstead School | NW2 3RT | Yes | Yes |
| Acland Burghley School | NW5 1UJ | No | Yes |
| The Camden School for Girls | NW5 2DB | No | No |
| Maria Fidelis Catholic School FCJ | NW1 1LY | Yes | Yes |
| William Ellis School | NW5 1RN | Yes | Yes |
| La Sainte Union Catholic Secondary School | NW5 1RP | No | Yes |
| St Margaret's School | NW3 7SR | No | No |
| University College School | NW3 6XH | No | No |
| North Bridge House Senior School | NW3 5UD | No | No |
| South Hampstead High School | NW3 5SS | No | No |
| Fine Arts College | NW3 4YD | No | No |
| Camden Centre for Learning (CCfL) Special School | NW1 8DP | Yes | No |
| Swiss Cottage School - Development & Research Centre | NW8 6HX | No | No |
| Saint Mary Magdalene Church of England All Through School | SE18 5PW | No | No |
| Eltham Hill School | SE9 5EE | No | Yes |
| Plumstead Manor School | SE18 1QF | Yes | Yes |
| Thomas Tallis School | SE3 9PX | No | Yes |
| The John Roan School | SE3 7QR | Yes | Yes |
| St Ursula's Convent School | SE10 8HN | No | No |
| Riverston School | SE12 8UF | No | No |
| Colfe's School | SE12 8AW | No | No |
| Moatbridge School | SE9 5LX | Yes | No |
| Haggerston School | E2 8LS | Yes | Yes |
| Stoke Newington School and Sixth Form | N16 9EX | No | No |
| Our Lady's Catholic High School | N16 5AF | No | Yes |
| The Urswick School - A Church of England Secondary School | E9 6NR | Yes | Yes |
| Cardinal Pole Catholic School | E9 6LG | No | No |
| Yesodey Hatorah School | N16 5AE | No | No |

Bnois Jerusalem Girls School N16 -

Information for Parents Booklet

Information for Parents School Education in September 2021 Admission to Secondary Schools Closing date 2nd November 2020 at 4.30pm Apply online at www.southtyneside.gov.uk/schooladmissions Foreword Dear Parent This booklet is to help you, as a parent/carer of a child who is about to transfer into Secondary School in September 2021. It contains information about all of our schools and we hope you will find it easy to read and understand and helpful in its content. The Council is committed to working in partnership with yourselves, teachers, governors and other partners to provide the best educational and learning opportunities for South Tyneside children. This includes providing you with accurate and up to date information to help you decide which school is right for your child. Please read this booklet carefully and make sure you understand how your application will be processed. We have made the process of applying for a school place even more convenient for parents/carers by introducing online admissions. Applying for a school place online is a quick, safe and efficient way of making your application. The process offers many benefits, which are outlined in this booklet. Whilst we recommend that you apply online, we appreciate you may alternatively prefer to complete a paper application. The Council is keen to improve the quality of the information we provide to parents. If there is anything else you would like to see in this booklet or have any other comments about the service you have received from the Authority please let us know by contacting the School Admissions Team on telephone number (0191) 424 7767 or (0191) 424 7706. -

Annex 8 – Academy Trusts Consolidated Into SARA 2018/19 This Annex Lists All Ats Consolidated Into SARA 2018/19, with Their Constituent Academies

Annex 8 – Academy Trusts consolidated into SARA 2018/19 This annex lists all ATs consolidated into SARA 2018/19, with their constituent Academies. * These Academies transferred into the AT from another AT during the year. ** Newly opened or converted to academy status during 2018/19. ^ These Academies transferred out of the AT into another AT during the year. + Closed during the year to 31 August 2019. ++ Closed prior to 31 August 2018. +++ ATs where the Academies had all transferred out over the course of 2018/19. # City Technology colleges (CTC) are included in the SARA consolidation, but do not appear in Annex 1 – Sector Development Data. Further details can be found at www.companieshouse.gov.uk by searching on the company number. -

Contextual Data Education Indicators: 2020 Cycle

Contextual Data Education Indicators: 2020 Cycle Schools are listed in alphabetical order. You can use CTRL + F/Command + F to search for your school or college. Level 2: GCSE or equivalent level qualifications. Level 3: A Level or equivalent level qualifications. Notes: 1. The education indicators are based on a combination of three years of school performance data, where available, and combined using z-score methodology. For further information on this please follow the link below. 2. 'Yes' in the Level 2 or Level 3 column means that a candidate from this school, studying at this level, meets the criteria for an education indicator. 3. 'No' in the Level 2 or Level 3 column means that a candidate from this school, studying at this level, does not meet the criteria for an education indicator. 4. 'N/A' indicates that there is no reliable data available for this school for this particular level of study. All independent schools are also flagged as N/A due to the lack of reliable data available. 5. Contextual data is only applicable for schools in England, Scotland, Wales and Northern Ireland meaning only schools from these countries will appear in this list. If your school does not appear please contact [email protected]. For full information on contextual data and how it is used please refer to our website www.manchester.ac.uk/contextualdata or contact [email protected].

School Name | Address 1 | Address 2 | Post Code | Level 2 Education Indicator | Level 3 Education Indicator
16-19 Abingdon | Wootton Road | Abingdon-on-Thames -

Name of Organisation

Name of Organisation Body Service Area Service Detail Spend Description Date South Tyneside Council 00CL Business & Resources Group Premises Insurance Insurance Premiums 01 February 2019 South Tyneside Council 00CL Economic Regeneration Group Non-Staff Advertising Statutory Notices 01 February 2019 South Tyneside Council 00CL Economic Regeneration Capital Capital Enhancement Costs Legionnaires Water Management 01 February 2019 South Tyneside Council 00CL Business & Resources Group Furniture & Equipment - General Haven Point 01 February 2019 South Tyneside Council 00CL Business & Resources Group Property Planned Maintenance Street works 01 February 2019 South Tyneside Council 00CL Economic Regeneration Group Other Energy Charges Utilities - Corporate Landlord 01 February 2019 South Tyneside Council 00CL Children's, Adults & Families Group Legal Advisors Sec 17 - Central Pot 01 February 2019 South Tyneside Council 00CL Business & Resources Group Furniture & Equipment - General Temple Park Centre 01 February 2019 South Tyneside Council 00CL Children's, Adults & Families Group Home Care Physical Support 01 February 2019 South Tyneside Council 00CL Children's, Adults & Families Group Purchase of Stock Items Community Equipment Service 01 February 2019 South Tyneside Council 00CL Children's, Adults & Families Group Purchase of Stock Items Community Equipment Service 01 February 2019 South Tyneside Council 00CL Business & Resources Group Cleaning Charges Hebburn Central 01 February 2019 South Tyneside Council 00CL Business & Resources Group Library & Other Books Maps etc. Libraries Admin 01 February 2019 South Tyneside Council 00CL Housing Revenue Account Valuation Services Rents Rates and Taxes 01 February 2019 South Tyneside Council 00CL Economic Regeneration Group Furniture & Equipment - General Jarrow Hub 01 February 2019 South Tyneside Council 00CL Business & Resources Group Library & Other Books Maps etc. -

1 March 2019

1 March 2019 This House believes that the cost of a plastic bottle is too great! The much anticipated single-use plastic debate, hosted with our partners from the North East Initiative on Business Ethics Ltd, enabled sixth form students from Emmanuel College, Gosforth Academy, Harton Academy, and St Bede’s to engage in some strong academic jousting. Caroline Theobald CBE kept them all in check! Well done to all those involved and congratulations to the winners, Gosforth Academy. Fantastic logistical support was provided by many of the Year 10-13 economists. It was fabulous to be supported by an eminent judging panel comprising Gareth Johnson, Anna McCready, CFA, Professor Ron Beadle, Karl Morgan, and Hallam Wheatley. Thank you for 'Making a Difference'! A big thank you to everyone who supported Make a Difference Week. Our various fundraising initiatives proved popular, and we would like to thank all those who brought donations to the food collection, book exchange and cake sale. We look forward to bringing news of the grand total raised when all monies have been counted. Sixth Formers spend half-term holiday in Berlin Over the half term, seven Year 13 German students travelled to Berlin as part of the exchange programme with the Gustav-Heinemann-Oberschule. During their time there, they immersed themselves fully into the German culture, having to cope without the accompaniment of a teacher, and learnt many things about German history, with visits to an ex-Stasi prison and an underground bunker. Year 13 were also fortunate enough to visit the German Parliament building, where they found out about what goes on inside the Reichstag.