Tele-Operated Car Control Using Brain Computer Interface

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Lecture Notes: the Mathematics of Phylogenetics

Lecture Notes: The Mathematics of Phylogenetics Elizabeth S. Allman, John A. Rhodes IAS/Park City Mathematics Institute June-July, 2005 University of Alaska Fairbanks Spring 2009, 2012, 2016 c 2005, Elizabeth S. Allman and John A. Rhodes ii Contents 1 Sequences and Molecular Evolution 3 1.1 DNA structure . .4 1.2 Mutations . .5 1.3 Aligned Orthologous Sequences . .7 2 Combinatorics of Trees I 9 2.1 Graphs and Trees . .9 2.2 Counting Binary Trees . 14 2.3 Metric Trees . 15 2.4 Ultrametric Trees and Molecular Clocks . 17 2.5 Rooting Trees with Outgroups . 18 2.6 Newick Notation . 19 2.7 Exercises . 20 3 Parsimony 25 3.1 The Parsimony Criterion . 25 3.2 The Fitch-Hartigan Algorithm . 28 3.3 Informative Characters . 33 3.4 Complexity . 35 3.5 Weighted Parsimony . 36 3.6 Recovering Minimal Extensions . 38 3.7 Further Issues . 39 3.8 Exercises . 40 4 Combinatorics of Trees II 45 4.1 Splits and Clades . 45 4.2 Refinements and Consensus Trees . 49 4.3 Quartets . 52 4.4 Supertrees . 53 4.5 Final Comments . 54 4.6 Exercises . 55 iii iv CONTENTS 5 Distance Methods 57 5.1 Dissimilarity Measures . 57 5.2 An Algorithmic Construction: UPGMA . 60 5.3 Unequal Branch Lengths . 62 5.4 The Four-point Condition . 66 5.5 The Neighbor Joining Algorithm . 70 5.6 Additional Comments . 72 5.7 Exercises . 73 6 Probabilistic Models of DNA Mutation 81 6.1 A first example . 81 6.2 Markov Models on Trees . 87 6.3 Jukes-Cantor and Kimura Models . -

Community Report 2Nd Quarter 2012 | Issue 22

COMMUNITY REPORT 2ND QUARTER 2012 | ISSUE 22 WELCOME MESSAGE Dear KDE members, contributors, night only to return after few hours of raised. A Pledgie campaign users, supporters, patrons, sponsors sleep to continue working from where (http://pledgie.com/campaigns/18045) and friends, they left off. Since then, a lot has is available to help achieve this goal. changed. We now have regular sprints Please spread the word about this We are coming back from a successful pretty much all year around. campaign and donate if you can. Akademy 2012 in Tallinn, Estonia. The event commenced with a befitting talk One such sprint is the Randa meeting. Speaking of changing times, a very “Freedom of Expression” by Mathias In fact, it has become the most important one is the upcoming new Klang; “freedom of expression” is a intense and largest KDE sprint. In 2011, ownership of Qt. Recently Nokia trait that has embodied KDE since its the event saw participation by 40 KDE announced that it is selling its Qt beginning. It was followed by an contributors from around the world business to Digia. Congratulations to excellent two day line-up of talks. The coming together to plan, design, KDE e.V. supporting member Digia on main conference was followed by a create and work on their favorite KDE acquiring Qt Software. We wish them diverse and interesting array of BoF projects. The Randa sprint is being all the best in taking Qt to even sessions and workshops. The KDE e.V. organized by our own KDE hero higher levels of success and adoption. -

What's New in KDE SC

RevieWs KDE SC 4.4 What’s new in KDE SC 4.4 FOUR-IN-HAND fmatte, photocase.com fmatte, Version 4.4 sees the KDE Software Compilation reach a status on par puzzle bench lets you create your own puzzles, giving you a free choice of the with the user friendliness and stability of good old KDE 3.5. On top of number of parts (Figure 3). Palapeli au- tomatically remembers your progress in this, many features are new. BY MARCEL HILZINGER completing the puzzles. You can thus work on multiple puzzles, or new puzzle f you have not looked at an open line feature allows users to compose designs, concurrently. source operating system for a year, postings at any time. iyou probably won’t recognize any- The KDE-Edu educational set includes New system Components thing. Projects disappear and new ones two new programs: Cantor and Rocs. KDE always had an address book, but take their place; a tool or library that is The Cantor math tool (Figure 2) lets you the tool that comes with KDE SC 4.4 is today’s buzz can be obsolete tomorrow. compose math worksheets. By default, practically a new program. Its author is KDE users experienced this situation Cantor relies on the internal KAlgebra KDE PIM developer Tobias König, who is when the desktop warped from version back end, although it 3.x to 4.x. Early 4.x releases showed supports the free Maxima promise, but none could achieve the sta- [3] and Sage [4] educa- Online. Easy. Secure. Reliable bility and versatility of the late 3.x se- tional systems. -

Release Notes

LispWorks® Release Notes and Installation Guide Version 5.1 Copyright and Trademarks LispWorks Release Notes and Installation Guide Version 5.1 March 2008 Copyright © 2008 by LispWorks Ltd. All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of LispWorks Ltd. The information in this publication is provided for information only, is subject to change without notice, and should not be construed as a commitment by LispWorks Ltd. LispWorks Ltd assumes no responsibility or liability for any errors or inaccuracies that may appear in this publication. The software described in this book is furnished under license and may only be used or copied in accordance with the terms of that license. LispWorks and KnowledgeWorks are registered trademarks of LispWorks Ltd. Adobe and PostScript are registered trademarks of Adobe Systems Incorporated. Other brand or product names are the registered trade- marks or trademarks of their respective holders. The code for walker.lisp and compute-combination-points is excerpted with permission from PCL, Copyright © 1985, 1986, 1987, 1988 Xerox Corporation. The XP Pretty Printer bears the following copyright notice, which applies to the parts of LispWorks derived therefrom: Copyright © 1989 by the Massachusetts Institute of Technology, Cambridge, Massachusetts. Permission to use, copy, modify, and distribute this software and its documentation for any purpose and without fee is hereby granted, pro- vided that this copyright and permission notice appear in all copies and supporting documentation, and that the name of M.I.T. -

Software Studies: a Lexicon, Edited by Matthew Fuller, 2008

fuller_jkt.qxd 4/11/08 7:13 AM Page 1 ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• S •••••••••••••••••••••••••••••••••••••new media/cultural studies ••••software studies •••••••••••••••••••••••••••••••••• ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• O ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• F software studies\ a lexicon ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• T edited by matthew fuller Matthew Fuller is David Gee Reader in ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• This collection of short expository, critical, Digital Media at the Centre for Cultural ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• W and speculative texts offers a field guide Studies, Goldsmiths College, University of to the cultural, political, social, and aes- London. He is the author of Media ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• thetic impact of software. Computing and Ecologies: Materialist Energies in Art and A digital media are essential to the way we Technoculture (MIT Press, 2005) and ••••••••••••••••••••••••••••••••••••• •••• •••••••••••••••••••••••••••••••••• work and live, and much has been said Behind the Blip: Essays on the Culture of ••••••••••••••••••••••••••••••••••••• -

Release Notes for Fedora 17

Fedora 17 Release Notes Release Notes for Fedora 17 Edited by The Fedora Docs Team Copyright © 2012 Fedora Project Contributors. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https:// fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners. -

Upgrade Issues

Upgrade issues Graph of new conflicts libsiloh5-0 libhdf5-lam-1.8.4 (x 3) xul-ext-dispmua (x 2) liboss4-salsa-asound2 (x 2) why sysklogd console-cyrillic (x 9) libxqilla-dev libxerces-c2-dev iceape xul-ext-adblock-plus gnat-4.4 pcscada-dbg Explanations of conflicts pcscada-dbg libpcscada2-dev gnat-4.6 gnat-4.4 Similar to gnat-4.4: libpolyorb1-dev libapq-postgresql1-dev adacontrol libxmlada3.2-dev libapq1-dev libaws-bin libtexttools2-dev libpolyorb-dbg libnarval1-dev libgnat-4.4-dbg libapq-dbg libncursesada1-dev libtemplates-parser11.5-dev asis-programs libgnadeodbc1-dev libalog-base-dbg liblog4ada1-dev libgnomeada2.14.2-dbg libgnomeada2.14.2-dev adabrowse libgnadecommon1-dev libgnatvsn4.4-dbg libgnatvsn4.4-dev libflorist2009-dev libopentoken2-dev libgnadesqlite3-1-dev libnarval-dbg libalog1-full-dev adacgi0 libalog0.3-base libasis2008-dbg libxmlezout1-dev libasis2008-dev libgnatvsn-dev libalog0.3-full libaws2.7-dev libgmpada2-dev libgtkada2.14.2-dbg libgtkada2.14.2-dev libasis2008 ghdl libgnatprj-dev gnat libgnatprj4.4-dbg libgnatprj4.4-dev libaunit1-dev libadasockets3-dev libalog1-base-dev libapq-postgresql-dbg libalog-full-dbg Weight: 5 Problematic packages: pcscada-dbg hostapd initscripts sysklogd Weight: 993 Problematic packages: hostapd | initscripts initscripts sysklogd Similar to initscripts: conglomerate libnet-akamai-perl erlang-base screenlets xlbiff plasma-widget-yawp-dbg fso-config- general gforge-mta-courier libnet-jifty-perl bind9 libplack-middleware-session-perl libmail-listdetector-perl masqmail libcomedi0 taxbird ukopp -

Celebrating Cantor Frieder's Extraordinary Journey to Temple

Vol. LVIII, No. 11 February 5, 2016 26 Shevat 5776 A Life in Music and Song: Celebrating Cantor Frieder’s Extraordinary Journey to Temple Israel of Great Neck By Alise Kreditor In celebration of Cantor Raphael Frieder’s 25th anniversary with Temple Is- rael, he has been selected as the congregation’s annual journal dinner honoree. The event will be marked with a concert and dinner in his honor on Sunday, June 5. “Cantor Frieder has touched the lives of so many members of the congregation WKDWLWLVPRVWÀWWLQJZHKRQRUKLPLQWKLVZD\µVDLG3UHVLGHQW5RE3DQ]HU´+H taught us many skills that we didn’t know we were capable of doing. I look forward WRWKHHQWLUHFRQJUHJDWLRQMRLQLQJXVIRUWKHIHVWLYLWLHVµ Before Cantor Frieder joined Temple Israel as Cantor in 1991, his musical life followed many twists and turns, eventually leading him to become a cantor. Today KLVSUHHPLQHQFHDPRQJFDQWRUVLVQRWRQO\LQWKH86EXWDOVRLQWHUQDWLRQDOO\+LV singing resume´ features many solo engagements at the largest venues in the world, as well as operatic roles and other prestigious performances. And to whom does he credit his good singing genes? Without hesitation Cantor Frieder will tell you what DEHDXWLIXOYRLFHKLVPRWKHUKDGDQGWKDWKLVFKLOGKRRGKRPHZDVDOZD\VÀOOHG with music and singing. ——Continued on page 4 A VOICE GUEST COLUMN FROM THE PRESIDENT The Shalom Club Says Shalom Contributions By Mickey Putterman By Rob Panzer EDITOR’S NOTE: The Shalom Club, the congregation’s oldest arm, invites you to join On page 6 of this issue of the Voice you WKHPLQWKLV9RLFH*XHVW&ROXPQE\3UHVLGHQW0LFNH\3XWWHUPDQDQGWKHJURXS·V%RDUG will see an article relating to the contribu- 5HQHHDQG(OOLRW)OHLVFKHU%HWW\/DJHU(LOHHQ3XWWHUPDQ(OOHQDQG0LOWRQ5RVHQDQG tion made by the late Elayne Bernstein to Bela and Mel Schoenfeld. -

Community Report

COMMUNITY REPORT 4TH QUARTER 2013 | ISSUE 27 WELCOME MESSAGE The end of the year always brings a comers can come and make a program, a Summer of Code version period of self-reflection to people, difference with their contributions for high school students, but you and so it does also to the KDE while learning from others. don't have to be a student to join us. community. Recently we started the KDE Google Summer of Code is maybe Incubator initiative, a way for existing Looking back at 2013 we can see the prime example, nearly 50 projects to join KDE with an how the community has continued university students deep dive into our appointed helping hand to guide doing what it excels at; producing community producing in a few them through the process. great end user software as well as months great improvements or totally documentation, translations, artwork new ideas while learning about If you don't have an existing project, and promotion related work to make coding, real world multicultural, cross you are of course very welcome to our software shine even more and be timezone and non collocated just going us on IRC, mailing list and more useful to more people. collaboration. start collaborating with us, you will learn, teach and share the joy of Almost more importantly, we have Google Summer of Code is not the being part of the great values that achieved that while maintaining the only such example. We have Season KDE represents. great and welcoming collaboration of KDE, a program similar in structure atmosphere that KDE is. -

Understanding the Effects of Practices on KDE Ecosystem Health Simone Silva Amorim, John Mcgregor, Eduardo Almeida, Christina Flach Garcia Chavez

Understanding the Effects of Practices on KDE Ecosystem Health Simone Silva Amorim, John Mcgregor, Eduardo Almeida, Christina Flach Garcia Chavez To cite this version: Simone Silva Amorim, John Mcgregor, Eduardo Almeida, Christina Flach Garcia Chavez. Under- standing the Effects of Practices on KDE Ecosystem Health. 13th IFIP International Conference on Open Source Systems (OSS), May 2017, Buenos Aires, Argentina. pp.89-100, 10.1007/978-3-319- 57735-7_10. hal-01776279 HAL Id: hal-01776279 https://hal.inria.fr/hal-01776279 Submitted on 24 Apr 2018 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Distributed under a Creative Commons Attribution| 4.0 International License Understanding the E↵ects of Practices on KDE Ecosystem Health Simone da Silva Amorim1, John D. McGregor2, Eduardo Santana de Almeida3, and Christina von Flach G. Chavez3 1 Federal Institute of Education, Science and Technology of Bahia, Bahia, Brazil [email protected] 2 Clemson University, South Carolina, USA [email protected] 3 Federal University of Bahia, Bahia, Brazil [email protected], [email protected] Abstract. Open source software ecosystems have adjusted and evolved a set of practices over the years to support the delivery of sustainable software. -

Summer Presentation Week

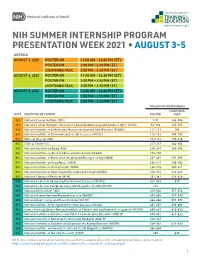

NIH SUMMER INTERNSHIP PROGRAM PRESENTATION WEEK 2021 • AUGUST 3-5 AGENDA AUGUST 3, 2021 POSTER AM 11:00 AM – 12:30 PM (ET) POSTER PM 1:00 PM – 2:30 PM (ET) LIGHTNING TALK 3:00 PM – 4:30 PM (ET) AUGUST 4, 2021 POSTER AM 11:00 AM – 12:30 PM (ET) POSTER PM 1:00 PM – 2:30 PM (ET) LIGHTNING TALK 3:00 PM – 4:30 PM (ET) AUGUST 5, 2021 POSTER AM 11:00 AM – 12:30 PM (ET) POSTER PM 1:00 PM – 2:30 PM (ET) LIGHTNING TALK 3:00 PM – 4:30 PM (ET) PRESENTATION NUMBERS LIGHTNING DATE INSTITUTE OR CENTER POSTER TALK 8/3 National Cancer Institute (NCI) 1-91 144-184 8/3 National Cancer Institute – Division of Cancer Epidemiology and Genetics (NCI – DCEG) 92-110 185-187 8/3 National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS) 111-113 188 8/3 National Institute of Environmental Health Sciences (NIEHS) 114-141 189-192 8/3 Office of Director (OD) 142-143 193-216 8/4 Clinical Center (CC) 217-237 362-364 8/4 National Institute on Aging (NIA) 238-247 365-396 8/4 National Institute on Alcohol Abuse and Alcoholism (NIAAA) 248-256 8/4 National Institute of Biomedical Imaging and Bioengineering (NIBIB) 257-261 397-399 8/4 National Institute on Drug Abuse (NIDA) 262-279 400-402 8/4 National Institute of Mental Health (NIMH) 280-315 403-411 8/4 National Institute of Neurological Disorders and Stroke (NINDS) 316-351 412-423 8/4 National Library of Medicine (NLM) 352-361 424-426 8/5 National Center for Advancing Translational Sciences (NCATS) 427-433 570 8/5 National Center for Complementary and Integrative Health (NCCIH) 434 8/5 National Eye Institute -

Table of Contents

TABLE OF CONTENTS 3 College and Its Mission 6 Admissions 10 Student Life 19 Expenses and Financial Aid 27 Liberal Arts Curriculum 38 Academic Policies 50 Interpreting Course Listings 51 Majors, Minors, Other Programs of Study, Course Descriptions 203 Honors and Awards 213 International Education, National Student Exchange, International Students 217 Academic Internships 218 Lifelong Learning, Non-Degree Students, and Summer Session 220 Affiliations 221 Facilities 223 Directory of Trustees, Faculty, and Staff 245 Appendix 246 Index Accreditation: St. Mary’s College of Maryland (SMCM) is accredited by the Commission on Higher Education, Middle States Association of Colleges and Schools, 3624 Market Street, Philadelphia, Pennsylvania, 19104; Telephone 215-662-5606. SMCM was first accredited in November 1959; its latest re-accreditation was June 2011. The programs at St. Mary’s College of Maryland have been approved by the Maryland Higher Education Commission for the education of veterans and other eligible dependents. Non-Discrimination Policy: St. Mary’s College of Maryland does not discriminate or condone discrimination on the basis of race, sex, gender/gender identity and expression, color, religion, creed, age, genetic information, disability, national or ethnic origin, sexual orientation, or marital status. The College also does not discriminate on the basis of sex and Title IX of the Education Amendments of 1972 (“Title IX”), 20 U.S.C. Sec. 1681, et seq., requires the College not discriminate in such a manner. Prohibited sex discrimination covers sexual harassment, including sexual violence. Examples of the types of conduct prohibited include but are not limited to the following: non-consensual sexual penetration, non-consensual sexual contact, non-consensual sexual exploitation, and/or examples found in the College’s sexual harassment policy.