REAL-TIME EMBEDDED COMPONENTS and SYSTEMS with LINUX and RTOS

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Wind River VxWorks Platforms 3.8

The market for secure, intelligent, connected devices is constantly expanding. Embedded devices are becoming more complex to meet market demands. Internet connectivity allows new levels of remote management but also calls for increased levels of security. More powerful processors are being considered to drive intelligence and higher functionality into devices. Because real-time and performance requirements are nonnegotiable, manufacturers are cautious about incorporating new technologies into proven systems. To ...

Table of Contents: Platforms Available in VxWorks Edition; New in VxWorks Platforms 3.8; VxWorks Platforms Features; VxWorks Real-Time Operating System; Compatibility; State-of-the-Art Memory Protection; VxBus Framework; Core Dump File Generation and Analysis; Build System; Command-Line Project and Build System; Workbench Debugger; VxWorks Simulator; Workbench VxWorks Source Build Configuration; VxWorks 6.x Kernel Configurator; Host Shell; Kernel Shell; Run-Time Analysis Tools; System Viewer ...

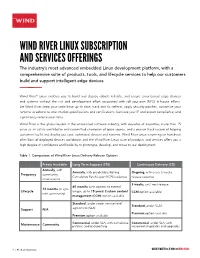

Wind River Linux Subscription and Services Offerings

The industry’s most advanced embedded Linux development platform, with a comprehensive suite of products, tools, and lifecycle services to help our customers build and support intelligent edge devices

Wind River® Linux enables you to build and deploy robust, reliable, and secure Linux-based edge devices and systems without the risk and development effort associated with roll-your-own (RYO) in-house efforts. Let Wind River keep your code base up to date, track and fix defects, apply security patches, customize your runtime to adhere to strict market specifications and certifications, facilitate your IP and export compliance, and significantly reduce your costs.

Wind River is the global leader in the embedded software industry, with decades of expertise, more than 15 years as an active contributor and committed champion of open source, and a proven track record of helping customers build and deploy use case–optimized devices and systems. Wind River Linux is running on hundreds of millions of deployed devices worldwide, and the Wind River Linux suite of products and services offers you a high degree of confidence and flexibility to prototype, develop, and move to real deployment.

Table 1. Comparison of Wind River Linux Delivery Options
Release frequency:
• Freely Available: annually, with community maintenance
• Long Term Support (LTS): annually, with predictable Rolling Cumulative Patch Layer (RCPL) cadence
• Continuous Delivery (CD): ongoing, with every-3-weeks release cadence
3 weeks, until next ...

WIND RIVER INTELLIGENT DEVICE PLATFORM XT: The Foundation for Building Devices That Connect to the Internet of Things

The opportunities presented by the burgeoning Internet of Things (IoT) may be new, but Wind River® has been providing the intelligence that powers interconnected, automated systems for decades. Wind River Intelligent Device Platform XT is a scalable, sustainable, and secure development environment that simplifies the development, integration, and deployment of IoT gateways. It is based on Wind River industry-leading operating systems, which are standards-compliant and fully tested, as well as Wind River development tools. The platform provides device security, smart connectivity, rich network options, and device management; and it includes ready-to-use components built exclusively for developing IoT gateway applications. Intelligent Device Platform XT provides outstanding software and expertise to fuel the rapid innovation and deployment of safe, secure, and reliable intelligent devices through more efficient development cycles, standards-based data connections, and intuitive management tools.

[Figure 1: Wind River Intelligent Device Platform architecture. Panels: Connectivity, Management, API, Security, Tools, and Wind River Operating Environments. Labels in the figure include ZigBee, Bluetooth, WWAN, VPN, MQTT, Cloud Connector, Wind River Integrated Development Environment, Secure Updates, OMA DM, TR-069, Device Authentication, Web Interface, Application Signing, OpenJDK, Lua VM, SQLite, OSGi, TCG Standards, Role Based Access Control, Integrity Monitoring, Signed Software, and Trusted Secure Boot.]

INTEL, MCAFEE, AND WIND RIVER, BETTER TOGETHER
Intelligent Device Platform XT is part of Intel® Gateway Solutions for Internet of Things (IoT), a family of platforms that enables companies to seamlessly interconnect industrial devices and other systems into a system of systems.

Wind River Simics

Electronic systems are becoming increasingly complex, with more hardware, more software, and more connectivity. Today’s systems are software intensive, using multiple processor architectures and running complex, multilayered software stacks. With an increasing emphasis on smart and connected systems, complexity in software and hardware is unavoidable. With more connections comes additional security risk, which needs to be tested thoroughly.

Compounding the challenge is the fact that developers have turned to DevOps and continuous development practices to meet customer and company expectations for quick deliveries. Such methodologies rely on fast iterations for test, feedback, and deployment. Collaborative and cross-functional teams need tools to communicate and share a common development baseline.

Wind River® Simics® allows developers to have on-demand access to any target system at any time. It enables more efficient collaboration between developers and quality assurance teams. Simics provides an automation API, enabling organizations to reap the business benefits of DevOps and continuous development practices to create and deliver better, more secure software, faster—even for complex, embedded, connected, and large IoT systems.

DEVELOP SOFTWARE IN A VIRTUAL ENVIRONMENT
Simics provides the access, automation, and collaboration required to enable DevOps and continuous development practices. By using virtual platforms and simulation, software developers can decouple their work from physical hardware and its limitations during development. Access to virtual hardware allows developers to do continuous integration and automated testing much sooner in the development cycle—even before the hardware design is finalized—as well as perform both testing and debugging during design and prototyping phases.

Wind River Customer Support Services

At Wind River®, we understand the challenges of developing leading-edge, reliable systems that leverage the latest hardware technology. Our award-winning team is here to assist you with support services that fit your needs and your budget.

WIND RIVER E-SUPPORT
Wind River e-Support provides continuous access to the Wind River Support Network, a single online source for interactive self-help that includes:
• Wind River Knowledge Library
  – Wind River product documentation in PDF and searchable HTML versions
  – Product-specific information, including bug reports, FAQs, security advisories, and configuration notes
  – Application notes, technical tips, and sample code on handling common problems
• Wind River Knowledge Forum, an interactive question-and-answer forum providing help from Wind River experts
• Software upgrades, updates, cumulative patches, and board support packages
• An email and Web-based notification system for problem reports and patches

WIND RIVER ENTERPRISE SUPPORT
Not all challenges can be resolved with knowledge alone—sometimes you need an expert who can consider your unique environment and help solve problems with one-on-one interactions. Wind River Enterprise Support offers the following benefits:
• Live assistance from experts, with no limit on the number of issues raised
• Over 150 experienced engineers averaging more than 10 years of device software experience
• Six major support centers and 21 additional support hubs worldwide, providing access to people with the right knowledge in a convenient time zone
• A convenient online utility for submitting, tracking, and monitoring technical support requests
• All the benefits of Wind River e-Support

WIND RIVER PREMIUM PROJECT SUPPORT
For critical projects with sensitive deadlines, you need a support team who understands your unique environment, your application, and your hardware.

Star Lab Whitepaper

10 Properties of Secure Embedded Systems
Protecting Can’t-Fail Embedded Systems from Tampering, Reverse Engineering, and Other Cyber Attacks
www.windriver.com

It’s Not a Fair Fight…
When attacking an embedded system, it takes only one vulnerability to lead to an exploit.

When tasked with securing an embedded system, you (the defender) must be prepared to protect against every possible vulnerability. Overlook a single opening and the attacker may find it, take control, steal your secrets, and create an exploit for others to use anytime, anywhere.

Worse yet, that same attacker may use an initial compromised device to pivot from one exploited subsystem to another, causing further damage to your network, mission, and reputation.

This white paper covers the most important security design principles that, if adhered to, give you a fighting chance against any attacker who seeks to gain unauthorized access, reverse engineer, steal sensitive information, or otherwise tamper with your embedded system. The beauty of these 10 principles is that they can be layered together into a cohesive set of countermeasures that achieve a multiplicative effect, making device exploitation significantly difficult and costly for the attacker.

Data at Rest Protection
Your applications, configurations, and data aren't safe if they're not protected at rest. Period.

You can protect your applications and data at rest in one of two ways:
1. Prevent the attacker from ever gaining access to this information in the first place
2.

[Sidebar, truncated: Using certified and/or industry standard crypto algorithms such as AES, RSA, ECC, or SHA will ...]

Partner Directory Wind River Partner Program

PARTNER DIRECTORY
WIND RIVER PARTNER PROGRAM

The Internet of Things (IoT), cloud computing, and Network Functions Virtualization are but some of the market forces at play today. These forces impact Wind River® customers in markets ranging from aerospace and defense to consumer, networking to automotive, and industrial to medical. The Wind River® edge-to-cloud portfolio of products is ideally suited to address the emerging needs of IoT, from the secure and managed intelligent devices at the edge to the gateway, into the critical network infrastructure, and up into the cloud. Wind River offers cross-architecture support.

We are proud to partner with leading companies across various industries to help our mutual customers ease integration challenges; shorten development times; and provide greater functionality to their devices, systems, and networks for building IoT. With more than 200 members and still growing, Wind River has one of the embedded software industry’s largest ecosystems to complement its comprehensive portfolio. Please use this guide as a resource to identify companies that can help with your development across markets. For updates, browse our online Partner Directory.

MARKET FOCUS
For an alphabetical listing of all members of the Wind River Partner Program, please see the Partner Index on page 139.
*Clavister
Cloudera
*Dell
*EnterpriseWeb

FREQUENTLY ASKED QUESTIONS Wind River Linux 9 Download

Q1. What is Wind River® Linux?
A1. Wind River Linux is the leader for building outstanding open source embedded products, ensuring customers work with the latest code from the most important open source efforts and most recent technologies. Wind River Linux embraces and extends open source, delivering real-time security monitoring, predictable updates, and legal compliance into open source.

Q2. What do I get with this product download?
A2. With this product download you get a snapshot of the latest Yocto Project release (Yocto Project 2.2), product templates, which are layers with specific recipes designed to simplify the addition of product features, and all of the board support packages (BSPs) included with the Yocto Project 2.2 release.

Q3. What steps do I need to take to download the product?
A3. Complete the form and get access to the Wind River GitHub repository that hosts the source code for the product.

Q4. How do I install the product?
A4. In the Quick Start guide you will find the steps to install the product. It contains instructions on how to create a platform project and build your own Wind River Linux distribution, as well as system requirements details. For more information, please refer to the product documentation.

Q5. Do I need to provide any credit card information?
A5. No. Access to Wind River Linux 9 is free.

Q6. Is Internet access required for Wind River Linux?
A6. Yes.

Q7. How do I know my hardware can run Wind River Linux?
A7.

Open Network Virtualization with SDN And

HIGH PERFORMANCE, OPEN STANDARD VIRTUALIZATION WITH NFV AND SDN
A Joint Hardware and Software Platform for Next-Generation NFV and SDN Deployments
By John DiGiglio, Software Product Marketing, Intel Corporation
Davide Ricci, Product Line Manager, Wind River

EXECUTIVE SUMMARY
With exploding traffic creating unprecedented demands on networks, service providers are looking for equipment that delivers greater agility and economics to address constantly changing market requirements. In response, the industry has begun to develop more interoperable solutions per the principles outlined by software defined networking (SDN) and a complementary initiative, network functions virtualization (NFV). At the heart of these two approaches is the decoupling of network functions from hardware through abstraction. The end result: Software workloads will no longer be tied to a particular hardware platform, allowing them to be controlled centrally and deployed dynamically throughout the network as needed. Moreover, network functions can be consolidated onto standard, high-volume servers, switches, and storage, further reducing time-to-market and costs for network operators.

This paper describes hardware and software ingredients addressing network equipment platform needs for NFV and SDN, and details how they could be used in a Cloud Radio Access Network (C-RAN) and other use cases. The solutions presented in this paper—designed to achieve real-time, deterministic performance

Digging Inside the VxWorks OS and Firmware: The Holistic Security

Aditya K Sood (0kn0ck), SecNiche Security Labs (http://www.secniche.org)
Email: adi ks [at] secniche.org

Contents
1 Acknowledgement
2 Abstract
3 Introduction
4 Architectural Overview
5 Fault Management
5.1 Protection Domains, Subsystems and Isolation
5.2 Understanding OMS and AMS
6 Virtualization - Embedded Hypervisor
6.1 Hypervisor and Virtual Board Security
7 VxWorks OS Security Model and Fallacies
7.1 Stack Overflow Detection and Protection
7.2 VxWorks Network Stack
7.3 VxWorks and The SSL Game
7.4 Firewalling VxWorks
7.5 VxWorks Debugging Interface
7.6 Weak Password Encryption Algorithm
7.7 NDP Information Disclosure
8 Design of VxWorks 5.x - Firmware
8.1 VxWorks Firmware Generic Structure
9 VxWorks Firmware Security Analysis
9.1 Hacking Boot Sequence Program (BSP)
9.2 VxWorks Firmware Killer
9.3 Services and Port Layout
9.4 Factory Default Passwords
9.5 Config.bin - Inappropriate Encryption
10 Embedded Devices - Truth about Security
10.1 Embedded Private Keys - HTTPS Communication
10.2 Firmware Checksum Algorithms
10.3 Insecure Web Interfaces
10.4 Unpatched Firmware and Obsolete Versions
11 Future Work
12 Conclusion
13 References

1 Acknowledgement
I sincerely thank my friends and fellow security researchers for sharing their thoughts on VxWorks.
• Jeremy Collake (Bitsum)
• Edgar Barbosa (COSEINC)
• Luciano Natafrancesco (Netifera)
In addition, I would also like to thank HD Moore for finding vulnerabilities in the VxWorks OS.

VxWorks® Programmer’s Guide

VxWorks® Programmer’s Guide 5.4, Edition 1
An ISO 9001 Registered Company

Copyright 1984 – 1999 Wind River Systems, Inc. ALL RIGHTS RESERVED. No part of this publication may be copied in any form, by photocopy, microfilm, retrieval system, or by any other means now known or hereafter invented without the prior written permission of Wind River Systems, Inc.

VxWorks, IxWorks, Wind River Systems, the Wind River Systems logo, wind, and Embedded Internet are registered trademarks of Wind River Systems, Inc. CrossWind, Tornado, VxMP, VxSim, VxVMI, WindC++, WindConfig, Wind Foundation Classes, WindNet, WindPower, WindSh, and WindView are trademarks of Wind River Systems, Inc. All other trademarks used in this document are the property of their respective owners.

Corporate Headquarters: Wind River Systems, Inc., 1010 Atlantic Avenue, Alameda, CA 94501-1153, USA. Toll free (US): 800/545-WIND; telephone: 510/748-4100; facsimile: 510/749-2010.
Europe: Wind River Systems, S.A.R.L., 19, Avenue de Norvège, Immeuble B4, Bâtiment 3, Z.A. de Courtaboeuf 1, 91953 Les Ulis Cédex, FRANCE. Telephone: 33-1-60-92-63-00; facsimile: 33-1-60-92-63-15.
Japan: Wind River Systems Japan, Pola Ebisu Bldg. 11F, 3-9-19 Higashi, Shibuya-ku, Tokyo 150, JAPAN. Telephone: 81-3-5467-5900; facsimile: 81-3-5467-5877.

CUSTOMER SUPPORT
Corporate: telephone 800/872-4977 (toll free, U.S. & Canada) or 510/748-4100 (direct); e-mail [email protected]; fax 510/749-2164.
Europe: telephone 33-1-69-07-78-78; e-mail [email protected]; fax 33-1-69-07-08-26.
Japan: telephone 011-81-3-5467-5900; e-mail [email protected]; fax 011-81-3-5467-5877.

If you purchased your Wind River Systems product from a distributor, please contact your distributor to determine how to reach your technical support organization.

Wind River Linux Release Notes, 5.0.1

Wind River® Linux RELEASE NOTES 5.0.1

Copyright © 2013 Wind River Systems, Inc. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means without the prior written permission of Wind River Systems, Inc.

Wind River, Tornado, and VxWorks are registered trademarks of Wind River Systems, Inc. The Wind River logo is a trademark of Wind River Systems, Inc. Any third-party trademarks referenced are the property of their respective owners. For further information regarding Wind River trademarks, please see: www.windriver.com/company/terms/trademark.html

This product may include software licensed to Wind River by third parties. Relevant notices (if any) are provided in your product installation at one of the following locations:
installDir/product_name/3rd_party_licensor_notice.pdf
installDir/legal-notices/

Wind River may refer to third-party documentation by listing publications or providing links to third-party Web sites for informational purposes. Wind River accepts no responsibility for the information provided in such third-party documentation.

Corporate Headquarters: Wind River, 500 Wind River Way, Alameda, CA 94501-1153, U.S.A. Toll free (U.S.A.): 800-545-WIND; telephone: 510-748-4100; facsimile: 510-749-2010.
For additional contact information, see the Wind River Web site: www.windriver.com
For information on how to contact Customer Support, see: www.windriver.com/support

Wind River Linux Release Notes 5.0.1, 4 Apr 13

Contents
1 Overview
1.1 Introduction
1.2 Navigating these Release Notes
1.3 Features