Segmentation and Classification of Beatboxing Acoustic Voice Tract Variations in MRI Through Image Processing Technique

Recommended publications

Sexism Across Musical Genres: a Comparison

Western Michigan University ScholarWorks at WMU Honors Theses Lee Honors College 6-24-2014 Sexism Across Musical Genres: A Comparison Sarah Neff Western Michigan University, [email protected] Follow this and additional works at: https://scholarworks.wmich.edu/honors_theses Part of the Social Psychology Commons Recommended Citation Neff, Sarah, "Sexism Across Musical Genres: A Comparison" (2014). Honors Theses. 2484. https://scholarworks.wmich.edu/honors_theses/2484 This Honors Thesis-Open Access is brought to you for free and open access by the Lee Honors College at ScholarWorks at WMU. It has been accepted for inclusion in Honors Theses by an authorized administrator of ScholarWorks at WMU. For more information, please contact [email protected]. Sexism Across Musical Genres: A Comparison Sarah E. Neff Western Michigan University Abstract Music is a part of daily life for most people, leading the messages within music to permeate people’s consciousness. This is concerning when the messages in music follow discriminatory themes such as sexism or racism. Sexism in music is becoming well documented, but some genres are scrutinized more heavily than others. Rap and hip-hop get much more attention in popular media for being sexist than do genres such as country and rock. My goal was to show whether or not genres such as country and rock are as sexist as rap and hip-hop. In this project, I analyze the top ten songs of 2013 from six genres looking for five themes of sexism. The six genres used are rap, hip-hop, country, rock, alternative, and dance. -

Episode 20: Happy Birthday, Hybrid Theory!

Episode 20: Happy Birthday, Hybrid Theory! So, we’ve hit another milestone. I’ve made 20 episodes of this podcast – which is frankly very silly – and I was trying to think about what I could write about to reflect such a momentous occasion. I was trying to think of something that was released in the year 2000 and was, as such, experiencing a similarly momentous 20th anniversary. The answer is, unsurprisingly, a lot of things – Gladiator, Bring It On and American Psycho all came out in the year 2000. But I wasn’t allowed to watch any of those things until I was in high school, and they didn’t really spur me to action. And then I sent a rambling voice memo to Wes, who you may remember from such hits as “making this podcast sound any good” and “writing the theme tune … rated for 15+ before like 6am, when it becomes a Top 50 countdown. When I was younger, it was the quickest way to find new music, and potentially accidentally see something that would really scar you, music-video wise. Like that time I accidentally saw Aphex Twin’s Come to Daddy music video. But because I was tiny and my brain was a sponge, it turns out a lot of what I consumed has actually just oozed into every recess of my being, to the point where One Step Closer came on and I immediately sang all the words like I was in some sort of trance. And I haven’t done a musical episode for a while. So, today, we’re talking Nu Metal, baby! Hell yeah! I’m Alex. -

Rap in the Context of African-American Cultural Memory Levern G

Florida State University Libraries Electronic Theses, Treatises and Dissertations The Graduate School 2006 Empowerment and Enslavement: Rap in the Context of African-American Cultural Memory Levern G. Rollins-Haynes Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF ARTS AND SCIENCES EMPOWERMENT AND ENSLAVEMENT: RAP IN THE CONTEXT OF AFRICAN-AMERICAN CULTURAL MEMORY By LEVERN G. ROLLINS-HAYNES A Dissertation submitted to the Interdisciplinary Program in the Humanities (IPH) in partial fulfillment of the requirements for the degree of Doctor of Philosophy Degree Awarded: Summer Semester, 2006 The members of the Committee approve the Dissertation of Levern G. Rollins-Haynes defended on June 16, 2006. Charles Brewer, Professor Directing Dissertation; Xiuwen Liu, Outside Committee Member; Maricarmen Martinez, Committee Member; Frank Gunderson, Committee Member. Approved: David Johnson, Chair, Humanities Department; Joseph Travis, Dean, College of Arts and Sciences. The Office of Graduate Studies has verified and approved the above named committee members. This dissertation is dedicated to my husband, Keith; my mother, Richardine; and my belated sister, Deloris. ACKNOWLEDGEMENTS Very special thanks and love to -

Sounds of the Human Vocal Tract

INTERSPEECH 2017 August 20–24, 2017, Stockholm, Sweden Sounds of the Human Vocal Tract Reed Blaylock, Nimisha Patil, Timothy Greer, Shrikanth Narayanan Signal Analysis and Interpretation Laboratory University of Southern California, USA [email protected], {nimishhp, timothdg}@usc.edu, [email protected] Abstract Previous research suggests that beatboxers only use sounds that exist in the world’s languages. This paper provides evidence to the contrary, showing that beatboxers use non-linguistic articulations and airstream mechanisms to produce many sound effects that have not been attested in any language. An analysis of real-time magnetic resonance videos of beatboxing reveals that beatboxers produce non-linguistic articulations such as ingressive retroflex trills and ingressive lateral bilabial trills. In addition, beatboxers can use both lingual egressive and pulmonic ingressive airstreams, neither of which have been reported in any language. The results of this study affect our understanding of the limits of the human vocal tract, and address questions about the mental units that encode music and phonological grammar. Do speech and beatboxing articulations share the same mental representations? Is beatboxing grammatical in the same way that phonology is grammatical? How is the musical component of beatboxing represented in the mind? To address these questions, a comprehensive inventory of beatboxing sounds and their articulations must first be compiled. Some steps toward this goal have already been taken. Splinter and TyTe [5] proposed a Standard Beatbox Notation (SBN) to encode contrasts between different beatboxing sounds, and Stowell [6] uses a combination of new characters and characters from the International Phonetic Alphabet (IPA) to represent some sounds of beatboxing. -

Claire Chase: the Pulse of the Possible

THE FLUTIST QUARTERLY VOLUME XXXVIII, NO. 3 SPRING 2013 Claire Chase: The Pulse of the Possible; Curiosity and Encouragement: Alexander Murray; The Charles F. Kurth Manuscript Collection; Convention 2013: On to New Orleans THE OFFICIAL MAGAZINE OF THE NATIONAL FLUTE ASSOCIATION, INC It is only a tool. A tool forged from the metals of the earth, from silver, from gold. Fashioned by history. Crafted by the masters. It is a tool that shapes mood and culture. It enraptures, sometimes distracts, exhilarates and soothes, sings and weeps. Now take up the tool and sculpt music from the air. MURAMATSU AMERICA tel: (248) 336-2323 fax: (248) 336-2320 fl[email protected] www.muramatsu-america.com InHarmony Table of Contents DEPARTMENTS 11 From the President; 13 From the Editor; 14 High Notes; 32 Masterclass Listings; 39 Across the Miles; 43 NFA News; 45 Confluence of Cultures & Perseverence of Spirit; 48 From Your Convention Director; 49 From the Local Arrangements Chair; 55 Notes from Around the World; 58 New Products; 59 Passing Tones; 60 Reviews; 74 Honor Roll of Donors to the NFA; 77 NFA Office, Coordinators, Committee Chairs; 80 Index of Advertisers FEATURES 18 Claire Chase: The Pulse of the Possible by Paul Taub Whether brokering the creative energy of 33 musicians into the mold-breaking ICE Ensemble or (with an appreciative nod to Varèse) plotting her own history-making place in the future, emerging flutist Claire Chase herself is Exhibit A in the lineup of evidence for why she was recently awarded a MacArthur Fellowship “genius” grant. -

A Brief History of Beat-Boxing by DavidX

A Brief History of Beat-Boxing by DavidX 1980s – Introductions: Beat-boxing emerged in the USA in the 1980s and grew out of hip hop culture. It was created to provide a beat to support MCs when there was no DJ/sound system available. David describes the message of early beat-boxing as: “peace, love and unity, and have fun and teach some kids in the street”. Pioneers of beat-boxing were Doug E Fresh, Biz Markie and hip hop trio The Fat Boys. Fat Boys: http://www.youtube.com/watch?feature=player_detailpage&v=a65kmDFfl2g Doug E Fresh 1986 Interview and Human Beatbox: http://www.youtube.com/watch?feature=player_detailpage&v=2ARBgBeHY1w 1990s – New Innovations: Between the late ‘80s and early ‘90s, beatboxing disappeared as an artform. But when MC and human-beatboxer Rahzel combined singing and beatboxing on his cover of “If Your Mother Only Knew” in 1996, he brought beatboxing to the world’s attention. It had a big impact on people discovering the discipline and making it part of the mainstream as fans tried beat-boxing covers themselves. Rahzel: http://www.youtube.com/watch?feature=player_detailpage&v=_GtVONTy6FQ 2000s: At the start of the 21st century, beat-boxing became more technical and the first Human Beatbox Convention happened in London with beatbox artists from all over the world. Scratch from The Roots, and Kenny Muhammad marked this period. Scratch and Eklips live on Radio France: http://www.youtube.com/watch?feature=player_detailpage&v=ayALbNqzVfM Kenny Muhammad wind technique, early 2000: http://www.youtube.com/watch?feature=player_detailpage&v=D21CNg3Xwbs New School: as beatboxing became an international discipline, different approaches appeared. -

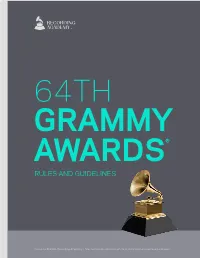

Rules and Guidelines

64TH GRAMMY AWARDS® RULES AND GUIDELINES Contents © 2021 Recording Academy | May not be reproduced in whole or in part without express permission. INTRODUCTION THESE ARE THE OFFICIAL RULES FOR THE GRAMMY AWARDS®. All GRAMMY Awards ballots are cast by Recording Academy Voting Members and are subject to classification and qualifications under rules or regulations approved by the Board of Trustees. From time to time, the Board may vote to amend the qualification criteria for consideration for a GRAMMY® Award or other award. GRAMMY AWARDS YEAR CALENDAR 2020 – 2021 Sept. 1, 2020 – Sept. 30, 2021: Release Eligibility Period. March 1, 2021, 11:59 P.M. PT: Deadline to apply for membership for 64th GRAMMY Awards. Fees must be paid in order to qualify for voting. July 6, 2021, 9 A.M. PT: Media Company Registration begins. Aug. 24, 2021, 6 P.M. PT: Media Company Registration ends. July 13, 2021, 9 A.M. PT: First Round Online Entry Process (OEP) Access Period begins. July 29, 2021, 6 P.M. PT: First Round Online Entry Process (OEP) Access Period ends. Aug. 17, 2021, 9 A.M. PT: Final Round Online Entry Process (OEP) Access Period begins. Aug. 31, 2021, 6 P.M. PT: Final Round Online Entry Process (OEP) Access Period ends. Oct. 22, 2021 - Nov. 5, 2021: First Round Voting. TBD: Nominations announced. Dec. 6, 2021 - Jan. 5, 2022: Final Round Voting. 14 days after the announcement of nominations: Deadline for errors and omissions to the nominations. 2022 Jan. 31, 2022: 64th Premiere Ceremony. Jan. 31, 2022: 64th GRAMMY Awards. TABLE OF CONTENTS 2 INTRODUCTION -

The Fifth Element of Hip Hop

'TIME CAPTURED IS TIME CREATED'© ECLECTRIX, INC.© presents BEATBOXING – THE FIFTH ELEMENT OF HIP HOP A documentary film by Klaus Schneyder Duration: 55 minutes HDV Color Aspect Ratio 1.78 (16:9) English Unrated 2011 Film Festivals: Magnolia Independent Film Festival, Starkville, MS; United States Super 8 Film + Digital Video Festival, New Brunswick, NJ (Best Documentary); Queensworld Film Festival, Jackson Heights, NY; Fort Myers Film Festival, Fort Myers, FL; LA Film + Music Weekend, Los Angeles, CA; Canada International Film Festival, Vancouver, BC; Litchfield Hills Film Festival, Kent, CT; Fallbrook Film Festival, Fallbrook, CA; Buffalo Niagara Film Festival, Amherst, NY; Atlanta Film Festival, Atlanta, GA; World Music and Independent Film Festival, Jeffersonton, VA. Photos available upon request “For The Press” DISTRIBUTION and NY PUBLICITY CONTACT: [email protected] [email protected] 4915 Broadway; Ste. 5J New York, NY 10034 212/569 9246 www.beatboxdocumentary.com SYNOPSIS It was in the late 70s that a youth culture evolved in the poorer parts of New York which combined several disciplines under the name of Hip Hop. Apart from the four classic elements of Graffiti writing, DJing, Breakdancing, and Rapping, the musical side of this culture was enhanced by a fifth element called ‘Beatboxing’. From the hardship of poverty and the lack of instruments, a pioneer was inspired to imitate drum rhythms with his mouth – his brilliance creating the term ‘Human Beatbox’. Very soon other Hip Hop artists picked up his style and added this technique of music making to their own shows, but at that time Beatboxing never left the realm of Hip Hop culture. -

Sonic Subjectivity and Post-Modern Identity Formations in Beatboxing

‘Make the Music with Your Mouth’: Sonic Subjectivity and Post-Modern Identity Formations in Beatboxing Shanté Paradigm Smalls Abstract This paper investigates the possibilities of pleasure, sound, and the disruption of the iterations of identity in progressive time. How does sound reformulate how we see whiteness, heterosexuality, and female-bodied people? Beatboxing as a citational and intertextual form — phatic, rhythmic, sonorous, and lyrically side-steps some of the traps of rap music or other hip hop forms through its embodiment of sounds rather the logics of lyrics and traditional musical structure. In that way it remakes — queers — our alliances, allegiances, and sonic sensibilities. Sonic Acceptance: The beatbox cipher I entered my first hip hop cipher using human beatboxing. At ten years old, I wasn’t terribly witty or clever like my worldly thirteen year-old brother and male cousins, but I was obsessed with rap music and being a participant in rap music and hip hop culture. Every time I would try to rhyme, I messed up the cadence, flow, and tempo of the impromptu session. My “voice was too light” as Jay-Z would proclaim some 25 years later; my sense of rhythm — abysmal. It’s a small wonder I went on to pursue a music career as a singer and an emcee, because I really had no audible prowess. But I loved making sounds, I loved music, and I loved what I heard foundational NYC human beatboxers Doug E. Fresh, Darren “Buff Love” Robinson aka “The Human Beatbox,” and Biz Markie sonically render with their mouths and a microphone. -

Trung Bao Nguyen [email protected]

SEE THE SOUND TRUNG BAO NGUYEN [email protected] FALL 2019 GRAPHIC DESIGN ARTIST STATEMENT Trung Bao is a multi-disciplinary artist specializing in voice, vocal percussion, performance and visual technology, and he goes by the title of a beatboxer and a visual artist. He has the ability to speak music and is constantly exploring and pushing boundaries of the Beatbox artform. Trung has been pursuing a professional beatbox career for over five years and has achieved many awards on international stages, making him one of the most influential beatboxers worldwide. Alongside his beatbox career, Trung has been studying graphic design and visual technology. Trung Bao’s biggest goal at the moment is to combine his two professions, music and visual art, to elevate the experiences at beatbox music festivals through innovative audio-interactive visuals. THESIS PROPOSAL I have always been fascinated by the world of sound and image. We perceive the world and shape our realities through our five senses, but mainly through hearing and seeing. I analyze the audio and visual information that I receive on a daily basis to learn about the world around me, I imitate the information that strikes me, combine and modify them and to … the rhythms displayed in very high frequencies. And even colors are light waves traveling through space at specific frequencies, time and combination. I started to have a visual representation in my mind of every sound I hear and create. I now see the sounds. -

Music Genre/Form Terms in LCGFT Derivative Works

Music Genre/Form Terms in LCGFT Derivative works … Adaptations Arrangements (Music) Intabulations Piano scores Simplified editions (Music) Vocal scores Excerpts Facsimiles … Illustrated works … Fingering charts … Posters Playbills (Posters) Toy and movable books … Sound books … Informational works … Fingering charts … Posters Playbills (Posters) Press releases Programs (Publications) Concert programs Dance programs Film festival programs Memorial service programs Opera programs Theater programs … Reference works Catalogs … Discographies ... Thematic catalogs (Music) … Reviews Book reviews Dance reviews Motion picture reviews Music reviews Television program reviews Theater reviews Instructional and educational works Teaching pieces (Music) Methods (Music) Studies (Music) Music Accompaniments (Music) Recorded accompaniments Karaoke Arrangements (Music) Intabulations Piano scores Simplified editions (Music) Vocal scores Art music Aʼak Aleatory music Open form music Anthems Ballades (Instrumental music) Barcaroles Cadenzas Canons (Music) Rounds (Music) Cantatas Carnatic music Ālāpa Chamber music Part songs Balletti (Part songs) Cacce (Part songs) Canti carnascialeschi Canzonets (Part songs) Ensaladas Madrigals (Music) Motets Rounds (Music) Villotte Chorale preludes Concert etudes Concertos Concerti grossi Dastgāhs Dialogues (Music) Fanfares Finales (Music) Fugues Gagaku Bugaku (Music) Saibara Hát ả đào Hát bội Heike biwa Hindustani music Dādrās Dhrupad Dhuns Gats (Music) Khayāl Honkyoku Interludes (Music) Entremés (Music) Tonadillas Kacapi-suling -

Beatboxing, Rap, and Spoken Word

Learning Resource Pack Beatboxing, rap, and spoken word Creating contemporary music and lyrics inspired by culture and heritage. Contents 03 Introduction; 04 Using collections and heritage to inspire contemporary artwork; 06 About contemporary beatbox, rap and spoken word; 08 Planning your project; 14 Methods (Method 1: Creating rap lyrics; Method 2: Beatboxing techniques; Method 3: Creating spoken word and poetry); 18 Case studies (Case study 1: Welsh language with key stage 2: Ysgol Pentraeth, National Slate Museum, Mr Phormula, and Bari Gwilliam; Case study 2: Beatboxing and rap, English language with key stage 3: Lewis School Pengam, Big Pit and Beat Technique; Case study 3: Bilingual with key stage 3: Tredegar Park School, Tredegar House (National Trust), and Rufus Mufasa); 25 Extending the learning and facilitating curriculum learning; 26 Next steps; 26 Digital resources; 27 About the authors. Arts & Education Network; South East Wales Introduction This creative lyric and music project has been tried and tested with schools by the authors Rufus Mufasa, Beat Technique and Mr Phormula. The project is designed to be pupil-centred, fun, engaging, relevant and in line with the Welsh Government Digital Competence Framework, while exploring pupils’ individual creativity through the Expressive Arts Curriculum framework, and facilitating the curriculum’s Four Purposes. The projects in this resource can be simplified, adapted or further developed to suit your needs. There are plenty of opportunities for filmmaking, recording and performing, all of which help to develop wider creative attributes including resilience, presentation skills, communication skills, and collaboration that are so important to equip learners