Data Literacy in the Real World: Conversations & Case Studies

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Walking the Talk: 2021 Blueprints for a Human Rights-Centered U.S. Foreign Policy

Walking the Talk: 2021 Blueprints for a Human Rights-Centered U.S. Foreign Policy October 2020 Acknowledgments Human Rights First is a nonprofit, nonpartisan human rights advocacy and action organization based in Washington D.C., New York, and Los Angeles. © 2020 Human Rights First. All Rights Reserved. Walking the Talk: 2021 Blueprints for a Human Rights-Centered U.S. Foreign Policy was authored by Human Rights First’s staff and consultants. Senior Vice President for Policy Rob Berschinski served as lead author and editor-in-chief, assisted by Tolan Foreign Policy Legal Fellow Reece Pelley and intern Anna Van Niekerk. Contributing authors include: Eleanor Acer Scott Johnston Trevor Sutton Rob Berschinski David Mizner Raha Wala Cole Blum Reece Pelley Benjamin Haas Rita Siemion Significant assistance was provided by: Chris Anders Steven Feldstein Stephen Pomper Abigail Bellows Becky Gendelman Jennifer Quigley Brittany Benowitz Ryan Kaminski Scott Roehm Jim Bernfield Colleen Kelly Hina Shamsi Heather Brandon-Smith Kate Kizer Annie Shiel Christen Broecker Kennji Kizuka Mandy Smithberger Felice Gaer Dan Mahanty Sophia Swanson Bishop Garrison Kate Martin Yasmine Taeb Clark Gascoigne Jenny McAvoy Bailey Ulbricht Liza Goitein Sharon McBride Anna Van Niekerk Shannon Green Ian Moss Human Rights First challenges the United States of America to live up to its ideals. We believe American leadership is essential in the struggle for human dignity and the rule of law, and so we focus our advocacy on the U.S. government and other key actors able to leverage U.S. influence. When the U.S. government falters in its commitment to promote and protect human rights, we step in to demand reform, accountability, and justice. -

THE FREE-MARKET WELFARE STATE: Preserving Dynamism in a Volatile World

Policy Essay THE FREE-MARKET WELFARE STATE: Preserving Dynamism in a Volatile World Samuel Hammond1 Poverty and Welfare Policy Analyst Niskanen Center May 2018 INTRODUCTION “The perennial gale of creative destruction…” wrote the economist Joseph Schumpeter, “…is the essential fact of capitalism.” For new industries to rise and flourish, old industries must fail. Yet creative destruction is a process that is rarely, if ever, politically neutral; even one-off economic shocks can have lasting political-economic consequences. From his vantage point in 1942, Schumpeter believed that capitalism would become the ultimate victim of its own success, inspiring reactionary and populist movements against its destructive side that would inadvertently strangle any potential for future creativity.2 This paper argues that the countries that have eluded Schumpeter’s dreary prediction have done so by combining free-markets with robust systems [...] welfare state” directly depresses the vote for reactionary political parties.3 Conversely, I argue that the contemporary rise of anti-market populism in America should be taken as an indictment of our inadequate social-insurance system,4 and a refutation of the prevailing “small government” view that regulation and social spending are equally corrosive to economic freedom. The universal welfare state, far from being at odds with innovation and economic freedom, may end up being their ultimate guarantor. The fallout from China’s entry to the World Trade Organization (WTO) in 2001 is a clear case in point. Cheaper imports benefited millions of Americans through lower consumer prices. At the same time, Chinese import competition destroyed nearly two million jobs in manufacturing and associated services,5 a classic case of creative destruction.

820 First St NE #675 Washington, DC 20002 Climate Policy and Litigation

820 First St NE #675 Washington, DC 20002 Climate Policy and Litigation Program Report FY 2018-2019 December 2019 The Niskanen Center’s Climate Policy and Litigation Program Report 2018 through 2019 Over the reporting period, Niskanen’s climate team has achieved significant progress toward each of our targeted intermediate outcomes and laid the groundwork to reach our ultimate objectives. We describe those accomplishments and what we have learned in the following report, and discuss where our strategic outlook has been reinforced and where it has been altered. Our focus remains on turning the Niskanen Center’s climate program into one of the most influential, informative, and innovative in Washington, D.C. When the Niskanen Center opened its doors five years ago, and even when the reporting period for our program initiated two years ago, leading Republicans embraced climate skepticism and were occupied with deconstructing the Obama Administration’s climate agenda. There had not been a bipartisan bill supporting carbon pricing since the failure of Waxman-Markey in 2009. Now, we see Republicans acknowledging the reality of human-caused climate change and seeking solutions of varying ambition. At the highest levels, several Republican members of Congress have introduced carbon tax legislation with prices over $30 per ton of CO2 emissions, which—were they law—would be the most ambitious national climate policy globally. The developments portend further progress in the coming years, as bipartisan groups of legislators can embrace both sectoral and comprehensive reforms. The Niskanen Center has been at the heart of these developments. 
Over the reporting period, Niskanen Center staff have provided policy input and advice for carbon pricing bills that have achieved bipartisan support, been asked for information on climate change and the available responses from formal and informal groups of legislators, and maintained a high volume of public appearances and commentary promoting market-based reforms to achieve a low-carbon economy. -

Congressional Record—Senate S924

S924 CONGRESSIONAL RECORD - SENATE March 1, 2021 unanimous consent that the rules of procedure of the Committee on Appropriations for the 117th Congress be printed in the RECORD. There being no objection, the material was ordered to be printed in the RECORD, as follows: SENATE COMMITTEE ON APPROPRIATIONS COMMITTEE RULES, 117TH CONGRESS I. MEETINGS The Committee will meet at the call of the Chairman. II. QUORUMS 1. Reporting a bill. A majority of the members must be present for the reporting of a bill. [...] in the subcommittee and shall not be counted for purposes of determining a quorum. TRIBUTE TO CHRISTINA NOLAN Mr. LEAHY. Mr. President, I would like to pay tribute to a great Vermonter, Christina Nolan, a most dedicated public servant who has served as U.S. attorney for the District of Vermont since November 2017. She will be resigning her post at the end of this month, 11 years since she first joined the U.S. Attorney’s Office, but her work and the strong partnerships [...] IMPEACHMENT Mr. GRASSLEY. Mr. President, just barely a year ago, I was here making a similar statement. Impeachment is one of the most solemn matters to come before the Senate, but I worry that it’s also becoming a common occurrence. Before getting into the merits of this impeachment, it is important to reiterate that January 6 was a sad and tragic day for America. I hope we can all agree about that. What happened here at the Capitol was completely inexcusable. It was not a demonstration of any of our pro-

2018 Fordham Urban Law Journal's Cooper Walsh Colloquium Remodeling Sanctuary Urban Immigration in a New Era

2018 Fordham Urban Law Journal's Cooper Walsh Colloquium Remodeling Sanctuary Urban Immigration in a New Era NOVEMBER 9, 2018 CLE COURSE MATERIALS Table of Contents 1. Speaker Biographies (view in document) 2. CLE Materials Panel 1: Blocks to Status: Stumbling Blocks & Building Blocks to Urban Immigration Kang, Alex. Loosening the Federal Grip on Immigration Policy. (View in document) Johnson, Kit. Opportunities & Anxieties: A study of International Students in the Trump Era. (View in document) Panel 2: Cities as Havens: The Evolution of Sanctuary Policies Kwon, Christine; Roy, Marissa. Sanctuary Cities: Distinguishing Rhetoric From Reality. (View in document) Kwon, Christine; Roy, Marissa. Local Action, National Impact: Standing Up For Sanctuary Cities (View in document) Pham, Huyen. State-Created Immigration Climates and Domestic Migration. (View in document) Panel 3: The Balancing Act: Immigration & Due Process Peleg, Talia. Detaining Immigrants Indefinitely is Un-American. Shame on the Supreme Court. (View in document) Benner, Katie; Savage, Charlie. Due Process for Undocumented Immigrants, Explained. (View in document) Heinz, Joanna. Pardoning Immigrants. (View in document) Panel 4: Urban Rebellion: Immigration & City Organizing Gjecovi, Sibora; James, Esther; Chenoweth, Jeff. Immigrant-Led Organizers in Their Own Voices: Local Realities and Shared Visions. (View in document) Zachary Ahmad Zachary Ahmad is a policy counsel at the New York Civil Liberties Union (NYCLU), the New York affiliate of the ACLU. He works largely on legislative and policy issues related to immigration, with a focus on efforts to [...] Director at the University of Georgia, School of Law. Before coming to UGA, he served as an acting assistant professor at the New York University School of Law, where he taught in the Lawyering Program from 2010 to 2013 and assisted in the Immigrant Rights Clinic.

The Politicization and Polarization of Climate Change

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2021 The Politicization and Polarization of Climate Change Williamson Grassle Follow this and additional works at: https://scholarship.claremont.edu/cmc_theses Part of the American Politics Commons, Environmental Law Commons, Environmental Studies Commons, and the Legislation Commons Recommended Citation Grassle, Williamson, "The Politicization and Polarization of Climate Change" (2021). CMC Senior Theses. 2663. https://scholarship.claremont.edu/cmc_theses/2663 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. Claremont McKenna College The Politicization and Polarization of Climate Change Submitted to Professor John J. Pitney, Jr. By Williamson Grassle For Senior Thesis Spring 2021 May 3rd 1 Table of Contents TITLE……………………………………………………………………………………..1 ACKNOWLEDGEMENTS……………………………………………………………….3 ABSTRACT………………………………………………………………………………4 INTRODUCTION………………………………………………………………………...5 CHAPTER 1 – LATE 20TH, EARLY 21ST CENTURY………………………………....12 CHAPTER 2 – RECENT………………………………………………………………...24 CHAPTER 3 – FUTURE………………………………………………………………...39 WORK CITED…………………………………………………………………………...52 2 Acknowledgements I would like to thank Professor John J. Pitney for his guidance and support on this thesis. Throughout my time at Claremont McKenna, you have helped foster my passion for politics and define my interest in environmental policy. Without your guidance and expertise, I would not have been able to complete this project. 3 Abstract In the mid to late 20th-century, climate change and other environmental issues were addressed on a bipartisan basis, with Republican politicians like President Richard Nixon and George H.W. Bush supporting and advancing measures to combat climate change. 
However, since the 1990s, climate change has become increasingly polarized, with significant polarization in the last decade. -

Large Corporations Contributed to Our Political Polarization Here’s How They Can Fix It

Policy Essay Large Corporations Contributed to Our Political Polarization Here’s How They Can Fix It Mark S. Mizruchi University of Michigan October 2020 Key Takeaways ► The U.S. has faced many difficulties in the past century, and our political system generally has risen to the challenge of addressing the problems. Today, however, the U.S. seems unable to overcome its extremism and gridlock. ► One difference that has contributed to extremism on the right: a decline in leadership by American corporate elite. ► The past decades have seen a notable shift, as American corporations have gone from embracing moderate, bipartisan solutions to aligning themselves with the far right. ► Today’s problems may seem more solvable if the corporate elite reject short-term solutions and return to the model of leadership shown in the past. The Niskanen Center is a 501(c)3 issue advocacy organization that works to change public policy through direct engagement in the policymaking process. NISKANEN CENTER | 820 FIRST ST. NE, SUITE 675 | WASHINGTON, D.C. 20002 www.niskanencenter.org | For inquiries, please contact [email protected] Large Corporations Contributed to Our Political Polarization October 2020 Contents Introduction 3 The Postwar Period 5 The Postwar Moderate Consensus 6 Sources of the Moderate Postwar Consensus 9 The Decline of the Postwar Consensus 11 The Decline of the Corporate Elite 12 Where We Are, and Why 15 What Is to Be Done? 17 Conclusion 19 About the Author 21 NISKANEN CENTER 2 Large Corporations Contributed to Our Political Polarization October 2020 Introduction The American political system is broken. The fact that we have problems in our society is not new. -

Southern California Public Radio- FCC Quarterly Programming Report July 1- September 30,2016 KPCC-KUOR-KJAI-KVLA-K227BX-K210AD

Southern California Public Radio- FCC Quarterly Programming Report July 1- September 30,2016 KPCC-KUOR-KJAI-KVLA-K227BX-K210AD
7/1/2016
START TIME | DURATION | ISSUE | TITLE AND NARRATIVE
9:07 | 9:00 | Foreign News | Take Two: Border Patrol: Yesterday, for the first time, the US Border patrol released the conclusions of that panel's investigations into four deadly shootings. Libby Denkmann spoke with LA Times national security correspondent, Brian Bennett, for more.
9:16 | 7:00 | Foreign News | Take Two: Social Media Accounts: A proposal floated by US Customs and Border Control would ask people to voluntarily tell border agents everything about their social media accounts and screen names. Russell Brandom, reporter for The Verge, spoke to Libby Denkmann about it.
9:23 | 8:00 | Law & Order/Courts/Police | Take Two: Use of Force: One year ago, the LAPD began training officers to use de-escalation techniques. How are they working out? Maria Haberfeld, professor of police science at John Jay College of Criminal Justice, spoke to A Martinez about it.
9:34 | 4:10 | Orange County | Take Two: OC Refugee dinner: After 16 hours without food and water, one refugee family will break their Ramadan fast with mostly strangers. They are living in Orange County after years of going through the refugee process to enter the United States. Nuran Alteir reports.
9:38 | 7:00 | Sports | Take Two: Road to Rio: A Martinez speaks with Desiree Linden, who will be running the women's marathon event for the US in this year's Olympics.
9:45 | 6:10 | Arts And Culture | Take Two: LA's best Hot dog: We here at Take Two were curious to know: what’s our listeners' favorite LA hot dog? They tweeted and facebooked us with their most adored dogs, and Producers Francine Rios, Lori Galarreta and host Libby Denkmann hit the town for a Take Two taste test.

Sexual Harassment and Corporate Law

University of Chicago Law School Chicago Unbound Journal Articles Faculty Scholarship 2018 Sexual Harassment and Corporate Law Daniel Hemel Dorothy Shapiro Lund Follow this and additional works at: https://chicagounbound.uchicago.edu/journal_articles Part of the Law Commons Recommended Citation Daniel Hemel & Dorothy Shapiro Lund, "Sexual Harassment and Corporate Law," 118 Columbia Law Review 1583 (2018). This Article is brought to you for free and open access by the Faculty Scholarship at Chicago Unbound. It has been accepted for inclusion in Journal Articles by an authorized administrator of Chicago Unbound. For more information, please contact [email protected]. COLUMBIA LAW REVIEW VOL. 118 OCTOBER 2018 NO. 6 ARTICLES SEXUAL HARASSMENT AND CORPORATE LAW Daniel Hemel * & Dorothy S. Lund ** The #MeToo movement has shaken corporate America in recent months, leading to the departures of several high-profile executives as well as sharp stock price declines at a number of firms. Investors have taken notice and taken action: Shareholders at more than a half dozen publicly traded companies have filed lawsuits since the start of 2017 alleging that corporate fiduciaries breached state law duties or violated federal securities laws in connection with sexual harassment scandals. Additional suits are likely in the coming months. This Article examines the role of corporate and securities law in regulating and remedying workplace sexual misconduct. We specify the conditions under which corporate fiduciaries can be held liable under state law for perpetrating sexual misconduct or allowing it to occur. We also discuss the circumstances under which federal securities law requires issuers to disclose allegations against top executives and to reveal settlements of sexual misconduct claims. -

FBI–Apple Encryption Dispute - Wikipedia, the Free Encyclopedia 6/2/16, 6:59 AM

FBI–Apple encryption dispute - Wikipedia, the free encyclopedia 6/2/16, 6:59 AM FBI–Apple encryption dispute From Wikipedia, the free encyclopedia The FBI–Apple encryption dispute concerns whether and to what extent courts in the United States can compel manufacturers to assist in unlocking cell phones whose contents are cryptographically protected.[1] There is much debate over public access to strong encryption.[2] In 2015 and 2016, Apple Inc. received and objected to or challenged at least 11 orders issued by United States district courts under the All Writs Act of 1789. Most of these seek to compel Apple "to use its existing capabilities to extract data like contacts, photos and calls from locked iPhones running on operating systems iOS 7 and older" in order to assist in criminal investigations and prosecutions. A few requests, however, involve phones with more extensive security protections, which Apple has no current ability to break. These orders would compel Apple to write new software that would let the government bypass these devices' security and unlock the phones.[3] The most well-known instance of the latter category was a February 2016 court case in the United States District Court for the Central District of California. The FBI wanted Apple to create and electronically sign new software that would enable the FBI to unlock a work-issued iPhone 5C it recovered from one of the shooters in a December 2015 terrorist attack in San Bernardino, California, that killed 14 people and injured 22. [Image caption: An iPhone 5C, the model used by one of the perpetrators of the 2015 San Bernardino attack]

Annual Report 2018-09/2019

Changing the Conversation ANNUAL REPORT 2018-09/2019 The Niskanen Center is in the policy change business. But so are a lot of people. Unlike many of them, we embrace evidence-based theories of policy change and marry theory with practice. As this annual report demonstrates, this approach has served us well. Think tank work is hard for many people to wrap their minds around. At Niskanen, it’s quite straightforward. We produce gold-standard policy analyses, use those analyses to better educate legislators and staff, and help them translate good insights and ideas into politically compelling legislative initiatives. All the while, we work closely within the governing networks of Washington. We mobilize support for our proposals, build transpartisan advocacy coalitions to advance them, and make the case for our ideas in the most influential media outlets in the country. [...] The second reason that we work with both parties is that bipartisan support is necessary to achieve consequential reform in Washington. Attempting to govern with just the votes of a single party is a recipe for political failure, partisan gridlock, and policy inaction. Ideologically-driven partisans have crashed on the shoals of this reality over and over again. The outer bounds of what is politically possible are largely dictated by the degree to which causes can find meaningful support on both sides of the aisle. Hence, the Niskanen Center is nonpartisan in both form and function. We raise our banner, forward our causes, and welcome all who flock to our side. As you’ll see in these pages, our call for “radical moderation” has captured the imagination of public intellectuals and political elites who are

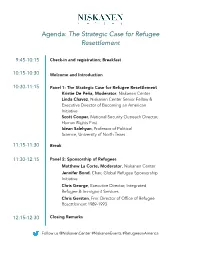

Refugee Event Program

Agenda: The Strategic Case for Refugee Resettlement
9:45-10:15 Check-in and registration; Breakfast
10:15-10:30 Welcome and Introduction
10:30-11:15 Panel 1: The Strategic Case for Refugee Resettlement
Kristie De Peña, Moderator, Niskanen Center
Linda Chavez, Niskanen Center Senior Fellow & Executive Director of Becoming an American Initiative
Scott Cooper, National Security Outreach Director, Human Rights First
Idean Salehyan, Professor of Political Science, University of North Texas
11:15-11:30 Break
11:30-12:15 Panel 2: Sponsorship of Refugees
Matthew La Corte, Moderator, Niskanen Center
Jennifer Bond, Chair, Global Refugee Sponsorship Initiative
Chris George, Executive Director, Integrated Refugee & Immigrant Services
Chris Gersten, Fmr. Director of Office of Refugee Resettlement 1989-1993
12:15-12:30 Closing Remarks
Follow us @NiskanenCenter #NiskanenEvents #RefugeesinAmerica
Speakers
Jennifer Bond holds degrees in law, literature, and business and has been called to the Bars of Ontario and British Columbia. She completed her graduate work at the Yale Law School as a John Peters Humphrey Fellow in International Human Rights Law. She is currently serving as Chair of the Global Refugee Sponsorship Initiative and is Founder and Managing Director of the University of Ottawa Refugee Hub. In 2015/16, Professor Bond served on a full-time basis as Special Advisor to Canada’s Minister of Immigration, Refugees, and Citizenship on Canada’s Syrian Refugee Initiative, a project which engaged over two million Canadians and brought over 40,000 refugees to Canada in under four months. Professor Bond has also provided strategic advice to Canada’s Ambassador to the United Nations and the United Nations Refugee Agency (UNHCR).