Creative Coding for Audiovisual Art: the Codecircle Platform

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

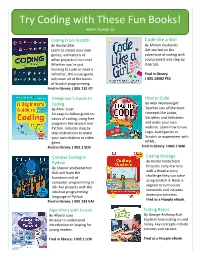

Try Coding with These Fun Books!

Try Coding with These Fun Books! www.mywpl.ca

Coding From Scratch, by Rachel Ziter. Learn to create your own games, animations or other projects in no time! Whether you’re just learning to code or need a refresher, this visual guide will cover all of the basics of Scratch programming. Find in library: J 005.133 ZIT

Code like a Girl, by Miriam Peskowitz. Get started on the adventure of coding with cool projects and step by step tips. Find in library: J 005.13082 PES

A Beginner’s Guide to Coding, by Marc Scott. An easy-to-follow guide to basics of coding, using free programs like Scratch and Python. Includes step by step instructions to make your own chatbot or video game. Find in library: J 005.1 SCO

How to Code, by Max Wainewright. Teaches you all the basic concepts like Loops, Variables and Selections and make your own website. Learn how to use Lego, build games in Scratch or experiment with HTML. Find in library: J 000.1 WAI

Creative Coding in Python, by Sheena Vaidyanathan. Kids will learn the fundamentals of computer programming in 30+ fun projects with the intuitive programming language of Python. Find in library: J 005.133 VAI

Coding Onstage, by Kristin Fontichiaro. Presents early learners with a theatre story challenge they can solve using Scratch 3. Book is aligned to curriculum standards and includes extension activities. Find as a Hoopla eBook.

Algorithms with Frozen, by Allyssa Loya. An easy to understand introduction to looping for young readers.

Coding Basics, by George Anthony Kulz. Explains how coding is used today, key concepts include

Code-Bending: a New Creative Coding Practice

Code-bending: a new creative coding practice. Ilias Bergstrom (1), R. Beau Lotto (2). (1) EventLAB, Universitat de Barcelona, Campus de Mundet - Edifici Teatre, Passeig de la Vall d'Hebron 171, 08035 Barcelona, Spain. Email: [email protected], [email protected] (2) Lottolab Studio, University College London, 11-43 Bath Street, University College London, London, EC1V 9EL. Email: [email protected] Key-words: creative coding, programming as art, new media, parameter mapping.

Abstract. Creative coding, artistic creation through the medium of program instructions, is constantly gaining traction, with a steady stream of new resources emerging to support it. The question of how creative coding is carried out, however, still deserves increased attention: in what ways may the act of program development be rendered conducive to artistic creativity? As one possible answer to this question, we here present and discuss a new creative coding practice, that of Code-Bending, alongside examples and considerations regarding its application.

Introduction. With the creative coding movement, artists have transcended the dependence on using only pre-existing software for creating computer art. In this ever-expanding body of work, the medium is programming itself, where the piece of Software Art is not made using a program; instead it is the program [1]. Its composing material is not paint on paper or collections of pixels, but program instructions. From being an uncommon practice, creative coding is today increasingly gaining traction. Schools of visual art, music, design and architecture teach courses on creatively harnessing the medium. The number of programming environments designed to make creative coding approachable by practitioners without formal software engineering training has expanded, reflecting creative coding’s widened user base.

Prioritizing Pull Requests

Prioritizing pull requests Version of June 17, 2015 Erik van der Veen

THESIS submitted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE in COMPUTER SCIENCE by Erik van der Veen born in Voorburg, the Netherlands

Software Engineering Research Group, Department of Software Technology, Faculty EEMCS, Delft University of Technology, Delft, the Netherlands, www.ewi.tudelft.nl

Q42, Waldorpstraat 17F, 2521 CA The Hague, the Netherlands, www.q42.com

© 2014 Erik van der Veen. Cover picture: Finding the pull request that needs the most attention.

Author: Erik van der Veen Student id: 1509381 Email: [email protected]

Abstract Previous work showed that in the pull-based development model integrators face challenges with regard to prioritizing work in the face of multiple concurrent pull requests. We identified the manual prioritization heuristics applied by integrators and extracted features from these heuristics. The features are used to train a machine learning model, which is capable of predicting a pull request’s importance. The importance is then used to create a prioritized order of the pull requests. Our main contribution is the design and initial implementation of a prototype service, called PRioritizer, which automatically prioritizes pull requests. The service works like a priority inbox for pull requests, recommending the top pull requests the project owner should focus on. It keeps the pull request list up-to-date when pull requests are merged or closed. In addition, the service provides functionality that GitHub is currently lacking. We implemented pairwise pull request conflict detection and several new filter and sorting options e.g.
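The score-then-sort idea in the abstract above (features feed a model that predicts importance, and importance determines the order) can be illustrated with a minimal Python sketch. Everything specific here is an assumption made for illustration: the three features, the `PullRequest` class, and the hand-set `WEIGHTS` standing in for a trained model are hypothetical and are not taken from the thesis or from PRioritizer.

```python
import math
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical features; the thesis derives its own from integrator heuristics.
    number: int
    age_days: float
    comments: int
    has_conflicts: bool


# Hand-set weights standing in for the parameters of a trained model (assumption).
WEIGHTS = {"age_days": -0.05, "comments": 0.3, "has_conflicts": -1.0}
BIAS = 0.0


def importance(pr: PullRequest) -> float:
    """Return a score in (0, 1) using a logistic function over the features."""
    z = (
        BIAS
        + WEIGHTS["age_days"] * pr.age_days
        + WEIGHTS["comments"] * pr.comments
        + WEIGHTS["has_conflicts"] * float(pr.has_conflicts)
    )
    return 1.0 / (1.0 + math.exp(-z))


def prioritize(prs: list[PullRequest]) -> list[PullRequest]:
    """Order pull requests from most to least important."""
    return sorted(prs, key=importance, reverse=True)


open_prs = [
    PullRequest(number=1, age_days=10, comments=0, has_conflicts=True),
    PullRequest(number=2, age_days=1, comments=5, has_conflicts=False),
    PullRequest(number=3, age_days=3, comments=1, has_conflicts=False),
]
ranked = prioritize(open_prs)
print([pr.number for pr in ranked])  # prints [2, 3, 1]
```

In a real system the weights would come from training on labelled pull requests rather than being written by hand; the sorting step would stay the same.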

Creating Visuals in Processing (Josh Miller)

CREATING VISUALS IN PROCESSING JOSH MILLER [email protected] www.josh-miller.com

WHAT IS PROCESSING? • Free software: processing.org • Old... 2001 old, but constantly updated • Started at MIT • Created to teach Comp Sci, embraced by artist nerds

WHAT IS IT? • A Java-like programming language that makes Java apps...

JAVA??!? • Isn’t that just for malware and exploits?

PROCESSING 2.0 • Android • “Javascript” HTML5 canvas tag • processingjs.org

WHAT DOES IT DO • Text feltron report • http://tweetping.net/ • joshuadavis.com

GENERATIVE ART • Art created by a set of rules. • Algorithmic art?

CONNECTIONS • libraries • sound, video, animation, visualization, 3d, interface • http://processing.org/reference/libraries/ • hardware • kinect, arduino, sensors, cameras, serial devices, network, tablets, many screens

COMPETITORS • openFrameworks - www.openframeworks.cc • cinder - http://libcinder.org/ • max/msp - http://cycling74.com/ • vvvv - http://vvvv.org/

LEARNING PROCESSING • http://processing.org/learning/ • http://processing.org/reference/ • Books: • Learning Processing & The Nature of Code, Dan Shiffman • Processing: A Programming Handbook for Visual Designers and Artists, Reas & Fry

INTRO
void setup() {
  // runs once
}
void draw() {
  // runs 30fps... unless you tell it not to
}

DEMOS! • get excited.

WHAT ELSE? • Anything you can do in Java you can do in Processing • libraries • syntax (oop, inheritance, etc)

LEARN MORE • THE EXAMPLES MENU! • processing.org • creativeapplications.net

PLUG • Teaching a processing course (“Creative Coding”) @ Kutztown this summer • M-T 1-5pm for all of June.

CS 413 Ray Tracing and Vector Graphics

Welcome to CS 413 Ray Tracing and Vector Graphics Instructor: Joel Castellanos e-mail: [email protected] Web: http://cs.unm.edu/~joel/ Farris Engineering Center: 2110 2/5/2019

Course Resources Textbook: Ray Tracing from the Ground Up by Kevin Suffern Blackboard Learn: https://learn.unm.edu/ ◼ Assignment Drop-box ◼ Discussions ◼ Grades Class website: http://cs.unm.edu/~joel/cs413/ ◼ Syllabus ◼ Projects ◼ Lecture Notes ◼ Readings Assignments ◼ Source Code

Structure of the Course ◼ Studio: Each of you will, by the end of the semester, build a single, well featured ray tracer / procedural texture renderer. ◼ Stages of this project total 70% of course grade. ◼ No exams ◼ Class time (30% of course grade): ◼ Lecture ◼ Discussion of Reading ◼ Quizzes ◼ Show & Tell ◼ 3 Code reviews

Language and Platform ◼ The textbook examples are all in C++: ◼ STL (Standard Template Library – this is multiplatform). ◼ wxWidgets application framework (Windows and Linux). ◼ Class examples replace wxWidgets with OpenFrameworks. ◼ Decide on an IDE. If you are using Windows, I recommend MS Visual Studio as it is an industry standard and many employers will expect you to be comfortable with it. For Linux, there are a number of good options including: Eclipse C++, Netbeans for C/C++ Development, Code::Blocks, Sublime Text editor and others. ◼ Your project must be in an on-going repo in GitHub. This can be public or private. Building a good GitHub repo to show prospective employers is essential to a young computer scientist in the current job market.

Assignment 1 ◼ For Monday, Jan 29: ▪ Read Chapters 1, 2 (skim and reference later) and 3.

Creative Coding and Visual Portfolios for CS1

Bryn Mawr College Scholarship, Research, and Creative Work at Bryn Mawr College Computer Science Faculty Research and Computer Science Scholarship 2012 Creative Coding and Visual Portfolios for CS1 Ira Greenberg Deepak Kumar Bryn Mawr College, [email protected] Dianna Xu Bryn Mawr College, [email protected] Let us know how access to this document benefits you. Follow this and additional works at: http://repository.brynmawr.edu/compsci_pubs Part of the Computer Sciences Commons

Custom Citation Greenberg, I. et al. 2012. Creative coding and visual portfolios for CS1, Proceedings of the 43rd ACM technical symposium on Computer Science Education, February 29-March 03, 2012, Raleigh, North Carolina: 247-252. This paper is posted at Scholarship, Research, and Creative Work at Bryn Mawr College. http://repository.brynmawr.edu/compsci_pubs/59 For more information, please contact [email protected].

Creative Coding and Visual Portfolios for CS1

Ira Greenberg, Center of Creative Computation, Dept. of Comp. Sci. & Engineering, Southern Methodist University, Dallas, TX (USA), [email protected]

Deepak Kumar, Department of Computer Science, Bryn Mawr College, Bryn Mawr, PA (USA), (1) 610-526-7485, [email protected]

Dianna Xu, Department of Computer Science, Bryn Mawr College, Bryn Mawr, PA (USA), (1) 610-526-6502, [email protected]

ABSTRACT In this paper, we present the design and development of a new approach to teaching the college-level introductory computing course (CS1) using the context of art and creative coding.

Notable efforts include media computation [Guzdial 2004], robots [Summet et al 2009], games/animation [Moskal et al 2004, Bayless & Stout 2006], and music [Beck et al 2011]. Arguments

Database Music: A History, Technology, and Aesthetics of the Database in Music Composition

Database Music: A History, Technology, and Aesthetics of the Database in Music Composition by Federico Nicolás Cámara Halac. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Music, New York University, May, 2019. Jaime Oliver La Rosa. © Federico Nicolás Cámara Halac, All Rights Reserved, 2019

Dedication For my mother and father, who have always taught me to never give up with my research, even during the most difficult times. Also to my advisor, Jaime Oliver La Rosa, without his help and continuous guidance, this would have never been possible. For Elizabeth Hoffman and Judy Klein, who always believed in me, and whose words and music I bring everywhere. Finally to Aye, whose love I cannot even begin to describe.

Acknowledgements I would like to thank my advisor, Jaime Oliver La Rosa, for his role in inspiring this project, as well as his commitment to research, clarity, and academic rigor. I am also indebted to committee members Martin Daughtry and Elizabeth Hoffman, for their ongoing guidance and support even at the very early stages of this project, and William Brent and Robert Rowe, whose insightful, thought-provoking input made this dissertation come to fruition. I am also everlastingly grateful to Judy Klein, for always being available to listen and share her listening. As well as to Aye, for her endless support and her helping me maintain hope in developing this project. I would also like to thank my parents, Ana and Hector, who inspired and nurtured my interest in music from a young age, and my sister Flor and my brother Joaquin who were always with me, next to every word.

Visual Programming and Creative Code: a Maker Perspective in Software Studies

Visual Programming and Creative Code: A Maker Perspective in Software Studies. Master thesis, MA Mediastudies, New Media & Digital Culture, Utrecht University, Netherlands. Jelke de Boer, 3884023

Abstract If software is the cornerstone of (post) modern society then it is the algorithm that serves as the primary tool of the information age. The field of software studies investigates how software affects culture and society. The initial efforts have contributed to the understanding of the “nature” of code and widespread ‘popular’ or ‘mainstream’ productivity software. This thesis shifts attention to visual programming, a popular tool in artistic performance, interactive installations and electronic music production. How can visual programming be understood as an artistic tool? As an overarching theme three concepts will be addressed: Materiality, Agency and Sociality. Building upon actor network theory it will be argued that, as a tool, visual programming should be understood as an act of creative coding that attempts to make hardware performative. Furthermore, it will also be argued that aside from the arts, engineering and design, a new tradition of creators has emerged from maker culture.

Keywords Software studies, Visual Programming, Artistic Tools, Creative Code, Agency, Performance, DIY, Maker Culture, Actor Network Theory, Design Fiction.

Acknowledgments The list of people that somehow deserve to be mentioned for their support is long, including a network of people that covers all three major educational institutions in the Utrecht area: Utrecht University, the University of Applied Sciences Utrecht and the University of the Arts Utrecht. The Utrecht area offers a wonderful climate for students, lecturers and artists, three roles that I have tried to combine following the New Media & Digital Culture program.

Programming Interactivity

Programming Interactivity: A Designer’s Guide to Processing, Arduino, and openFrameworks. Joshua Noble. Beijing • Cambridge • Farnham • Köln • Sebastopol • Taipei • Tokyo

Programming Interactivity by Joshua Noble. Copyright © 2009 Joshua Noble. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected].

Editor: Steve Weiss. Production Editor: Sumita Mukherji. Copyeditor: Kim Wimpsett. Proofreader: Sumita Mukherji. Production Services: Newgen. Indexer: Ellen Troutman Zaig. Cover Designer: Karen Montgomery. Interior Designer: David Futato. Illustrator: Robert Romano.

Printing History: July 2009: First Edition.

Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Programming Interactivity, the image of guinea fowl, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. This book uses RepKover™, a durable and flexible lay-flat binding.

Code Bending: a New Creative Coding Practice

General article. Code Bending: A New Creative Coding Practice. Ilias Bergstrom and R. Beau Lotto

ABSTRACT Creative coding, or artistic creation through the medium of program instructions, is constantly gaining traction, and there is a steady stream of new resources emerging to support it. However, the question of how creative coding is carried out still deserves more attention. In what ways may the act of program development be rendered conducive to artistic creativity? As one possible answer to this question, the authors present and discuss a new creative coding practice, that of code bending, alongside examples and considerations regarding its applications.

With the creative coding movement, artists have transcended the dependence on using only pre-existing software for creating computer art.

... include sketching, oil painting, creating pieces from found objects and live painting. Note the notions of art practice and of technique overlap somewhat: A painter may regard applying oil paint with a palette knife instead of a paintbrush as employing a new technique. A painter in his studio versus a live painter in front of an audience may on the other hand employ the same techniques, but engage in different practices. While programming has been established as a medium for art, there is still much room for analogous discussion on how creative coding practice may be carried out. In what ways may software development, largely established as

Interdisciplinary Research As Musical Experimentation: a Case Study in Musicianly Approaches to Sound Corpora

Proceedings of the Electroacoustic Music Studies Network Conference, Florence (Italy), June 20-23, 2018. www.ems-network.org

Owen Green, Pierre Alexandre Tremblay, Gerard Roma. Interdisciplinary Research as Musical Experimentation: A case study in musicianly approaches to sound corpora. Centre for Research into New Music (CeReNeM), University of Huddersfield, UK. {o.green, p.a.tremblay, g.roma}@hud.ac.uk

Abstract Can a commitment to musical pluralism be embedded as a value in musical technologies? This question has come to structure part of our work during the early stages of a five-year project investigating techniques and tools for ‘Fluid Corpus Manipulation’ (FluCoMa). In this paper we frame our thinking about this by considering interdisciplinarity in Electroacoustic Music Studies, before proceeding to apply our thoughts on this to our specific project in terms of practice-led design. We end with questions as seeds for future discussion, rather than categorical findings.

Introduction The authors are currently in the early stages of a five-year project concerned with the general topic of making music using collections of recorded sound (corpora, in a broad sense) in creative coding environments (Max, Supercollider, PD). The project aims to animate musical and technical research around this topic by developing new tools and learning resources, and by seeding a community of interest (Fischer 2009), made up of diverse researchers and practitioners. We will develop extensions for creative coding environments that enable techno-fluent musicians to explore and develop new techniques for constructing and manipulating corpora of recordings, and that seek to prioritise divergent, open-ended engagement.

CJing: Combining Live Coding and VJing for Live Visual Performance

CJing: Combining Live Coding and VJing for Live Visual Performance by Jack Voldemars Purvis. A thesis submitted to the Victoria University of Wellington in fulfilment of the requirements for the degree of Master of Science in Computer Graphics. Victoria University of Wellington 2019

Abstract Live coding focuses on improvising content by coding in textual interfaces, but this reliance on low level text editing impairs usability by not allowing for high level manipulation of content. VJing focuses on remixing existing content with graphical user interfaces and hardware controllers, but this focus on high level manipulation does not allow for fine-grained control where content can be improvised from scratch or manipulated at a low level. This thesis proposes the code jockey practice (CJing), a new hybrid practice that combines aspects of live coding and VJing practice. In CJing, a performer known as a code jockey (CJ) interacts with code, graphical user interfaces and hardware controllers to create or manipulate real-time visuals. CJing harnesses the strengths of live coding and VJing to enable flexible performances where content can be controlled at both low and high levels. Live coding provides fine-grained control where content can be improvised from scratch or manipulated at a low level while VJing provides high level manipulation where content can be organised, remixed and interacted with. To illustrate CJing, this thesis contributes Visor, a new environment for live visual performance that embodies the practice. Visor's design is based on key ideas of CJing and a study of live coders and VJs in practice.