Programming Interactivity.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

English Version

Deloitte Perspective, 2018 (Volume VII). Can the Punch Bowl Really Be Taken Away? The Future of Shared Mobility in China. Gradual Reform Across the Value Chain. About Deloitte Global: Deloitte refers to one or more of Deloitte Touche Tohmatsu Limited, a UK private company limited by guarantee (“DTTL”), its network of member firms, and their related entities. DTTL and each of its member firms are legally separate and independent entities. DTTL (also referred to as “Deloitte Global”) does not provide services to clients. Please see www.deloitte.com/about to learn more about our global network of member firms. Deloitte provides audit & assurance, consulting, financial advisory, risk advisory, tax and related services to public and private clients spanning multiple industries. Deloitte serves nearly 80 percent of the Fortune Global 500® companies through a globally connected network of member firms in more than 150 countries and territories bringing world-class capabilities, insights, and high-quality service to address clients’ most complex business challenges. To learn more about how Deloitte’s approximately 263,900 professionals make an impact that matters, please connect with us on Facebook, LinkedIn, or Twitter. About Deloitte China: The Deloitte brand first came to China in 1917 when a Deloitte office was opened in Shanghai. Now the Deloitte China network of firms, backed by the global Deloitte network, delivers a full range of audit & assurance, consulting, financial advisory, risk advisory and tax services to local, multinational and growth enterprise clients in China. We have considerable experience in China and have been a significant contributor to the development of China's accounting standards, taxation system and local professional accountants. -

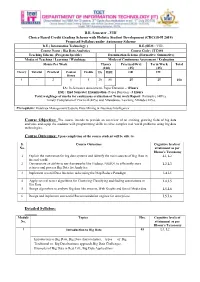

(CBCGS-H 2019) Proposed Syllabus Under Autonomy Scheme

B.E. Semester VIII, Choice Based Credit Grading Scheme with Holistic Student Development (CBCGS-H 2019), Proposed Syllabus under Autonomy Scheme. B.E. (Information Technology), B.E. (SEM: VIII). Course Name: Big Data Analytics. Course Code: ITC801.

Teaching Scheme (Program Specific), Modes of Teaching / Learning / Weightage, hours per week: Theory 4, Tutorial -, Practical 2, Contact Hours 6, Credits 5.

Examination Scheme (Formative/Summative), Modes of Continuous Assessment / Evaluation: Theory (100): IA 20, ESE 80; Practical/Oral (25): OR 25; Term Work (25): TW 25; Total 150. IA: In-Semester Assessment, paper duration 1 hour. ESE: End Semester Examination, paper duration 3 hours. Total weightage of marks for continuous evaluation of Term Work/Report: Formative (40%), Timely Completion of Practical (40%) and Attendance/Learning Attitude (20%).

Prerequisite: Database Management System, Data Mining & Business Intelligence.

Course Objective: The course intends to provide an overview of the exciting, growing field of big data analytics and to equip students with the programming skills to solve complex real-world problems using big data technologies.

Course Outcomes (with cognitive levels of attainment as per Bloom’s Taxonomy): Upon completion of the course, the student will be able to:

1. Explain the motivation for big data systems and identify the main sources of Big Data in the real world. (L1, L2)
2. Demonstrate an ability to use frameworks like Hadoop and NoSQL to efficiently store, retrieve, and process Big Data for analytics. (L2, L3)
3. Implement several data-intensive tasks using the MapReduce paradigm. (L4, L5)
4. Apply several newer algorithms for clustering, classifying, and finding associations in Big Data. (L4, L5)
5. Design algorithms to analyze Big Data such as streams, Web graphs, and social media data. -

Redefine Work: the Untapped Opportunity for Expanding Value

A report from the Deloitte Center for the Edge Redefine work The untapped opportunity for expanding value Deloitte Consulting LLP’s Human Capital practice focuses on optimizing and sustaining organiza- tional performance through their most important asset: their workforce. We do this by advising our clients on a core set of issues including: how to successfully navigate the transition to the future of work; how to create the “simply irresistible” experience; how to activate the digital orga- nization by instilling a digital mindset—and optimizing their human capital balance sheet, often the largest part of the overall P&L. The practice’s work centers on transforming the organization, workforce, and HR function through a comprehensive set of services, products, and world-class Bersin research enabled by our proprietary Human Capital Platform. The untapped opportunity for expanding value Contents Introduction: Opportunity awaits—down a different path | 2 A broader view of value | 4 Redefining work everywhere: What does the future of human work look like? | 6 Breaking from routine: Work that draws on human capabilities | 11 Focusing on value for others | 18 Making work context-specific | 21 Giving and exercising latitude | 25 How do we get started? | 31 Endnotes | 34 1 Redefine work Introduction: Opportunity awaits—down a different path Underneath the understandable anxiety about the future of work lies a significant missed opportunity. That opportunity is to return to the most basic question of all: What is work? If we come up with a creative answer to that, we have the potential to create significant new value for the enterprise. -

Intel® Galileo Software Package Version: 1.0.2 for Arduino IDE V1.5.3 Release Notes

Intel® Galileo Software Package Version: 1.0.2 for Arduino IDE v1.5.3 Release Notes 23 June 2014 Order Number: 328686-006US Intel® Galileo Software Release Notes 1 Introduction This document describes extensions and deviations from the functionality described in the Intel® Galileo Board Getting Started Guide, available at: www.intel.com/support/go/galileo This software release supports the following hardware and software: • Intel® Galileo Customer Reference Board (CRB), Fab D with blue PCB • Intel® Galileo (Gen 2) Customer Reference Board (CRB), Gen 2 marking • Intel® Galileo software v1.0.2 for Arduino Integrated Development Environment (IDE) v1.5.3 Note: This release uses a special version of the Arduino IDE. The first thing you must do is download it from the Intel website below and update the SPI flash on the board. Features in this release are described in Section 1.4. Release 1.0.2 adds support for the 2nd generation Intel® Galileo board, also called Intel® Galileo Gen 2. In this document, for convenience: • Software and software package are used as generic terms for the IDE software that runs on both Intel® Galileo and Galileo (Gen 2) boards. • Board is used as a generic term when either Intel® Galileo or Galileo (Gen 2) boards can be used. If the instructions are board-specific, the exact model is identified. 1.1 Downloading the software release Download the latest Arduino IDE and firmware files here: https://communities.intel.com/community/makers/drivers This release contains multiple zip files, including: • Operating system-specific IDE packages, contain automatic SPI flash update: − Intel_Galileo_Arduino_SW_1.5.3_on_Linux32bit_v1.0.2.tgz (73.9 MB) − Intel_Galileo_Arduino_SW_1.5.3_on_Linux64bit_v1.0.2.tgz (75.2 MB) − Intel_Galileo_Arduino_SW_1.5.3_on_MacOSX_v1.0.2.zip (56.0 MB) − Intel_Galileo_Arduino_SW_1.5.3_on_Windows_v1.0.2.zip (106.9 MB) • (Mandatory for WiFi) Files for booting board from SD card. -

Installation Pieter P

Installation Pieter P Important: These are the installation instructions for developers, if you just want to use the library, please see these installation instructions for users. I strongly recommend using a Linux system for development. I'm using Ubuntu, so the installation instructions will focus mainly on this distribution, but it should work fine on others as well. If you're using Windows, my most sincere condolences. Installing the compiler, GNU Make, CMake and Git For development, I use GCC 11, because it's the latest version, and its error messages and warnings are better than previous versions. On Ubuntu, GCC 11 can be installed through the ppa:ubuntu-toolchain-r/test repository: $ sudo add-apt-repository ppa:ubuntu-toolchain-r/test $ sudo apt update $ sudo apt install gcc-11 g++-11 You'll also need GNU Make and CMake as a build system, and Git for version control. Now is also a good time to install GNU Wget, if you haven't already, it comes in handy later. $ sudo apt install make cmake git wget Installing the Arduino IDE, Teensy Core and ESP32 Core To compile sketches for Arduino, I'm using the Arduino IDE. The library focusses on some of the more powerful Arduino-compatible boards, such as PJRC's Teensy 3.x and Espressif's ESP32 platforms. To ensure that nothing breaks, tests are included for these boards specifically, so you have to install the appropriate Arduino Cores. Arduino IDE If you're reading this document, you probably know how to install the Arduino IDE. Just in case, you can find the installation instructions here. -

For Windows Lesson 0 Setting up Development Environment.Pdf

LESSON 0 sets up the development environment: the Arduino IDE. But what is the Arduino IDE? The Arduino IDE is an open-source integrated development environment officially released by Arduino and based on the Processing IDE. In the next part, each movement of the vehicle is controlled by a program, so the IDE must be installed and set up correctly. With the Arduino IDE, you write the program code in the IDE and upload it to the Arduino circuit board; the program tells the board what to do. So where can we download the Arduino IDE? STEP 1: Go to https://www.arduino.cc/en/Main/Software and you will see the page below. The version available on this website is usually the latest, so it may be newer than the version in the picture. STEP 2: Download the development software suited to your computer's operating system. Windows is used as the example here; if you are on macOS, scroll to the end. You can install it using either the EXE installer or the portable ("green") package. The following steps use the EXE installer. Click "Windows Installer". STEP 3: Click the "JUST DOWNLOAD" button to download the software. The downloaded file: STEP 4: These files are also available in the materials we provide, which contained the latest versions when this course was made. Choose "I Agree" to see the following interface. Choose "Next" to see the following interface. -

A Review of User Interface Design for Interactive Machine Learning

1 A Review of User Interface Design for Interactive Machine Learning JOHN J. DUDLEY, University of Cambridge, United Kingdom PER OLA KRISTENSSON, University of Cambridge, United Kingdom Interactive Machine Learning (IML) seeks to complement human perception and intelligence by tightly integrating these strengths with the computational power and speed of computers. The interactive process is designed to involve input from the user but does not require the background knowledge or experience that might be necessary to work with more traditional machine learning techniques. Under the IML process, non-experts can apply their domain knowledge and insight over otherwise unwieldy datasets to find patterns of interest or develop complex data driven applications. This process is co-adaptive in nature and relies on careful management of the interaction between human and machine. User interface design is fundamental to the success of this approach, yet there is a lack of consolidated principles on how such an interface should be implemented. This article presents a detailed review and characterisation of Interactive Machine Learning from an interactive systems perspective. We propose and describe a structural and behavioural model of a generalised IML system and identify solution principles for building effective interfaces for IML. Where possible, these emergent solution principles are contextualised by reference to the broader human-computer interaction literature. Finally, we identify strands of user interface research key to unlocking more efficient and productive non-expert interactive machine learning applications. CCS Concepts: • Human-centered computing → HCI theory, concepts and models; Additional Key Words and Phrases: Interactive machine learning, interface design ACM Reference Format: John J. -

Prioritizing Pull Requests

Prioritizing pull requests. Version of June 17, 2015. Erik van der Veen. THESIS submitted in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE in COMPUTER SCIENCE by Erik van der Veen, born in Voorburg, the Netherlands. Software Engineering Research Group, Department of Software Technology, Faculty EEMCS, Delft University of Technology, Delft, the Netherlands, www.ewi.tudelft.nl Q42, Waldorpstraat 17F, 2521 CA The Hague, the Netherlands, www.q42.com © 2014 Erik van der Veen. Cover picture: Finding the pull request that needs the most attention. Author: Erik van der Veen. Student id: 1509381. Email: [email protected] Abstract: Previous work showed that in the pull-based development model integrators face challenges with regard to prioritizing work in the face of multiple concurrent pull requests. We identified the manual prioritization heuristics applied by integrators and extracted features from these heuristics. The features are used to train a machine learning model, which is capable of predicting a pull request’s importance. The importance is then used to create a prioritized order of the pull requests. Our main contribution is the design and initial implementation of a prototype service, called PRioritizer, which automatically prioritizes pull requests. The service works like a priority inbox for pull requests, recommending the top pull requests the project owner should focus on. It keeps the pull request list up-to-date when pull requests are merged or closed. In addition, the service provides functionality that GitHub is currently lacking. We implemented pairwise pull request conflict detection and several new filter and sorting options e.g. -

JOSH MILLER [email protected]

CREATING VISUALS IN PROCESSING JOSH MILLER [email protected] www.josh-miller.com WHAT IS PROCESSING? • Free software: processing.org • Old... 2001 old, but constantly updated • Started at MIT • Created to teach Comp Sci, embraced by artist nerds WHAT IS IT? • A Java-like programming language that makes Java apps... JAVA??!? • Isn’t that just for malware and exploits? PROCESSING 2.0 • Android • “Javascript” HTML5 canvas tag • processingjs.org WHAT DOES IT DO • feltron report • http://tweetping.net/ • joshuadavis.com GENERATIVE ART • Art created by a set of rules. • Algorithmic art? CONNECTIONS • libraries: sound, video, animation, visualization, 3d, interface • http://processing.org/reference/libraries/ • hardware: kinect, arduino, sensors, cameras, serial devices, network, tablets, many screens COMPETITORS • openFrameworks - www.openframeworks.cc • cinder - http://libcinder.org/ • max/msp - http://cycling74.com/ • vvvv - http://vvvv.org/ LEARNING PROCESSING • http://processing.org/learning/ • http://processing.org/reference/ • Books: • Learning Processing & The Nature of Code, Dan Shiffman • Processing: A Programming Handbook for Visual Designers and Artists, Reas & Fry INTRO void setup() { /* runs once */ } void draw() { /* runs repeatedly, 60 fps by default, unless you tell it not to */ } DEMOS! • get excited. WHAT ELSE? • Anything you can do in Java you can do in Processing • libraries • syntax (oop, inheritance, etc) LEARN MORE • THE EXAMPLES MENU! • processing.org • creativeapplications.net PLUG • Teaching a processing course (“Creative Coding”) @ Kutztown this summer • M-T 1-5pm for all of June. -

Design for Use

Research-Technology Management, ISSN: 0895-6308 (Print) 1930-0166 (Online). Journal homepage: http://www.tandfonline.com/loi/urtm20 Design for Use, Don Norman & Jim Euchner. To cite this article: Don Norman & Jim Euchner (2016) Design for Use, Research-Technology Management, 59:1, 15-20. To link to this article: http://dx.doi.org/10.1080/08956308.2016.1117315 Published online: 08 Jan 2016. Full Terms & Conditions of access and use can be found at http://www.tandfonline.com/action/journalInformation?journalCode=urtm20

CONVERSATIONS: Design for Use. An Interview with Don Norman. Don Norman talks with Jim Euchner about the design of useful things, from everyday objects to autonomous vehicles. Don Norman and Jim Euchner.

Don Norman has studied everything from refrigerator thermostats to autonomous vehicles. In the process, he has derived a set of principles that govern what makes designed objects usable. In this interview, he discusses some of those principles, how design can be effectively integrated into technical organizations, and how designers can work as part of product development teams.

the people we’re designing for not only don’t know about all of these nuances; they don’t care. They don’t care how much effort we put into it, or the kind of choices we made, or the wonderful technology behind it all. They simply care that it makes their lives better. This requires us to do a design quite differently than has traditionally been the case. -

Introduction to Arduino Software Pdf

Introduction to Arduino software. The Arduino IDE is used to write code and upload it to a physical board. The IDE is very simple, and this simplicity is probably one of the main reasons Arduino has become so popular. Compatibility with the Arduino IDE is now effectively a basic requirement for a new microcontroller board. Over the years, many useful features have been added to the Arduino IDE: you can now manage third-party libraries and boards from within the IDE while keeping the ease of programming the board. The main Arduino IDE window is shown below, with the simple Blink example. You can get different versions of the Arduino IDE from the Download page on Arduino's official website. Choose the software that is compatible with your operating system (Windows, macOS, or Linux). Once the file is downloaded, unpack it to install the Arduino IDE. The Arduino Uno, Mega, and Nano automatically draw power from either the USB connection to your computer or an external power supply. Launch the Arduino IDE. Connect the Arduino board to your computer with a USB cable. The power LED should turn on. Once the software starts, you have two options: create a new project or open an existing example project. To create a new project, select File → New, then edit the file. To open an existing example, select File → Examples → Basics and pick an example from the list. As an illustration, we'll take one of the simplest examples, AnalogReadSerial. It reads the value from analog pin A0 and prints it. -

CS 413 Ray Tracing and Vector Graphics

Welcome to CS 413 Ray Tracing and Vector Graphics. Instructor: Joel Castellanos. e-mail: [email protected] Web: http://cs.unm.edu/~joel/ Farris Engineering Center: 2110. 2/5/2019

Course Resources. Textbook: Ray Tracing from the Ground Up by Kevin Suffern. Blackboard Learn: https://learn.unm.edu/ ◼ Assignment Drop-box ◼ Discussions ◼ Grades. Class website: http://cs.unm.edu/~joel/cs413/ ◼ Syllabus ◼ Projects ◼ Lecture Notes ◼ Reading Assignments ◼ Source Code

Structure of the Course ◼ Studio: Each of you will, by the end of the semester, build a single, well-featured ray tracer / procedural texture renderer. ◼ Stages of this project total 70% of the course grade. ◼ No exams ◼ Class time (30% of course grade): ◼ Lecture ◼ Discussion of Reading ◼ Quizzes ◼ Show & Tell ◼ 3 Code reviews

Language and Platform ◼ The textbook examples are all in C++: ◼ STL (Standard Template Library; this is multiplatform). ◼ wxWidgets application framework (Windows and Linux). ◼ Class examples replace wxWidgets with OpenFrameworks. ◼ Decide on an IDE. If you are using Windows, I recommend MS Visual Studio as it is an industry standard and many employers will expect you to be comfortable with it. For Linux, there are a number of good options including Eclipse C++, Netbeans for C/C++ Development, Code::Blocks, Sublime Text editor and others. ◼ Your project must be in an on-going repo in GitHub. This can be public or private. Building a good GitHub repo to show prospective employers is essential to a young computer scientist in the current job market.

Assignment 1 ◼ For Monday, Jan 29: ▪ Read Chapters 1, 2 (skim and reference later) and 3.