Delusions, Acceptances, and Cognitive Feelings



Recommended publications


Paranoid – Suspicious; Argumentative; Paranoid; Continually on The

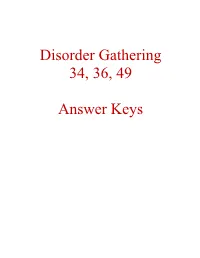

Disorder Gathering 34, 36, 49 Answer Keys. ANSWER KEY, Disorder Gathering 34: 1. Avital Agoraphobia – 2. Ewelina Alcoholism – 3. Martyna Anorexia – 4. Clarissa Bipolar Personality Disorder – 5. Lysette Bulimia – 6. Kev, Annabelle Co-Dependant Relationship – 7. Archer Cognitive Distortions / all-or-nothing thinking (Splitting) – 8. Josephine Cognitive Distortions / Mental Filter – 9. Mendel Cognitive Distortions / Disqualifying the Positive – 10. Melvira Cognitive Disorder / Labeling and Mislabeling – 11. Liat Cognitive Disorder / Personalization – 12. Noa Cognitive Disorder / Narcissistic Rage – 13. Regev Delusional Disorder – 14. Connor Dependant Relationship – 15. Moira Dissociative Amnesia / Psychogenic Amnesia – (*Jason Bourne character) 16. Eylam Dissociative Fugue / Psychogenic Fugue – 17. Amit Dissociative Identity Disorder / Multiple Personality Disorder – 18. Liam Echolalia – 19. Dax Factitious Disorder – 20. Lorna Neurotic Fear of the Future – 21. Ciaran Ganser Syndrome – 22. Jean-Pierre Korsakoff’s Syndrome – 23. Ivor Neurotic Paranoia – 24. Tucker Persecutory Delusions / Querulant Delusions – 25. Lewis Post-Traumatic Stress Disorder – 26. Abdul Proprioception – 27. Alisa Repressed Memories – 28. Kirk Schizophrenia – 29. Trevor Self-Victimization – 30. Jerome Shame-based Personality – 31. Aimee Stockholm Syndrome – 32. Delphine Taijin kyofusho (Japanese culture-specific syndrome) – 33. Lyndon Tourette’s Syndrome – 34. Adar Social phobias – ANSWER KEY, Disorder Gathering 36: Adjustment Disorder – BERKELEY Apotemnophilia -

Bulletin of Psychology and the Arts

BULLETIN OF PSYCHOLOGY AND THE ARTS, DIV. 10, AMERICAN PSYCHOLOGICAL ASSOCIATION, Vol. 1(2). SPECIAL ISSUE: CREATIVITY AND PSYCHOPATHOLOGY, SARAH BENOLKEN AND COLIN MARTINDALE, EDITORS. AND ON EXHIBIT AT APA: THE OUTSIDER ART OF HAI, TOM ETTINGER, EDITOR. William Adolphe Bouguereau - At the Edge of the River (Detail), from the collection of Fred and Sherry Ross. President: Robert J. Sternberg (1999-2000), Department of Psychology, Yale University, Box 208205, New Haven CT 06520. President-Elect: Sandra Russ (2000-2001), Department of Psychology, Case Western Reserve University, Cleveland OH 44106. Past-President: Louis Sass (1998-1999). Secretary-Treasurer: Constance Milbrath (1999-2002). APA Council Representative: Claire Golomb (1998-2001). Members-at-Large to the Executive Committee: Stephanie Z. Dudek (1999-2002), David Harrington (1997-2000), Ruth Richards (1999-2002). Bulletin Editor: Tom Ettinger (1998-2001). Past presidents: Paul M. Farnsworth 1945-1949; Norman C. Meier 1949-1950; Paul M. Farnsworth 1950-1951; Kate Hevner Mueller 1951-1952; Herbert S. Langfeld 1952-1953; R. M. Ogden 1953-1954; Carroll C. Pratt 1954-1955; Melvin G. Rigg 1955-1956; J. P. Guilford 1956-1957; Rudolf Arnheim 1957-1958; James J. Gibson 1958-1959; Leonard Carmichael 1959-1960; Abraham Maslow 1960-1961; Joseph Shoben, Jr. 1961-1962; Robert B. Macleod 1962-1963; Carroll C. Pratt 1963-1964; Harry Helson 1964-1965; Rudolf Arnheim 1965-1966; Irving L. Child 1966-1967; Robert L. Knapp 1967-1968; Sigmund Koch 1968-1969; Marianne L. Simmel 1969-1970; Rudolf Arnheim 1970-1971; Frank Barron 1971-1972; Michael A. Wallach 1972-1973; Frederick Wyatt 1973-1974; Daniel E. Berlyne 1974-1975; Julian Hochberg 1975-1976; Edward L. Walker 1976-1977; Joachim Wohlwill 1978-1979; Pavel Machotka 1979-1980; Ravenna Helson 1980-1981; Nathan Kogan 1981-1982; Salvatore R. -

Cotard's Syndrome: Two Case Reports and a Brief Review of Literature

Published online: 2019-09-26. Case Report. Cotard’s syndrome: Two case reports and a brief review of literature. Sandeep Grover, Jitender Aneja, Sonali Mahajan, Sannidhya Varma, Department of Psychiatry, Post Graduate Institute of Medical Education and Research, Chandigarh, India. ABSTRACT: Cotard’s syndrome is a rare neuropsychiatric condition in which the patient denies existence of one’s own body to the extent of delusions of immortality. One of the consequences of Cotard’s syndrome is self-starvation because of negation of existence of self. Although Cotard’s syndrome has been reported to be associated with various organic conditions and other forms of psychopathology, it is less often reported to be seen in patients with catatonia. In this report we present two cases of Cotard’s syndrome, both of whom had associated self-starvation and nutritional deficiencies and one of whom had associated catatonia. Key words: Catatonia, Cotard’s syndrome, depression. Introduction: Cotard’s syndrome is a rare neuropsychiatric condition characterized by anxious melancholia, delusions of non-existence concerning one’s own body to the extent of delusions of immortality.[1] It has been most commonly seen in patients with severe depression. However, now it is thought to be less common possibly due to early institution of treatment in patients - Case Report, Case 1: Mr. B, 65-year-old retired teacher who was pre-morbidly well adjusted with no family history of mental illness, with personal history of smoking cigarettes in dependent pattern for last 30 years presented with an insidious onset mental illness of one and half years duration precipitated by psychosocial stressors. -

Monothematic Delusions: Towards a Two-Factor Account Davies, Martin, 1950- Coltheart, Max

Monothematic Delusions: Towards a Two-Factor Account. Davies, Martin, 1950-; Coltheart, Max; Langdon, Robyn; Breen, Nora. Philosophy, Psychiatry, & Psychology, Volume 8, Number 2/3, June/September 2001, pp. 133-158 (Article). Published by The Johns Hopkins University Press. DOI: 10.1353/ppp.2001.0007. For additional information about this article: http://muse.jhu.edu/journals/ppp/summary/v008/8.2davies.html ABSTRACT: We provide a battery of examples of delusions against which theoretical accounts can be tested. Then we identify neuropsychological anomalies that could produce the unusual experiences that may lead, in turn, to the delusions in our battery. However, we argue against Maher’s view that delusions are false beliefs that arise as normal responses to anomalous experiences. We propose, instead, that a second factor is required to account for the transition from unusual experience to delusional belief. The second factor in the etiology of delusions can be described superficially as a loss of the ability to reject a candidate for belief on the grounds of its implausibility and its inconsis- There is more than one idea here, and the definition offered by the American Psychiatric Association’s Diagnostic and Statistical Manual of Mental Disorders (DSM) seems to be based on something similar to the second part of the OED entry: Delusion: A false belief based on incorrect inference about external reality that is firmly sustained despite what almost everyone else believes and despite what constitutes incontrovertible and obvious proof or evidence to the contrary (American Psychiatric Association 1994, 765). -

Cotard's Syndrome

MIND & BRAIN, THE JOURNAL OF PSYCHIATRY. REVIEW ARTICLE. Cotard’s Syndrome. Hans Debruyne1,2,3, Michael Portzky1, Kathelijne Peremans1 and Kurt Audenaert1. Affiliations: 1Department of Psychiatry, University Hospital Ghent, Ghent, Belgium; 2PC Dr. Guislain, Psychiatric Hospital, Ghent, Belgium and 3Department of Psychiatry, Zorgsaam/RGC, Terneuzen, The Netherlands. ABSTRACT: Cotard’s syndrome is characterized by nihilistic delusions focused on the individual’s body including loss of body parts, being dead, or not existing at all. The syndrome as such is neither mentioned in DSM-IV-TR nor in ICD-10. There is growing unanimity that Cotard’s syndrome with its typical nihilistic delusions externalizes an underlying disorder. Despite the fact that Cotard’s syndrome is not a diagnostic entity in our current classification systems, recognition of the syndrome and a specific approach toward the patient is mandatory. This paper overviews the historical aspects, clinical characteristics, classification, epidemiology, and etiological issues and includes recent views on pathogenesis and neuroimaging. A short overview of treatment options will be discussed. Keywords: Cotard’s syndrome, nihilistic delusion, misidentification syndrome, review. Correspondence: Hans Debruyne, P.C. Dr. Guislain Psychiatric Hospital Ghent, Fr. Ferrerlaan 88A, 9000 Ghent, Belgium. Tel: 32 9 216 3311; Fax: 32 9 2163312; e-mail: [email protected] INTRODUCTION: HISTORICAL ASPECTS AND CLASSIFICATION. Cotard’s syndrome is named after Jules Cotard (1840–1889), a French neurologist who described this condition for the first time in 1880, in a case report of a 43-year-old woman. - of the syndrome. They described a nongeneralized délire de negation, associated with paralysis, alcoholic psychosis, dementia, and the “real” Cotard’s syndrome, only found in anxious melancholia and chronic hypochondria.6 Later, in 1968, Saavedra proposed a classification into three types: -

Curriculum Vitae

Curriculum Vitae. Steven M. Silverstein, Ph.D. ADDRESS: Division of Schizophrenia Research, Rutgers University Behavioral HealthCare, Rutgers, The State University of New Jersey, 671 Hoes Lane West, Room D351. E-mail: [email protected] Phone: (732) 235-5149. Current Positions: 2/17 – present Director, Coordinated Specialty Care (first episode psychosis) Clinic, Rutgers University Behavioral Health Care; 7/08 – present Professor, Department of Psychiatry, Robert Wood Johnson Medical School, Rutgers University; 7/07 – present Director of Research, Rutgers University Behavioral Health Care, Rutgers University; 3/06 – present Director, Division of Schizophrenia Research, Rutgers University Behavioral Health Care, Rutgers University. Other appointments - 2018: Department of Ophthalmology; 2016: Brain Health Institute (Rutgers); 2008: Rutgers Graduate Program in Neuroscience; Graduate School of Applied and Professional Psychology (Rutgers), Psychology Graduate Faculty (Rutgers). Previous Academic Positions: 03/06 – 06/08 Associate Professor of Psychiatry, Department of Psychiatry, Robert Wood Johnson Medical School, University of Medicine and Dentistry of New Jersey (now Rutgers); 10/03 – 03/06 Associate Professor of Psychiatry, Department of Psychiatry, University of Illinois at Chicago, Chicago, IL; 03/05 – 03/06 Director of Psychiatric Rehabilitation, Department of Psychiatry, University of Illinois at Chicago, Chicago IL; 10/03 – 03/05 Clinical Director, Psychosis Program, University of Illinois Department of Psychiatry, Chicago, IL; 10/02 – 10/03 Program Director, Psychotic Disorders Division, Weill Medical College of Cornell University-New York Presbyterian Hospital, White Plains, NY; 10/99 – 10/03 Associate Professor of Psychology in Psychiatry, Weill Medical College of Cornell University, New York, New York; 10/99 – 10/03 Associate Psychologist, New York Presbyterian Hospital, New York, NY -

Uncovering Capgras Delusion Using a Large-Scale Medical Records Database Vaughan Bell, Caryl Marshall, Zara Kanji, Sam Wilkinson, Peter Halligan and Quinton Deeley

BJPsych Open (2017) 3, 179–185. doi: 10.1192/bjpo.bp.117.005041 Uncovering Capgras delusion using a large-scale medical records database. Vaughan Bell, Caryl Marshall, Zara Kanji, Sam Wilkinson, Peter Halligan and Quinton Deeley. Background: Capgras delusion is scientifically important but most commonly reported as single case studies. Studies analysing large clinical records databases focus on common disorders but none have investigated rare syndromes. Aims: Identify cases of Capgras delusion and associated psychopathology, demographics, cognitive function and neuropathology in light of existing models. Method: Combined computational data extraction and qualitative classification using 250 000 case records from South London and Maudsley Clinical Record Interactive Search (CRIS) database. Results: We identified 84 individuals and extracted diagnosis-matched comparison groups. Capgras was not ‘monothematic’ in the majority of cases. Most cases involved misidentified family members or close partners but others were misidentified in 25% of cases, contrary to dual-route face recognition models. Neuroimaging provided no evidence for predominantly right hemisphere damage. Individuals were ethnically diverse with a range of psychosis spectrum diagnoses. Conclusions: Capgras is more diverse than current models assume. Identification of rare syndromes complements existing ‘big data’ approaches in psychiatry. Declaration of interests: V.B. is supported by a Wellcome Trust Seed Award in Science (200589/Z/16/Z) and the UCLH NIHR Biomedical Research Centre. S.W. is supported by a Wellcome Trust Strategic Award (WT098455MA). Q.D. has received a grant from King’s Health Partners. Copyright and usage: © The Royal College of Psychiatrists 2017. This is an open access article distributed under the terms of the Creative Commons Non-Commercial, No Derivatives (CC BY-NC-ND) -

Low-Dose Clozapine for an Adolescent with TBI-Related Fregoli

Naguy and Al-Tajali, J Psychiatry 2015, 18:5. Journal of Psychiatry. DOI: 10.4172/2378-5756.1000306. ISSN: 2378-5756. Letter to Editor. Open Access. Low-Dose Clozapine for an Adolescent with TBI-Related Fregoli Delusions. Ahmed Naguy1* and Ali Al-Tajali2. 1Child/Adolescent Psychiatrist, Kuwait Centre for Mental Health (KCMH), Kuwait; 2General Adult Psychiatrists, Head of Neuromodulation Unit, KCMH, Kuwait. Keywords: TBI; Fregoli delusions; Clozapine. To The Editor: Fregoli delusion, first described by Courbon and Fail in 1927, named after an Italian actor Leopoldo Fregoli, is a rare delusional misidentification syndrome which entails that different people are in fact the same person in disguise who is able to change their appearance [1]. It is commonly linked to schizophrenia, schizoaffective disorder, and other organic brain syndromes including head trauma, ischaemic strokes, AD, Parkinson’s disease, epilepsy or metabolic derangements [2]. Neurologically, it represents a disconnectivity syndrome of - (80), problems with divided attention were noted. We kept valproate, stopped fluoxetine (self-tapering), gradually tapered quetiapine and clonazepam over 2 weeks. A diagnosis of TBI-related psychosis was our working diagnosis. We introduced clozapine (Leponex®), with weekly TLC protocol, at 12.5 mg/d nocte, uptitrated to 100 mg/d (divided) over 10 days. No EPS reported. After 2 weeks, Fregoli delusions totally disappeared with no residua. He was discharged. Complete remission continued with follow-ups at W-4, 8, and 12. BABS was employed throughout to objectify treatment-response on clozapine. Superiority of clozapine in refractory psychosis is well-established [7]. -

Madness and Introspection in Marcellus Emants's Een Nagelaten Bekentenis

‘Eigenschaften ohne Mann’: Madness and Introspection in Marcellus Emants’s Een nagelaten bekentenis (1894) ‘Eigenschaften ohne Mann’: Waanzin en introspectie in Marcellus Emants’s Een nagelaten bekentenis (1894) Ernst van Alphen, Leiden University Abstract: In the dominant discourse madness is considered as the opposite of rationality. It concerns the decline, and in extreme cases even the disappearance of rationality in the organization of human conduct and experience. In this article the author explores a more recent, modernist discourse on madness. The new discourse does not understand madness as a decline of rationality, but as an increase or intensification of reason. Madness is not the result of abundance of passions, emotions and vitality but rather of the estrangement from these. This modernist discourse on madness manifests itself in literary novels that magnify the practice of introspection to the most extreme extent. These novels feature a first-person narrator who reflects relentlessly and ruthlessly on his own conduct, feelings and experiences. In these cases the narrative device of first-person narration is symptomatic for a new attitude towards human consciousness and the faculty of reason that substantiates it. In order to better understand the radical effects of introspection through first-person narration, the author focuses on the Dutch novel A Posthumous Confession (Een nagelaten bekentenis) from 1894 by Marcellus Emants. The novel has not been read for its first-person speech act of confession; a plot that consists -

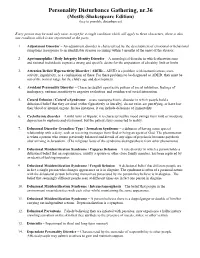

Personality Disturbance Gathering, Nr.36 (Mostly-Shakespeare Edition) (Key to Possible Disturbances)

Personality Disturbance Gathering, nr.36 (Mostly-Shakespeare Edition) (key to possible disturbances) Every person may be used only once, except for a single condition which will apply to three characters; there is also one condition which is not represented at the party. 1. Adjustment Disorder – An adjustment disorder is characterized by the development of emotional or behavioral symptoms in response to an identifiable stressor occurring within 3 months of the onset of the stressor. 2. Apotemnophilia / Body Integrity Identity Disorder – A neurological disorder in which otherwise sane and rational individuals express a strong and specific desire for the amputation of a healthy limb or limbs. 3. Attention Deficit Hyperactivity Disorder / ADHD – ADHD is a problem with inattentiveness, overactivity, impulsivity, or a combination of these. For these problems to be diagnosed as ADHD, they must be out of the normal range for the child's age and development. 4. Avoidant Personality Disorder – Characterized by a pervasive pattern of social inhibition, feelings of inadequacy, extreme sensitivity to negative evaluation, and avoidance of social interaction. 5. Cotard Delusion / Cotard’s Syndrome – A rare neuropsychiatric disorder in which people hold a delusional belief that they are dead (either figuratively or literally), do not exist, are putrefying, or have lost their blood or internal organs. In rare instances, it can include delusions of immortality. 6. Cyclothymic disorder – A mild form of Bipolar, it is characterized by mood swings from mild or moderate depression to euphoria and excitement, but the patient stays connected to reality. 7. Delusional Disorder Grandiose Type / Jerusalem Syndrome – A delusion of having some special relationship with a deity, such as receiving messages from God or being an agent of God. -

Pathologies of Hyperfamiliarity in Dreams, Delusions and Déjà Vu

HYPOTHESIS AND THEORY ARTICLE, published: 20 February 2014, doi: 10.3389/fpsyg.2014.00097. Pathologies of hyperfamiliarity in dreams, delusions and déjà vu. Philip Gerrans*, Department of Philosophy, University of Adelaide, Adelaide, SA, Australia. Edited by: Jennifer M. Windt, Johannes Gutenberg-University of Mainz, Germany. Reviewed by: Lisa Bortolotti, University of Birmingham, UK; Elizaveta Solomonova, Université de Montréal, Canada. *Correspondence: Philip Gerrans, Department of Philosophy, University of Adelaide, North Terrace, Adelaide, SA 5005, Australia, e-mail: philip.gerrans@adelaide.edu.au. The ability to challenge and revise thoughts prompted by anomalous experiences depends on activity in right dorsolateral prefrontal circuitry. When activity in those circuits is absent or compromised subjects are less likely to make this kind of correction. This appears to be the cause of some delusions of misidentification consequent on experiences of hyperfamiliarity for faces. Comparing the way the mind responds to the experience of hyperfamiliarity in different conditions such as delusions, dreams, pathological and non-pathological déjà vu, provides a way to understand claims that delusions and dreams are both states characterized by deficient “reality testing.” Keywords: delusions of misidentification, deja vu, dreams, hyperfamiliarity, reality testing. INTRODUCTION: The concept of impaired or absent “reality testing” is invoked to explain conditions characterized by persistent inability to detect and correct misrepresentation of aspects of the world (reality). - background knowledge, thereby establishing whether they correspond to the world as it is, independent of the subject’s mind (Hohwy, 2004). It is for reasons like these that dreams and delu- -

Annual Report 2006

Danish National Research Foundation: Center for Subjectivity Research, University of Copenhagen, Njalsgade 140-142, DK-2300 Copenhagen S, Denmark. Phone: (+45) 3532 86 80 Fax: (+45) 3532 8681 Email: [email protected] Website: www.cfs.ku.dk Annual report: January 1 - December 31, 2006. Content: 1. Introduction 2. Staff 3. External funding for 2006 4. Research 5. Foreign visitors 6. Activities organized by the Center 7. Teaching, supervision, evaluation 8. Various academic and administrative tasks 9. Editorial tasks 10. Collaboration (national and international) 11. Talks and lectures 12. Publications 13. Submitted/accepted manuscripts. 1. Introduction: Danish National Research Foundation: Center for Subjectivity Research (CFS) was established at the Faculty of Theology, University of Copenhagen on March 1, 2002. The Center is financed by the Danish National Research Foundation with supplementary funding from the University of Copenhagen and Hvidovre Hospital for the period 1.3.2002 – 28.2.2007. The aim of the Center is to undertake a thorough and comprehensive investigation of what might be considered the three fundamental dimensions of subjectivity: intentionality, self-awareness, and intersubjectivity (i.e., subjectivity in its relation to the world, to itself, and to others). The Center emphasizes interdisciplinary approaches and draws on philosophy of mind, philosophy of religion, and psychopathology, and it explicitly seeks to further the dialogue not only between different philosophical traditions (phenomenology, hermeneutics, and analytical philosophy of mind), but also between philosophy and empirical science. During 2006 the Center organized, co-organized, and/or co-sponsored 9 conferences and workshops (with more than 70 speakers) as well as 12 individual guest lectures by invited speakers, and it had 60 foreign visitors.