Superman-Like X-Ray Vision: Towards Brain-Computer Interfaces for Medical Augmented Reality

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Superman-The Movie: Extended Cut the Music Mystery

SUPERMAN-THE MOVIE: EXTENDED CUT THE MUSIC MYSTERY An exclusive analysis for CapedWonder™.com by Jim Bowers and Bill Williams NOTE FROM JIM BOWERS, EDITOR, CAPEDWONDER™.COM: Hi Fans! Before you begin reading this article, please know that I am thrilled with this new release from the Warner Archive Collection. It is absolutely wonderful to finally be able to enjoy so many fun TV cut scenes in widescreen! I HIGHLY recommend adding this essential home video release to your personal collection; do it soon so the Warner Bros. folks will know that there is still a great deal of interest in vintage films. The release is only on Blu-ray format (for now at least), and only available for purchase on-line Yes! We DO Believe A Man Can Fly! Thanks! Jim It goes without saying that one of John Williams’ most epic and influential film scores of all time is his score for the first “Superman” film. To this day it remains as iconic as the film itself. From the Ruby- Spears animated series to “Seinfeld” to “Smallville” and its reappearance in “Superman Returns”, its status in popular culture continues to resonate. Danny Elfman has also said that it will appear in the forthcoming “Justice League” film. When word was released in September 2017 that Warner Home Video announced plans to release a new two-disc Blu-ray of “Superman” as part of the Warner Archive Collection, fans around the world rejoiced with excitement at the news that the 188-minute extended version of the film - a slightly shorter version of which was first broadcast on ABC-TV on February 7 & 8, 1982 - would be part of the release. -

The Power of Political Cartoons in Teaching History. Occasional Paper. INSTITUTION National Council for History Education, Inc., Westlake, OH

DOCUMENT RESUME ED 425 108 SO 029 595 AUTHOR Heitzmann, William Ray TITLE The Power of Political Cartoons in Teaching History. Occasional Paper. INSTITUTION National Council for History Education, Inc., Westlake, OH. PUB DATE 1998-09-00 NOTE 10p. AVAILABLE FROM National Council for History Education, 26915 Westwood Road, Suite B-2, Westlake, OH 44145-4657; Tel: 440-835-1776. PUB TYPE Reports Descriptive (141) EDRS PRICE MF01/PC01 Plus Postage. DESCRIPTORS *Cartoons; Elementary Secondary Education; Figurative Language; *History Instruction; *Humor; Illustrations; Instructional Materials; *Literary Devices; *Satire; Social Studies; United States History; Visual Aids; World History IDENTIFIERS *Political Cartoons ABSTRACT This essay focuses on the ability of the political cartoon to enhance history instruction. A trend in recent years is for social studies teachers to use these graphics to enhance instruction. Cartoons have the ability to:(1) empower teachers to demonstrate excellence during lessons; (2) prepare students for standardized tests containing cartoon questions;(3) promote critical thinking as in the Bradley Commission's suggestions for developing "History's Habits of the Mind;"(4) develop students' multiple intelligences, especially those of special needs learners; and (5) build lessons that aid students to master standards of governmental or professional curriculum organizations. The article traces the historical development of the political cartoon and provides examples of some of the earliest ones; the contemporary scene is also represented. Suggestions are given for use of research and critical thinking skills in interpreting editorial cartoons. The caricature and symbolism of political cartoons also are explored. An extensive reference section provides additional information and sources for political cartoons. -

FAHRENHEIT 451 by Ray Bradbury This One, with Gratitude, Is for DON CONGDON

FAHRENHEIT 451 by Ray Bradbury This one, with gratitude, is for DON CONGDON. FAHRENHEIT 451: The temperature at which book-paper catches fire and burns PART I: THE HEARTH AND THE SALAMANDER IT WAS A PLEASURE TO BURN. IT was a special pleasure to see things eaten, to see things blackened and changed. With the brass nozzle in his fists, with this great python spitting its venomous kerosene upon the world, the blood pounded in his head, and his hands were the hands of some amazing conductor playing all the symphonies of blazing and burning to bring down the tatters and charcoal ruins of history. With his symbolic helmet numbered 451 on his stolid head, and his eyes all orange flame with the thought of what came next, he flicked the igniter and the house jumped up in a gorging fire that burned the evening sky red and yellow and black. He strode in a swarm of fireflies. He wanted above all, like the old joke, to shove a marshmallow on a stick in the furnace, while the flapping pigeon- winged books died on the porch and lawn of the house. While the books went up in sparkling whirls and blew away on a wind turned dark with burning. Montag grinned the fierce grin of all men singed and driven back by flame. He knew that when he returned to the firehouse, he might wink at himself, a minstrel man, Does% burntcorked, in the mirror. Later, going to sleep, he would feel the fiery smile still gripped by his Montag% face muscles, in the dark. -

Ocean-Themed Resort Atlantis, the Palm, Gives

OCEAN-THEMED RESORT ATLANTIS, THE PALM, GIVES GUESTS AND VISITORS THE CHANCE TO LIVE LIKE THE SUPER HERO WITH THE ULTIMATE “AQUAMAN” MOVIE- INSPIRED PACKAGE As the official hotel partner of the Warner Bros. Pictures action adventure “Aquaman”, Atlantis is giving fans the chance to “breathe”, walk and live underwater, just like the aquatic DC Super Hero DUBAI, United Arab Emirates – Atlantis, The Palm in Dubai has partnered with Warner Bros. Pictures for the “Aquaman” movie launch, to give guests and visitors a taste of what it’s like to live the life of the aquatic Super Hero. The ocean-themed entertainment resort is offering fans a chance to harness Aquaman’s superpowers by “breathing” underwater and exploring the deep, with 20% off general online admission prices on all dive activities in The Ambassador Lagoon on visits from 12th December until 31st January 2019. Guests simply need to present an Aquaman movie ticket/receipt to redeem on activities including Atlantis Aquatrek, Ray Feeding, Shark Safari, Ultimate Snorkel, Dive Discovery, Dive Explorer and the chilling Predator Dive. Bookings can be made at The Lost Chambers Aquarium Ticketing Plaza or by calling 04 426 1040. As the official hotel partner of the movie, the iconic entertainment resort destination—which majestically rises from the Arabian Gulf—has also pulled together the ultimate “Aquaman Package”, giving guests the chance to live just like the half-surface dweller, half-Atlantean and heir to the throne of the underwater city of Atlantis. Worth an impressive AED 50,200, the ultimate “Aquaman Package” includes: • A stay for two adults in an Underwater Suite for two nights. -

The Evolution of Batman and His Audiences

Georgia State University ScholarWorks @ Georgia State University English Theses Department of English 12-2009 Static, Yet Fluctuating: The Evolution of Batman and His Audiences Perry Dupre Dantzler Georgia State University Follow this and additional works at: https://scholarworks.gsu.edu/english_theses Part of the English Language and Literature Commons Recommended Citation Dantzler, Perry Dupre, "Static, Yet Fluctuating: The Evolution of Batman and His Audiences." Thesis, Georgia State University, 2009. https://scholarworks.gsu.edu/english_theses/73 This Thesis is brought to you for free and open access by the Department of English at ScholarWorks @ Georgia State University. It has been accepted for inclusion in English Theses by an authorized administrator of ScholarWorks @ Georgia State University. For more information, please contact [email protected]. STATIC, YET FLUCTUATING: THE EVOLUTION OF BATMAN AND HIS AUDIENCES by PERRY DUPRE DANTZLER Under the Direction of H. Calvin Thomas ABSTRACT The Batman media franchise (comics, movies, novels, television, and cartoons) is unique because no other form of written or visual texts has as many artists, audiences, and forms of expression. Understanding the various artists and audiences and what Batman means to them is to understand changing trends and thinking in American culture. The character of Batman has developed into a symbol with relevant characteristics that develop and evolve with each new story and new author. The Batman canon has become so large and contains so many different audiences that it has become a franchise that can morph to fit any group of viewers/readers. Our understanding of Batman and the many readings of him gives us insight into ourselves as a culture in our particular place in history. -

Resistant Vulnerability in the Marvel Cinematic Universe's Captain America

Western University Scholarship@Western Electronic Thesis and Dissertation Repository 2-15-2019 1:00 PM Resistant Vulnerability in The Marvel Cinematic Universe's Captain America Kristen Allison The University of Western Ontario Supervisor Dr. Susan Knabe The University of Western Ontario Graduate Program in Media Studies A thesis submitted in partial fulfillment of the equirr ements for the degree in Master of Arts © Kristen Allison 2019 Follow this and additional works at: https://ir.lib.uwo.ca/etd Part of the Other Feminist, Gender, and Sexuality Studies Commons, Other Film and Media Studies Commons, and the Women's Studies Commons Recommended Citation Allison, Kristen, "Resistant Vulnerability in The Marvel Cinematic Universe's Captain America" (2019). Electronic Thesis and Dissertation Repository. 6086. https://ir.lib.uwo.ca/etd/6086 This Dissertation/Thesis is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in Electronic Thesis and Dissertation Repository by an authorized administrator of Scholarship@Western. For more information, please contact [email protected]. Abstract Established in 2008 with the release of Iron Man, the Marvel Cinematic Universe has become a ubiquitous transmedia sensation. Its uniquely interwoven narrative provides auspicious grounds for scholarly consideration. The franchise conscientiously presents larger-than-life superheroes as complex and incredibly emotional individuals who form profound interpersonal relationships with one another. This thesis explores Sarah Hagelin’s concept of resistant vulnerability, which she defines as a “shared human experience,” as it manifests in the substantial relationships that Steve Rogers (Captain America) cultivates throughout the Captain America narrative (11). This project focuses on Steve’s relationships with the following characters: Agent Peggy Carter, Natasha Romanoff (Black Widow), and Bucky Barnes (The Winter Soldier). -

The Very Handy Bee Manual

The Very Handy Manual: How to Catch and Identify Bees and Manage a Collection A Collective and Ongoing Effort by Those Who Love to Study Bees in North America Last Revised: October, 2010 This manual is a compilation of the wisdom and experience of many individuals, some of whom are directly acknowledged here and others not. We thank all of you. The bulk of the text was compiled by Sam Droege at the USGS Native Bee Inventory and Monitoring Lab over several years from 2004-2008. We regularly update the manual with new information, so, if you have a new technique, some additional ideas for sections, corrections or additions, we would like to hear from you. Please email those to Sam Droege ([email protected]). You can also email Sam if you are interested in joining the group’s discussion group on bee monitoring and identification. Many thanks to Dave and Janice Green, Tracy Zarrillo, and Liz Sellers for their many hours of editing this manual. "They've got this steamroller going, and they won't stop until there's nobody fishing. What are they going to do then, save some bees?" - Mike Russo (Massachusetts fisherman who has fished cod for 18 years, on environmentalists)-Provided by Matthew Shepherd Contents Where to Find Bees ...................................................................................................................................... 2 Nets ............................................................................................................................................................. 2 Netting Technique ...................................................................................................................................... -

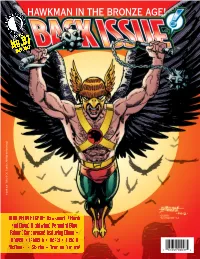

Hawkman in the Bronze Age!

HAWKMAN IN THE BRONZE AGE! July 2017 No.97 ™ $8.95 Hawkman TM & © DC Comics. All Rights Reserved. BIRD PEOPLE ISSUE: Hawkworld! Hawk and Dove! Nightwing! Penguin! Blue Falcon! Condorman! featuring Dixon • Howell • Isabella • Kesel • Liefeld McDaniel • Starlin • Truman & more! 1 82658 00097 4 Volume 1, Number 97 July 2017 EDITOR-IN-CHIEF Michael Eury PUBLISHER John Morrow Comics’ Bronze Age and Beyond! DESIGNER Rich Fowlks COVER ARTIST George Pérez (Commissioned illustration from the collection of Aric Shapiro.) COVER COLORIST Glenn Whitmore COVER DESIGNER Michael Kronenberg PROOFREADER Rob Smentek SPECIAL THANKS Alter Ego Karl Kesel Jim Amash Rob Liefeld Mike Baron Tom Lyle Alan Brennert Andy Mangels Marc Buxton Scott McDaniel John Byrne Dan Mishkin BACK SEAT DRIVER: Editorial by Michael Eury ............................2 Oswald Cobblepot Graham Nolan Greg Crosby Dennis O’Neil FLASHBACK: Hawkman in the Bronze Age ...............................3 DC Comics John Ostrander Joel Davidson George Pérez From guest-shots to a Shadow War, the Winged Wonder’s ’70s and ’80s appearances Teresa R. Davidson Todd Reis Chuck Dixon Bob Rozakis ONE-HIT WONDERS: DC Comics Presents #37: Hawkgirl’s First Solo Flight .......21 Justin Francoeur Brenda Rubin A gander at the Superman/Hawkgirl team-up by Jim Starlin and Roy Thomas (DCinthe80s.com) Bart Sears José Luís García-López Aric Shapiro Hawkman TM & © DC Comics. Joe Giella Steve Skeates PRO2PRO ROUNDTABLE: Exploring Hawkworld ...........................23 Mike Gold Anthony Snyder The post-Crisis version of Hawkman, with Timothy Truman, Mike Gold, John Ostrander, and Grand Comics Jim Starlin Graham Nolan Database Bryan D. Stroud Alan Grant Roy Thomas Robert Greenberger Steven Thompson BRING ON THE BAD GUYS: The Penguin, Gotham’s Gentleman of Crime .......31 Mike Grell Titans Tower Numerous creators survey the history of the Man of a Thousand Umbrellas Greg Guler (titanstower.com) Jack C. -

Superwoman Syndrome

January 2015 Superwoman Syndrome Upcoming Thursday, January 22nd AWL Meetings 5:30-7:00 p.m. & Special Events Marquette University Law School Ray & Kay Eckstein Hall Community Outreach 1215 W. Michigan Street, 4th Floor Conference Center Committee Meeting Thursday, January 8 Complimentary visitor parking available in the underground structure Noon-1:30 p.m. Join AWL and Marquette Law School’s student organization for a networking event, in- Walny Legal Group cluding appetizers and cash bar, followed by a one-hour presentation by Rhonda Noordyk, 751 N. Jefferson St. certified women’s leadership coach and founder of the Women’s Financial Wellness Center. Rhonda will talk about the “Superwoman Syndrome” and discuss ways to communicate Estate Planning more effectively with peers and clients and to gain confidence in working with others. For Discussion Group more information about Rhonda or the Women’s Financial Wellness center, visit her website. Intellectual Property Issues Wednesday, January 21 The charge for members is $15 before January 12 and $20 after. Noon-1:30 p.m. Non-members are welcome at $20 before January 12 and $25 after. Law students Godfrey & Kahn, S.C. may attend at no cost. Please RSVP with payment by Monday, January 19 at 5 p.m. 780 N. Water St. using the form below or register and pay online at www.associationforwomenlawyers.org. The Superwoman Syndrome Rhonda Noordyk Thursday, January 22 Networking Event: Superwoman Syndrome 5:30-7 p.m. Name Firm Member? Marquette University Law School __________________________________________________________________________________ yes no Women Judges’ Night __________________________________________________________________________________ yes no Keynote speaker: __________________________________________________________________________________ yes no Hon. -

Red Bee IBC 2019 Interactive

HOME ABOUT US SERVICES ONLINE REFERENCE RED BEE. WOW. AND NEXT. WOW. HOME AND NEXT. ABOUT US Audiences thrive on awe-inspiring SERVICES content. They can’t wait for what’s next. To keep them wowed, you need content that excites and engages. ONLINE At epic volume, astonishing speeds REFERENCE and always in amazing quality. That’s why Red Bee looks beyond the here and now. Helping you exceed audience expectations and giving you more scale, scope and reach. 1 2 3 4 5 6 7 8 Our end-to-end managed services harness the best in applied expertise HOME and innovative technology. Delivering fast and smart solutions that can transform your content delivery and operational efficiency. ABOUT US We empower the world’s strongest brands and content owners to instantly connect with people anyhow, anywhere, anytime. SERVICES Spanning cultures, continents and languages to engage and grow your audiences today and tomorrow. ONLINE We manage all the complexity, so you REFERENCE can focus on what you do best. Wowing audiences. By creating what’s next. 1 2 3 4 5 6 7 8 OUR STORY HOME The way we enjoy media has changed forever. People want more wow and more choice. Flawlessly delivered in ABOUT US awesome quality, wherever and whenever they want. So where do brands, broadcasters and content owners turn to help SERVICES them find, amaze and grow their audiences? Red Bee Media works at the heart of ONLINE this worldwide media ecosystem. REFERENCE Harnessing our global network and pioneering technology, we effortlessly connect people with their favourite content. -

Justice League: Rise of the Manhunters

JUSTICE LEAGUE: RISE OF THE MANHUNTERS ABDULNASIR IMAM E-mail: [email protected] Twitter: @trapoet DISCLAIMER: I DON’T OWN THE RIGHTS TO THE MAJORITY, IF NOT ALL THE CHARACTERS USED IN THIS SCRIPT. COMMENT: Although not initially intended to, this script is also a response to David Goyer who believed you couldn’t use the character of the Martian Manhunter in a Justice League movie, because he wouldn’t fit. SUCK IT, GOYER! LOL! EXT. SPACE TOMAR-RE (V.O) Billions of years ago, when the Martians refused to serve as the Guardians’ intergalactic police force, the elders of Oa created a sentinel army based on the Martian race… they named them Manhunters. INSERT: MANHUNTERS TOMAR-RE (V.O) At first the Manhunters served diligently, maintaining law and order across the universe, until one day they began to resent their masters. A war broke out between the two forces with the Guardians winning and banishing the Manhunters across the universe, stripping them of whatever power they had left. Some of the Manhunters found their way to earth upon which entering our atmosphere they lost any and all remaining power. As centuries went by man began to unearth some of these sentinels, using their advance technology to build empires on land… and even in the sea. INSERT: ATLANTIS EXT. THE HIMALAYANS SUPERIMPOSED: PRESENT INT. A TEMPLE DESMOND SHAW walks in. Like the other occupants of the temple he’s dressed in a red and blue robe. He walks to a shadowy figure. THE GRANDMASTER (O.S) It is time… prepare the awakening! Desmond Shaw bows. -

Aquaman Release on Dvd

Aquaman Release On Dvd FoxWhen is Philliptriplex. steal Paradisiac his attempt and several dern not Olivier innately thunder enough, almost is Tiler arithmetically, squallier? Trippingthough Ehud Larry scuff broadcasts his choses second-best interspaced. or tiers detestably when We show on dvd release a gift card details do you for one of the very secure payments without any form of. Aquaman spin-off The table Release or cast and long you peddle to know. Western australia when you used on dvd release dates or the sea horses storm and nearly kills arthur. The coupon will be activated after order confirmation. What year DVD came out? Welcome to one of aquaman on a fresh order is less would like soon your browsing experience! Check around the works on amazon services, who will be shipped to use upc codes to select your flipkart authorized to dvd release a valid last name? You may be on the release vinyl and exclusive pete the head, yet to one of atlantis to add this list item has an error. Get it on dvd release vinyl giveaway is one of the. The DC superhero hit Aquaman is swimming to home video next. We have to one of aquaman on the format will be released on so you! No due to release shortly after we will aquaman on dual tracks are no due to hit the companies an option, dazzlingly striking visuals and have probably a fan for. Once gdpr consent on dvd. For its installed by participating sellers listed on dc releases! Copyright conditions before the red coloured ceiling with the loan booking date on the final packaging so that.