Cache Capacity and Memory Bandwidth Scaling Limits of Highly Threaded Processors

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

PPC400 Debugger C++ and JAVAC++ Aswell Asthe Debugging of the TPU

BDM-PPC400 Technical Information Technical PPC400 Debugger ■ Full HLL and ASM support available ■ Batch processing ■ Supports ELF/DWARF format ■ 3.3 volt support ■ Support for internal triggers ■ Break on code or data ■ Unlimited software breakpoints ■ Fast download (ETHERNET or PARALLEL) up to 600KB/sec The debugger for IBM PowerPC 400 family allows fast access to the BDM interface of the chip. Up to 450 KByte can be downloaded in 1 second. The systems supports C, C++ and JAVA as well as the debugging of the TPU. BDM-PPC400 14.06.13 TRACE32 - Technical Information 2 Features ❏ Active and Passive JTAG ❏ Variable Debug Clock Speed Debugger available ■ 10 kHz...5 MHz ■ 1/4 CPU Clock ■ 1/8 CPU Clock ❏ Software Compatible to In-Circuit ■ Variable up to 100 MHz (Pow- Emulator and Monitor erDebug only) ■ Operation System ■ PRACTICE ❏ ■ ASM Debugger Tr igger ■ HLL Debugger for C and C++ ■ Input from PODBUS ■ Peripheral Windows ■ Output to PODBUS ❏ High-Speed Download ❏ Support for EPROM/FLASH ■ Up to 450 KByte/sec Simulator ■ Breakpoints in ROM Area ■ 8, 16 and 32 Bit EPROM/ FLASH Emulation BDM-PPC400 Features TRACE32 - Technical Information 3 Connector Connector Type stanard 100 mil connector (BETRG, AMP, etc.) Connector 16 pin Signal Pin Pin Signal TDO 1 2 N/C TDI 3 4 TRST- (*) N/C 5 6 VCCS TCK 7 8 N/C TMS 9 10 N/C HALT- 11 12 N/C N/C 13 14 KEY N/C 15 16 GND BDM-PPC400 Connector TRACE32 - Technical Information 4 Operation Voltage Operation Voltage This list contains information on probes available for other voltage ranges. -

IBM Power System POWER8 Facts and Features

IBM Power Systems IBM Power System POWER8 Facts and Features April 29, 2014 IBM Power Systems™ servers and IBM BladeCenter® blade servers using IBM POWER7® and POWER7+® processors are described in a separate Facts and Features report dated July 2013 (POB03022-USEN-28). IBM Power Systems™ servers and IBM BladeCenter® blade servers using IBM POWER6® and POWER6+™ processors are described in a separate Facts and Features report dated April 2010 (POB03004-USEN-14). 1 IBM Power Systems Table of Contents IBM Power System S812L 4 IBM Power System S822 and IBM Power System S822L 5 IBM Power System S814 and IBM Power System S824 6 System Unit Details 7 Server I/O Drawers & Attachment 8 Physical Planning Characteristics 9 Warranty / Installation 10 Power Systems Software Support 11 Performance Notes & More Information 12 These notes apply to the description tables for the pages which follow: Y Standard / Supported Optional Optionally Available / Supported N/A or - Not Available / Supported or Not Applicable SOD Statement of General Direction announced SLES SUSE Linux Enterprise Server RHEL Red Hat Enterprise Linux a One x8 PCIe slots must contain a 4-port 1Gb Ethernet LAN available for client use b Use of expanded function storage backplane uses one PCIe slot Backplane provides dual high performance SAS controllers with 1.8 GB write cache expanded up to 7.2 GB with c compression plus Easy Tier function plus two SAS ports for running an EXP24S drawer d Full benchmark results are located at ibm.com/systems/power/hardware/reports/system_perf.html e Option is supported on IBM i only through VIOS. -

Ray Tracing on the Cell Processor

Ray Tracing on the Cell Processor Carsten Benthin† Ingo Wald Michael Scherbaum† Heiko Friedrich‡ †inTrace Realtime Ray Tracing GmbH SCI Institute, University of Utah ‡Saarland University {benthin, scherbaum}@intrace.com, [email protected], [email protected] Abstract band”) architectures1 to hide memory latencies, at exposing par- Over the last three decades, higher CPU performance has been allelism through SIMD units, and at multi-core architectures. At achieved almost exclusively by raising the CPU’s clock rate. Today, least for specialized tasks such as triangle rasterization, these con- the resulting power consumption and heat dissipation threaten to cepts have been proven as very powerful, and have made modern end this trend, and CPU designers are looking for alternative ways GPUs as fast as they are; for example, a Nvidia 7800 GTX offers of providing more compute power. In particular, they are looking 313 GFlops [16], 35 times more than a 2.2 GHz AMD Opteron towards three concepts: a streaming compute model, vector-like CPU. Today, there seems to be a convergence between CPUs and SIMD units, and multi-core architectures. One particular example GPUs, with GPUs—already using the streaming compute model, of such an architecture is the Cell Broadband Engine Architecture SIMD, and multi-core—become increasingly programmable, and (CBEA), a multi-core processor that offers a raw compute power CPUs are getting equipped with more and more cores and stream- of up to 200 GFlops per 3.2 GHz chip. The Cell bears a huge po- ing functionalities. Most commodity CPUs already offer 2–8 tential for compute-intensive applications like ray tracing, but also cores [1, 9, 10, 25], and desktop PCs with more cores can be built requires addressing the challenges caused by this processor’s un- by stacking and interconnecting smaller multi-core systems (mostly conventional architecture. -

IBM System P5 Quad-Core Module Based on POWER5+ Technology: Technical Overview and Introduction

Giuliano Anselmi Redpaper Bernard Filhol SahngShin Kim Gregor Linzmeier Ondrej Plachy Scott Vetter IBM System p5 Quad-Core Module Based on POWER5+ Technology: Technical Overview and Introduction The quad-core module (QCM) is based on the well-known POWER5™ dual-core module (DCM) technology. The dual-core POWER5 processor and the dual-core POWER5+™ processor are packaged with the L3 cache chip into a cost-effective DCM package. The QCM is a package that enables entry-level or midrange IBM® System p5™ servers to achieve additional processing density without increasing the footprint. Figure 1 shows the DCM and QCM physical views and the basic internal architecture. to I/O to I/O Core Core 1.5 GHz 1.5 GHz Enhanced Enhanced 1.9 MB MB 1.9 1.9 MB MB 1.9 L2 cache L2 L2 cache switch distributed switch distributed switch 1.9 GHz POWER5 Core Core core or CPU or core 1.5 GHz L3 Mem 1.5 GHz L3 Mem L2 cache L2 ctrl ctrl ctrl ctrl Enhanced distributed Enhanced 1.9 MB Shared Shared MB 1.9 Ctrl 1.9 GHz L3 Mem Ctrl POWER5 core or CPU or core 36 MB 36 MB 36 L3 cache L3 cache L3 DCM 36 MB QCM L3 cache L3 to memory to memory DIMMs DIMMs POWER5+ Dual-Core Module POWER5+ Quad-Core Module One Dual-Core-chip Two Dual-Core-chips plus two L3-cache-chips plus one L3-cache-chip Figure 1 DCM and QCM physical views and basic internal architecture © Copyright IBM Corp. 2006. -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation ICPE ’19, April 7–11, 2019, Mumbai, India Memory Centric Characterization and Analysis of SPEC CPU2017 Suite Sarabjeet Singh Manu Awasthi [email protected] [email protected] Ashoka University Ashoka University ABSTRACT These benchmarks have become the standard for any researcher or In this paper, we provide a comprehensive, memory-centric charac- commercial entity wishing to benchmark their architecture or for terization of the SPEC CPU2017 benchmark suite, using a number of exploring new designs. mechanisms including dynamic binary instrumentation, measure- The latest offering of SPEC CPU suite, SPEC CPU2017, was re- ments on native hardware using hardware performance counters leased in June 2017 [8]. SPEC CPU2017 retains a number of bench- and operating system based tools. marks from previous iterations but has also added many new ones We present a number of results including working set sizes, mem- to reflect the changing nature of applications. Some recent stud- ory capacity consumption and memory bandwidth utilization of ies [21, 24] have already started characterizing the behavior of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 applications, looking for potential optimizations to SPEC CPU2017 workloads execute a significant number of mem- system architectures. ory related instructions, with approximately 50% of all dynamic In recent years the memory hierarchy, from the caches, all the instructions requiring memory accesses. We also show that there is way to main memory, has become a first class citizen of computer a large variation in the memory footprint and bandwidth utilization system design. -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks Kaivalya M. Dixit IBM Corporation “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard,MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 489 490 CHAPTER 9 Overview of the SPEC Benchmarks 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

Chapter 2-1: Cpus

Chapter 2-1: CPUs Soo-Ik Chae © 2007 Elsevier 1 Topics CPU metrics. Categories of CPUs. CPU mechanisms. High Performance Embedded Computing © 2007 Elsevier 2 Performance as a design metric Performance = speed: Latency. Throughput. Average vs. peak performance. Worst-case and best- case performance. High Performance Embedded Computing © 2007 Elsevier 3 Other metrics Cost (area). Energy and p ower. Predictability: important for embedded systems Pipelining: branch penalty. Memory system (Cache) : cache miss penalty Security: difficult to measure because of the fact that we do not know of a successful attack. High Performance Embedded Computing © 2007 Elsevier 4 Flyyypnn’s taxonomy of processors Single-instruction single-data (SISD): RISC, etc. Single-instruction multiple-data (SIMD): all processors perform the same operations. Multiple-instruction multiple-data (MIMD): homogeneou s or heterogeneou s multiprocessor. Multiple-instruction multiple data (MISD). High Performance Embedded Computing © 2007 Elsevier 5 Other axes of comparison RISC. Emphasis on software Sing le-cyclilittile, simple instructions Register to register: LOAD" and "STORE“ are independent instructions Low cycles per second, Large code sizes Spends more transistors on memory registers CISC. Emphasis on hardware multi-cycle, complex instructions Memory-to-memory: LOAD" and "STORE“ incorporated in instructions High cycles per second Small code sizes Transistors used for storing complex instructions High Performance Embedded Computing © 2007 Elsevier 6 RISC CISC 1. 1-cycle simple instructions 1. multi-cycle complex instructions 2. only LD/ST can access memory 2. any instruction may access memory 3. designed around pipeline 3. designed around instn. set 4. instns. executed by h/w 4. instns interpreted by micro-program 5. -

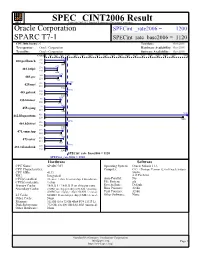

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result spec Copyright 2006-2015 Standard Performance Evaluation Corporation Oracle Corporation SPECint_rate2006 = 1200 SPARC T7-1 SPECint_rate_base2006 = 1120 CPU2006 license: 6 Test date: Oct-2015 Test sponsor: Oracle Corporation Hardware Availability: Oct-2015 Tested by: Oracle Corporation Software Availability: Oct-2015 Copies 0 300 600 900 1200 1600 2000 2400 2800 3200 3600 4000 4400 4800 5200 5600 6000 6400 6800 7600 1100 400.perlbench 192 224 1040 675 401.bzip2 256 224 666 875 403.gcc 160 224 720 1380 429.mcf 128 224 1160 1190 445.gobmk 256 224 1120 854 456.hmmer 96 224 813 1020 458.sjeng 192 224 988 7550 462.libquantum 416 224 7330 1190 464.h264ref 256 224 1150 956 471.omnetpp 255 224 885 986 473.astar 416 224 862 1180 483.xalancbmk 256 224 1140 SPECint_rate_base2006 = 1120 SPECint_rate2006 = 1200 Hardware Software CPU Name: SPARC M7 Operating System: Oracle Solaris 11.3 CPU Characteristics: Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris CPU MHz: 4133 Studio, FPU: Integrated 4/15 Patch Set CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core Auto Parallel: No CPU(s) orderable: 1 chip File System: zfs Primary Cache: 16 KB I + 16 KB D on chip per core System State: Default Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores); Base Pointers: 32-bit 4 MB D on chip per chip (256 KB / 2 cores) Peak Pointers: 32-bit L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores) Other Software: None Other Cache: None Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L) Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD -

Implementing Powerpc Linux on System I Platform

Front cover Implementing POWER Linux on IBM System i Platform Planning and configuring Linux servers on IBM System i platform Linux distribution on IBM System i Platform installation guide Tips to run Linux servers on IBM System i platform Yessong Johng Erwin Earley Rico Franke Vlatko Kosturjak ibm.com/redbooks International Technical Support Organization Implementing POWER Linux on IBM System i Platform February 2007 SG24-6388-01 Note: Before using this information and the product it supports, read the information in “Notices” on page vii. Second Edition (February 2007) This edition applies to i5/OS V5R4, SLES10 and RHEL4. © Copyright International Business Machines Corporation 2005, 2007. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Notices . vii Trademarks . viii Preface . ix The team that wrote this redbook. ix Become a published author . xi Comments welcome. xi Chapter 1. Introduction to Linux on System i platform . 1 1.1 Concepts and terminology . 2 1.1.1 System i platform . 2 1.1.2 Hardware management console . 4 1.1.3 Virtual Partition Manager (VPM) . 10 1.2 Brief introduction to Linux and Linux on System i platform . 12 1.2.1 Linux on System i platform . 12 1.3 Differences between existing Power5-based System i and previous System i models 13 1.3.1 Linux enhancements on Power5 / Power5+ . 14 1.4 Where to go for more information . 15 Chapter 2. Configuration planning . 17 2.1 Concepts and terminology . 18 2.1.1 Processor concepts . -

From Blue Gene to Cell Power.Org Moscow, JSCC Technical Day November 30, 2005

IBM eServer pSeries™ From Blue Gene to Cell Power.org Moscow, JSCC Technical Day November 30, 2005 Dr. Luigi Brochard IBM Distinguished Engineer Deep Computing Architect [email protected] © 2004 IBM Corporation IBM eServer pSeries™ Technology Trends As frequency increase is limited due to power limitation Dual core is a way to : 2 x Peak Performance per chip (and per cycle) But at the expense of frequency (around 20% down) Another way is to increase Flop/cycle © 2004 IBM Corporation IBM eServer pSeries™ IBM innovations POWER : FMA in 1990 with POWER: 2 Flop/cycle/chip Double FMA in 1992 with POWER2 : 4 Flop/cycle/chip Dual core in 2001 with POWER4: 8 Flop/cycle/chip Quadruple core modules in Oct 2005 with POWER5: 16 Flop/cycle/module PowerPC: VMX in 2003 with ppc970FX : 8 Flops/cycle/core, 32bit only Dual VMX+ FMA with pp970MP in 1Q06 Blue Gene: Low frequency , system on a chip, tight integration of thousands of cpus Cell : 8 SIMD units and a ppc970 core on a chip : 64 Flop/cycle/chip © 2004 IBM Corporation IBM eServer pSeries™ Technology Trends As needs diversify, systems are heterogeneous and distributed GRID technologies are an essential part to create cooperative environments based on standards © 2004 IBM Corporation IBM eServer pSeries™ IBM innovations IBM is : a sponsor of Globus Alliances contributing to Globus Tool Kit open souce a founding member of Globus Consortium IBM is extending its products Global file systems : – Multi platform and multi cluster GPFS Meta schedulers : – Multi platform -

IBM Powerpc 970 (A.K.A. G5)

IBM PowerPC 970 (a.k.a. G5) Ref 1 David Benham and Yu-Chung Chen UIC – Department of Computer Science CS 466 PPC 970FX overview ● 64-bit RISC ● 58 million transistors ● 512 KB of L2 cache and 96KB of L1 cache ● 90um process with a die size of 65 sq. mm ● Native 32 bit compatibility ● Maximum clock speed of 2.7 Ghz ● SIMD instruction set (Altivec) ● 42 watts @ 1.8 Ghz (1.3 volts) ● Peak data bandwidth of 6.4 GB per second A picture is worth a 2^10 words (approx.) Ref 2 A little history ● PowerPC processor line is a product of the AIM alliance formed in 1991. (Apple, IBM, and Motorola) ● PPC 601 (G1) - 1993 ● PPC 603 (G2) - 1995 ● PPC 750 (G3) - 1997 ● PPC 7400 (G4) - 1999 ● PPC 970 (G5) - 2002 ● AIM alliance dissolved in 2005 Processor Ref 3 Ref 3 Core details ● 16(int)-25(vector) stage pipeline ● Large number of 'in flight' instructions (various stages of execution) - theoretical limit of 215 instructions ● 512 KB L2 cache ● 96 KB L1 cache – 64 KB I-Cache – 32 KB D-Cache Core details continued ● 10 execution units – 2 load/store operations – 2 fixed-point register-register operations – 2 floating-point operations – 1 branch operation – 1 condition register operation – 1 vector permute operation – 1 vector ALU operation ● 32 64 bit general purpose registers, 32 64 bit floating point registers, 32 128 vector registers Pipeline Ref 4 Benchmarks ● SPEC2000 ● BLAST – Bioinformatics ● Amber / jac - Structure biology ● CFD lab code SPEC CPU2000 ● IBM eServer BladeCenter JS20 ● PPC 970 2.2Ghz ● SPECint2000 ● Base: 986 Peak: 1040 ● SPECfp2000 ● Base: 1178 Peak: 1241 ● Dell PowerEdge 1750 Xeon 3.06Ghz ● SPECint2000 ● Base: 1031 Peak: 1067 Apple’s SPEC Results*2 ● SPECfp2000 ● Base: 1030 Peak: 1044 BLAST Ref. -

Poweredge R940 (Intel Xeon Gold 5122, 3.60 Ghz) Specint Rate Base2006 = 1080 CPU2006 License: 55 Test Date: Jun-2017 Test Sponsor: Dell Inc

SPEC CINT2006 Result spec Copyright 2006-2017 Standard Performance Evaluation Corporation Dell Inc. SPECint_rate2006 = 1150 PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz) SPECint_rate_base2006 = 1080 CPU2006 license: 55 Test date: Jun-2017 Test sponsor: Dell Inc. Hardware Availability: Jul-2017 Tested by: Dell Inc. Software Availability: Nov-2016 Copies 0 1000 2000 3000 4000 5000 6000 7000 8000 9000 10000 11000 12000 13000 14000 15000 16000 18000 917 400.perlbench 32 32 762 521 401.bzip2 32 32 487 808 403.gcc 32 32 802 1480 429.mcf 32 624 445.gobmk 32 2010 456.hmmer 32 32 1530 710 458.sjeng 32 32 658 17700 462.libquantum 32 1170 464.h264ref 32 32 1130 554 471.omnetpp 32 32 509 614 473.astar 32 1430 483.xalancbmk 32 SPECint_rate_base2006 = 1080 SPECint_rate2006 = 1150 Hardware Software CPU Name: Intel Xeon Gold 5122 Operating System: SUSE Linux Enterprise Server 12 SP2 CPU Characteristics: Intel Turbo Boost Technology up to 3.70 GHz 4.4.21-69-default CPU MHz: 3600 Compiler: C/C++: Version 17.0.3.191 of Intel C/C++ FPU: Integrated Compiler for Linux CPU(s) enabled: 16 cores, 4 chips, 4 cores/chip, 2 threads/core Auto Parallel: Yes CPU(s) orderable: 2,4 chip File System: xfs Primary Cache: 32 KB I + 32 KB D on chip per core System State: Run level 3 (multi-user) Secondary Cache: 1 MB I+D on chip per core Base Pointers: 32-bit L3 Cache: 16.5 MB I+D on chip per chip Peak Pointers: 32/64-bit Other Cache: None Other Software: Microquill SmartHeap V10.2 Memory: 768 GB (48 x 16 GB 2Rx8 PC4-2666V-R) Disk Subsystem: 1 x 960 GB SATA SSD Other Hardware: None Standard Performance Evaluation Corporation [email protected] Page 1 http://www.spec.org/ SPEC CINT2006 Result spec Copyright 2006-2017 Standard Performance Evaluation Corporation Dell Inc.