Learning Player Strategies Using Weak Constraints


Recommended publications


Award -...CHESSPROBLEMS.CA

...CHESSPROBLEMS.CA Contents. ISSUE 14 (JULY 2018). 1 Originals 667: 2018 Informal Tourney 667; Hors Concours 673. 2 ChessProblems.ca Bulletin TT6 Award 674. 3 Articles 678: Arno Tüngler: Series-mover Artists: Manfred Rittirsch 678; Andreas Thoma: Proca variations with e1 and e3 681; Jeff Coakley & Andrey Frolkin: Four Rebuses For The Bulletin 684; Arno Tüngler: Record Breakers VI 693; Adrian Storisteanu: Lab Notes 695. 4 Last Page 699: Pauly's Comet 699. Editor: Cornel Pacurar. Collaborators: Elke Rehder, Adrian Storisteanu, Arno Tüngler. Originals: [email protected] Articles: [email protected] Correspondence: [email protected] Rook Endgame III. ISSN 2292-8324 [Mixed technique on paper, © Elke Rehder, http://www.elke-rehder.de. Reproduced with permission.] ChessProblems.ca Bulletin, Issue 14. ORIGINALS. 2018 Informal Tourney. ChessProblems.ca's annual Informal Tourney is open for series-movers of any type and with any fairy conditions and pieces. Hors concours compositions (any genre) are also welcome! Send to: [email protected]. 2018 Judge: Manfred Rittirsch (DEU). 2018 Tourney Participants: 1. Alberto Armeni (ITA) 2. Erich Bartel (DEU) 3. Roméo Bedoni (FRA) 4. Geoff Foster (AUS) 5. Gunter Jordan (DEU) 6. Luboš Kekely (SVK) 7. [Diagrams T369, T366, T367, T368; composers named: Roméo Bedoni, Václav Kotěšovec, Sébastien Luce. Stipulations: C+ (1+5) ser-h#13; C+ (6+2) ser-!=17; C+ (5+2) ser-!=18; C- (1+16) ser-=67. Conditions and fairy pieces listed: No white king, Madrasi, Frankfurt Chess, Grasshopper, Nightrider, Leo, Nightriderhopper, Royal pawn d6; 2 solutions.] -

2012 Fall Catalog

Green Valley Recreation Fall Course Catalog The Leader in providing recreation, education and social activities! October - December 2012 www.gvrec.org Over 40 New Classes offered this fall! Registration begins Monday, September 10 1 Dream! Discover! Play! Green Valley Recreation, Inc. GVR Facility Map Board of Directors Social Center Satellite Center 1. Abrego North Rose Theisen - President 1601 N. Abrego Drive N Interstate 19 Joyce Finkelstein - Vice President 2. Abrego South Duval Mine Road Linda Sparks - Secretary 1655 S. Abrego Drive Joyce Bulau - Asst. Secretary 3. Canoa Hills Social Center Erin McGinnis - Treasurer 3660 S. Camino del Sol 1. Abrego John Haggerty - Asst. Treasurer Office - 625-6200 North 4. Casa 5. Casa Jerry Belenker 4. Casa Paloma I Paloma I 9. Las Campanas Paloma II Russ Carpenter 400 W. Circulo del Paladin La Canada Esperanza Chuck Catino 5. Casa Paloma II Abrego Drive 8. East Blvd. Marge Garneau 330 N. Calle del Banderolas Center 625-9909 10. Madera Mark Haskoe Vista Tom Wilsted 6. Continental Vistas 906 W. Camino Guarina 12. West Center 7. Desert Hills Social Center - Executive Director 2980 S. Camino del Sol 6 Continental Office - 625-5221 Vistas 13. Member Lanny Sloan Services Center 8. East Social Center Continental Road 7 S. Abrego Drive Camino del Sol Road East Frontage Road West Frontage Recreation Supervisor Office - 625-4641 Instructional Courses 9. Las Campanas 565 W. Belltower Drive Carolyn Hupp Office - 648-7669 10. Madera Vista 440 S. Camino del Portillo 2. Abrego Catalog Design by: Camino Encanto South 11. Santa Rita Springs 7. Desert Hills Shelly Jackson 921 W. -

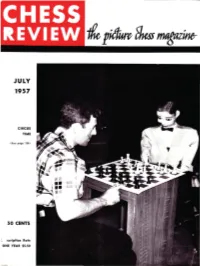

CHESS REVIEW but We Can Give a Bit More in a Few 250 West 57th Street, New York 19, N

JULY 1957. CIRCUS TIME (See page 196). 50 CENTS. Subscription Rate: ONE YEAR $5.50. From the "Amenities and Background of Chess-Play" by Ewart Napier: ECHOES FROM THE PAST. From Leipsic Congress, 1894: A most formidable opponent was Paul Lipke in his prime, original and piercing and effective. Quite typical of his temper is the wild Knight foray at 8. Of course, the meek third move of Black deserves mussing up; Pillsbury adopted the Tchigorin move, 3. N-B3. An Exhibition Game: This instructive game displays these classical rivals in holiday mood, exploring a dangerous Queen sacrifice. Played at Augsburg, Germany, in 1900, at thirty moves an hour. FALKBEER COUNTER GAMBIT. QUEEN'S PAWN GAME. Dr. E. Lasker, H. N. Pillsbury; P. Lipke, E. Schiffers. White Black White Black 1 P_K4 P-K4 9 8-'12 B_ KB4 P_Q4 6 P_ KB4 2 P_KB4 P-Q4 10 0-0- 0 B,N 1 P-Q4 8-K2 Mate announced in eight. 2 P- K3 KN_ B3 7 N_ R3 3 P xQP P-K5 11 Q- N4 P_ K B4 0 - 0 8 N_N 5 K N_B3 12 Q-N3 N-Q2 3 B-Q3 P- K 3? P-K R3 4 Q N- B3 p,p 5 Q_ K2 B-Q3 13 8-83 N-B3 4 N-Q2 P-B4 9 P-K R4 6 P_Q3 0-0 14 N-R3 N_ N5 From Leipsic Congress. -

Kirsch, Gesa E., Ed. Ethics and Representation In

DOCUMENT RESUME ED 400 543 CS 215 516 AUTHOR Mortensen, Peter, Ed.; Kirsch, Gesa E., Ed. TITLE Ethics and Representation in Qualitative Studies of Literacy. INSTITUTION National Council of Teachers of English, Urbana, Ill. REPORT NO ISBN-0-8141-1596-9 PUB DATE 96 NOTE 347p.; With a collaborative foreword led by Andrea A. Lunsford and an afterword by Ruth E. Ray. AVAILABLE FROM National Council of Teachers of English, 1111 W. Kenyon Road, Urbana, IL 61801-1096 (Stock No. 15969: $21.95 members, $28.95 nonmembers). PUB TYPE Collected Works General (020) Reports Descriptive (141) EDRS PRICE MF01/PC14 Plus Postage. DESCRIPTORS *Case Studies; Elementary Secondary Education; *Ethics; *Ethnography; Higher Education; Participant Observation; *Qualitative Research; *Research Methodology; Research Problems; Social Influences; *Writing Research IDENTIFIERS Researcher Role ABSTRACT Reflecting on the practice of qualitative literacy research, this book presents 14 essays that address the most pressing questions faced by qualitative researchers today: how to represent others and themselves in research narratives; how to address ethical dilemmas in research-participant relations; and how to deal with various rhetorical, institutional, and historical constraints on research. After a foreword ("Considering Research Methods in Composition and Rhetoric" by Andrea A. Lunsford and others) and an introduction ("Reflections on Methodology in Literacy Studies" by the editors), essays in the book are (1) "Seduction and Betrayal in Qualitative Research" (Thomas Newkirk); (2) "Still-Life: Representations and Silences in the Participant-Observer Role" (Brenda Jo Brueggemann); (3) "Dealing with the Data: Ethical Issues in Case Study Research" (Cheri L. Williams); (4) "'Everything's Negotiable': Collaboration and Conflict in Composition Research" (Russel K. -

Havannah and Twixt Are Pspace-Complete

Havannah and TwixT are pspace-complete Édouard Bonnet, Florian Jamain, and Abdallah Saffidine Lamsade, Université Paris-Dauphine, {edouard.bonnet,abdallah.saffidine}@dauphine.fr [email protected] Abstract. Numerous popular abstract strategy games ranging from hex and havannah to lines of action belong to the class of connection games. Still, very few complexity results on such games have been obtained since hex was proved pspace-complete in the early eighties. We study the complexity of two connection games among the most widely played. Namely, we prove that havannah and twixt are pspace-complete. The proof for havannah involves a reduction from generalized geography and is based solely on ring-threats to represent the input graph. On the other hand, the reduction for twixt builds up on previous work as it is a straightforward encoding of hex. Keywords: Complexity, Connection game, Havannah, TwixT, Generalized Geography, Hex, pspace 1 Introduction A connection game is a kind of abstract strategy game in which players try to make a specific type of connection with their pieces [5]. In many connection games, the goal is to connect two opposite sides of a board. In these games, players take turns placing or/and moving pieces until they connect the two sides of the board. Hex, twixt, and slither are typical examples of this type of game. However, a connection game can also involve completing a loop (havannah) or connecting all the pieces of a color (lines of action). A typical process in studying an abstract strategy game, and in particular a connection game, is to develop an artificial player for it by adapting standard techniques from the game search literature, in particular the classical Alpha-Beta algorithm [1] or the more recent Monte Carlo Tree Search paradigm [6,2]. -

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE 2020 US CHESS YEARBOOK The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected]. U.S. CHAMPIONS 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hikaru WESTERN OPEN BECAME THE U.S. OPEN Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. 1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel 2020 Wesley So Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 ONLINE: COVID-19 • OCTOBER 2020 1956 Arthur Bisguier, James Sherwin • 1957 • Robert Fischer, Arthur Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur Bisguier • 1958 E. -

1 Jess Rudolph Shogi

Jess Rudolph Shogi – the Chess of Japan Its History and Variants When chess was first invented in India by the end of the sixth century of the current era, probably no one knew just how popular or widespread the game would become. Only a short time into the second millennium – if not earlier – chess was being played as far as the most distant lands of the known world – the Atlantic coast of Europe and Japan. Although virtually no contact existed for centuries to come between these lands, people from both cultures were playing a game that was very similar; in Europe it was to become the chess most westerners know today and in Japan it was shogi – the Generals Game. Though shogi has many things in common with many other chess variants, those elements are not always clear because of the many differences it also has. Sadly, how the changes came about is not well known since much of the early history of shogi has been lost. In some ways the game is more similar to the Indian chaturanga than its neighboring cousin in China – xiangqi. In other ways, it’s closer to xiangqi than to any other game. In still other ways it has similarities to the Thai chess of makruk. Most likely it has elements from all these lands. It is generally believed that chess came to Japan from China through the trade routes in Korea in more than one wave, the earliest being by the end of the tenth century, possibly as early as the eighth. -

Chess & Bridge

2013 Catalogue Chess & Bridge Plus Backgammon Poker and other traditional games Contents CONTENTS WAYS TO ORDER Chess Section Call our Order Line 3-9 Wooden Chess Sets 10-11 Wooden Chess Boards 020 7288 1305 or 12 Chess Boxes 13 Chess Tables 020 7486 7015 14-17 Wooden Chess Combinations 9.30am-6pm Monday - Saturday 18 Miscellaneous Sets 11am - 5pm Sundays 19 Decorative & Themed Chess Sets 20-21 Travel Sets 22 Giant Chess Sets Shop online 23-25 Chess Clocks www.chess.co.uk/shop 26-28 Plastic Chess Sets & Combinations or 29 Demonstration Chess Boards www.bridgeshop.com 30-31 Stationery, Medals & Trophies 32 Chess T-Shirts 33-37 Chess DVDs Post the order form to: 38-39 Chess Software: Playing Programs 40 Chess Software: ChessBase 12 Chess & Bridge 41-43 Chess Software: Fritz Media System 44 Baker Street 44-45 Chess Software: from Chess Assistant 46 Recommendations for Junior Players London, W1U 7RT 47 Subscribe to Chess Magazine 48-49 Order Form 50 Subscribe to BRIDGE Magazine REASONS TO SHOP ONLINE 51 Recommendations for Junior Players - New items added each and every week 52-55 Chess Computers - Many more items online 56-60 Bargain Chess Books 61-66 Chess Books - Larger and alternative images for most items - Full descriptions of each item Bridge Section - Exclusive website offers on selected items 68 Bridge Tables & Cloths 69-70 Bridge Equipment - Pay securely via Debit/Credit Card or PayPal 71-72 Bridge Software: Playing Programs 73 Bridge Software: Instructional 74-77 Decorative Playing Cards 78-83 Gift Ideas & Bridge DVDs 84-86 Bargain Bridge Books 87 Recommended Bridge Books 88-89 Bridge Books by Subject 90-91 Backgammon 92 Go 93 Poker 94 Other Games 95 Website Information 96 Retail shop information page 2 TO ORDER 020 7288 1305 or 020 7486 7015 Wooden Chess Sets A LITTLE MORE INFORMATION ABOUT OUR CHESS SETS.. -

Abstract Games and IQ Puzzles

CZECH OPEN 2019 IX. Festival of abstract games and IQ puzzles Part of 30th International Chess and Games Festival Pardubice, 11th - 18th and 23rd - 27th July 2019 Organizers: International Grandmaster David Kotin in cooperation with AVE-KONTAKT s.r.o. Rules of individual games and other information: http://www.mankala.cz/, http://www.czechopen.net The festival consists of open playing, small tournaments and other activities. We have dozens of abstract games for you to play. Many are from boxed sets while some can be played on our home made boards. We will organize tournaments in any of the games we have available for interested players. This festival will show you the variety, strategy and tactics of many different abstract strategy games including such popular categories as dama variants, chess variants and Mancala games, while encouraging you to train your brain by playing more than just one favourite game. Hopefully, you will learn how useful it is to learn many new and different ideas which help develop your imagination and creativity. Program: A) Open playing Open playing is playing for fun and is available during the entire festival. You can play daily, usually from the late morning to early evening. Participation is free of charge. B) Tournaments We will be very pleased if you participate by playing in one or more of our tournaments. Virtually all of the games on offer are easy to learn yet challenging. For example, try Octi, Oska, Teeko, Borderline etc. Suitable time limit to enable players to record games as needed. -

CHESS by Peter Mesehter

Every six months the Yugoslav Chess Federation brings out a new book of the finest games played during the preceding half year. A unique, newly-devised system of annotating games by coded signs avoids all language obstacles. This makes possible a universally usable and yet reasonably-priced book which brings the newest ideas in the openings and throughout the game to every chess enthusiast more quickly than ever before. Book 6 contains 821 games played between July 1 and December 31, 1968. A great selection of theoretically important games from 28 tournaments and matches including the Lugano Olympiad, World Student Team Championship (Ybbs), Mar del Plata, Netanya, Amsterdam, Skopje, Debrecen, Sombor, Havana, Vinkovci, Belgrade, Palma de Majorca, and Athens. Special New Feature! Beginning with Book 6, each CHESS INFORMANT contains a section for FIDE communications, replacing the former official publication FIDE REVIEW. The FIDE section in this issue contains complete Regulations for the Tournaments and Matches for the Men's and Ladies' World Championships. Prescribes the entire competition system from Zonal and Interzonal Tournaments through the Candidates Matches to the World Championship Match. Book 6 has sections featuring 51 brilliant Combinations and (S Endings from actual play during the preceding six months. Another interesting feature is a table listing in order the Ten Best Games from Book 5 and showing how each of the eight Grandmasters on the jury voted. Contains an English-language introduction, explanation of the annotation code, index of players and commentators, and list of tournaments and matches. -

Read Book Japanese Chess: the Game of Shogi Ebook, Epub

JAPANESE CHESS: THE GAME OF SHOGI PDF, EPUB, EBOOK Trevor Leggett | 128 pages | 01 May 2009 | Tuttle Shokai Inc | 9784805310366 | English | Kanagawa, Japan Japanese Chess: The Game of Shogi PDF Book Memorial Verkouille A collection of 21 amateur shogi matches played in Ghent, Belgium. Retrieved 28 November In particular, the Two Pawn violation is most common illegal move played by professional players. A is the top class. This collection contains seven professional matches. Unlike in other shogi variants, in taikyoku the tengu cannot move orthogonally, and therefore can only reach half of the squares on the board. There are no discussion topics on this book yet. Visit website. The promoted silver. Brian Pagano rated it it was ok Oct 15, Checkmate by Black. Get A Copy. Kai Sanz rated it really liked it May 14, Cross Field Inc. This is a collection of amateur games that were played in the mid 's. The Oza tournament began in , but did not bestow a title until Want to Read Currently Reading Read. This article may be too long to read and navigate comfortably. White tiger. Shogi players are expected to follow etiquette in addition to rules explicitly described. The promoted lance. Illegal moves are also uncommon in professional games although this may not be true with amateur players especially beginners. Download as PDF Printable version. The Verge. It has not been shown that taikyoku shogi was ever widely played. Thus, the end of the endgame was strategically about trying to keep White's points above the point threshold. You might see something about Gene Davis Software on them, but they probably work. -

A Companion to Digital Art WILEY BLACKWELL COMPANIONS to ART HISTORY

A Companion to Digital Art WILEY BLACKWELL COMPANIONS TO ART HISTORY These invigorating reference volumes chart the influence of key ideas, discourses, and theories on art, and the way that it is taught, thought of, and talked about throughout the English‐speaking world. Each volume brings together a team of respected international scholars to debate the state of research within traditional subfields of art history as well as in more innovative, thematic configurations. Representing the best of the scholarship governing the field and pointing toward future trends and across disciplines, the Blackwell Companions to Art History series provides a magisterial, state‐ of‐the‐art synthesis of art history. 1 A Companion to Contemporary Art since 1945 edited by Amelia Jones 2 A Companion to Medieval Art edited by Conrad Rudolph 3 A Companion to Asian Art and Architecture edited by Rebecca M. Brown and Deborah S. Hutton 4 A Companion to Renaissance and Baroque Art edited by Babette Bohn and James M. Saslow 5 A Companion to British Art: 1600 to the Present edited by Dana Arnold and David Peters Corbett 6 A Companion to Modern African Art edited by Gitti Salami and Monica Blackmun Visonà 7 A Companion to Chinese Art edited by Martin J. Powers and Katherine R. Tsiang 8 A Companion to American Art edited by John Davis, Jennifer A. Greenhill and Jason D. LaFountain 9 A Companion to Digital Art edited by Christiane Paul 10 A Companion to Public Art edited by Cher Krause Knight and Harriet F. Senie A Companion to Digital Art Edited by Christiane Paul