Assessing and Understanding Performance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


IBM Z Systems Introduction May 2017

IBM z Systems Introduction, May 2017. IBM z13s and IBM z13 Frequently Asked Questions. Worldwide. ZSQ03076-USEN-15. Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container ...

Microbenchmarks in Big Data

Microbenchmark. Nicolas Poggi, Databricks Inc., Amsterdam, NL; BarcelonaTech (UPC), Barcelona, Spain.

Synonyms: Component benchmark; Functional benchmark; Test.

Definition: A microbenchmark is either a program or routine to measure and test the performance of a single component or task. Microbenchmarks are used to measure simple and well-defined quantities such as elapsed time, rate of operations, bandwidth, or latency. Typically, microbenchmarks were associated with the testing of individual software subroutines or lower-level hardware components such as the CPU, and for a short period of time. However, in the BigData scope, the term microbenchmarking is broadened to include the cluster (a group of networked computers) acting as a single system, as well as the testing of frameworks, algorithms, and logical and distributed components, for a longer period and larger data sizes.

Overview: Microbenchmarks constitute the first line of performance testing. Through them, we can ensure the proper and timely functioning of the different individual components that make up our system. The term micro, of course, depends on the problem size. In BigData we broaden the concept to cover the testing of large-scale distributed systems and computing frameworks. This chapter presents the historical background, evolution, central ideas, and current key applications of the field concerning BigData.

Historical Background. Origins and CPU-Oriented Benchmarks: Microbenchmarks are closer to both hardware and software testing than to competitive benchmarking, as opposed to application-level (macro) benchmarking. For this reason, we can trace microbenchmarking influence to the hardware testing discipline, as can be found in Sumne (1974). Furthermore, we can also find influence in the origins of software testing methodology during the 1970s, including works such as Chow (1978). One of the first examples of a microbenchmark clearly distinguishable from software testing is the Whetstone benchmark, developed during the late 1960s and published ...
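The quantities named in the definition (elapsed time, rate of operations, latency) can be illustrated with a small timing harness. This is only a sketch: `microbenchmark` and `sample_task` are hypothetical names that do not come from the excerpt, and the task being timed is an arbitrary stand-in for a single component.

```python
import time


def microbenchmark(func, iterations=100_000):
    """Call func repeatedly and report elapsed time, rate, and mean latency."""
    start = time.perf_counter()
    for _ in range(iterations):
        func()
    elapsed = time.perf_counter() - start
    return {
        "elapsed_s": elapsed,                   # total wall-clock time
        "ops_per_s": iterations / elapsed if elapsed > 0 else float("inf"),
        "latency_s": elapsed / iterations,      # mean time per call
    }


def sample_task():
    # Hypothetical component under test: sum a small range of integers.
    return sum(range(100))


if __name__ == "__main__":
    print(microbenchmark(sample_task, iterations=10_000))
```

The measured numbers vary between runs and machines, so such a harness is usually run several times and the results compared rather than read once.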

Overview of the SPEC Benchmarks

Chapter 9: Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation.

“The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.”

Standard Performance Evaluation Corporation (SPEC) was founded in October 1988 by Apollo, Hewlett-Packard, MIPS Computer Systems, and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks.

9.1 Historical Perspective. Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek.

9.1.1 Simple CPU Benchmarks. Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. ...
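A short calculation may help show why MIPS alone can mislead when instructions are "not equal". The instruction counts and run times below are invented purely for illustration (they are not from the chapter), and `mips` is a hypothetical helper.

```python
def mips(instruction_count, execution_time_s):
    """Millions of instructions per second for one program run."""
    return instruction_count / (execution_time_s * 1e6)


# Hypothetical figures: the same program compiled for two machines.
# The CISC build needs fewer, more powerful instructions than the RISC build.
cisc = {"instructions": 50e6, "time_s": 1.0}
risc = {"instructions": 120e6, "time_s": 1.0}

cisc_mips = mips(cisc["instructions"], cisc["time_s"])
risc_mips = mips(risc["instructions"], risc["time_s"])

if __name__ == "__main__":
    # Both runs finish in the same time, yet the MIPS ratings differ widely.
    print(f"CISC: {cisc_mips:.0f} MIPS, RISC: {risc_mips:.0f} MIPS")
```

Under these assumed numbers the two machines are equally fast on the program, even though one reports more than twice the MIPS of the other.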

i.MX 8QuadXPlus Power and Performance

NXP Semiconductors. Document Number: AN12338. Application Note, Rev. 4, 04/2020. i.MX 8QuadXPlus Power and Performance.

1. Introduction. This application note helps you to design power management systems. It illustrates the current drain measurements of the i.MX 8QuadXPlus Applications Processors taken on the NXP Multisensory Evaluation Kit (MEK) Platform through several use cases. This document provides details on the performance and power consumption of the i.MX 8QuadXPlus processors under a variety of low- and high-power modes. The data presented in this application note is based on ...

Contents: 1. Introduction; 2. Overview of i.MX 8QuadXPlus voltage supplies; 3. Power measurement of the i.MX 8QuadXPlus processor; 3.1. VCC_SCU_1V8 power; 3.2. VCC_DDRIO power; 3.3. VCC_CPU/VCC_GPU/VCC_MAIN power; 3.4. Temperature measurements; 3.5. Hardware and software used; 3.6. Measuring points on the MEK platform; 4. Use cases and measurement results; 4.1. Low-power mode power consumption (Key States or ‘KS’); 4.2. Complex use case power consumption (Arm Core, GPU active); 5. SOC ...

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design, Fall 2013. Performance of a Computer (Chapter 4). Vishwani D. Agrawal & Victor P. Nelson, Department of Electrical and Computer Engineering, Auburn University, Auburn, AL 36849.

What is Performance? Response time: the time between the start and completion of a task. Throughput: the total amount of work done in a given time. Some performance measures: MIPS (million instructions per second); MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS ($10^{12}$), etc.; SPEC (Standard Performance Evaluation Corporation) benchmarks; LINPACK benchmarks (floating point computing, used for supercomputers); synthetic benchmarks.

Small and Large Numbers

| Small | Prefix | Symbol | Large | Prefix | Symbol |
|---|---|---|---|---|---|
| $10^{-3}$ | milli | m | $10^{3}$ | kilo | k |
| $10^{-6}$ | micro | μ | $10^{6}$ | mega | M |
| $10^{-9}$ | nano | n | $10^{9}$ | giga | G |
| $10^{-12}$ | pico | p | $10^{12}$ | tera | T |
| $10^{-15}$ | femto | f | $10^{15}$ | peta | P |
| $10^{-18}$ | atto | | $10^{18}$ | exa | |
| $10^{-21}$ | zepto | | $10^{21}$ | zetta | |
| $10^{-24}$ | yocto | | $10^{24}$ | yotta | |

Computer Memory Size

| Power of two | Number | Prefix | bits | bytes |
|---|---|---|---|---|
| $2^{10}$ | 1,024 | K | Kb | KB |
| $2^{20}$ | 1,048,576 | M | Mb | MB |
| $2^{30}$ | 1,073,741,824 | G | Gb | GB |
| $2^{40}$ | 1,099,511,627,776 | T | Tb | TB |

Units for Measuring Performance. Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps). Clock cycle: the period of the hardware clock. Example: one clock cycle means 1 nanosecond for a 1 GHz clock frequency (or 1 GHz clock rate). CPU time = (CPU clock cycles)/(clock rate). Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction. ...
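The relations on the last slide (clock period, CPU time, CPI) can be checked with a few lines of arithmetic. This is a sketch only: the function names are hypothetical, and the example program (2 billion instructions at a CPI of 1.5) is an assumed workload, not one taken from the lecture.

```python
def clock_period_s(clock_rate_hz):
    """Duration of one clock cycle in seconds."""
    return 1.0 / clock_rate_hz


def cpu_time_s(clock_cycles, clock_rate_hz):
    """CPU time = (CPU clock cycles) / (clock rate)."""
    return clock_cycles / clock_rate_hz


def clock_cycles(instruction_count, cpi):
    """Total cycles from instruction count and average cycles per instruction."""
    return instruction_count * cpi


if __name__ == "__main__":
    rate = 1e9  # 1 GHz clock, as in the slide's example
    print(clock_period_s(rate))  # one cycle lasts one nanosecond

    # Assumed workload: 2e9 instructions at an average CPI of 1.5.
    cycles = clock_cycles(2e9, 1.5)
    print(cpu_time_s(cycles, rate))  # seconds of CPU time
```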

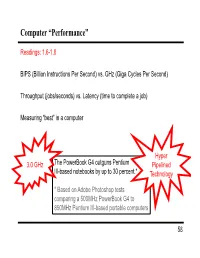

Computer “Performance”

Computer “Performance”. Readings: 1.6-1.8. BIPS (Billion Instructions Per Second) vs. GHz (Giga Cycles Per Second). Throughput (jobs/second) vs. latency (time to complete a job).

Measuring “best” in a computer. Advertisement text: “Hyper Pipelined Technology”, “3.0 GHz”, and “The PowerBook G4 outguns Pentium III-based notebooks by up to 30 percent.*” (* Based on Adobe Photoshop tests comparing a 500MHz PowerBook G4 to 850MHz Pentium III-based portable computers.)

Performance Example: Homebuilders

| Builder | Time per House | Houses Per Month | House Options | Dollars Per House |
|---|---|---|---|---|
| Self-build | 24 months | 1/24 | Infinite | $200,000 |
| Contractor | 3 months | 1 | 100 | $400,000 |
| Prefab | 6 months | 1,000 | 1 | $250,000 |

Which is the “best” home builder? Homeowner on a budget? Rebuilding Haiti? Moving to wilds of Alaska? Which is the “speediest” builder? Latency: how fast is one house built? Throughput: how long will it take to build a large number of houses?

Computer Performance. Primary goal: execution time (time from program start to program completion).

$$\text{Performance} = \frac{1}{\text{ExecutionTime}}$$

To compare machines, we say “X is n times faster than Y”:

$$n = \frac{\text{Performance}_x}{\text{Performance}_y} = \frac{\text{ExecutionTime}_y}{\text{ExecutionTime}_x}$$

Example: Machines Orange and Grape run a program. Orange takes 5 seconds, Grape takes 10 seconds. Orange is _____ times faster than Grape.

Execution Time. Elapsed time counts everything (disk and memory accesses, I/O, etc.); it is a useful number, but often not good for comparison purposes. CPU time doesn't count I/O or time spent running other programs; it can be broken up into system time and user time. Example: Unix “time” command:

linux15.ee.washington.edu> time javac CircuitViewer.java
3.370u 0.570s 0:12.44 31.6%

Our focus: user CPU time, the time spent executing the lines of code that are "in" our program.

CPU Time.

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \text{Clock period}$$

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \frac{1}{\text{Clock rate}}$$

Application example: A program takes 10 seconds on computer Orange, with a 400MHz clock. ...
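Two of the calculations on these slides are easy to reproduce: the "n times faster" ratio and the share of elapsed time spent on the CPU in the `time` output. The sketch below uses hypothetical helper names; reading the `time` fields as user seconds, system seconds, and elapsed time is an assumption about that shell's output format.

```python
def times_faster(time_x_s, time_y_s):
    """Return n such that X is n times faster than Y (n = T_y / T_x)."""
    return time_y_s / time_x_s


def cpu_utilization(user_s, system_s, elapsed_s):
    """Fraction of elapsed time spent as user plus system CPU time."""
    return (user_s + system_s) / elapsed_s


if __name__ == "__main__":
    # Orange takes 5 seconds, Grape takes 10 seconds.
    print(times_faster(5.0, 10.0))

    # Fields from the slide: 3.370u 0.570s 0:12.44 (12.44 s elapsed).
    print(f"{cpu_utilization(3.370, 0.570, 12.44):.1%}")
```

The computed utilization is roughly 31.7%, in line with the 31.6% printed on the slide; the rest of the elapsed time went to I/O and other programs.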

Trends in Processor Architecture

Trends in Processor Architecture. Antonio González, Universitat Politècnica de Catalunya, Barcelona, Spain.

1. Past Trends. Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, a term that refers to a processor that is implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 kHz, and delivered 92,000 instructions per second while dissipating around 0.5 watts. Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, on the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12]. These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed a similar amount to the global gains. The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basis of this technology scaling consists of reducing transistor dimensions by 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]). ...
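The two numerical claims in this passage follow from simple arithmetic, which the sketch below reproduces. The variable names are illustrative only; the inputs (50% per year, 20 years, 30% linear shrink) are the figures quoted in the text.

```python
import math

# Compound growth: 50% per year sustained for two decades.
annual_growth = 1.5
years = 20
cumulative_growth = annual_growth ** years
orders_of_magnitude = math.log10(cumulative_growth)

# Dennard-style scaling: each linear dimension shrinks by 30%,
# so a transistor's area scales by 0.7 * 0.7 and density by the inverse.
linear_scale = 0.7
area_scale = linear_scale ** 2
density_gain = 1.0 / area_scale

if __name__ == "__main__":
    print(f"Cumulative growth: {cumulative_growth:.0f}x "
          f"({orders_of_magnitude:.2f} orders of magnitude)")
    print(f"Area scale: {area_scale:.2f}, density gain: {density_gain:.2f}x")
```

A 1.5x yearly gain compounds to a factor of a few thousand over 20 years, and a 0.7x linear shrink roughly halves the area per transistor, which is where the density doubling comes from.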

Advanced Computer Architecture Lecture No. 30

Advanced Computer Architecture-CS501. Advanced Computer Architecture, Lecture No. 30.

Reading Material: Vincent P. Heuring & Harry F. Jordan, Computer Systems Design and Architecture, Chapter 8 (8.3.3, 8.4).

Summary: Nested Interrupts; Interrupt Mask; DMA.

Nested Interrupts (read from book, Jordan page 397). Interrupt Mask (read from book, Jordan page 397). Priority Mask (read from book, Jordan page 398).

Examples. Example # 1 (adopted from [H&P org]): Assume that three I/O devices are connected to a 32-bit, 10 MIPS CPU. The first device is a hard drive with a maximum transfer rate of 1MB/sec. It has a 32-bit bus. The second device is a floppy drive with a transfer rate of 25KB/sec over a 16-bit bus, and the third device is a keyboard that must be polled thirty times per second. Assuming that the polling operation requires 20 instructions for each I/O device, determine the percentage of CPU time required to poll each device.

Solution: The hard drive can transfer 1MB/sec, or 250 K 32-bit words every second. Thus, this hard drive should be polled at no less than this rate. Using $1\text{K} = 2^{10}$, the number of CPU instructions required would be $250 \times 2^{10} \times 20 = 5{,}120{,}000$ instructions per second. The percentage of CPU time required for polling is $(5.12 \times 10^{6}) / (10 \times 10^{6}) = 51.2\%$. The floppy disk can transfer $25\text{K}/2 = 12.5 \times 2^{10}$ half-words per second. It should be polled at no less than this rate. ...
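The solution method can be written as a short calculation. In this sketch the constant and function names are hypothetical; the hard-drive figure reproduces the 51.2% worked out in the lecture, while the floppy and keyboard values are simply what the same formula gives for the rates stated in the problem, since the excerpt stops before showing them.

```python
CPU_INSTRUCTIONS_PER_S = 10_000_000  # 10 MIPS CPU
POLL_INSTRUCTIONS = 20               # instructions per polling operation
K = 2 ** 10                          # the lecture uses 1K = 2^10


def polling_share(polls_per_second):
    """Fraction of CPU time spent polling a device at the given rate."""
    return polls_per_second * POLL_INSTRUCTIONS / CPU_INSTRUCTIONS_PER_S


hard_drive = polling_share(250 * K)   # 250 K 32-bit words per second
floppy = polling_share(12.5 * K)      # 12.5 K 16-bit half-words per second
keyboard = polling_share(30)          # polled thirty times per second

if __name__ == "__main__":
    for name, share in [("hard drive", hard_drive),
                        ("floppy", floppy),
                        ("keyboard", keyboard)]:
        print(f"{name}: {share:.4%} of CPU time")
```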

Srovnání Kvality Virtuálních Strojů Assessment of Virtual Machines Performance

VŠB - Technical University of Ostrava, Faculty of Electrical Engineering and Computer Science, Department of Computer Science. Srovnání kvality virtuálních strojů (Assessment of Virtual Machines Performance). 2011, Lenka Novotná.

I declare that I wrote this bachelor's thesis independently. I have cited all literary sources and publications that I drew on. Ostrava, 20 April 2011, Lenka Novotná.

I would like to thank the supervisor of my bachelor's thesis, Ing. Petr Olivka, for his help, factual comments, and all the time he devoted to me.

Abstract (translated from the Czech): The main goal of this bachelor's thesis is to explain the terms virtualization and virtual machine, and to describe and compare the available virtualization tools used in the worldwide field of information technology. The thesis deals mainly with comparing the performance of virtual machines for the desktop operating systems MS Windows and Linux. Testing focused on three main factors: network throughput, measured with the Iperf application; disk operation performance, measured with the IOZone program; and the allocation of CPU to processes, tested with the well-known Dhrystone and Whetstone benchmark applications. All of these measurements were carried out on three virtualization platforms: VirtualBox OSE, VMware Player, and KVM with QEMU.

Keywords: virtualization, virtual machine, VirtualBox, VMware, KVM, QEMU, full virtualization, paravirtualization, partial virtualization, hardware-assisted virtualization, operating-system-level virtualization, CPU performance measurement, network throughput measurement, disk operation measurement, Dhrystone, Whetstone, Iperf, IOZone.

Abstract (English original): The main goal of this thesis is to explain the terms virtualization and virtual machine, and to describe and compare the available virtualization resources that can be used in the worldwide field of information technology. ...

Xilinx Running the Dhrystone 2.1 Benchmark on a Virtex-II Pro

Product Not Recommended for New Designs. Application Note: Virtex-II Pro Device. Running the Dhrystone 2.1 Benchmark on a Virtex-II Pro PowerPC Processor. XAPP507 (v1.0), July 11, 2005. Author: Paul Glover.

Summary: This application note describes a working Virtex™-II Pro PowerPC™ system that uses the Dhrystone benchmark and the reference design on which the system runs. The Dhrystone benchmark is commonly used to measure CPU performance.

Introduction: The Dhrystone benchmark is a general-performance benchmark used to evaluate processor execution time. This benchmark tests the integer performance of a CPU and the optimization capabilities of the compiler used to generate the code. The output from the benchmark is the number of Dhrystones per second (that is, the number of iterations of the main code loop per second). This application note describes a PowerPC design created with Embedded Development Kit (EDK) 7.1 that runs the Dhrystone benchmark, producing 600+ DMIPS (Dhrystone Millions of Instructions Per Second) at 400 MHz.

Prerequisites.
Required Software:
• Xilinx ISE 7.1i SP1
• Xilinx EDK 7.1i SP1
• WindRiver Diab DCC 5.2.1.0 or later (Note: The Diab compiler for the PowerPC processor must be installed and included in the path.)
• HyperTerminal
Required Hardware:
• Xilinx ML310 Demonstration Platform
• Serial Cable
• Xilinx Parallel-4 Configuration Cable

Dhrystone Description: Developed in 1984 by Reinhold P. Weicker, the Dhrystone benchmark (written in C) was originally developed to benchmark computer systems, a short benchmark that was representative of integer programming. The program is CPU-bound, performing no I/O functions or operating system calls. ...
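The headline figure can be normalized by clock rate to compare processors running at different frequencies. This is a sketch under stated assumptions: the function names are hypothetical, and the divisor 1757 (the Dhrystones-per-second score conventionally attributed to the VAX 11/780 reference machine) is not given in the application note and is included here as an outside assumption.

```python
# Assumption (not from the application note): DMIPS is conventionally
# obtained by dividing Dhrystones per second by 1757, the VAX 11/780 score.
VAX_11_780_DHRYSTONES_PER_S = 1757


def dmips_from_dhrystones(dhrystones_per_s):
    """Convert a raw Dhrystones-per-second score to DMIPS."""
    return dhrystones_per_s / VAX_11_780_DHRYSTONES_PER_S


def dmips_per_mhz(dmips, clock_mhz):
    """Clock-normalized score, useful when clock rates differ."""
    return dmips / clock_mhz


if __name__ == "__main__":
    # Figures quoted in the note: 600+ DMIPS at 400 MHz.
    print(dmips_per_mhz(600, 400))
    # Raw score that would correspond to 600 DMIPS under the assumption above.
    print(600 * VAX_11_780_DHRYSTONES_PER_S)
```

With the note's figures, the design delivers at least 1.5 DMIPS per MHz.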

Introduction to CPU

microprocessors and microcontrollers - sadri. INTRODUCTION TO CPU. Mohammad Sadegh Sadri. Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010.

Agenda:
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
• Example: Basic ARM7 Architecture
• A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
• Example: TMS320C25 DSP

Agenda (2):
• History of CPUs
• 4004
• TMS1000
• 8080
• Z80
• Am2901
• 8051
• PIC16

Von Neumann Architecture:
• Same memory for program and data
• Single bus

Sample: ARM7T CPU

Harvard Architecture:
• Separate memories for program and data

TMS320C25 DSP

Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million $ USD) | 2009/2008 changes | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France/Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

Computer Architectures an Overview

Computer Architectures: An Overview. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC.

Contents. Articles: Microarchitecture; x86; PowerPC; IBM POWER; MIPS architecture; SPARC; ARM architecture; DEC Alpha; AlphaStation; AlphaServer; Very long instruction word; Instruction-level parallelism; Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License.

Microarchitecture. In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture. The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, and address and data formats, among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. [Figure: Intel Core microarchitecture] The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers to complete arithmetic logic units (ALUs) and even larger elements.