Evaluating NLU Models with Harder Generalization Tasks

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Gatorade® Recognizes Colorado Football Standout with Its National Player of the Year Award Byers Becomes First Lineman to Win in Award’s 19-Year History

FOR IMMEDIATE RELEASE Gatorade® Recognizes Colorado Football Standout With Its National Player of the Year Award Byers becomes first lineman to win in award’s 19-year history Loveland, Colo. (December 11, 2003) – In its 19th year of honoring the nation’s elite high school athletes, Gatorade® Thirst Quencher, in partnership with Scholastic Coach & Athletic Director magazine, today announced Jeff Byers of Loveland High School in Loveland, Colo., as the 2003 Gatorade National High School Football Player of the Year. The award, which recognizes athletic achievement as well as the academic performance and overall character of its recipient, distinguishes Jeff as the nation’s best high school football player. The Gatorade National Advisory Board, a group of sportswriters and sport-specific experts from around the country, selected Jeff from the more than one million high school football players nationwide. Rated by many scouting services as the country’s top center, Jeff paved the way for Loveland’s running attack and did not allow a sack in three years as a starter. Defensively, he recorded 203 tackles in his senior season, 97 of them solo and 55 of them for a loss. In addition, he racked up 10 sacks and forced 14 fumbles, one of which he returned 75 yards for a touchdown in Loveland’s 50-0 state semifinal victory. Jeff capped off his high school career by leading Loveland High School to the Colorado 4A state championship, the school’s sixth overall and its second during his four years on the varsity. “Jeff is the rare individual who can absolutely dominate the game as a lineman. -

His Splendid Moment - The Boston Globe

ONE-HIT WONDERS His splendid moment In a pinch, Hardy gained starring role By Stan Grossfeld, Globe Staff | December 20, 2009 First in an occasional series on memorable Boston sports figures who had their 15 minutes of fame. LONGMONT, Colo. - At 76, former Red Sox outfielder Carroll Hardy is in stellar health, but he knows his obituary is all but set in stone. The only man ever to pinch hit for Ted Williams. “I’m kind of excited by it,” says Hardy, a glint in his eye. “I think it’s funny.” He’s been described as having the good fortune of Forrest Gump, and for good reason. Hardy also played one year in the NFL and caught four touchdown passes from Hall of Fame quarterback Y.A. Tittle. He pinch hit for a young Yaz and a rookie Roger Maris. He was tutored by the legendary Tris Speaker, coached for the volatile Billy Martin in Triple A Denver, and hit a walkoff grand slam at Fenway Park. He was even responsible for a change in the NFL draft. Hardy was a journeyman outfielder for the Red Sox, Indians, Colt .45s, and Twins who hit just .225 with 17 home runs and 113 RBIs in 433 games over an eight-year major league career. But he received baseball immortality on Sept. 20, 1960, in the first inning of a game in Baltimore. “Skinny Brown was pitching this particular day. -

Super Bowl Games of the 1990'S Crossword Puzzle

Super Bowl Games of the 1990’s Crossword Puzzle Name _______________________________ Item 4999 Super Bowl Games of the 1990’s Across 5. What city hosted Super Bowl XXVI? 7. Who was the MVP in Super Bowl XXVII? 8. What city hosted Super Bowl XXXII? 9. The New York Giants won Super Bowl XXV by how many points? 10. Name the quarterback who was named MVP in Super Bowl XXXIII. Down 1. What state hosted Super Bowl XXX? 2. The San Francisco 49ers beat the _________ in Super Bowl XXIV. 3. Who was the coach of the New England Patriots in Super Bowl XXXI? 4. Emmitt Smith played for what team in Super Bowl XXVIII? 6. What California team lost Super Bowl XXIX? www.tlsbooks.com Copyright ©2008 T. Smith Publishing. All rights reserved. Graphics ©JupiterImages Corp. Super Bowl Games of the 1990’s Crossword Puzzle Answer Key Item 4999 Across 5. What city hosted Super Bowl XXVI? Minneapolis 7. Who was the MVP in Super Bowl XXVII? Troy Aikman 8. What city hosted Super Bowl XXXII? San Diego 9. The New York Giants won Super Bowl XXV by how many points? one 10. Name the quarterback who was named MVP in Super Bowl XXXIII. John Elway Down 1. What state hosted Super Bowl XXX? Arizona 2. The San Francisco 49ers beat the _________ in Super Bowl XXIV. Denver Broncos 3. Who was the coach of the New England Patriots in Super Bowl XXXI? Bill Parcells 4. Emmitt Smith played for what team in Super Bowl XXVIII? Dallas Cowboys 6. What California team lost Super Bowl XXIX? San Diego Chargers Copyright ©2008 T. -

NFL World Championship Game, the Super Bowl Has Grown to Become One of the Largest Sports Spectacles in the United States

The Golden Anniversary of the Super Bowl: A Legacy 50 Years in the Making An Honors Thesis (HONR 499) by Chelsea Police Thesis Advisor Mr. Neil Behrman Ball State University Muncie, Indiana May 2016 Expected Date of Graduation May 2016 Abstract Originally known as the AFL-NFL World Championship Game, the Super Bowl has grown to become one of the largest sports spectacles in the United States. Cities across the country compete for the right to host this prestigious event. The reputation of such an occasion has caused an increase in demand and price for tickets, making attendance nearly impossible for the average fan. As a result, the National Football League has implemented free events for local residents and out-of-town visitors. This, along with broadcasting the game, creates an inclusive environment for all fans, leaving a lasting legacy in the world of professional sports. This paper explores the growth of the Super Bowl from a novelty game to one of the country’s most popular professional sporting events. Acknowledgements First, and foremost, I would like to thank my parents for their unending support. Thank you for allowing me to try new things and learn from my mistakes. Most importantly, thank you for believing that I have the ability to achieve anything I desire. Second, I would like to thank my brother for being an incredible role model. -

SUPER BOWL XLVIII Malcolm Smith Was Having an Amazing 2013–2014 Season in the NFL

Malcolm Smith and the Seattle Seahawks SUPER BOWL XLVIII Malcolm Smith was having an amazing 2013–2014 season in the NFL. The linebacker for the Seattle Seahawks had helped his team reach the SUPER BOWL XLVIII NFC Championship Game against the San Francisco 49ers. Late in the game, Malcolm caught an interception in the end zone, sending Seattle SUPER BOWL XLVIII to the Super Bowl against the Denver Broncos. Could Malcolm take the next step and win a Super Bowl championship? Troy Aikman Santonio Holmes Joe Montana and the Dallas Cowboys and the Pittsburgh Steelers and the San Francisco 49ers SUPER BOWL XXVII SUPER BOWL XLIII SUPER BOWL XXIV Tom Brady Dexter Jackson Aaron Rodgers and the New England Patriots and the Tampa Bay Buccaneers and the Green Bay Packers SUPER BOWL XXXVIII SUPER BOWL XXXVII SUPER BOWL XLV Drew Brees Eli Manning Malcolm Smith and the New Orleans Saints and the New York Giants and the Seattle Seahawks SUPER BOWL XLIV SUPER BOWL XLII SUPER BOWL XLVIII Sandler John Elway Eli Manning Hines Ward and the Denver Broncos and the New York Giants and the Pittsburgh Steelers SUPER BOWL XXXIII SUPER BOWL XLVI SUPER BOWL XL Joe Flacco Peyton Manning Kurt Warner and the Baltimore Ravens and the Indianapolis Colts and the St. Louis Rams SUPER BOWL XLVII SUPER BOWL XLI SUPER BOWL XXXIV by Michael Sandler [Intentionally Left Blank] by Michael Sandler Consultant: Charlie Zegers Football Expert and Consultant for Basketball Heroes Making a Difference Credits Cover and Title Page, © Christian Petersen/Getty Images; 4, © Ric Tapia/Icon SMI; 5, © Marcio Jose Sanchez; 6, © John Pyle/Icon SMI; 7, © Richard A. -

GARY KUBIAK HEAD COACH – 22Nd NFL Season (12Th with Broncos)

GARY KUBIAK HEAD COACH – 22nd NFL Season (12th with Broncos) Gary Kubiak, a 23-year coaching veteran and three-time Super Bowl champion, enters his third decade with the Denver Broncos after being named the 15th head coach in club history on Jan. 19, 2015. A backup quarterback for nine seasons (1983-91) with the Broncos and an offensive coordinator for 11 years (1995-2005) with the club, Kubiak returns to Denver after spending eight years (2006-13) as head coach of the Houston Texans and one season (2014) as offensive coordinator with the Baltimore Ravens. During 21 seasons working in the NFL, Kubiak has coached 30 players to a total of 57 Pro Bowl selections. He has appeared in eight conference championship games and six Super Bowls as a player or coach and was part of three World Championship staffs (S.F., 1994; Den., 1997-98) as a quarterbacks coach or offensive coordinator. In his most recent position as Baltimore’s offensive coordinator in 2014, he oversaw one of the NFL’s most improved and explosive units to help the Ravens advance to the AFC Divisional Playoffs. His offense posted the third-largest overall improvement (+57.5 ypg) in the NFL from the previous season and posted nearly 50 percent more big plays (74 plays of 20+ yards) from the year before he arrived. Ravens quarterback Joe Flacco recorded career highs in passing yards (3,986) and touchdown passes (27) under Kubiak’s guidance while running back Justin Forsett ranked fifth in the league in rushing (1,266 yds.) to earn his first career Pro Bowl selection. -

TONY GONZALEZ FACT SHEET BIOS, RECORDS, QUICK FACTS, NOTES and QUOTES TONY GONZALEZ Is One of Eight Members of the Pro Football Hall of Fame, Class of 2019

TONY GONZALEZ FACT SHEET BIOS, RECORDS, QUICK FACTS, NOTES AND QUOTES TONY GONZALEZ is one of eight members of the Pro Football Hall of Fame, Class of 2019. TIGHT END … 1997-2008 KANSAS CITY CHIEFS … 2009-2013 ATLANTA FALCONS CAPSULE BIO 17 seasons, 270 games … First-round pick (13th player overall) by Chiefs in 1997 … Named Chiefs’ rookie of the year after recording 33 catches for 368 yards and 2 TDs, 1997 … Recorded more than 50 receptions in a season in each of his last 16 years (second most all-time) including 14 seasons with 70 or more catches … Led NFL in receiving with career-best 102 receptions, 2004 … Led Chiefs in receiving eight times … Traded to Atlanta in 2009 … Led Falcons in receiving, 2012 … Set Chiefs record with 26 games with 100 or more receiving yards; added five more 100-yard efforts with Falcons … Ranks behind only Jerry Rice in career receptions … Career statistics: 1,325 receptions for 15,127 yards, 111 TDs … Streak of 211 straight games with a catch, 2000-2013 (longest ever by tight end, second longest in NFL history at time of retirement) … Career-long 73-yard TD catch vs. division rival Raiders, Nov. 28, 1999 … Team leader that helped Chiefs and Falcons to two division titles each … Started at tight end for Falcons in 2012 NFC Championship Game, had 8 catches for 78 yards and 1 TD … Named First-Team All-Pro seven times (1999-2003, 2008, 2012) … Voted to 14 Pro Bowls … Named Team MVP by Chiefs (2008) and Falcons (2009) … Selected to the NFL’s All-Decade Team of 2000s … Born Feb. -

DENVER BRONCOS EDITION Denver Broncos Team History

TEACHER ACTIVITY GUIDE DENVER BRONCOS EDITION Denver Broncos Team History The Denver Broncos have been one of pro football’s biggest winners since the merger of the American and National Football Leagues in 1970. The Broncos’ on-the-field success is more than matched by a spectacular attendance record of sellout crowds (except for strike-replacement games) every year since 1970. Denver’s annual sale of approximately 74,000 season tickets is backed by a waiting list in the tens of thousands. The Broncos now play in the new INVESCO Field at Mile High which opened in 2001, but for 41 seasons played on the same plot of ground on which the original AFL team performed in 1960. This, however, is the only similarity of Denver teams of yesteryear and today. The upstart AFL was the target of many jokes and jeers by the established National Football League in the early 1960s, but the Broncos were the most laughed-at of all. Bob Howsam, a successful minor league baseball owner who built Bears Stadium in the 1940s, was awarded an AFL charter franchise on August 14, 1959. Severely limited financially, Howsam clothed his first team in used uniforms from the defunct Copper Bowl in Tucson, Ariz. Making the uniforms particularly joke-worthy were the vertically-striped socks that completed the Broncos’ dress. Two years later, when Jack Faulkner took over as head coach and general manager, the socks were destroyed in a public burning ceremony. While Denver’s on-the-field experience during the 10 years of the AFL was for the most part bleak, the Broncos did have some bright moments. -

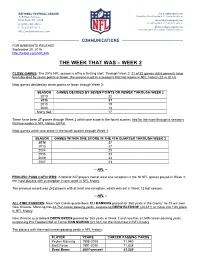

The Week That Was – Week 2

FOR IMMEDIATE RELEASE September 20, 2016 http://twitter.com/NFL345 THE WEEK THAT WAS – WEEK 2 CLOSE GAMES: The 2016 NFL season is off to a thrilling start. Through Week 2, 21 of 32 games (65.6 percent) have been decided by seven points or fewer, the second-most in a season’s first two weeks in NFL history (22 in 2013). Most games decided by seven points or fewer through Week 2 (season, games): 2013, 22; 2016, 21; 2010, 19; 2000, 18; many tied, 17. There have been 27 games through Week 2 within one score in the fourth quarter, tied for the most through a season’s first two weeks in NFL history (2013). Most games within one score in the fourth quarter through Week 2 (season, games): 2016, 27; 2013, 27; 2004, 25; 2015, 24; 2009, 23; 2007, 23. -- NFL -- PROLIFIC PASS CATCHERS: A total of 247 players had at least one reception in the 16 NFL games played in Week 2, the most players with a reception in one week in NFL history. The previous record was 242 players with at least one reception, which was set in Week 12 last season. -- NFL -- ALL-TIME PASSERS: New York Giants quarterback ELI MANNING passed for 368 yards in the Giants’ 16-13 win over New Orleans. Manning has 44,762 career passing yards, surpassing DREW BLEDSOE (44,611) to move into 10th place in NFL history. New Orleans quarterback DREW BREES passed for 263 yards in Week 2 and now has 61,589 career passing yards, surpassing Pro Football Hall of Famer DAN MARINO (61,361) for the third-most in NFL history. -

10000000* *009899

Filed for intro on 01/15/98 SENATE JOINT RESOLUTION 376 By Gilbert A RESOLUTION to commend Peyton Manning, University of Tennessee quarterback, on the conclusion of a brilliant collegiate career. WHEREAS, the Tennessee General Assembly is very proud to specially recognize outstanding athletes who are, more importantly, quality persons; and WHEREAS, widely recognized and acknowledged for his quarterback skills, Peyton Manning has demonstrated tremendous productivity, leadership and character, both on and off the gridiron. He also has the all-too-rare quality of confidence in his own abilities and humility; and WHEREAS, going back to his brilliant high school career, his outstanding leadership skills were strongly evident; a three-year starter for Newman High School, in New Orleans, Manning led his team to an excellent combined overall record of 34-5. His numerous honors as a star high school player included being selected as the Gatorade Circle of Champions National Player of the Year; and WHEREAS, after delighting ecstatic Tennessee fans by announcing his decision to play for the Big Orange, Peyton replaced injured starters Jerry Colquitt and Todd Helton his freshman year and masterfully guided the Volunteers to a fine 7-1 record in the remaining games, including a resounding 45-23 victory over Virginia Tech in the 1994 Gator Bowl. He was specially honored by being selected as the SEC Freshman of the Year; and WHEREAS, in his sophomore season, the Tennessee team beat Alabama by an incredible 41-14 -

CONGRESSIONAL RECORD—SENATE February

2588 CONGRESSIONAL RECORD—SENATE February 12, 1999 widespread and well-documented human rights abuses in violation of internationally accepted norms, including extrajudicial killings, the use of torture, arbitrary arrest and detention, forced abortion and sterilization, the sale of organs from executed prisoners, and tight control over the exercise of the rights of freedom of speech, press, and religion.” According to testimony to Congress by Amnesty International, the human rights situation in China shows no fundamental change, despite the recent promises from the government of China. … expressing their views since the President’s human rights dialogue at the June 1998 summit in Beijing. Mr. President, the situation is just as bad in Tibet, where, according to Human Rights Watch, at least ten prisoners reportedly died following two protests in a prison in the Tibetan capital in May. In the weeks following, scores of prisoners were interrogated, beaten and placed in solitary confinement. Other deaths in prison reportedly occurred in June, with Chinese authorities claiming that many were suicides. Further, during the 1998, Chinese … SENATE RESOLUTION 46—RELATING TO THE RETIREMENT OF WILLIAM D. LACKEY Mr. LOTT (for himself and Mr. DASCHLE) submitted the following resolution; which was considered and agreed to: S. RES. 46 Whereas, William D. Lackey has faithfully served the United States Senate as an employee of the Senate since September 4, 1964, and since that date has ably and faithfully upheld the highest standards and traditions of the staff of the United States Senate; Whereas, during his 35 years in positions of -

GAME NOTES Patriots at Miami Dolphins – September 12, 2011

GAME NOTES Patriots at Miami Dolphins – September 12, 2011 BRADY SETS CAREER HIGH AND FRANCHISE RECORD WITH 517 PASSING YARDS. Brady set a franchise record with 517 passing yards. The previous high was 426 yards by Drew Bledsoe vs. Minnesota on Nov. 13, 1994. Brady’s previous best was 310 passing yards vs. Kansas City on Sept. 2, 2002. Brady became the 10th NFL quarterback to throw for 500 yards or more. His 517 yards are the fifth highest in NFL history: Norm Van Brocklin (554), Warren Moon (527), Boomer Esiason (522), Dan Marino (521), Tom Brady (517). PATRIOTS START GOOD The Patriots have won eight straight games on opening day dating back to 2004. The eight straight opening day wins are the longest active streak in the NFL. Head coach Bill Belichick is 10-6 all-time on opening day, including 8-3 as Patriots head coach. Brady is 8-1 on opening day. NINE STRAIGHT GAMES WITH 30 POINTS TIES PATRIOTS’ OWN NFL RECORD The Patriots tied a team mark with their ninth straight regular season game with 30 or more points dating back to Nov. 14 of last season with a 39-26 win at Pittsburgh. The Patriots also scored 30 or more points in nine straight games in 2006-07 with the last game of the 2006 regular season and the first eight games of the 2007 season. The Patriots’ nine straight games with 30 or more points are second in NFL history to the 14 straight games by the St. Louis Rams (1999-2000). The Patriots’ eight straight games with 30 or more points in 2010 tied an NFL record for most consecutive games with 30 or more points in a single season.