Generalized Instruction Selector Generation: the Automatic Construction of Instruction Selectors from Descriptions of Compiler Internal Forms and Target Machines



Recommended publications


Glibc and System Calls Documentation Release 1.0

Glibc and System Calls Documentation Release 1.0 Rishi Agrawal <[email protected]> Dec 28, 2017 Contents 1 Introduction: 1.1 Acknowledgements. 2 Basics of a Linux System: 2.1 Introduction; 2.2 Programs and Compilation; 2.3 Libraries; 2.4 System Calls; 2.5 Kernel; 2.6 Conclusion; 2.7 References. 3 Working with glibc: 3.1 Introduction; 3.2 Why this chapter; 3.3 What is glibc; 3.4 Download and extract glibc; 3.5 Walkthrough glibc; 3.6 Reading some functions of glibc; 3.7 Compiling and installing glibc; 3.8 Using new glibc; 3.9 Conclusion. 4 System Calls On x86_64 from User Space: 4.1 Setting Up Arguments; 4.2 Calling the System Call; 4.3 Retrieving the Return Value -

Preview Objective-C Tutorial (PDF Version)

Objective-C About the Tutorial Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. This is the main programming language used by Apple for the OS X and iOS operating systems and their respective APIs, Cocoa and Cocoa Touch. This reference will take you through simple and practical approach while learning Objective-C Programming language. Audience This reference has been prepared for the beginners to help them understand basic to advanced concepts related to Objective-C Programming languages. Prerequisites Before you start doing practice with various types of examples given in this reference, I'm making an assumption that you are already aware about what is a computer program, and what is a computer programming language? Copyright & Disclaimer © Copyright 2015 by Tutorials Point (I) Pvt. Ltd. All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book can retain a copy for future reference but commercial use of this data is not allowed. Distribution or republishing any content or a part of the content of this e-book in any manner is also not allowed without written consent of the publisher. We strive to update the contents of our website and tutorials as timely and as precisely as possible, however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents including this tutorial. If you discover any errors on our website or in this tutorial, please notify us at [email protected] Table of Contents: About the Tutorial -

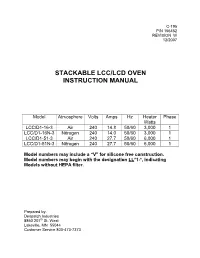

Stackable Lcc/Lcd Oven Instruction Manual

C-195 P/N 156452 REVISION W 12/2007 STACKABLE LCC/LCD OVEN INSTRUCTION MANUAL Model Atmosphere Volts Amps Hz Heater Phase Watts LCC/D1-16-3 Air 240 14.8 50/60 3,000 1 LCC/D1-16N-3 Nitrogen 240 14.0 50/60 3,000 1 LCC/D1-51-3 Air 240 27.7 50/60 6,000 1 LCC/D1-51N-3 Nitrogen 240 27.7 50/60 6,000 1 Model numbers may include a “V” for silicone free construction. Model numbers may begin with the designation LL *1-*, indicating Models without HEPA filter. Prepared by: Despatch Industries 8860 207 th St. West Lakeville, MN 55044 Customer Service 800-473-7373 NOTICE Users of this equipment must comply with operating procedures and training of operation personnel as required by the Occupational Safety and Health Act (OSHA) of 1970, Section 6 and relevant safety standards, as well as other safety rules and regulations of state and local governments. Refer to the relevant safety standards in OSHA and National Fire Protection Association (NFPA), section 86 of 1990. CAUTION Setup and maintenance of the equipment should be performed by qualified personnel who are experienced in handling all facets of this type of system. Improper setup and operation of this equipment could cause an explosion that may result in equipment damage, personal injury or possible death. Dear Customer, Thank you for choosing Despatch Industries. We appreciate the opportunity to work with you and to meet your heat processing needs. We believe that you have selected the finest equipment available in the heat processing industry. -

The Lcc 4.X Code-Generation Interface

The lcc 4.x Code-Generation Interface Christopher W. Fraser and David R. Hanson Microsoft Research [email protected] [email protected] July 2001 Technical Report MSR-TR-2001-64 Abstract Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler: Design and Implementation. This report details the lcc 4.x code-generation interface, which defines the interaction between the target-independent front end and the target-dependent back ends. This interface differs from the interface described in Chap. 5 of A Retargetable C Compiler. Additional information about lcc is available at http://www.cs.princeton.edu/software/lcc/. Microsoft Research Microsoft Corporation One Microsoft Way Redmond, WA 98052 http://www.research.microsoft.com/ The lcc 4.x Code-Generation Interface 1. Introduction Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler [1]. Version 4.x is the current release of lcc, and it uses a different code-generation interface than the interface described in Chap. 5 of Reference 1. This report details the 4.x interface. Lcc distributions are available at http://www.cs.princeton.edu/software/lcc/ along with installation instructions [2]. The code generation interface specifies the interaction between lcc’s target-independent front end and target-dependent back ends. The interface consists of a few shared data structures, a 33-operator language, which encodes the executable code from a source program in directed acyclic graphs, or dags, and 18 functions, that manipulate or modify dags and other shared data structures. On most targets, implementations of many of these functions are very simple. -

The Glib/GTK+ Development Platform

The GLib/GTK+ Development Platform A Getting Started Guide Version 0.8 Sébastien Wilmet March 29, 2019 Contents 1 Introduction: 1.1 License; 1.2 Financial Support; 1.3 Todo List for this Book and a Quick 2019 Update; 1.4 What is GLib and GTK+?; 1.5 The GNOME Desktop; 1.6 Prerequisites; 1.7 Why and When Using the C Language?; 1.7.1 Separate the Backend from the Frontend; 1.7.2 Other Aspects to Keep in Mind; 1.8 Learning Path; 1.9 The Development Environment; 1.10 Acknowledgments. I GLib, the Core Library. 2 GLib, the Core Library: 2.1 Basics; 2.1.1 Type Definitions; 2.1.2 Frequently Used Macros; 2.1.3 Debugging Macros; 2.1.4 Memory; 2.1.5 String Handling; 2.2 Data Structures; 2.2.1 Lists; 2.2.2 Trees; 2.2.3 Hash Tables; 2.3 The Main Event Loop; 2.4 Other Features. II Object-Oriented Programming in C. 3 Semi-Object-Oriented Programming in C: 3.1 Header Example; 3.1.1 Project Namespace; 3.1.2 Class Namespace; 3.1.3 Lowercase, Uppercase or CamelCase?; 3.1.4 Include Guard; 3.1.5 C++ Support; 3.1.6 #include; 3.1.7 Type Definition; 3.1.8 Object Constructor; 3.1.9 Object Destructor -

Linux Assembly HOWTO

Linux Assembly HOWTO Table of Contents Linux Assembly HOWTO, Konstantin Boldyshev and François-René Rideau: 1. INTRODUCTION; 2. DO YOU NEED ASSEMBLY?; 3. ASSEMBLERS; 4. METAPROGRAMMING/MACROPROCESSING; 5. CALLING CONVENTIONS; 6. QUICK START; 7. RESOURCES. 1. INTRODUCTION: 1.1 Legal Blurb -

An Analysis of the D Programming Language

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by CSUSB ScholarWorks Journal of International Technology and Information Management Volume 16 | Issue 3 Article 7 2007 An Analysis of the D Programming Language Sumanth Yenduri University of Mississippi- Long Beach Louise Perkins University of Southern Mississippi- Long Beach Md. Sarder University of Southern Mississippi- Long Beach Follow this and additional works at: http://scholarworks.lib.csusb.edu/jitim Part of the Business Intelligence Commons, E-Commerce Commons, Management Information Systems Commons, Management Sciences and Quantitative Methods Commons, Operational Research Commons, and the Technology and Innovation Commons Recommended Citation Yenduri, Sumanth; Perkins, Louise; and Sarder, Md. (2007) "An Analysis of the D Programming Language," Journal of International Technology and Information Management: Vol. 16: Iss. 3, Article 7. Available at: http://scholarworks.lib.csusb.edu/jitim/vol16/iss3/7 This Article is brought to you for free and open access by CSUSB ScholarWorks. It has been accepted for inclusion in Journal of International Technology and Information Management by an authorized administrator of CSUSB ScholarWorks. For more information, please contact [email protected]. Analysis of Programming Language D Journal of International Technology and Information Management An Analysis of the D Programming Language Sumanth Yenduri Louise Perkins Md. Sarder University of Southern Mississippi - Long Beach ABSTRACT The C language and its derivatives have been some of the dominant higher-level languages used, and the maturity has stemmed several newer languages that, while still relatively young, possess the strength of decades of trials and experimentation with programming concepts. -

Intro to C Programming 1

Introduction to C via Examples Last update: Cristinel Ababei, January 2012 1. Objective The objective of this material is to introduce you to several programs in C and discuss how to compile, link, and execute on Windows or Linux. The first example is the simplest hello world example. The second example is designed to expose you to as many C concepts as possible within the simplest program. It assumes no prior knowledge of C. However, you must allocate significant time to read suggested materials, but especially work on as many examples as possible. 2. Working on Windows: Example 1 To get started with learning C, I recommend using a simple and general/generic IDE with a free C/C++ compiler. For example, Dev-C++ is a nice compiler system for Windows. Download and install it on your own computer from here: http://www.bloodshed.net/devcpp.html In my case, I installed it in M:\Dev-Cpp, where I created a new directory M:\Dev-Cpp\cristinel to store all projects I will work on. Inside this new directory, I created yet one more M:\Dev-Cpp\cristinel\project1, where I’ll store the files for the first program, which is simply the famous “hello world” program. We’ll create a new project directory for each new program; this way we keep things nicely organized in individual directories. We create a program by creating a new project in Dev-C++. So, start Dev-C++ and create a new project. In the dialog window, select “Console Application” as the type of project and name it hello. -

C Programming Tutorial

C Programming Tutorial C PROGRAMMING TUTORIAL Simply Easy Learning by tutorialspoint.com COPYRIGHT & DISCLAIMER NOTICE All the content and graphics on this tutorial are the property of tutorialspoint.com. Any content from tutorialspoint.com or this tutorial may not be redistributed or reproduced in any way, shape, or form without the written permission of tutorialspoint.com. Failure to do so is a violation of copyright laws. This tutorial may contain inaccuracies or errors and tutorialspoint provides no guarantee regarding the accuracy of the site or its contents including this tutorial. If you discover that the tutorialspoint.com site or this tutorial content contains some errors, please contact us at [email protected] Table of Contents C Language Overview: Facts about C; Why to use C ?; C Programs. C Environment Setup: Text Editor; The C Compiler; Installation on Unix/Linux -

Graph-Based Procedural Abstraction

Graph-Based Procedural Abstraction A. Dreweke, M. Wörlein, I. Fischer, D. Schell, Th. Meinl, M. Philippsen University of Erlangen-Nuremberg, Computer Science Department 2, Martensstr. 3, 91058 Erlangen, Germany, {dreweke, woerlein, schell, philippsen}@cs.fau.de ALTANA Chair for Applied Computer Science, University of Konstanz, BOX M712, 78457 Konstanz, Germany, {Ingrid.Fischer, Thorsten.Meinl}@inf.uni-konstanz.de Abstract Procedural abstraction (PA) extracts duplicate code segments into a newly created method and hence reduces code size. For embedded micro computers the amount of memory is still limited so code reduction is an important issue. This paper presents a novel approach to PA, that is especially targeted towards embedded systems. Earlier approaches of PA are blind with respect to code reordering, i.e., two code segments with the same semantic effect but with different instruction orders were not detected as candidates for PA. Instead of instruction sequences, in our approach the data flow graphs of basic blocks are considered. optimizations that can be done much earlier and more often improve the current practice. Even a night or weekend of optimization is worthwhile since the resulting savings when building cars or cell phones are enormous. Moreover, off-line optimizations of machine code can even be applied to code that is only available in compiled form. Of course there are compiler flags that avoid code bloat and there are smart linkers [38, 41] that reduce code size. But still, there are space-wasting code duplications that are mainly caused by the compiler’s code generation templates, by cut-and-paste programming, by use of standard libraries, and by translation technology for templates/generics (although the latter are not yet in daily use in the embedded -

What's New in Omnis Studio 8.1.7

What’s New in Omnis Studio 8.1.7 Omnis Software April 2019 48-042019-01a The software this document describes is furnished under a license agreement. The software may be used or copied only in accordance with the terms of the agreement. Names of persons, corporations, or products used in the tutorials and examples of this manual are fictitious. No part of this publication may be reproduced, transmitted, stored in a retrieval system or translated into any language in any form by any means without the written permission of Omnis Software. © Omnis Software, and its licensors 2019. All rights reserved. Portions © Copyright Microsoft Corporation. Regular expressions Copyright (c) 1986,1993,1995 University of Toronto. © 1999-2019 The Apache Software Foundation. All rights reserved. This product includes software developed by the Apache Software Foundation (http://www.apache.org/). Specifically, this product uses Json-smart published under Apache License 2.0 (http://www.apache.org/licenses/LICENSE-2.0) The iOS application wrapper uses UICKeyChainStore created by http://kishikawakatsumi.com and governed by the MIT license. Omnis® and Omnis Studio® are registered trademarks of Omnis Software. Microsoft, MS, MS-DOS, Visual Basic, Windows, Windows Vista, Windows Mobile, Win32, Win32s are registered trademarks, and Windows NT, Visual C++ are trademarks of Microsoft Corporation in the US and other countries. Apple, the Apple logo, Mac OS, Macintosh, iPhone, and iPod touch are registered trademarks and iPad is a trademark of Apple, Inc. IBM, DB2, and INFORMIX are registered trademarks of International Business Machines Corporation. ICU is Copyright © 1995-2003 International Business Machines Corporation and others. -

Porting GCC for Dunces

Porting GCC for Dunces Hans-Peter Nilsson∗ May 21, 2000 ∗Email: <[email protected]>. Thanks to Richard Stallman <[email protected]> for guidance to letting this document be more than a master's thesis. Contents 1 Legal notice. 2 Introduction: 2.1 Background; 2.1.1 Compilers; 2.2 This work; 2.2.1 Result; 2.2.2 Porting; 2.2.3 Restrictions in this document. 3 The target system: 3.1 The target architecture; 3.1.1 Registers; 3.1.2 Sizes; 3.1.3 Addressing modes; 3.1.4 Instructions; 3.2 The target run-time system and libraries; 3.3 Simulation environment. 4 The GNU C compiler: 4.1 The compiler system; 4.1.1 The compiler parts; 4.2 The porting mechanisms; 4.2.1 The C macros: tm.h; 4.2.2 Variable arguments; 4.2.3 The C file: tm.c; 4.2.4 The machine description: md; 4.2.5 The building process of the compiler; 4.2.6 Execution of the compiler. 5 The CRIS port: 5.1 Preparations; 5.2 The target ABI; 5.2.1 Fundamental types; 5.2.2 Non-fundamental types; 5.2.3 Memory layout; 5.2.4 Parameter passing; 5.2.5 Register usage; 5.2.6 Return values; 5.2.7 The function stack frame -