Answers in the Tool Box. Academic Intensity, Attendance Patterns, and Bachelor's Degree Attainment

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Rockland Gazette

The Rockland Gazette. Gazette Job Printing PUBLISHED EVERY THURSDAY AFTERNOON bY ESTABLISHMENT. VOSE & PORTEH, Having every facility in Presses, Type and MatosW- to which we are constantly maHng aiddltlooa, wa ara 2 I O M a in S tre e t. piepared to execute with promptness and goad styla every variety of Job Printing, ineladta* Town Reports, Catalogues, TERMS: Posters, Shop B ills, Hand B ills, Pro If paid strictly in advance—per annum, $2.00. If payment is delayed 6 mouths, 2.25. grammes, Circulars, Bill Heads, If not paid till the close of the year, 2.60. Letter Heads, Law and Corpor <8i“ Newsuhscribeifl areexpected to make the first ation Blanks, Receipts, Bills payment in advance. of Lading, Business, Ad paper will bo discontinued until ALL Alt- dress and Wedding ■ Earues are paid, unless at the option of the publish ers. Cards, Tags, x* dingle copies live cents—for sale nt the office and Labels, at the Bookstores. VOLUME 35. ROCKLAND, MAINE, THURSDAY, MARCH 18, 1880. &c.9 Z. POPE VOSE. J. B. PORTER. NO. 16. PRINTING IN COLORS AND BRONZINO will receive prompt attention. harvests of heaven will never become such carrjed her into the house and there lenrned derness was like that of a mother. “ God “ I thought I’d tell ye, gentleman, the ns shespoke.and I promised. Her “ friend ” deserts hut some good seed will tako root all. Both had been deceived. sent von to me. For her sake this shall mistress is justcomin’. ’ The saints purtect therein.” —I would have promised her anything for POND’S It was a terrible scene tlie old front room he your home. -

Pine Cove Market &

Real POSTMASTER: Dated material, please deliver March 14-16, 2012 Estate See page 15. Idyllwild News bites Crime Local pleads guilty to burglary. TCoveringo the Sanw Jacinto and Santan Rosa Moun tainsC from Twinr Pinesı to Anzaer to Pinyon See page 2 Almost all the News — Part of the Time Daylight Saving Time The view from Idyllwild VOL. 67 NO. 11 75¢ (Tax Included) IDYLLWILD, CA THURS., MARCH 15, 2012 Arts students. See page 4. Smart Meters Sheriff to discuss SoCal Edison proposed opt-out plan. See page 8. local crime Jail grants By Marshall Smith Staff Reporter Riverside County County gets $100 million Sheriff’s meeting to expand jails. he recent escalation on local crime See page 9. in criminal activity Tin the Idyllwild area, 2 p.m. Saturday, Sports! including an armed rob- March 24 Champs crowned at end bery and a growing number of volleyball and basketball of felony property crimes, Idyllwild School seasons. has prompted the Riverside Gymnasium See pages 10 and 11. County Sheriff’s Depart- ment to call a community Lilacs meeting in Idyllwild. Cap- crime, the role and use of The strategy for a future tain Scot Collins, Hemet Sta- the Mountain Community tion Commander and staff spring festival. Patrol, and what community will meet with Hill com- members can do to protect See page 14. On Saturday, this male bald eagle graced the skies over Lake Hemet while humans munity members at a town their homes and neigh- counted the eagles for the Forest Service. Photo by Careena Chase meeting at the Idyllwild borhoods. -

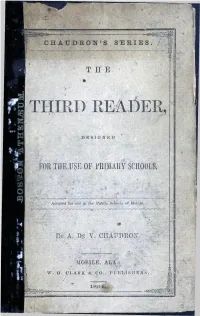

For the Use of Primary Schools

—— -----------------*----------- -— 1 I CHAUDRON’S SERIES. / i THE THIRD 1> E S I G N K D FOR THE USE OF PRIMARY SCHOOLS, /*V Adopted tor me in tlie Public Schools? o f Mohije. r>Y A. Dp Y. CriAIWvOX. MOBILE, ALA.: I W. G. CL A K 1C &. CO., PUBLISHERS. \ H- ■B CHALDRON’S SERIES THE THIRD READER, DESIGNED FOR THE USE OF PRIMARY SCHOOLS, Adopted for ic Schools o f Mobile. - o O o--r j < > . \ V By A. B e T . CHAUDRON. 0 \ MOBILE, ALA.: W . Q . CLARK & CO., PUBLISHERS. n f t 1 8 0 4 . .“3 Entered according to the Act o f Congress, in the year 1864, by A . D e V. CH AU DRON, in the Clerk's Office o f the C. S. District Court o f the Southern Division of the District of Alabama. j b 7 * ' p t N A j ■ , r T CO* ■ r* J w J * CONTENTS. TAGE. Child’s Prayer. ■ Part First. LESSON. PAGE. 1 Punctuation................................................................................................ 9 2 Elementary E x ercise s..........................................................................12 3 Rich and Poor ......................................................... ............................ 1^ •1 Hugh and Ellen......................................................................................... 16 5 Albert’s Pony ................... .......................... ...................... ...................... 18 6 I will be good to-day.................................................................................20 7 Mary's Ilome..............................................................................................21 8 God sees us................................................ -

Vipers in the Storm: Diary of a Gulf War Fighter Pilot

VIPERS IN THE STORM DIARY OF A GULF WAR FIGHTER PILOT This page intentionally left blank VIPERS IN THE STORM DIARY OF A GULF WAR FIGHTER PILOT KEITH ROSENKRANZ McGraw-Hill New York San Francisco Washington, D.C. Auckland Bogota Caracas Lisbon London Madrid Mexico City Milan Montreal New Delhi San Juan Singapore Sydney Tokyo Toronto Copyright © 2002 by The McGraw-Hill Companies, Inc. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the pub- lisher. ISBN: 978-0-07-170668-1 MHID: 0-07-170668-2 The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-140040-4, MHID: 0-07-140040-0. All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occur- rence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps. McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. To contact a representative please e-mail us at [email protected]. TERMS OF USE This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. -

The Ocean Last Night - an Exegesis & Creative Artefact

The Ocean Last Night - an Exegesis & Creative Artefact by Gregory Day Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy Deakin University December 2017 The Ocean Last Night an Exegesis & Creative Artefact Gregory Day Table of Contents The Ocean Last Night - an exegesis ......................................................... 1 Introduction ............................................................................. 2 Chapter 1 - My Psychologically Ultimate Seashore ......................... 12 Chapter 2 - One True Note? ....................................................... 38 Chapter 3 – Otway Taenarum .................................................... 58 Conclusion .............................................................................. 81 References .............................................................................. 86 The Ocean Last Night – Creative Artefact ............................................ 93 The Patron Saint of Eels ........................................................... 95 Ron McCoy’s Sea of Diamonds ................................................. 140 The Grand Hotel …………………………………………………………199 Archipelago Of Souls .............................................................. 240 Coda - ampliphone XI - thaark thurr boonea…………………………... 289 The Ocean Last Night - an exegesis 1 Introduction I Running through the body of this exegetic augmentation of my published novels is the narrative of how my family came to dwell in the specific southwest Victorian coastal -

An Edition of the Journals of Adlard Welby

AN EDITION OF THE JOURNALS OF ADLARD WELBY Volume One Thesis submitted for the degree of Doctor of Philosophy at the University of Leicester by Sue Boettcher MA (Leicester) School of English University of Leicester January 2014 ii An Edition of the Journals of Adlard Welby Sue Boettcher ABSTRACT This thesis comprises edited and annotated transcriptions of four of the six known journals of Adlard Welby, (1776 – 1861), Lincolnshire landowner and traveller. The transcriptions have been made from the original manuscript journals, housed in the Lincolnshire Archives and the Sleaford Museum Trust. They cover the periods 1832 to 1856. Welby’s domestic life was unusual, in that he had two families, one with his wife Elizabeth, and a second, with his mistress Mary Hutchinson. The Italian Journal (1832-1835), is a detailed record of his first three years in Italy, where he lived with Mary and their ten children, after giving up his family estate. The remaining three journals, dating from 1841 to 1856, are a record of his travels in Europe and his experiences following Mary’s death in 1840. He was a thoughtful and outspoken diarist, and the journals provide a unique record of his view of the world he lived and travelled in. The Introduction considers the contents of these Journals together with references to other manuscript letters and documents, the remaining two journals and his only published work, A Visit to North America and the English Settlements in Illinois, (1821). Welby’s writing is placed in the context of nineteenth-century life writing and the travel writing of the period. -

0Mmtt$

A CHEERFUL OL0 STATESMAN. AI JFOTTNIA ! C Mr. Caleb Cashing came in with the centu- THE CHICAGO & NORTH-WESTERN RAILWAY. ry on the 17th of January. Since that he has been a lawyer in large practice, a mem- Kna'wtce- uude. one the Hi. I way U«w» <»« « fcs»r ui.«l NOH.rH- ber of either branch of the Massachusetts ai„i with iis i)urn romi braacfae* and con- Legislature, a traveler and a writer of books ne ioas, lor.us Uic u.id quickest rotue be of travel, a member of the National Houae of CWMU Ohka-o au i aU iMi.il- '» Aiscoinsiu, N,i«ti Tn Michigan, Mumm-***, Iowa, Nebraska, 0MMtt$ Representatives, a Commissioner to China, a <"aiif .ruin auJ ttio tt extern Territories. Its Colonel and a Brigadier-General in tbe Mexi- Dm .ill a aad California Line can War, a Mayor of Newburyport, a Judge Is the shortest aud b.:st route Lor ail points in North- of the Massachusetts Supreme Court, the At- ern Illinois, Iowa, Dakota, Nebraska. Wyoming, torney-General of the United States, Counsel Colorado, Utah. Nevada, • ^ahforuia, Oregon, China, Japan aad Australia. Us for the United States at the Geneva Confer- Chicago. St. Paul & Madison Line Volume 19. STAFFORD SPRIN&S, CONN., THURSDAY, SEPTEMBER 21, 1876, Number 25. ence, and lastly, Minister to Spain. After is the short line for Northern Wisconsin and Min- this large and multifarious experience of pub- nesota, and for Madisou, St. Paul, Minneapolis, Du- lic affairs, it gives us pleasure to say that Mr. -

Nielsen Music & Billboard

NIELSEN MUSIC & BILLBOARD PRESENT CANADA 150 CHARTS A CELEBRATION OF CANADA’S 150TH ANNIVERSARY — THE TOP CANADIAN ARTISTS, ALBUMS AND SONGS IN THE NIELSEN MUSIC CANADA ERA Copyright © 2017 The Nielsen Company 1 Copyright © 2017 The Nielsen Company In celebration of Canada's 150th birthday — Billboard's top Canadian artists, albums and songs in the Nielsen Music Canada era by Karen Bliss The official 150th birthday of Canada — commemorating confederation — is this Saturday, July 1, known as Canada Day, but it’s been a year-long celebration with the government putting in a half-billion dollars towards the branded A CELEBRATION OF CANADA’S ”Canada 150” festivities and businesses commemorating the sesquicentennial their own way. Nielsen Music Canada, in collaboration with Billboard, has compiled its own celebratory lists of the biggest artists, albums and songs since the TH data-tracking system came into Canada in 1996 (and cut off Dec. 31, 2016). 150 ANNIVERSARY “We’re excited to celebrate the success of Canadian artists at home, in partnership with Billboard, as we honour [sic] THE TOP CANADIAN ARTISTS, ALBUMS & SONGS Canada’s 150th anniversary,” said Paul Shaver, Head of Nielsen Music Canada. Nielsen Music Canada’s recap charts include all-format and radio charts (started April 1995), album sales (1996 -) and IN THE NIELSEN MUSIC CANADA ERA digital song sales (2005 - ). The most recent chart for streaming (July 2014 -) extends to March 2017. The top-selling Canadian artist (by album sales in Canada) during the 20-year “Nielsen Music Canada era,” is, not surprisingly, Celine Dion when tallying up all her physical and digital sales for the Top Overall Artist chart. -

(500) DAYS of SUMMER by Scott Neustadter & Michael H

(500) DAYS OF SUMMER by Scott Neustadter & Michael H. Weber First Draft 2006 NOTE: THE FOLLOWING IS A WORK OF FICTION. ANY RESEMBLANCE TO PERSONS LIVING OR DEAD IS PURELY COINCIDENTAL. 1. ESPECIALLY YOU JENNY BECKMAN. 2. BITCH. 3. SIMPLE BLACK ON WHITE CREDITS ROLL TO BIG STAR’S “I’M IN LOVE WITH A GIRL.” When all is said and done, up comes a single number in parenthesis, like so: (478) EXT. PARK - DAY For a few seconds we watch A MAN (20s) and a WOMAN (20s) on a park bench. Their names are TOM and SUMMER. Neither one says a word. CLOSE ON her HAND, covering his. Notice the wedding ring. No words are spoken. Tom looks at her the way every woman wants to be looked at. A DISTINGUISHED VOICE begins to speak to us. NARRATOR This is a story of boy meets girl. CUT TO: (1) INT CONFERENCE ROOM - DAY The boy is TOM HANSEN. He sits at a very long rectangular conference table. The walls are lined with framed blow-up sized greeting cards. Tom, dark hair and blue eyes, wears a t- shirt under his sports coat and Adidas tennis shoes to balance out the corporate dress code. He looks pretty bored. NARRATOR The boy, Tom Hansen of Margate, New Jersey, grew up believing that he’d never truly be happy until the day he met his... “soulmate.” CUT TO: INT LIVING ROOM - 1989 PRE-TEEN TOM sits alone on his bed engrossed in a movie. His walls are covered in posters of obscure bands. -

Track List As at November 15, 2014 28,000 Tracks

Track List As At November 15, 2014 28,000 Tracks - Andalucia - Angela & Betsy Money Dance - At Last (Karaoke) - Doug's Intro Mix - Doug's Intro Mix 2 - El Nigro Esta Cocinando - Entry_First_Dance - Espana Cani - Everything I Do, I Do It For You - Happy Birthday Wishes - Happy Feet - Heartbeat (Instrumental) - I Cross Mt Heart - It's Time To Disco - Kal Ho Naa Ho - Kal Ho Naa Ho (Sad) - Kuch To Hua Hai - Maahi Ve - Moon River - Pretty Woman - Shall We Dance - The L Train - Under The Bridges Of Paris - - Whole Lotta Shakin' Goin' On (Wellington Version) & Britney Spears [Radio Edit] [Radio Mix] [Remix] <Unknown> - Agua <Unknown> - Faber College Theme <Unknown> - Gracias Por La Música <Unknown> - I Met Someone <Unknown> - Intro <Unknown> - Intro <Unknown> - Intro <Unknown> - Intro <Unknown> - Intro Apres Ski Hits 2005 <Unknown> - Merengue <Unknown> - Skyline Date <Unknown> - To Italy For A Kiss 1 Plus 1 - Cherry Bomb 1 Plus 1 - Cherry Bomb 10 CC - The Things We Do For Love 10 CC - The Things We Do For Love 10 CC - The Things We Do For Love 10 Years - Wasteland 10,000 Maniacs - More Than This [Tee's Radio Edit] 1000 Mona Lisas - You Oughta Know (X-Rated Lyrics) 101 - Move Your Body 11:30 - I Will Not Cry Anymore 11:30 - Let's Go All Night 11:30 - Let's Go All Night 11:30 - Ole Ole 112 - It's Over Now 112 - Only You 112 - Only You 112 - Peaches & Cream [Radio Mix] 112 & Beanie Sigel - Dance With Me 15þ1 - La Música Cubana 187 Lockdown - Kung Fu 2 AM Club - Let Me Down Easy 2 Brothers On The Floor - I'm Thinkin' Of U 2 Brothers On The Floor - The Sun Will Be Shining 2 Chainz - I'm Different 2 Chainz Ft.