On-The-Go Text Entry: Evaluating and Improving Mobile Text Input on Mini-QWERTY Keyboards

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

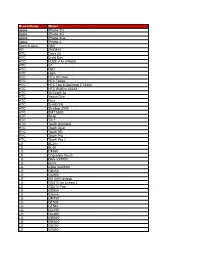

2014 BT Compatibility List 20141030

| Item | Brand Name | Model |
|---|---|---|
| 1 | Acer | Acer beTouch E210 |
| 2 | Acer | acer E400 |
| 3 | Acer | acer P400 |
| 4 | Acer | DX650 |
| 5 | Acer | E200 |
| 6 | Acer | Liquid E |
| 7 | Acer | Liquid Mini (E310) |
| 8 | Acer | M900 |
| 9 | Acer | S110 |
| 10 | Acer | Smart handheld |
| 11 | Acer | Smart handheld |
| 12 | Acer | Smart handheld E100 |
| 13 | Acer | Smart handheld E101 |
| 14 | Adec & Partner AG | AG vegas |
| 15 | Alcatel | Alcatel OneTouch Fierce 2 |
| 16 | Alcatel | MISS SIXTY MSX10 |
| 17 | Alcatel | OT-800/ OT-800A |
| 18 | Alcatel | OT-802/ OT-802A |
| 19 | Alcatel | OT-806/ OT-806A/ OT-806D/ OT-807/ OT-807A/ OT-807D |
| 20 | Alcatel | OT-808/ OT-808A |
| 21 | Alcatel | OT-880/ OT-880A |
| 22 | Alcatel | OT-980/ OT-980A |
| 23 | Altek | Altek A14 |
| 24 | Amazon | Amazon Fire Phone |
| 25 | Amgoo Telecom Co LTD | AM83 |
| 26 | Apple | Apple iPhone 4S |
| 27 | Apple | Apple iPhone 5 |
| 28 | Apple | Apple iPhone 6 |
| 29 | Apple | Apple iPhone 6 Plus |
| 30 | Apple | iPhone 2G |
| 31 | Apple | iPhone 3G |
| 32 | Apple | iPhone 3Gs |
| 33 | Apple | iPhone 4 |
| 34 | Apple | iPhone 5C |
| 35 | Apple | iPhone 5S |
| 36 | Aramasmobile.com | ZX021 |
| 37 | Ascom Sweden AB | 3749 |
| 38 | Asustek | 1000846 |
| 39 | Asustek | A10 |
| 40 | Asustek | G60 |
| 41 | Asustek | Galaxy3_L and Galaxy3_S |
| 42 | Asustek | Garmin-ASUS M10E |
| 43 | Asustek | P320 |
| 44 | Asustek | P565c |
| 45 | BlackBerry | BlackBerry Passport |
| 46 | BlackBerry | BlackBerry Q10 |
| 47 | Broadcom Corporation | BTL-A |
| 48 | Casio Hitachi | C721 |
| 49 | Cellnet 7 Inc. | DG-805 |
| 50 | Cellon Communications Technology(Shenzhen) Co., Ltd. | C2052 |
| 51 | Cellon Communications Technology(Shenzhen) Co., Ltd. | C2053 |
| 52 | Cellon Communications Technology(Shenzhen) Co., Ltd. | C3031 |
| 53 | Cellon Communications Technology(Shenzhen) Co., Ltd. | C5030 |

View Annual Report

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549

FORM 40-F

REGISTRATION STATEMENT PURSUANT TO SECTION 12 OF THE SECURITIES EXCHANGE ACT OF 1934 or ⌧ ANNUAL REPORT PURSUANT TO SECTION 13(a) OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934

For the fiscal year ended March 2, 2013. Commission File Number 0-29898

Research In Motion Limited (Exact name of Registrant as specified in its charter)

Ontario (Province or other Jurisdiction of Incorporation or Organization)

3661 (Primary Standard Industrial Classification Code Number)

Not Applicable (I.R.S. Employer Identification No)

295 Phillip Street, Waterloo, Ontario, Canada, N2L 3W8, (519) 888-7465 (Address and telephone number of Registrant’s principal executive offices)

Research In Motion Corporation, 5000 Riverside Drive, Suite 100E, Irving, Texas, USA 75039, (972) 650-6126 (Name, address and telephone number of agent for service in the United States)

Securities registered or to be registered pursuant to Section 12(b) of the Act: Common Shares, without par value

Securities registered or to be registered pursuant to Section 12(g) of the Act: None

Securities for which there is a reporting obligation pursuant to Section 15(d) of the Act: None

For annual reports, indicate by check mark the information filed with this Form: ⌧ Annual information form ⌧ Audited annual financial statements

Indicate the number of outstanding shares of each of the Registrant’s classes of capital or common stock as of the close of the period covered by this annual report. The Registrant had 524,159,844 Common Shares outstanding as at March 2, 2013

Indicate by check mark whether the Registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Exchange Act during the preceding 12 months (or for such shorter period that the Registrant was required to file such reports) and (2) has been subject to such filing requirements for the past 90 days.

7021697429.Pdf

Thumb Cellular Plans

The Fr moll tionwid

| | National Choice 750 | National Choice 1500 | National Choice 2250 | National Choice 3000 |
|---|---|---|---|---|
| Price | $40.00/month | $70.00/month | $100.00/month | $125.00/month |
| Nationwide Anytime Minutes | 750 | 1500 | 2250 | 3000 |
| Additional minutes | $.25/additional minute | $.25/additional minute | $.25/additional minute | $.25/additional minute |
| Mobile to Mobile | Free | Free | Free | Free |
| Night and Weekend Calling | Unlimited | Unlimited | Unlimited | Unlimited |

Plan Details: Add up to " companions for $15 each/month. Companions will also receive free Mobile to Mobile and Unlimited Night & Weekend calling. Mobile to Mobile and Night and Weekend calling good only in Thumb Area.

Unlimited Plan: $45/Month

Get unlimited calling from the Thumb Cellular system to anywhere in the United States. Add up to 3 companions for $20 each per month.

Plan Details: Minutes used outside of the Thumb Cellular System will be charged at $.50 per minute. Calls to directory assistance not included in unlimited minutes. Nationwide minutes are shared between all phones on the account.

Multiple Choice Nationwide Minutes

| Minutes | Price |
|---|---|
| 100 Minutes | $15/month |
| 400 Minutes | $25/month |
| 700 Minutes | $35/month |
| 1100 Minutes | $60/month |
| 1500 Minutes | $85/month |

Plan Details: Nationwide minutes can be added to any phone with an unlimited calling package or Thumb 500 package and companions of these plans. Nationwide minutes will be used anytime you use your phone outside of the Thumb area.

Hearing Aid Compatibility with BlackBerry Smartphones

About hearing aid compatibility

Several BlackBerry smartphones have received certification from the United States Federal Communications Commission (FCC) stating that the smartphones meet the hearing aid compatibility requirements.

BlackBerry smartphones rated M3 or M4 meet FCC requirements and are likely to generate less interference with hearing devices than wireless devices that are not rated. M4 is the higher of the two ratings.

BlackBerry smartphones rated T3 or T4 meet FCC requirements and are likely to be more compatible with a hearing device's telecoil (T-coil, T Switch, or Telephone Switch) than wireless devices that are not rated. T4 is the higher of the two ratings. Not all hearing devices have telecoils in them.

Results might vary depending on the hearing device and the degree of hearing loss. If your hearing device is vulnerable to interference, you might not be able to use a rated wireless device successfully. The best way to evaluate compatibility of your hearing device and a rated wireless device is to test the devices together and consult with your hearing health professional.

For more information about hearing aid compatibility, see the Description of Hearing Aid Compliance Ratings booklet at http://www.blackberry.com/accessibility.

Hearing aid compatibility ratings

The following table lists BlackBerry smartphones rated for hearing aid compatibility and their respective M and T ratings. Note: M and T compatibility ratings apply only to cellular bands.

Smartphone Wi-Fi

BT IOP Status Report (20110307)

| Brand Name | Model |
|---|---|
| Apple | iPhone 2G |
| Apple | iPhone 3G |
| Apple | iPhone 3Gs |
| Apple | iPhone 4 |
| Garmin asus | G60 |
| HP | iPAD912 |
| HTC | Dash 3G |
| HTC | Droid Eris |
| HTC | FUZE™ P4600 |
| HTC | G1 |
| HTC | HD2 |
| HTC | Hero |
| HTC | HTC HD mini |
| HTC | HTC Tattoo |
| HTC | HTC Touch Diamond 2 T5353 |
| HTC | HTC Wildfire A3333 |
| HTC | MyTouch 3g |
| HTC | Nexus One |
| HTC | Pure |
| HTC | SHADOW |
| HTC | Shadow 2009 |
| HTC | SMT 5800 |
| HTC | Snap |
| HTC | Tilt 2 |
| HTC | Touch Diamond |
| HTC | Touch Dual |
| HTC | Touch HD |
| HTC | Touch Pro |
| HTC | Touch Pro 2 |
| LG | BL20v |
| LG | BL40 |
| LG | CF360 |
| LG | Chocolate Touch |
| LG | Dare VX9700 |
| LG | enV3 |
| LG | eXpo (GW820) |
| LG | GB250 |
| LG | GC900 |
| LG | GD 580 Lollipop |
| LG | GD310 Ice Cream 2 |
| LG | GD510 Pop |
| LG | GD900 |
| LG | Glance |
| LG | GM750 |
| LG | GT505 |
| LG | GT540 |
| LG | GU230 |
| LG | GU285 |
| LG | GW520 |
| LG | GW620 |
| LG | GX200 |
| LG | GX500 |
| LG | INCITE |
| LG | KC910 |
| LG | KM555 |
| LG | KM900 |
| LG | KP275 |
| LG | KP500 |
| LG | KS360 |
| LG | Lotus LX600 |
| LG | LX290 |
| LG | LX370 |
| LG | Neon™ GT365 |
| LG | Rumor 2 |
| LG | Scoop / AX-260 |
| LG | Shine 2 |
| LG | Shine II GD710 AT&T |
| LG | Shine KE970 |
| LG | Versa |
| LG | Voyager VX10000 |
| LG | VX5500 |
| LG | VX8360 |
| LG | VX8610 |
| LG | Xenon™ GR500 |
| LG | LX400 |
| Moto | Backflip ME600 |
| Moto | EM35 |
| Moto | Z9N |
| Motorola | Cliq |
| Motorola | Clutch (i465) |
| Motorola | Droid |
| Motorola | E1 |
| Motorola | E1070 |
| Motorola | E398 |
| Motorola | E8 |
| Motorola | EM30 |
| Motorola | i365 |
| Motorola | i576 |
| Motorola | i776 |
| Motorola | I9 |
| Motorola | K3 |
| Motorola | Karma QA1 |
| Motorola | MOTO™ Z9 |
| Motorola | Q11 |
| Motorola | Q9H |
| Motorola | RAZR V3 |
| Motorola | RAZR V3xx Pink |
| Motorola | RAZR2 V9 |
| Motorola | Rival |
| Motorola | SLVR L72 |
| Motorola | U9 |
| Motorola | V1100 |
| Motorola | V360 |
| Motorola | V365 |
| Motorola | V3i |
| Motorola | V3x |
| Motorola | V500 |
| Motorola | V550 |
| Motorola | V551 |
| Motorola | V6 |
| Motorola | V750 |
| Motorola | V80 |
| Motorola | V860 |
| Motorola | V9 |
| Motorola | V950 |

In the United States District Court for the District Of

Case 1:17-cv-00082-LPS-CJB Document 21 Filed 05/30/17 Page 1 of 56 PageID #: 374

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE

3G LICENSING, S.A., KONINKLIJKE KPN N.V., and ORANGE S.A., Plaintiffs, v. BLACKBERRY LIMITED and BLACKBERRY CORPORATION, Defendants.

C.A. No. 17-cv-82-LPS-CJB

JURY TRIAL DEMANDED

SECOND AMENDED COMPLAINT FOR PATENT INFRINGEMENT

This is an action for patent infringement in which Plaintiffs 3G Licensing, S.A. (hereafter “3G Licensing”), Orange S.A. (hereafter “Orange”) and Koninklijke KPN N.V. (hereafter “KPN”) (collectively “Plaintiffs”) make the following allegations against BlackBerry Ltd., and BlackBerry Corporation (collectively “BlackBerry” or “Defendants”):

BACKGROUND

1. 3G Licensing holds more than 400 patents and patent applications fundamental to a variety of core technologies involving consumer electronics and wireless telecommunication implementations. 3G Licensing’s patents have been licensed by many of the world’s leading mobile technology companies, including BlackBerry’s competitors.

2. KPN’s extensive research and development efforts likewise have led to hundreds of issued patents in the United States and across the world. These patents have been licensed in turn by leading global telecommunications companies, including many of BlackBerry’s mobile technology competitors.

3. Plaintiffs have made their patents available for license on an individual basis through bilateral negotiations and, at the licensor’s option, collectively through joint licensing or patent pool licensing arrangements.

4. Prior to filing suit in this action, Plaintiffs provided BlackBerry with notice of the patents at issue and engaged in lengthy negotiations with BlackBerry to try to resolve this dispute.

BlackBerry Blues

BlackBerry Blues

Contents

Chapter 1 - Products

1. The new BlackBerry 9360: It’s got sex appeal
2. 9 reasons why we won’t be playing with the new PlayBook!
3. BlackBerry PlayBook: RIM should ditch tablets to focus on smartphones
4. Now, use your BlackBerry as a wallet
5. Should you buy the new BlackBerry Bold 9900?

Chapter 2 - The BB is dying!

6. RIM’s silence on BlackBerry outage betrays crisis of leadership
7. Is BlackBerry the new Nokia?
8. BlackBerry has to think fast to protect its corporate turf
9. More trouble for RIM: No one wants to sell the new PlayBook
10. BlackBerry: From Crackberry to withdrawal

Chapter 3 - India’s BlackBerry Boys

11. Can India save BlackBerry?
12. RIM unveils BlackBerry Torch 9860, priced at Rs 28,940
13. BlackBerry-Government impasse may end with real time interception plan

Chapter 4 - BB and the UK riots

14. How BlackBerry Messenger was used to organise London’s riots
15. London Riots: BlackBerry boys and BlackBerry yobs
16. BlackBerry may switch off UK service during strife
17. London Riots: Don’t blame tech but the people who use it

Chapter 5 - The Outage

Day 1

18. Vodafone India BlackBerry users face major glitch
19. BlackBerry services restored day after outrage

Day 2

20. BlackBerry services crash for the second day
21. BlackBerry maker gets a black eye as outage spreads

Day 3

22. BlackBerry outage really an outrage

The BlackBerry® Style™ 9670 Smartphone Only from Sprint

The BlackBerry® Style™ 9670 smartphone

Only from Sprint. Only on the Now Network.™

© 2010 Sprint. This information is subject to Sprint policies regarding use and is the property of Sprint and/or its relevant affiliates and may contain restricted, confidential or privileged materials intended for the sole use of the intended recipient. Any review, use, distribution or disclosure is prohibited without authorization. 105976

Business style redefined

Improve business communications with the new BlackBerry Style 9670 smartphone

• Secure and powerful device in a sleek, compact flip form factor

• Combines enhanced browsing, universal search and an intuitive design with core BlackBerry® capabilities, like BlackBerry Messenger, security and IT control

• Enables mobile professionals to work on the go with the security of BlackBerry

• Allows workers to gain quick and secure access to important business applications, back office information and customer data, which increases business efficiency and productivity

BlackBerry Style 9670 smartphone benefits

Expands the boundaries of the mobile professional

• Intuitive, fluid design for movement

• Fast, enhanced browsing with tabbed with

BlackBerry 10

Universidad Católica "Nuestra Señora de la Asunción"

Theory and Application of Informatics II

BlackBerry 10

Instructor: Ing. Juan de Urraza

Author: Oscar Pedrozo

Abstract

This work focuses mainly on the BlackBerry device in general and on the latest release of its operating system, BlackBerry 10, with which the company tried to stay afloat and once again take the number one position among today's smartphones. We will look at its history, its emergence, its features, its use, and how it differs from the other smartphones on the market.

Table of contents

1. Introduction

1.1. Origin of the Name

1.2. History

1.3. Evolution of BlackBerry

It began with a "pager": the 850 series

The 957 series

BlackBerry 5810 (2002)

BlackBerry 6210 (2004)

BlackBerry 7250 (2005)

BlackBerry Charm 7100 (2004)

BlackBerry Pearl 8100 (2006)

The 8000 family

BlackBerry Curve 8300 (2007)

BlackBerry Storm, BlackBerry Pearl Flip and BlackBerry Bold 9000 (2008)

BlackBerry Style 9670 (2010)

BlackBerry Torch 9850 (2011)

BlackBerry Z10

2. BlackBerry OS

Comparative Analysis of Mobile Device Operating Systems: Android, iPhone and BlackBerry

Comparative Analysis of Mobile Device Operating Systems: Android, iPhone and BlackBerry

Yeison Miguel Bermúdez Moreno

Juan Guillermo López Hincapié

Degree project

Director: Engineer Omar Iván Trejos

Universidad Tecnológica de Pereira, Faculty of Engineering, Systems and Computer Engineering, Pereira, 2011

Acceptance note:

Signature of the jury president

Signature of juror

Signature of juror

Pereira, Friday, November 11, 2011

DEDICATION

Yeison Miguel Bermúdez Moreno

I dedicate this degree project, with the most sincere love, to my mother, Secundina Moreno M., and my father, Miguel Bermúdez Valencia, for giving me their unconditional support throughout my life without expecting anything in return. They placed all their affection, trust, and effort in me with the sole aim of seeing me become a good person who could help build a better society through my positive contributions. Difficult times never kept their support from being constant. I cherish them with all my heart; these words are only an expression of affection and cannot describe everything I feel for them. This work is the fruit of all the effort they had to go through, and to them go my most sincere love and this dedication.

Juan Guillermo López Hincapié

This project is dedicated first of all to my parents, Guillermo López López and Luz Aydee Hincapié, because their selfless love, effort, and concern for me and my studies throughout my life have allowed me today to be on the verge of obtaining a professional degree.

Wikipedia Mobile Ethnography United States

Wikipedia Mobile Ethnography United States

Research Dates: August 31–October 6, 2011

CONTENTS

• Background & Objectives

• Methodology

• Executive Summary – Overall Themes & Opportunities

• Executive Summary – Platform-Specific Opportunities

• Executive Summary – Additional Opportunities for Engagement

• Overall Mobile Device Usage

• Overall Wikipedia Experiences

• Mobile Wikipedia Experiences

• About AnswerLab

11/14/2011 Wikipedia Mobile Ethnography – Final Research Report

BACKGROUND & OBJECTIVES

Wikimedia Foundation would like to get a better understanding of its mobile consumer. Key research questions and areas:

• How do users access, read, search and consume Wikipedia content on their mobile devices?

• Observe and understand Wikipedia editors’ current editing processes on desktop and mobile devices.

• What are notable behaviors, preferences, barriers and technologies that impact usage of Wikipedia through mobile devices?

• Identify needs and opportunities to inform the WMF mobile strategy.

• What are the opportunities and threshold for contributions on the mobile platform?

METHODOLOGY

• AnswerLab conducted a 5-week field study to assess the effectiveness of Wikimedia Foundation’s mobile offerings as participants used them in their natural environment

• Study participants were located in San Francisco, Chicago, and Dallas

o Phase 1: 45-minute introductory phone interviews with 36 users

o Phase 2: 3 weeks of self-reported feedback on mobile and Wikipedia usage

o Phase 3: 120-minute in-home

Mobile Phone Monitor Software User's Manual

Mobile Phone Monitor Software User’s Manual

For Blackberry only

Table of Contents

1 OVERVIEW

1.1 General Introduction

1.2 Feature

1.3 Environment

2 SOFTWARE INSTALLATION

3 OPERATION

3.1 DVR Setup

3.1.1 Encode

3.1.2 Account

3.2 Mobile Phone Software

3.2.1 Software Login