COMPUTER ORGANIZATION-Basics

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Fill Your Boots: Enhanced Embedded Bootloader Exploits Via Fault Injection and Binary Analysis

IACR Transactions on Cryptographic Hardware and Embedded Systems, ISSN 2569-2925, Vol. 2021, No. 1, pp. 56–81. DOI: 10.46586/tches.v2021.i1.56-81

Fill your Boots: Enhanced Embedded Bootloader Exploits via Fault Injection and Binary Analysis

Jan Van den Herrewegen (1), David Oswald (1), Flavio D. Garcia (1) and Qais Temeiza (2)

(1) School of Computer Science, University of Birmingham, UK, {jxv572,d.f.oswald,f.garcia}@cs.bham.ac.uk
(2) Independent Researcher, [email protected]

Abstract. The bootloader of an embedded microcontroller is responsible for guarding the device’s internal (flash) memory, enforcing read/write protection mechanisms. Fault injection techniques such as voltage or clock glitching have been proven successful in bypassing such protection for specific microcontrollers, but this often requires expensive equipment and/or exhaustive search of the fault parameters. When multiple glitches are required (e.g., when countermeasures are in place) this search becomes of exponential complexity and thus infeasible. Another challenge which makes embedded bootloaders notoriously hard to analyse is their lack of debugging capabilities. This paper proposes a grey-box approach that leverages binary analysis and advanced software exploitation techniques combined with voltage glitching to develop a powerful attack methodology against embedded bootloaders. We showcase our techniques with three real-world microcontrollers as case studies: 1) we combine static and on-chip dynamic analysis to enable a Return-Oriented Programming exploit on the bootloader of the NXP LPC microcontrollers; 2) we leverage on-chip dynamic analysis on the bootloader of the popular STM8 microcontrollers to constrain the glitch parameter search, achieving the first fully-documented multi-glitch attack on a real-world target; 3) we apply symbolic execution to precisely aim voltage glitches at target instructions based on the execution path in the bootloader of the Renesas 78K0 automotive microcontroller.

18-447 Computer Architecture Lecture 6: Multi-Cycle and Microprogrammed Microarchitectures

18-447 Computer Architecture, Lecture 6: Multi-Cycle and Microprogrammed Microarchitectures. Prof. Onur Mutlu, Carnegie Mellon University, Spring 2015, 1/28/2015

Agenda for Today & Next Few Lectures

- Single-cycle Microarchitectures
- Multi-cycle and Microprogrammed Microarchitectures
- Pipelining
- Issues in Pipelining: Control & Data Dependence Handling, State Maintenance and Recovery, …
- Out-of-Order Execution
- Issues in OoO Execution: Load-Store Handling, …

Reminder on Assignments

- Lab 2 due next Friday (Feb 6)
  - Start early!
- HW 1 due today
- HW 2 out
- Remember that all is for your benefit
  - Homeworks, especially so
  - All assignments can take time, but the goal is for you to learn very well

Lab 1 Grades

[Histogram of Lab 1 grades; vertical axis: Number of Students]

- Mean: 88.0
- Median: 96.0
- Standard Deviation: 16.9

Extra Credit for Lab Assignment 2

- Complete your normal (single-cycle) implementation first, and get it checked off in lab.
- Then, implement the MIPS core using a microcoded approach similar to what we will discuss in class.
- We are not specifying any particular details of the microcode format or the microarchitecture; you can be creative.
- For the extra credit, the microcoded implementation should execute the same programs that your ordinary implementation does, and you should demo it by the normal lab deadline.
- You will get maximum 4% of course grade
- Document what you have done and demonstrate well

Readings for Today

- P&P, Revised Appendix C
  - Microarchitecture of the LC-3b
  - Appendix A (LC-3b ISA) will be useful in following this
- P&H, Appendix D
  - Mapping Control to Hardware
- Optional
  - Maurice Wilkes, “The Best Way to Design an Automatic Calculating Machine,” Manchester Univ.

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE: DESIGNING FOR PERFORMANCE, NINTH EDITION

William Stallings

Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo

- Editorial Director: Marcia Horton
- Executive Editor: Tracy Dunkelberger
- Associate Editor: Carole Snyder
- Director of Marketing: Patrice Jones
- Marketing Manager: Yez Alayan
- Marketing Coordinator: Kathryn Ferranti
- Marketing Assistant: Emma Snider
- Director of Production: Vince O’Brien
- Managing Editor: Jeff Holcomb
- Production Project Manager: Kayla Smith-Tarbox
- Production Editor: Pat Brown
- Manufacturing Buyer: Pat Brown
- Creative Director: Jayne Conte
- Designer: Bruce Kenselaar
- Manager, Visual Research: Karen Sanatar
- Manager, Rights and Permissions: Mike Joyce
- Text Permission Coordinator: Jen Roach
- Cover Art: Charles Bowman/Robert Harding
- Lead Media Project Manager: Daniel Sandin
- Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd.
- Composition: Integra Software Services Pvt. Ltd.
- Printer/Binder: Edward Brothers
- Cover Printer: Lehigh-Phoenix Color/Hagerstown
- Text Font: Times Ten-Roman

Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and Electronically reproduced by permission of Pearson Education, Inc. Upper Saddle River, New Jersey. Figure 17.11: Reprinted with permission from Ethernet Alliance.

Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text.

Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America.

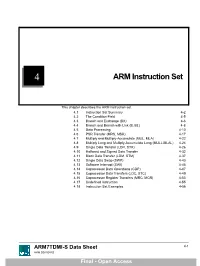

ARM Instruction Set

4 ARM Instruction Set

This chapter describes the ARM instruction set.

- 4.1 Instruction Set Summary
- 4.2 The Condition Field
- 4.3 Branch and Exchange (BX)
- 4.4 Branch and Branch with Link (B, BL)
- 4.5 Data Processing
- 4.6 PSR Transfer (MRS, MSR)
- 4.7 Multiply and Multiply-Accumulate (MUL, MLA)
- 4.8 Multiply Long and Multiply-Accumulate Long (MULL, MLAL)
- 4.9 Single Data Transfer (LDR, STR)
- 4.10 Halfword and Signed Data Transfer
- 4.11 Block Data Transfer (LDM, STM)
- 4.12 Single Data Swap (SWP)
- 4.13 Software Interrupt (SWI)
- 4.14 Coprocessor Data Operations (CDP)
- 4.15 Coprocessor Data Transfers (LDC, STC)
- 4.16 Coprocessor Register Transfers (MRC, MCR)
- 4.17 Undefined Instruction
- 4.18 Instruction Set Examples

ARM7TDMI-S Data Sheet, ARM DDI 0084D, Final - Open Access

4.1 Instruction Set Summary

4.1.1 Format summary

The ARM instruction set formats are shown below. Fields are listed from bit 31 down to bit 0.

- Data Processing / PSR Transfer: Cond 0 0 I Opcode S Rn Rd Operand 2
- Multiply: Cond 0 0 0 0 0 0 A S Rd Rn Rs 1 0 0 1 Rm
- Multiply Long: Cond 0 0 0 0 1 U A S RdHi RdLo Rn 1 0 0 1 Rm
- Single Data Swap: Cond 0 0 0 1 0 B 0 0 Rn Rd 0 0 0 0 1 0 0 1 Rm
- Branch and Exchange: Cond 0 0 0 1 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 Rn
- Halfword Data Transfer, register offset: Cond 0 0 0 P U 0 W L Rn Rd 0 0 0 0 1 S H 1 Rm
- Halfword Data Transfer, immediate offset: Cond 0 0 0 P U 1 W L Rn Rd Offset 1 S H 1 Offset

The Von Neumann Computer Model 5/30/17, 10:03 PM

CIS-77 Home: http://www.c-jump.com/CIS77/CIS77syllabus.htm

The von Neumann Computer Model

1. The von Neumann Computer Model
2. Components of the Von Neumann Model
3. Communication Between Memory and Processing Unit
4. CPU data-path
5. Memory Operations
6. Understanding the MAR and the MDR
7. Understanding the MAR and the MDR, Cont.
8. ALU, the Processing Unit
9. ALU and the Word Length
10. Control Unit
11. Control Unit, Cont.
12. Input/Output
13. Input/Output Ports
14. Input/Output Address Space
15. Console Input/Output in Protected Memory Mode
16. Instruction Processing
17. Instruction Components
18. Why Learn Intel x86 ISA?
19. Design of the x86 CPU Instruction Set
20. CPU Instruction Set
21. History of IBM PC
22. Early x86 Processor Family
23. 8086 and 8088 CPU
24. 80186 CPU
25. 80286 CPU
26. 80386 CPU
27. 80386 CPU, Cont.
28. 80486 CPU
29. Pentium (Intel 80586)
30. Pentium Pro
31. Pentium II
32. Itanium processor

1. The von Neumann Computer Model

Von Neumann computer systems contain three main building blocks: the central processing unit (CPU), memory, and input/output devices (I/O). These three components are connected together using the system bus. The most prominent items within the CPU are the registers: they can be manipulated directly by a computer program. A block diagram (not reproduced here) shows the major relationships between CPU components.

Source: http://www.c-jump.com/CIS77/CPU/VonNeumann/lecture.html (IPR2017-01532 FanDuel, et al.)

Hp Storageworks Disk System 2405

user’s guide: hp StorageWorks disk system 2405, Edition E0902

Notice

© Hewlett-Packard Company, 2002. All rights reserved. A6250-96020

Hewlett-Packard Company makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with the furnishing, performance, or use of this material.

This document contains proprietary information, which is protected by copyright. No part of this document may be photocopied, reproduced, or translated into another language without the prior written consent of Hewlett-Packard. The information contained in this document is subject to change without notice.

Trademark Information

- Red Hat is a registered trademark of Red Hat Co.
- C.A. UniCenter TNG is a registered trademark of Computer Associates International, Inc.
- Microsoft, Windows NT, and Windows 2000 are registered trademarks of Microsoft Corporation.
- HP, HP-UX are registered trademarks of Hewlett-Packard Company. Command View, Secure Manager, Business Copy, Auto Path, Smart Plug-Ins are trademarks of Hewlett-Packard Company.
- Adobe and Acrobat are trademarks of Adobe Systems Inc.
- Java and Java Virtual Machine are trademarks of Sun Microsystems Inc.
- NetWare is a trademark of Novell, Inc.
- AIX is a registered trademark of International Business Machines, Inc.
- Tru64 and OpenVMS are registered trademarks of Compaq Corporation.

Format Conventions

Studies on Hardware Algorithms for Arithmetic Operations

- Title: Studies on Hardware Algorithms for Arithmetic Operations with a Redundant Binary Representation (Dissertation, full text)
- Author(s): Takagi, Naofumi
- Citation: Kyoto University (京都大学)
- Issue Date: 1988-01-23
- URL: http://dx.doi.org/10.14989/doctor.r6406
- Type: Thesis or Dissertation
- Textversion: author

Naofumi TAKAGI, Department of Information Science, Faculty of Engineering, Kyoto University, August 1987

Abstract

Arithmetic has played important roles in human civilization, especially in the area of science, engineering and technology. With recent advances of IC (Integrated Circuit) technology, more and more sophisticated arithmetic processors have become standard hardware for high-performance digital computing systems. It is desired to develop high-speed multipliers, dividers and other specialized arithmetic circuits suitable for VLSI (Very Large Scale Integrated circuit) implementation. In order to develop such high-performance arithmetic circuits, it is important to design hardware algorithms for these operations, i.e., algorithms suitable for hardware implementation. The design of hardware algorithms for arithmetic operations has become a very attractive research subject.

In this thesis, new hardware algorithms for multiplication, division, square root extraction and computations of several elementary functions are proposed. In these algorithms a redundant binary representation which has radix 2 and a digit set $\{\bar{1}, 0, 1\}$ (where $\bar{1}$ denotes $-1$) is used for internal computation. In the redundant binary number system, addition can be performed in a constant time independent of the word length of the operands. The hardware algorithms proposed in this thesis achieve high-speed computation by using this property.

3.2 the CORDIC Algorithm

UC San Diego Electronic Theses and Dissertations

- Title: Improved VLSI architecture for attitude determination computations
- Permalink: https://escholarship.org/uc/item/5jf926fv
- Author: Arrigo, Jeanette Fay Freauf
- Publication Date: 2006
- Peer reviewed | Thesis/dissertation

eScholarship.org, powered by the California Digital Library, University of California

UNIVERSITY OF CALIFORNIA, SAN DIEGO

Improved VLSI Architecture for Attitude Determination Computations

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Electrical and Computer Engineering (Electronic Circuits and Systems) by Jeanette Fay Freauf Arrigo

Committee in charge:

- Professor Paul M. Chau, Chair
- Professor C.K. Cheng
- Professor Sujit Dey
- Professor Lawrence Larson
- Professor Alan Schneider

2006

Copyright Jeanette Fay Freauf Arrigo, 2006. All rights reserved.

DEDICATION

This thesis is dedicated to my husband Dale Arrigo for his encouragement, support and model of perseverance, and to my father Eugene Freauf for his patience during my pursuit. In memory of my mother Fay Freauf and grandmother Fay Linton Thoreson, incredible mentors and great advocates of the quest for knowledge.

TABLE OF CONTENTS

- Signature Page
- Dedication
- Table of Contents

Compressor Based 32-32 Bit Vedic Multiplier Using Reversible Logic

IAETSD JOURNAL FOR ADVANCED RESEARCH IN APPLIED SCIENCES. ISSN NO: 2394-8442

COMPRESSOR BASED 32-32 BIT VEDIC MULTIPLIER USING REVERSIBLE LOGIC

KAVALI USMAVATHI *, PROF. NAVEEN RATHEE **

\* PG SCHOLAR, Department of VLSI System Design, Bharat Institute of Engineering and Technology
\*\* Professor, Department of Electronics and Communication Engineering at Bharat Institute of Engineering and Technology, TS, India.

ABSTRACT: Vedic arithmetic is the name given to the antiquated Indian arrangement of arithmetic that was rediscovered in the early twentieth century from antiquated Indian figures (Vedas). This paper proposes the plan of fast Vedic Multiplier utilizing the procedures of Vedic Mathematics that have been altered to improve execution. A fast processor depends enormously on the multiplier as it is one of the key equipment obstructs in most computerized flag preparing frameworks just as when all is said in done processors. Vedic Mathematics has an exceptional strategy of estimations based on 16 Sutras. This paper presents think about on rapid 32x32 piece Vedic multiplier design which is very not quite the same as the Traditional technique for augmentation like include and move.

… arithmetic and algebraic operations which are accepted by worldwide. The origin of Vedic mathematics is from Vedas and to more specific Atharva Veda which deals with Engineering branches, Mathematics, sculpture, Medicine and all other sciences which are ruling today’s world. Vedic mathematics, which simplifies arithmetic and algebraic operations, can be implemented both in decimal and binary number systems. It is an ancient technique, which simplifies multiplication, division, complex numbers, squares, cubes, square roots and cube roots. Recurring decimals and auxiliary fractions can be handled by Vedic mathematics. This made possible to solve many Engineering applications, Signal processing Applications, DFT’s, FFT’s and

Design and Implementation of Floating Point Multiplier for Better Timing Performance

International Journal of Advanced Research in Computer Engineering & Technology (IJARCET), ISSN: 2278-1323, Volume 1, Issue 7, September 2012

Design and Implementation of Floating Point Multiplier for Better Timing Performance

B. Sreenivasa Ganesh (1), J.E.N. Abhilash (2), G. Rajesh Kumar (3)

(1, 2) Swarnandhra College of Engineering & Technology; (3) Vishnu Institute of Technology

Abstract: IEEE Standard 754 floating point is the most common representation today for real numbers on computers. This paper gives a brief overview of IEEE floating point and its representation. This paper describes a single precision floating point multiplier for better timing performance. The main object of this paper is to reduce the power consumption and to increase the speed of execution by implementing certain algorithm for multiplying two floating point numbers. The design is described in the VHDL hardware description language and targeted on a Xilinx Spartan-3 FPGA. The implementation’s tradeoffs are area, speed and accuracy. This multiplier also handles overflow and underflow cases. For high accuracy of the results normalization is also applied. By the use of pipelining process this multiplier is very good at speed and accuracy compared with the previous multipliers. This pipelined architecture is described in VHDL and is implemented on Xilinx Spartan 3 FPGA. Timing performance is measured with Xilinx Timing Analyzer. Timing performance is compared with standard multipliers.

Index Terms: Floating point, multiplication, VHDL, Spartan-3 FPGA, Pipelined architecture, Timing Analyzer, IEEE Standard 754

… p0 = a0b0, p1 = a0b1 + a1b0, p2 = a0b2 + a1b1 + a2b0 + carry(p1), etc. This circuit operates only with positive (unsigned) inputs, also producing a positive (unsigned) output.

Fig 1 Binary Multiplication

This unsigned multiplier is useful in the multiplication of mantissa part of floating point numbers.

A 4.7 Million-Transistor CISC Microprocessor

Auriga2: A 4.7 Million-Transistor CISC Microprocessor

J.P. Tual, M. Thill, C. Bernard, H.N. Nguyen, F. Mottini, M. Moreau, P. Vallet

Hardware Development Paris & Angers, BULL S.A., 78340 Les Clayes-sous-Bois, FRANCE. Tel: (+33)-1-30-80-7304, Fax: (+33)-1-30-80-7163, Mail: [email protected]

Abstract: With the introduction of the high range version of the DPS7000 mainframe family, Bull is providing a processor which integrates the DPS7000 CPU and first level of cache on one VLSI chip containing 4.7M transistors and using a 0.5 µm, 3Mlayers CMOS technology. This enhanced CPU has been designed to provide a high integration, high performance and low cost systems. Up to 24 such processors can be integrated in a single system, enabling performance levels in the range of 850 TPC-A (Oracle) with about 12 000 simultaneously active connections. The design methodology involved massive use of formal verification and symbolic layout techniques, enabling to reach first pass right silicon on several foundries.

… parallel multi-processor architecture. It is used in a family of systems able to handle up to 24 such microprocessors, capable to support 10 000 simultaneously connected users. For the development of this complex circuit, a system level design methodology has been put in place, putting high emphasis on high-level verification issues. A lot of home-made CAD tools were developed, to meet the stringent performance/area constraints. In particular, an integrated Logic Synthesis and Formal Verification environment tool has been developed, to deal with complex circuitry issues and to enable the designer to shorten the iteration loop between logical design and physical implementation of the

Embedded Multi-Core Processing for Networking

12 Embedded Multi-Core Processing for Networking (in Multi-Core Embedded Systems)

Theofanis Orphanoudakis, University of Peloponnese, Tripoli, Greece, [email protected]

Stylianos Perissakis, Intracom Telecom, Athens, Greece, [email protected]

CONTENTS

- 12.1 Introduction
- 12.2 Overview of Proposed NPU Architectures
  - 12.2.1 Multi-Core Embedded Systems for Multi-Service Broadband Access and Multimedia Home Networks
  - 12.2.2 SoC Integration of Network Components and Examples of Commercial Access NPUs
  - 12.2.3 NPU Architectures for Core Network Nodes and High-Speed Networking and Switching
- 12.3 Programmable Packet Processing Engines
  - 12.3.1 Parallelism
  - 12.3.2 Multi-Threading Support
  - 12.3.3 Specialized Instruction Set Architectures
- 12.4 Address Lookup and Packet Classification Engines
  - 12.4.1 Classification Techniques
    - 12.4.1.1 Trie-based Algorithms
    - 12.4.1.2 Hierarchical Intelligent Cuttings (HiCuts)
  - 12.4.2 Case Studies
- 12.5 Packet Buffering and Queue Management Engines
  - 12.5.1 Performance Issues
    - 12.5.1.1 External DRAM Memory Bottlenecks
    - 12.5.1.2 Evaluation of Queue Management Functions: INTEL IXP1200 Case
  - 12.5.2 Design of Specialized Core for Implementation of Queue Management in Hardware
    - 12.5.2.1 Optimization Techniques
    - 12.5.2.2 Performance Evaluation of Hardware Queue Management Engine
- 12.6 Scheduling Engines
  - 12.6.1 Data Structures in Scheduling Architectures
  - 12.6.2 Task Scheduling
    - 12.6.2.1 Load Balancing
  - 12.6.3 Traffic Scheduling