CCP4 Newsletter on Protein Crystallography

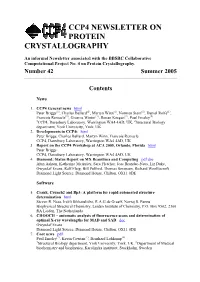

CCP4 NEWSLETTER ON PROTEIN CRYSTALLOGRAPHY

An informal Newsletter associated with the BBSRC Collaborative Computational Project No. 4 on Protein Crystallography.

Number 42, Summer 2005

Contents

News

1. CCP4 General news (html)
   Peter Briggs(1), Charles Ballard(1), Martyn Winn(1), Norman Stein(1), Daniel Rolfe(1), Francois Remacle(1), Graeme Winter(1), Ronan Keegan(1), Paul Emsley(2)
   (1) CCP4, Daresbury Laboratory, Warrington WA4 4AD, UK; (2) Structural Biology department, York University, York, UK

2. Developments in CCP4i (html)
   Peter Briggs, Charles Ballard, Martyn Winn, Francois Remacle
   CCP4, Daresbury Laboratory, Warrington WA4 4AD, UK

3. Report on the CCP4 Workshop at ACA 2005, Orlando, Florida (html)
   Peter Briggs
   CCP4, Daresbury Laboratory, Warrington WA4 4AD, UK

4. Diamond: Status Report on MX Beamlines and Computing (pdf, doc)
   Alun Ashton, Katherine McAuley, Sara Fletcher, Jose Brandao-Neto, Liz Duke, Gwyndaf Evans, Ralf Flaig, Bill Pulford, Thomas Sorensen, Richard Woolliscroft
   Diamond Light Source, Diamond House, Chilton, OX11 0DE

Software

5. Crank, Crunch2 and Bp3: A platform for rapid automated structure determination (html)
   Steven R. Ness, Irakli Sikharulidze, R.A.G de Graaff, Navraj S. Pannu
   Biophysical Structural Chemistry, Leiden Institute of Chemistry, P.O. Box 9502, 2300 RA Leiden, The Netherlands

6. CHOOCH – automatic analysis of fluorescence scans and determination of optimal X-ray wavelengths for MAD and SAD (doc)
   Gwyndaf Evans
   Diamond Light Source, Diamond House, Chilton, OX11 0DE

7. Coot news (pdf)
   Paul Emsley(1), Kevin Cowtan(1), Bernhard Lohkamp(2)
   (1) Structural Biology department, York University, York, UK; (2) Department of Medical biochemistry and biophysics, Karolinska institutet, Stockholm, Sweden

8. The Phenix refinement framework (doc)
   Afonine P.V., Grosse-Kunstleve R.W, Adams P.D.