On the Complexity of Trick-Taking Card Games

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Artificial Intelligence and Machine Learning

ISSUE 1 · 2018 TECHNOLOGY TODAY Highlighting Raytheon’s Engineering & Technology Innovations SPOTLIGHT EYE ON TECHNOLOGY SPECIAL INTEREST Artificial Intelligence Mechanical the invention engine Raytheon receives the 10 millionth and Machine Learning Modular Open Systems U.S. Patent in history at raytheon Architectures Discussing industry shifts toward open standards designs A MESSAGE FROM Welcome to the newly formatted Technology Today magazine. MARK E. While the layout has been updated, the content remains focused on critical Raytheon engineering and technology developments. This edition features Raytheon’s advances in Artificial Intelligence RUSSELL and Machine Learning. Commercial applications of AI and ML — including facial recognition technology for mobile phones and social applications, virtual personal assistants, and mapping service applications that predict traffic congestion Technology Today is published by the Office of — are becoming ubiquitous in today’s society. Furthermore, ML design Engineering, Technology and Mission Assurance. tools provide developers the ability to create and test their own ML-based applications without requiring expertise in the underlying complex VICE PRESIDENT mathematics and computer science. Additionally, in its 2018 National Mark E. Russell Defense Strategy, the United States Department of Defense has recognized the importance of AI and ML as an enabler for maintaining CHIEF TECHNOLOGY OFFICER Bill Kiczuk competitive military advantage. MANAGING EDITORS Raytheon understands the importance of these technologies and Tony Pandiscio is applying AI and ML to solutions where they provide benefit to our Tony Curreri customers, such as in areas of predictive equipment maintenance, SENIOR EDITORS language classification of handwriting, and automatic target recognition. Corey Daniels Not only does ML improve Raytheon products, it also can enhance Eve Hofert our business operations and manufacturing efficiencies by identifying DESIGN, PHOTOGRAPHY AND WEB complex patterns in historical data that result in process improvements. -

American Skat : Or, the Game of Skat Defined : a Descriptive and Comprehensive Guide on the Rules and Plays of This Interesting

'JTV American Skat, OR, THE GAME OF SKAT DEFINED. BY J. CHARLES EICHHORN A descriptive and comprehensive Guide on the Rules and Plays of if. is interesting game, including table, definition of phrases, finesses, and a full treatise on Skat as played to-day. CHICAGO BREWER. BARSE & CO. Copyrighted, January 21, 1898 EICHHORN By J. CHARLES SKAT, with its many interesting features and plays, has now gained such a firm foothold in America, that my present edition in the English language on this great game of cards, will not alone serve as a guide for beginners, but will be a complete compendium on this absorbing game. It is just a decade since my first book was pub- lished. During the past 10 years, the writer has visited, and been in personal touch with almost all the leading authorities on the game of Skat in Amer- ica as well as in Europe, besides having been contin- uously a director of the National Skat League, as well as a committeeman on rules. In pointing out the features of the game, in giving the rules, defining the plays, tables etc., I shall be as concise as possible, using no complicated or lengthy remarks, but in short and comprehensive manner, give all the points and information required. The game of Skat as played today according to the National Skat League values and rulings, with the addition of Grand Guckser and Passt Nicht as variations, is as well balanced a game, as the leading authorities who have given the same both thorough study and consideration, can make. -

The Game of Whist

THE GAME OF WHIST “WHIST” comes from an English word meaning silent and attentive. Whist is a trick-based card game similar to Euchre and Hearts. Originally developed in eighteenth-century English social circles, it quickly became one of the most popular games in the American colonies and early United States. LAWS OF THE GAME Whist is played with 4 people in pairs of 2. Cut the deck: the player who draws a high card is the dealer! The dealer deals each player 13 cards. The last card (to the dealer) is dealt face up, indicating the trump suit. The player to the dealer’s left leads the first “trick” with any card they choose. “A Pig in a Poke, Whist, Whist” Hand-coloured etching Aces are high. Twos are low. by James Gillray, 1788. National Portrait Gallery, London Moving clockwise, each player plays a card on that trick. If they can, players must follow the suit of the card led or they may play a trump card. Players with no card of that suit can play any card, including a trump card. The player with the highest card of the led suit or the highest trump card wins the trick and places the 4 cards to their side. TRICKS The winning player leads the next trick. When all of the cards have been played, for total of 12 tricks, each pair earns 1 point for every trick they won in excess of 6. For example, a player who wins 8 tricks would earn 2 points. A trick penalty is given to anyone who fails to follow suit when they have that suit in hand. -

Table of Contents

TABLE OF CONTENTS System Requirements . 3 Installation . 3. Introduction . 5 Signing In . 6 HOYLE® PLAYER SERVICES Making a Face . 8 Starting a Game . 11 Placing a Bet . .11 Bankrolls, Credit Cards, Loans . 12 HOYLE® ROYAL SUITE . 13. HOYLE® PLAYER REWARDS . 14. Trophy Case . 15 Customizing HOYLE® CASINO GAMES Environment . 15. Themes . 16. Playing Cards . 17. Playing Games in Full Screen . 17 Setting Game Rules and Options . 17 Changing Player Setting . 18 Talking Face Creator . 19 HOYLE® Computer Players . 19. Tournament Play . 22. Short cut Keys . 23 Viewing Bet Results and Statistics . 23 Game Help . 24 Quitting the Games . 25 Blackjack . 25. Blackjack Variations . 36. Video Blackjack . 42 1 HOYLE® Card Games 2009 Bridge . 44. SYSTEM REQUIREMENTS Canasta . 50. Windows® XP (Home & Pro) SP3/Vista SP1¹, Catch The Ten . 57 Pentium® IV 2 .4 GHz processor or faster, Crazy Eights . 58. 512 MB (1 GB RAM for Vista), Cribbage . 60. 1024x768 16 bit color display, Euchre . 63 64MB VRAM (Intel GMA chipsets supported), 3 GB Hard Disk Space, Gin Rummy . 66. DVD-ROM drive, Hearts . 69. 33 .6 Kbps modem or faster and internet service provider Knockout Whist . 70 account required for internet access . Broadband internet service Memory Match . 71. recommended .² Minnesota Whist . 73. Macintosh® Old Maid . 74. OS X 10 .4 .10-10 .5 .4 Pinochle . 75. Intel Core Solo processor or better, Pitch . 81 1 .5 GHz or higher processor, Poker . 84. 512 MB RAM, 64MB VRAM (Intel GMA chipsets supported), Video Poker . 86 3 GB hard drive space, President . 96 DVD-ROM drive, Rummy 500 . 97. 33 .6 Kbps modem or faster and internet service provider Skat . -

Whist Points and How to Make Them

We inuBt speak by the card, or equivocation will undo us, —Hamlet wtii5T • mm And How to Make Them By C. R. KEILEY G V »1f-TMB OONTINBNTIkL OLUMj NBWYOH 1277 F'RIOE 2 5 Cknxs K^7 PUBLISHED BY C. M. BRIGHT SYRACUSE, N. Y. 1895 WHIST POINTS And How to ^Make Them ^W0 GUL ^ COPYRIGHT, 1895 C. M. BRIGHT INTRODUCTORY. This little book presupposes some knowledge of "whist on the part of the reader, and is intended to contain suggestions rather than fiats. There is nothing new in it. The compiler has simply placed before the reader as concisely as possible some of the points of whist which he thinks are of the most importance in the development of a good game. He hopes it may be of assistance to the learner in- stead of befogging him, as a more pretentious effort might do. There is a reason for every good play in whist. If the manner of making the play is given, the reason for it will occur to every one who can ever hope to become a whist player. WHIST POINTS. REGULAR LEADS. Until you have mastered all the salient points of the game, and are blessed with a partner possessing equal intelligence and information, you will do well to follow the general rule:—Open your hand by leading your longest suit. There are certain well established leads from combinations of high cards, which it would be well for every student of the game to learn. They are easy to remember, and are of great value in giving^ information regarding numerical and trick taking strength simultaneously, ACE. -

The Penguin Book of Card Games

PENGUIN BOOKS The Penguin Book of Card Games A former language-teacher and technical journalist, David Parlett began freelancing in 1975 as a games inventor and author of books on games, a field in which he has built up an impressive international reputation. He is an accredited consultant on gaming terminology to the Oxford English Dictionary and regularly advises on the staging of card games in films and television productions. His many books include The Oxford History of Board Games, The Oxford History of Card Games, The Penguin Book of Word Games, The Penguin Book of Card Games and the The Penguin Book of Patience. His board game Hare and Tortoise has been in print since 1974, was the first ever winner of the prestigious German Game of the Year Award in 1979, and has recently appeared in a new edition. His website at http://www.davpar.com is a rich source of information about games and other interests. David Parlett is a native of south London, where he still resides with his wife Barbara. The Penguin Book of Card Games David Parlett PENGUIN BOOKS PENGUIN BOOKS Published by the Penguin Group Penguin Books Ltd, 80 Strand, London WC2R 0RL, England Penguin Group (USA) Inc., 375 Hudson Street, New York, New York 10014, USA Penguin Group (Canada), 90 Eglinton Avenue East, Suite 700, Toronto, Ontario, Canada M4P 2Y3 (a division of Pearson Penguin Canada Inc.) Penguin Ireland, 25 St Stephen’s Green, Dublin 2, Ireland (a division of Penguin Books Ltd) Penguin Group (Australia) Ltd, 250 Camberwell Road, Camberwell, Victoria 3124, Australia -

GIB: Imperfect Information in a Computationally Challenging Game

Journal of Artificial Intelligence Research 14 (2001) 303–358 Submitted 10/00; published 6/01 GIB: Imperfect Information in a Computationally Challenging Game Matthew L. Ginsberg [email protected] CIRL 1269 University of Oregon Eugene, OR 97405 USA Abstract This paper investigates the problems arising in the construction of a program to play the game of contract bridge. These problems include both the difficulty of solving the game’s perfect information variant, and techniques needed to address the fact that bridge is not, in fact, a perfect information game. Gib, the program being described, involves five separate technical advances: partition search, the practical application of Monte Carlo techniques to realistic problems, a focus on achievable sets to solve problems inherent in the Monte Carlo approach, an extension of alpha-beta pruning from total orders to arbitrary distributive lattices, and the use of squeaky wheel optimization to find approximately optimal solutions to cardplay problems. Gib is currently believed to be of approximately expert caliber, and is currently the strongest computer bridge program in the world. 1. Introduction Of all the classic games of mental skill, only card games and Go have yet to see the ap- pearance of serious computer challengers. In Go, this appears to be because the game is fundamentally one of pattern recognition as opposed to search; the brute-force techniques that have been so successful in the development of chess-playing programs have failed al- most utterly to deal with Go’s huge branching factor. Indeed, the arguably strongest Go program in the world (Handtalk) was beaten by 1-dan Janice Kim (winner of the 1984 Fuji Women’s Championship) in the 1997 AAAI Hall of Champions after Kim had given the program a monumental 25 stone handicap. -

1 Problem Statement

Stanford University: CS230 Multi-Agent Deep Reinforcement Learning in Imperfect Information Games Category: Reinforcement Learning Eric Von York [email protected] March 25, 2020 1 Problem Statement Reinforcement Learning has recently made big headlines with the success of AlphaGo and AlphaZero [7]. The fact that a computer can learn to play Go, Chess or Shogi better than any human player by playing itself, only knowing the rules of the game (i.e. tabula rasa), has many people interested in this fascinating topic (myself included of course!). Also, the fact that experts believe that AlphaZero is developing new strategies in Chess is also quite impressive [4]. For my nal project, I developed a Deep Reinforcement Learning algorithm to play the card game Pedreaux (or Pedro [8]). As a kid growing up, we played a version of Pedro called Pitch with Fives or Catch Five [3], which is the version of this game we will consider for this project. Why is this interesting/hard? First, it is a game with four players, in two teams of two. So we will have four agents playing the game, and two will be cooperating with each other. Second, there are two distinct phases of the game: the bidding phase, then the playing phase. To become procient at playing the game, the agents need to learn to bidbased on their initial hands, and learn how to play the cards after the bidding phase. In addition, it is complex. There are 52 9 initial hands to consider per agent, coupled with a complicated action space, and bidding decisions, which 9 > 3×10 makes the state space too large for tabular methods and one well suited for Deep Reinforcement Learning methods such as those presented in [2, 6]. -

Winona Daily & Sunday News

Winona State University OpenRiver Winona Daily News Winona City Newspapers 3-15-1971 Winona Daily News Winona Daily News Follow this and additional works at: https://openriver.winona.edu/winonadailynews Recommended Citation Winona Daily News, "Winona Daily News" (1971). Winona Daily News. 1065. https://openriver.winona.edu/winonadailynews/1065 This Newspaper is brought to you for free and open access by the Winona City Newspapers at OpenRiver. It has been accepted for inclusion in Winona Daily News by an authorized administrator of OpenRiver. For more information, please contact [email protected]. Snow ending * News in Print: tonight; partly You Can See If, cloudy Tuesday Reread If/ Keep It Soviefs switch emphasis Near Sepone to keep grip on Egypt By DENNIS NEELD tries agreed to exchange lomat who had photograph- BEIRUT, Lebanon (AP) technical and other informa- ed a restricted military zone — ' As some diplomats see tion on security, the Cairo In Alexandria. Reds attack lt, the Soviet Union is quiet- press reported. An East German organ- ly getting a grip on Egypt's A new Egyptian police ization for sports and tech- civil and political appara- nical training, ia providing tus. force made its appearance paramilitary training for The Russians are pre- in Cairo early this year aft- Egyptian youngsters. sumed to believe that a set- er a nine-month training pro- A recent mission from the tlement of the Arab-Israeli gram which included /politi- Czech communist party was HAMba NGHL Vietnam se(AP) - Division , insaid the North LaosViet- ed finding 70 North Vietnamese conflict would lessen the cal studies conducted by headed by the hard-line Cen- North Vietnamese troops namese were moving two regi- bodies Sunday about eight importance of their mili- the East Germans. -

Red Book of Contract Bridge

The RED BOOK of CONTRACT BRIDGE A DIGEST OF ALL THE POPULAR SYSTEMS E. J. TOBIN RED BOOK of CONTRACT BRIDGE By FRANK E. BOURGET and E. J. TOBIN I A Digest of The One-Over-One Approach-Forcing (“Plastic Valuation”) Official and Variations INCLUDING Changes in Laws—New Scoring Rules—Play of the Cards AND A Recommended Common Sense Method “Sound Principles of Contract Bridge” Approved by the Western Bridge Association albert?whitman £7-' CO. CHICAGO 1933 &VlZ%z Copyright, 1933 by Albert Whitman & Co. Printed in U. S. A. ©CIA 67155 NOV 15 1933 PREFACE THE authors of this digest of the generally accepted methods of Contract Bridge have made an exhaustive study of the Approach- Forcing, the Official, and the One-Over-One Systems, and recog¬ nize many of the sound principles advanced by their proponents. While the Approach-Forcing contains some of the principles of the One-Over-One, it differs in many ways with the method known strictly as the One-Over-One, as advanced by Messrs. Sims, Reith or Mrs, Kerwin. We feel that many of the millions of players who have adopted the Approach-Forcing method as advanced by Mr. and Mrs. Culbertson may be prone to change their bidding methods and strategy to conform with the new One-Over-One idea which is being fused with that system, as they will find that, by the proper application of the original Approach- Forcing System, that method of Contract will be entirely satisfactory. We believe that the One-Over-One, by Mr. Sims and adopted by Mrs. -

Pinochle-Rules.Pdf

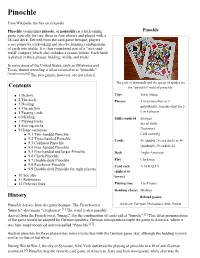

Pinochle From Wikipedia, the free encyclopedia Pinochle (sometimes pinocle, or penuchle) is a trick-taking Pinochle game typically for two, three or four players and played with a 48 card deck. Derived from the card game bezique, players score points by trick-taking and also by forming combinations of cards into melds. It is thus considered part of a "trick-and- meld" category which also includes a cousin, belote. Each hand is played in three phases: bidding, melds, and tricks. In some areas of the United States, such as Oklahoma and Texas, thumb wrestling is often referred to as "pinochle". [citation needed] The two games, however, are not related. The jack of diamonds and the queen of spades are Contents the "pinochle" meld of pinochle. 1 History Type Trick-taking 2 The deck Players 4 in partnerships or 3 3 Dealing individually, variants exist for 2- 4 The auction 6 or 8 players 5 Passing cards 6 Melding Skills required Strategy 7 Playing tricks Social skills 8 Scoring tricks Teamwork 9 Game variations 9.1 Two-handed Pinochle Card counting 9.2 Three-handed Pinochle Cards 48 (double 24 card deck) or 80 9.3 Cutthroat Pinochle (quadruple 20 card deck) 9.4 Four-handed Pinochle 9.5 Five-handed and larger Pinochle Deck Anglo-American 9.6 Check Pinochle 9.7 Double-deck Pinochle Play Clockwise 9.8 Racehorse Pinochle Card rank A 10 K Q J 9 9.9 Double-deck Pinochle for eight players (highest to 10 See also lowest) 11 References 12 External links Playing time 1 to 5 hours Random chance Medium History Related games Pinochle derives from the game bezique. -

History of the Modern Point Count System in Large Part from Frank Hacker’S Article from Table Talk in January 2013

History of the Modern Point Count System In large part from Frank Hacker’s article from Table Talk in January 2013 Contract bridge (as opposed to auction bridge) was invented in the late 1920s. During the first 40 years of contract bridge: Ely Culbertson (1891 – 1955) and Charles Goren (1901 -1991) were the major contributors to hand evaluation. It’s of interest to note that Culbertson died in Brattleboro, Vermont. Ely Culbertson did not use points to evaluate hands. Instead he used honor tricks. Goren and Culbertson overlapped somewhat. Charles Goren popularized the point count with his 1949 book “Point Count Bidding in Contract Bridge.” This book sold 3 million copies and went through 12 reprintings in its first 5 years. Charles Goren does not deserve the credit for introducing or developing the point count. Bryant McCampbell introduced the 4-3-2-1 point count in 1915, not for auction bridge, but for auction pitch. Bryant McCampbell was also an expert on auction bridge and he published a book on auction bridge in 1916. This book is still in print and available from Amazon. Bryant Mc Campbell was only 50 years old when he died at 103 East Eighty-fourth Street in NY City on May 6 1927 after an illness of more than two years. He was born on January 2, 1877 in St Louis? to Amos Goodwin Mc Campbell and Sarah Leavelle Bryant. He leaves a wife, Irene Johnson Gibson of Little Rock, Ark. They were married on October 16, 1915. Bryant was a retired Textile Goods Merchant and was in business with his younger brother, Leavelle who was born on May 28, 1879.