Aberrant Beliefs and Reasoning

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


A Task-Based Taxonomy of Cognitive Biases for Information Visualization

A Task-based Taxonomy of Cognitive Biases for Information Visualization. Evanthia Dimara, Steven Franconeri, Catherine Plaisant, Anastasia Bezerianos, and Pierre Dragicevic. Three kinds of limitations: the computer, the display, and the human. Human limitations: • Human vision has limitations • Human reasoning has limitations. Perceptual bias: magnitude estimation, color perception. Cognitive bias: behaviors when humans consistently behave irrationally. Pohl’s criteria distilled: • Are predictable and consistent • People are unaware they’re doing them • Are not misunderstandings. Ambiguity effect, Anchoring or focalism, Anthropocentric thinking, Anthropomorphism or personification, Attentional bias, Attribute substitution, Automation bias, Availability heuristic, Availability cascade, Backfire effect, Bandwagon effect, Base rate fallacy or Base rate neglect, Belief bias, Ben Franklin effect, Berkson's paradox, Bias blind spot, Choice-supportive bias, Clustering illusion, Compassion fade, Confirmation bias, Congruence bias, Conjunction fallacy, Conservatism (belief revision), Continued influence effect, Contrast effect, Courtesy bias, Curse of knowledge, Declinism, Decoy effect, Default effect, Denomination effect, Disposition effect, Distinction bias, Dread aversion, Dunning–Kruger effect, Duration neglect, Empathy gap, End-of-history illusion, Endowment effect, Exaggerated expectation, Experimenter's or expectation bias,

The Being of Analogy (Noah Roderick)

Noah Roderick, The Being of Analogy. Modern physics replaced the dualism of matter and form with a new distinction between matter and force. In this way form was marginalized, and with it the related notion of the object. Noah Roderick’s book is a refreshing effort to reverse the consequences of this now banal mainstream materialism. Ranging from physics through literature to linguistics, spanning philosophy from East to West, and weaving it all together in remarkably lucid prose, Roderick introduces a new concept of analogy that sheds unfamiliar light on such thinkers as Marx, Deleuze, Goodman, Sellars, and Foucault. More than a literary device, analogy teaches us something about being itself. Open Humanities Press. Cover design by Katherine Gillieson · Illustration by Tammy Lu. New Metaphysics. Series Editors: Graham Harman and Bruno Latour. The world is due for a resurgence of original speculative metaphysics. The New Metaphysics series aims to provide a safe house for such thinking amidst the demoralizing caution and prudence of professional academic philosophy. We do not aim to bridge the analytic-continental divide, since we are equally impatient with nail-filing analytic critique and the continental reverence for dusty textual monuments. We favor instead the spirit of the intellectual gambler, and wish to discover and promote authors who meet this description. Like an emergent recording company, what we seek are traces of a new metaphysical ‘sound’ from any nation of the world. The editors are open to translations of neglected metaphysical classics, and will consider secondary works of especial force and daring.

“Dysrationalia” Among University Students: the Role of Cognitive

“Dysrationalia” among university students: The role of cognitive abilities, different aspects of rational thought and self-control in explaining epistemically suspect beliefs. Erceg, Nikola; Galić, Zvonimir; Bubić, Andreja. Source: Europe’s Journal of Psychology, 2019, 15, 159 - 175. Journal article, published version (publisher's PDF). https://doi.org/10.5964/ejop.v15i1.1696 Permanent link: https://urn.nsk.hr/urn:nbn:hr:131:942674 Rights: Attribution 4.0 International. Download date: 2021-09-29. Repository: ODRAZ - open repository of the University of Zagreb Faculty of Humanities and Social Sciences. Europe's Journal of Psychology, ejop.psychopen.eu | 1841-0413. Research Reports. Nikola Erceg* [a], Zvonimir Galić [a], Andreja Bubić [b]. [a] Department of Psychology, Faculty of Humanities and Social Sciences, University of Zagreb, Zagreb, Croatia. [b] Department of Psychology, Faculty of Humanities and Social Sciences, University of Split, Split, Croatia. Abstract: The aim of the study was to investigate the role that cognitive abilities, rational thinking abilities, cognitive styles and self-control play in explaining the endorsement of epistemically suspect beliefs among university students. A total of 159 students participated in the study. We found that different aspects of rational thought (i.e. rational thinking abilities and cognitive styles) and self-control, but not intelligence, significantly predicted the endorsement of epistemically suspect beliefs. Based on these findings, it may be suggested that intelligence and rational thinking, although related, represent two fundamentally different constructs.

Working Memory, Cognitive Miserliness and Logic As Predictors of Performance on the Cognitive Reflection Test

Working Memory, Cognitive Miserliness and Logic as Predictors of Performance on the Cognitive Reflection Test. Edward J. N. Stupple ([email protected]) Centre for Psychological Research, University of Derby, Kedleston Road, Derby. DE22 1GB. Maggie Gale ([email protected]) Centre for Psychological Research, University of Derby, Kedleston Road, Derby. DE22 1GB. Christopher R. Richmond ([email protected]) Centre for Psychological Research, University of Derby, Kedleston Road, Derby. DE22 1GB. Abstract: The Cognitive Reflection Test (CRT) was devised to measure the inhibition of heuristic responses to favour analytic ones. Toplak, West and Stanovich (2011) demonstrated that the CRT was a powerful predictor of heuristics and biases task performance - proposing it as a metric of the cognitive miserliness central to dual process theories of thinking. This thesis was examined using reasoning response-times, normative responses from two reasoning tasks and working memory capacity (WMC) to predict individual differences in performance on the CRT. These data offered limited support for the view of miserliness as the primary factor in the CRT. [...] Most participants respond that the answer is 10 cents; however, a slower and more analytic approach to the problem reveals the correct answer to be 5 cents. The CRT has been a spectacular success, attracting more than 100 citations in 2012 alone (Scopus). This may be in part due to the ease of administration; with only three items and no requirement for expensive equipment, the practical advantages are considerable. There have, moreover, been numerous correlates of the CRT demonstrated, from a wide range of tasks in the heuristics and biases literature (Toplak et al., 2011) to risk aversion and SAT scores (Frederick,

A Diffusion Model Analysis of Belief Bias: Different Cognitive Mechanisms Explain How Cogni

A diffusion model analysis of belief bias: Different cognitive mechanisms explain how cognitive abilities and thinking styles contribute to conflict resolution in reasoning. Anna-Lena Schubert (a), Mário B. Ferreira (b), André Mata (c), & Ben Riemenschneider (d). (a) Institute of Psychology, Heidelberg University, Heidelberg, Germany, E-mail: anna- [email protected] (b) CICPSI, Faculdade de Psicologia, Universidade de Lisboa, Portugal, E-mail: mferreira@psicologia.ulisboa.pt (c) CICPSI, Faculdade de Psicologia, Universidade de Lisboa, Portugal, E-mail: aomata@psicologia.ulisboa.pt (d) Institute of Psychology, Heidelberg University, Heidelberg, Germany, E-mail: riemenschnei- [email protected] Word count: 17,116 words. Author Note: Correspondence concerning this article should be addressed to Anna-Lena Schubert, Institute of Psychology, Heidelberg University, Hauptstrasse 47-51, D-69117 Heidelberg, Germany, Phone: +49 (0) 6221-547354, Fax: +49 (0) 6221-547325, E-mail: anna-lena.schubert@psychologie.uni-heidelberg.de. The authors thank Joana Reis for her help in setting up the experiment, and Adrian P. Banks and Christopher Hope for sharing their experimental material. Abstract: Recent results have challenged the widespread assumption of dual process models of belief bias that sound reasoning relies on slow, careful reflection, whereas biased reasoning is based on fast intuition. Instead, parallel process models of reasoning suggest that rule- and belief-based problem features are processed in parallel and that reasoning problems that elicit a conflict between rule- and belief-based problem features may also elicit more than one Type 1 response. This has important implications for individual-differences research on reasoning, because rule-based responses by certain individuals may reflect that these individuals were either more likely to give a rule-based default response or that they successfully inhibited and overrode a belief-based default response.

Aesthetics After Finitude Anamnesis Anamnesis Means Remembrance Or Reminiscence, the Collection and Re- Collection of What Has Been Lost, Forgotten, Or Effaced

Aesthetics After Finitude. Anamnesis: Anamnesis means remembrance or reminiscence, the collection and re-collection of what has been lost, forgotten, or effaced. It is therefore a matter of the very old, of what has made us who we are. But anamnesis is also a work that transforms its subject, always producing something new. To recollect the old, to produce the new: that is the task of Anamnesis. a re.press series. Aesthetics After Finitude. Baylee Brits, Prudence Gibson and Amy Ireland, editors. re.press Melbourne 2016. re.press PO Box 40, Prahran, 3181, Melbourne, Australia http://www.re-press.org © the individual contributors and re.press 2016. This work is ‘Open Access’, published under a creative commons license which means that you are free to copy, distribute, display, and perform the work as long as you clearly attribute the work to the authors, that you do not use this work for any commercial gain in any form whatsoever and that you in no way alter, transform or build on the work outside of its use in normal academic scholarship without express permission of the author (or their executors) and the publisher of this volume. For any reuse or distribution, you must make clear to others the license terms of this work. For more information see the details of the creative commons licence at this website: http://creativecommons.org/licenses/by-nc-nd/2.5/ National Library of Australia Cataloguing-in-Publication Data. Title: Aesthetics after finitude / Baylee Brits, Prudence Gibson and Amy Ireland, editors. ISBN: 9780980819793 (paperback) Series: Anamnesis. Subjects: Aesthetics.

Constructing the Self, Constructing America: a Cultural History of Psychotherapy Pdf

Constructing the Self, Constructing America: A Cultural History of Psychotherapy. Philip Cushman | 448 pages | 30 Sep 1996 | The Perseus Books Group | 9780201441925 | English | Cambridge, MA, United States. Rhetoric of therapy is a concept coined by American academic Dana L. Cloud to describe "a set of political and cultural discourses that have adopted psychotherapy's lexicon—the conservative language of healing, coping, adaptation, and restoration of previously existing order—but in contexts of social and political conflict". Cloud argued that the rhetoric of therapy encourages people to focus on themselves and their private lives rather than attempt to reform flawed systems of social and political power. This form of persuasion is primarily used by politicians, managers, journalists and entertainers as a way to cope with the crisis of the American Dream. The rhetoric of therapy has two functions, according to Cloud: (1) to exhort conformity with the prevailing social order and (2) to encourage identification with therapeutic values: individualism, familism, self-help, and self-absorption. Therapeutic discourse, along with advertising and other consumerist cultural forms, emerged during the industrialization of the West during the 18th century. The new emphasis on the acquisition of wealth during this period produced discourse about the "democratic self-determination of individuals conceived as autonomous, self-expressive, self-reliant subjects" or, in short, the "self-made man". Therefore, the language of personal responsibility, adaptation, and healing served not to liberate the working class, the poor, and the socially marginalized, but to persuade members of these classes that they are individually responsible for their plight.

Ilidigital Master Anton 2.Indd

The Power of Cognitive Biases, by Anton Koger // Psychologist at ILI.DIGITAL. There are many non-business fields which can be transferred to the business world, be it the comparison to relationships, game elements, or bioengineering, as shown in this magazine. Psychology might be more obvious than others but certainly not less complex. Most products and services are developed to be used by humans. Thus, understanding humans is key to successfully developing a product or service. WE EDIT AND REINFORCE SOME MEMORIES AFTER THE FACT • WE DISCARD SPECIFICS TO FORM GENERALITIES • WE REDUCE EVENTS AND LISTS TO THEIR KEY ELEMENTS • WE STORE MEMORY DIFFERENTLY BASED ON HOW THEY WERE EXPERIENCED • WE NOTICE THINGS ALREADY PRIMED IN MEMORY OR REPEATED OFTEN • BIZARRE, FUNNY, OR VISUALLY STRIKING THINGS STICK OUT MORE • WE NOTICE WHEN SOMETHING HAS CHANGED • WE ARE DRAWN TO DETAILS THAT CONFIRM OUR OWN EXISTING BELIEFS • WE NOTICE FLAWS IN OTHERS MORE EASILY THAN IN OURSELVES • WE FAVOR SIMPLE-LOOKING OPTIONS AND COMPLETE INFORMATION. [...] behavior every day. In each situation different cognitive illusions are relevant and more or less likely to predict human behavior. Taking cognitive biases into consideration massively helps to understand why people act certain ways in given situations. Reacting and adapting to these biases makes it possible to improve situations, which results in a natural and easy interaction for users within these situations. Cognitive biases can roughly be divided into four categories: perception, self, memory, and appraisal. First, biases in the category of perception explain how and what kind of information people take in. This can massively help to design situations, guide attention, or avoid cognitive overload. Furthermore, biases not only change the way we see situations but also the way we

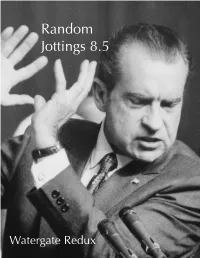

Random Jottings 8.5

Random Jottings 8.5, Watergate Redux is an irregularly published amateur magazine. It is available in printed form directly from Timespinner Press or through Amazon.com; a free PDF version is available at http://efanzines.com/RandomJottings/. It is also available for “the usual”: contributions of art or written material, letters of comment, attendance at Corflu 31, or editorial whim. Letters of comment or other inquiries to Michael Dobson, 8042 Park Overlook Drive, Bethesda, Maryland 20817-2724, [email protected]. Copyright © 2014 Timespinner Press on behalf of the individual contributors. This issue was originally contained in the print edition of Random Jottings 9. For reasons of file size, I’ve broken this section off as a separate issue. The print edition, perfect bound with wraparound color cover, was published by CreateSpace and is available on Amazon for $9.95 retail, though they’re discounting it to $8.96, at least at the time of writing. http://www.amazon.com/dp/1499134088/. This issue also contains the letter column on Random Jottings 8, which kind of works. Artwork is by Steve Stiles except for pages 3 and 26, which are by Alexis Gilliland. Photos and memorabilia are from Earl Kemp or Wikimedia Commons. What Would Nixon Do? RANDOM JOTTINGS 8 WAS the “Watergate Considered as an Org Chart of Semi-Precious Stones” issue, an anthology of various essays, comic book scripts, and other stuff about the [...] Finally, my neighbor Mark Hill sent me a link to a Smithsonian Magazine story containing Richard Nixon’s FBI application, which I’ve reprinted here. The full story is at http://tinyurl.com/NixonFBI.

A Philosophical Investigation Into Coercive Psychiatric Practices

MIC, University of Limerick. A philosophical investigation into coercive psychiatric practices. 2 Volumes. Gerald Roche MSc, MPhil, BCL, BL. Volume 1 of 2. A thesis submitted to the University of Limerick for the degree of Ph.D. in Philosophy. Doctoral Supervisor: Dr. Niall Keane, MIC, University of Limerick. External Examiner: Professor Joris Vandenberghe, University of Leuven, Leuven, Belgium. Internal Examiner: Dr. Stephen Thornton, MIC, University of Limerick. Submitted to the University of Limerick, February 2012. Abstract: This dissertation seeks to examine the validity of the justification commonly offered for a coercive psychiatric intervention, namely that the intervention was in the ‘best interests’ of the subject and/or that the subject posed a danger to others. As a first step, it was decided to analyse justifications based on ‘best interests’ [the ‘Stage 1’ argument] separately from those based on dangerousness [the ‘Stage 2’ argument]. Justifications based on both were the focus of the ‘Stage 3’ argument. Legal and philosophical analyses of coercive psychiatric interventions generally regard such interventions as embodying a benign paternalism occasioning slight, if any, ethical concern. Whilst there are some dissenting voices even at the very heart of academic and professional psychiatry, the majority of psychiatrists also appear to share such views. The aim of this dissertation is to show that such a perspective is mistaken and that such interventions raise philosophical and ethical questions of the profoundest importance.

Illusion and Well-Being: a Social Psychological Perspective on Mental Health

Psychological Bulletin, 1988, Vol. 103, No. 2, 193-210. Copyright 1988 by the American Psychological Association, Inc. 0033-2909/88/$00.75. Illusion and Well-Being: A Social Psychological Perspective on Mental Health. Shelley E. Taylor (University of California, Los Angeles) and Jonathon D. Brown (Southern Methodist University). Many prominent theorists have argued that accurate perceptions of the self, the world, and the future are essential for mental health. Yet considerable research evidence suggests that overly positive self-evaluations, exaggerated perceptions of control or mastery, and unrealistic optimism are characteristic of normal human thought. Moreover, these illusions appear to promote other criteria of mental health, including the ability to care about others, the ability to be happy or contented, and the ability to engage in productive and creative work. These strategies may succeed, in large part, because both the social world and cognitive-processing mechanisms impose filters on incoming information that distort it in a positive direction; negative information may be isolated and represented in as unthreatening a manner as possible. These positive illusions may be especially useful when an individual receives negative feedback or is otherwise threatened and may be especially adaptive under these circumstances. Decades of psychological wisdom have established contact with reality as a hallmark of mental health. In this view, the well-adjusted person is thought to engage in accurate reality testing, whereas the individual whose vision is clouded by illusion is regarded as vulnerable to, if not already a victim of, men- [...] dox: How can positive misperceptions of one's self and the environment be adaptive when accurate information processing seems to be essential for learning and successful functioning in the world? Our primary goal is to weave a theoretical context for thinking about mental health.

Prior Belief Influences on Reasoning and Judgment: a Multivariate Investigation of Individual Differences in Belief Bias

Prior Belief Influences on Reasoning and Judgment: A Multivariate Investigation of Individual Differences in Belief Bias. Walter Cabral Sa. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Education, University of Toronto. © Copyright by Walter C. Sa 1999. National Library of Canada, Acquisitions and Bibliographic Services, 395 Wellington Street, Ottawa ON K1A 0N4, Canada. The author has granted a non-exclusive licence allowing the National Library of Canada to reproduce, loan, distribute or sell copies of this thesis in microform, paper or electronic formats. The author retains ownership of the copyright in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. Prior Belief Influences on Reasoning and Judgment: A Multivariate Investigation of Individual Differences in Belief Bias. Doctor of Philosophy, 1999. Walter Cabral Sa, Graduate Department of Education, University of Toronto. Belief bias occurs when reasoning or judgments are found to be overly influenced by prior belief at the expense of a normatively prescribed accommodation of all the relevant data.