I Know What You Enter on Gear VR

Total pages: 16

Recommended publications

Top 20 Influencers

Top 20 AR/VR Influencers. What Fits You Best? Sanem Avcil (@Sanemavcil): Founder of Coolo Games, CEO of Politehelp & Imprezscion Yazilim Ve Elektronik. Palmer Luckey (@PalmerLuckey): Founder of Oculus Rift and the well-known voice in VR. Chris Milk (@milk): Maker of stuff, Co-Founder/CEO of Within. Focusing on innovative human experiences in VR. Alex Kipman (@akipman): Key player in the launch of Microsoft Hololens. Creator of the Microsoft Kinect motion controller. Philip Rosedale (@philiprosedale): Founder of 2000s MMO experience, Second Life. Tony Parisi (@auradeluxe): Head of AR and VR Strategy at Unity, began his VR career co-founding VRML in 1994 with Mark Pesce. Kent Bye (@kentbye): Host of leading VR podcast, Voices of VR & Esoteric Voices. Clay Bavor (@claybavor): Vice President of Virtual Reality at Google. Rob Crasco (@RoblemVR): VR Consultant at VR/AR Consulting. Writes monthly articles on VR for Bright Metallic magazine. Benjamin Lang (@benz145): Co-founder & Executive Editor of roadtovr.com, one of the leading VR news sites in the world. Vanessa Radd (@vanradd): Founder, XR Researcher; President, VRAR Association. Chris Madsen (@deep_rifter): Director at Morph3D, Ambassador at Edge of Discovery. VR/AR/Experiential Technology. Helen Papagiannis (@ARstories): PhD; Augmented Reality Specialist. Author of Augmented Human. Cathy Hackl (@CathyHackl): Founder, Latinos in VR/AR. Marketing Co-Chair at VR/AR Association; VR/AR Speaker. Brad Waid (@Techbradwaid): Global Speaker, Futurist, Educator, Entrepreneur. Ambarish Mitra (@rishmitra): Founder & CEO at blippar, Young Global Leader at Wef, Investor in AugmentedReality, AI & Genomics. Tom Emrich (@tomemrich): VC at Super Ventures, Founder, We Are Wearables. Gaia Dempsey (@fianxu): Co-founder at DAQRI, Augmented Reality Futurist.

Samsung Announces New Windows-Based Virtual-Reality Headset at Microsoft Event 4 October 2017, by Matt Day, the Seattle Times

Samsung announces new Windows-based virtual-reality headset at Microsoft event. 4 October 2017, by Matt Day, The Seattle Times. Samsung is joining Microsoft's virtual reality push, announcing an immersive headset that pairs with Windows computers. The Korean electronics giant unveiled its Samsung HMD Odyssey at a Microsoft event in San Francisco recently. It will sell for $499. The device joins Windows-based immersive headsets built by Lenovo, HP, Acer and Dell, and aimed for release later this year. Microsoft is among the companies seeking a slice of the emerging market for modern head-mounted devices. High-end headsets, like Facebook-owned Oculus's Rift and the HTC Vive, require powerful Windows PCs to run. Others, including the Samsung Gear VR and Google's Daydream, are aimed at the wider audience of people who use smartphones. Microsoft's vision, for now, is tied to the PC, and specifically new features in the Windows operating system designed to make it easier to build and display immersive environments. The company also has its own hardware, but that hasn't been on display recently. Microsoft's HoloLens was a trailblazer when it was unveiled in 2015. The headset, whose visor shows computer-generated images projected onto objects in the wearer's environment without obscuring the view of the real world completely, was subsequently offered for sale to developers and businesses.

Microsoft also said that it had acquired AltspaceVR, a California virtual reality software startup that was building social and communications tools until it ran into funding problems earlier this year.

©2017 The Seattle Times. Distributed by Tribune Content Agency, LLC.

Getting Real with the Library

Getting Real with the Library. Samuel Putnam, Sara Gonzalez, Marston Science Library, University of Florida. Outline: What is Augmented Reality (AR) & Virtual Reality (VR)? What can you do with AR/VR? How to Create AR/VR. AR/VR in the Library. Find Resources. What is Augmented and Virtual Reality? Paul Milgram; Haruo Takemura; Akira Utsumi; Fumio Kishino; Augmented reality: a class of displays on the reality-virtuality continuum. Proc. SPIE 2351, Telemanipulator and Telepresence Technologies, 282 (December 21, 1995). What is Virtual Reality? A computer-generated simulation of a lifelike environment that can be interacted with in a seemingly real or physical way by a person, esp. by means of responsive hardware such as a visor with screen or gloves with sensors. "virtual reality, n". OED Online 2017. Web. 16 May 2017. Head mounted display, U.S. Patent Number 8,605,008. VR in the 90s: images by Dr. Waldern/Virtuality Group - Dr. Jonathan D. Waldern, Attribution, https://commons.wikimedia.org/w/index.php?curid=32899409, https://commons.wikimedia.org/w/index.php?curid=32525338, https://commons.wikimedia.org/w/index.php?curid=32525505. VR with a Phone: 1. Google Daydream View 2. Google Cardboard 3. Samsung Gear VR. Oculus Rift: ● Popular VR system: headset, hand controllers, headset tracker ($598) ● Headset has speakers -> immersive environment ● Requires a powerful PC for full VR. OSVR Headset: ● Open Source ● "Plug in, Play Everything" ● Discounts for Developers and Academics ● Requires a powerful PC for full VR. Augmented Reality: The use of technology which allows the perception of the physical world to be enhanced or modified by computer-generated stimuli perceived with the aid of special equipment.

Virtual Reality Controllers

Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets. By Summer Lindsey, Bachelor of Arts, Psychology, Florida Institute of Technology, May 2015. A thesis submitted to the College of Aeronautics at Florida Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Aviation Human Factors. Melbourne, Florida, April 2017. © Copyright 2017 Summer Lindsey. All Rights Reserved. The author grants permission to make single copies. The undersigned committee, having examined the attached thesis "Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets," by Summer Lindsey hereby indicates its unanimous approval. Deborah Carstens, Ph.D., Professor and Graduate Program Chair, College of Aeronautics, Major Advisor. Meredith Carroll, Ph.D., Associate Professor, College of Aeronautics, Committee Member. Neil Ganey, Ph.D., Human Factors Engineer, Northrop Grumman, Committee Member. Christian Sonnenberg, Ph.D., Assistant Professor and Assistant Dean, College of Business, Committee Member. Korhan Oyman, Ph.D., Dean and Professor, College of Aeronautics. Abstract. Title: Evaluation of Low Cost Controllers for Mobile Based Virtual Reality Headsets. Author: Summer Lindsey. Major Advisor: Dr. Deborah Carstens. Virtual Reality (VR) is no longer just for training purposes. The consumer VR market has become a large part of the VR world and is growing at a rapid pace. In spite of this growth, there is no standard controller for VR. This study evaluated three different controllers: a gamepad, the Leap Motion, and a touchpad as means of interacting with a virtual environment (VE). There were 23 participants that performed a matching task while wearing a Samsung Gear VR mobile based VR headset.

Virtual Reality and Attitudes Toward Tourism Destinations*

Virtual Reality and Attitudes toward Tourism Destinations*. Iis P. Tussyadiah (a), Dan Wang (b) and Chenge (Helen) Jia (b). (a) School of Hospitality Business Management, Carson College of Business, Washington State University Vancouver, USA, [email protected]. (b) School of Hotel & Tourism Management, The Hong Kong Polytechnic University, Hong Kong, {d.wang; chenge.jia}@polyu.edu.hk. Abstract: Recent developments in Virtual Reality (VR) technology present a tremendous opportunity for the tourism industry. This research aims to better understand how the VR experience may influence travel decision making by investigating spatial presence in VR environments and its impact on attitudes toward tourism destinations. Based on a study involving virtual walkthrough of tourism destinations with 202 participants, two dimensions of spatial presence were identified: being somewhere other than the actual environment and self-location in a VR environment. The analysis revealed that users' attention allocation to VR environments contributed significantly to spatial presence. It was also found that spatial presence positively affects post VR attitude change toward tourism destinations, indicating the persuasiveness of VR. No significant differences were found across VR stimuli (devices) and across prior visitation. Keywords: virtual reality; spatial presence; attitude change; virtual tourism; non-travel. 1 Introduction. Virtual reality (VR) is touted to be one of the important contemporary technological developments to greatly impact the tourism industry. While VR has been around since the late 1960s, recent developments in VR platforms, devices, and hypermedia content production tools have allowed for the technology to emerge from the shadows into the realm of everyday experiences. The (potential) roles of VR in tourism management and marketing have been discussed in tourism literature (e.g., Cheong, 1995; Dewailly, 1999; Guttentag, 2010; Huang et al., 2016; Williams & Hobson, 1995).

Exploration of the 3D World on the Internet Using Commodity Virtual Reality Devices

Multimodal Technologies and Interaction. Article. Exploration of the 3D World on the Internet Using Commodity Virtual Reality Devices. Minh Nguyen *, Huy Tran and Huy Le. School of Engineering, Computer & Mathematical Sciences, Auckland University of Technology, 55 Wellesley Street East, Auckland Central, Auckland 1010, New Zealand; [email protected] (H.T.); [email protected] (H.L.) * Correspondence: [email protected]; Tel.: +64-211-754-956. Received: 27 April 2017; Accepted: 17 July 2017; Published: 21 July 2017. Abstract: This article describes technical basics and applications of graphically interactive and online Virtual Reality (VR) frameworks. It automatically extracts and displays left and right stereo images from the Internet search engines, e.g., Google Image Search. Within a short waiting time, many 3D related results are returned to the users regarding aligned left and right stereo photos; these results are viewable through VR glasses. The system automatically filters different types of available 3D data from redundant pictorial datasets on the public networks (the Internet). To reduce possible copyright issues, only the search for images that are "labelled for reuse" is performed; meaning that the obtained pictures can be used for any purpose, in any area, without being modified. The system then automatically specifies if the picture is a side-by-side stereo pair, an anaglyph, a stereogram, or just a "normal" 2D image (not optically 3D viewable). The system then generates a stereo pair from the collected dataset, to seamlessly display 3D visualisation on state-of-the-art VR devices such as the low-cost Google Cardboard, Samsung Gear VR or Google Daydream.

A Case Study of Security and Privacy Threats from Augmented Reality (AR)

A Case Study of Security and Privacy Threats from Augmented Reality (AR). Song Chen*, Zupei Li†, Fabrizio D'Angelo†, Chao Gao†, Xinwen Fu‡. *Binghamton University, NY, USA; Email: [email protected]. †Department of Computer Science, University of Massachusetts Lowell, MA, USA; Email: zli1, cgao, [email protected]. ‡Department of Computer Science, University of Central Florida, FL, USA; Email: [email protected]. Abstract: In this paper, we present a case study of potential security and privacy threats from virtual reality (VR) and augmented reality (AR) devices, which have been gaining popularity. We introduce a computer vision-based attack using an AR system based on the Samsung Gear VR and ZED stereo camera against authentication approaches on touch-enabled devices. A ZED stereo camera is attached to a Gear VR headset and works as the video feed for the Gear VR. While pretending to be playing, an attacker can capture videos of a victim inputting a password on touch screen. We use depth and distance information provided by the stereo camera to reconstruct the attack scene and recover the victim's password. We use numerical passwords as an example and perform experiments to demonstrate the performance of our attack. The attack's success rate is 90% when the distance from the victim is 1.5 meters and a reasonably ...

Fig. 1. AR Based on Samsung Gear VR and ZED.

... and Microsoft Hololens recently. Google Glass is a relatively simple, light weight, head-mounted display intended for daily use. Microsoft Hololens is a pair of augmented reality smart glasses with powerful computational capabilities and multiple sensors.

Envrment: Investigating Experience in a Virtual User-Composed Environment

ENVRMENT: INVESTIGATING EXPERIENCE IN A VIRTUAL USER-COMPOSED ENVIRONMENT. A Thesis presented to the Faculty of California Polytechnic State University, San Luis Obispo, In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science, by Matthew Key, December 2020. © 2020 Matthew Key. ALL RIGHTS RESERVED. COMMITTEE MEMBERSHIP. TITLE: EnVRMent: Investigating Experience in a Virtual User-Composed Environment. AUTHOR: Matthew Key. DATE SUBMITTED: December 2020. COMMITTEE CHAIR: Zoë Wood, Ph.D., Professor of Computer Science. COMMITTEE MEMBER: Christian Eckhardt, Ph.D., Professor of Computer Science. COMMITTEE MEMBER: Franz Kurfess, Ph.D., Professor of Computer Science. ABSTRACT. EnVRMent: Investigating Experience in a Virtual User-Composed Environment. Matthew Key. Virtual Reality is a technology that has long held society's interest, but has only recently begun to reach a critical mass of everyday consumers. The idea of modern VR can be traced back decades, but because of the limitations of the technology (both hardware and software), we are only now exploring its potential. At present, VR can be used for tele-surgery, PTSD therapy, social training, professional meetings, conferences, and much more. It is no longer just an expensive gimmick to go on a momentary field trip; it is a tool, and as with the automobile, personal computer, and smartphone, it will only evolve as more and more adopt and utilize it in various ways. It can provide a three dimensional interface where only two dimensions were previously possible. It can allow us to express ourselves to one another in new ways regardless of the distance between individuals.

The Android Platform Security Model∗

The Android Platform Security Model∗. RENÉ MAYRHOFER, Google and Johannes Kepler University Linz; JEFFREY VANDER STOEP, Google; CHAD BRUBAKER, Google; NICK KRALEVICH, Google. Android is the most widely deployed end-user focused operating system. With its growing set of use cases encompassing communication, navigation, media consumption, entertainment, finance, health, and access to sensors, actuators, cameras, or microphones, its underlying security model needs to address a host of practical threats in a wide variety of scenarios while being useful to non-security experts. The model needs to strike a difficult balance between security, privacy, and usability for end users, assurances for app developers, and system performance under tight hardware constraints. While many of the underlying design principles have implicitly informed the overall system architecture, access control mechanisms, and mitigation techniques, the Android security model has previously not been formally published. This paper aims to both document the abstract model and discuss its implications. Based on a definition of the threat model and Android ecosystem context in which it operates, we analyze how the different security measures in past and current Android implementations work together to mitigate these threats. There are some special cases in applying the security model, and we discuss such deliberate deviations from the abstract model. CCS Concepts: • Security and privacy → Software and application security; Domain-specific security and privacy architectures; Operating systems security; • Human-centered computing → Ubiquitous and mobile devices. Additional Key Words and Phrases: Android, security, operating system, informal model. 1 INTRODUCTION. Android is, at the time of this writing, the most widely deployed end-user operating system.

User Manual Please Read This Manual Before Operating Your Device and Keep It for Future Reference

User Manual. Please read this manual before operating your device and keep it for future reference. Table of Contents: Support; Read me first; Wearing the Gear VR; Precautions; Before Using the Gear VR Headset; About the Gear VR; Additional Notifications; Getting Started; Device Features; Function Overview; Basic Navigation and Selection; Using the Touchpad; Moving the On-Screen Pointer; Making a Selection; Navigation - Home Screen; Navigation - App Screen; Using the Universal Menu; Calls; Viewing Notifications; Applications; Loading New Applications

Viral SKILLS 2018-1-AT02-KA204-039300 COMPENDIUM

Fostering Virtual Reality applications within Adult Learning to improve low skills and qualifications. Project No. 2018-1-AT02-KA204-039300. The European Commission support for the production of this publication does not constitute an endorsement of the contents which reflects the views only of the authors, and the Commission cannot be held responsible for any use which may be made of the information contained therein. ViRAL SKILLS 2018-1-AT02-KA204-039300 COMPENDIUM. Project Information. Project Acronym: ViRAL Skills. Project Title: Fostering Virtual Reality applications within Adult Learning to improve low skills and qualifications. Project No.: 2018-1-AT02-KA204-039300. Funding Programme: Erasmus+ Key Action 2: Strategic Partnerships. More Information: www.viralskills.eu, www.facebook.com/viralskillsEU, [email protected]. With the support of the Erasmus+ Programme of the European Union. Table of contents: Introduction; 1 Technical Introduction to VR; 1.1 Virtual Reality in the educational sector

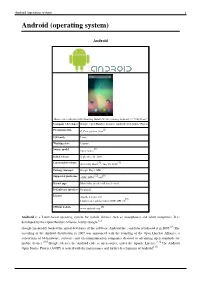

Android (Operating System)

Android (operating system). Home screen displayed by Samsung Galaxy Nexus, running Android 4.1 "Jelly Bean". Company / developer: Google, Open Handset Alliance, Android Open Source Project [1]; Programmed in: C, C++, Python, Java; OS family: Linux; Working state: Current [2]; Source model: Open source; Initial release: September 20, 2008 [3] [4]; Latest stable release: 4.1.1 Jelly Bean / July 10, 2012; Package manager: Google Play / APK [5] [6]; Supported platforms: ARM, MIPS, x86; Kernel type: Monolithic (modified Linux kernel); Default user interface: Graphical; License: Apache License 2.0 [7], Linux kernel patches under GNU GPL v2 [8]; Official website: www.android.com. Android is a Linux-based operating system for mobile devices such as smartphones and tablet computers. It is developed by the Open Handset Alliance, led by Google.[2] Google financially backed the initial developer of the software, Android Inc., and later purchased it in 2005.[9] The unveiling of the Android distribution in 2007 was announced with the founding of the Open Handset Alliance, a consortium of 86 hardware, software, and telecommunication companies devoted to advancing open standards for mobile devices.[10] Google releases the Android code as open-source, under the Apache License.[11] The Android Open Source Project (AOSP) is tasked with the maintenance and further development of Android.[12] Android has a large community of developers writing applications ("apps") that extend the functionality of the devices. Developers write primarily in a customized version of Java.[13] Apps can be downloaded from third-party sites or through online stores such as Google Play (formerly Android Market), the app store run by Google.