Virtual & Augmented Reality

Recommended publications

Audio for Virtual and Augmented Reality

2016 AES CONFERENCE: AUDIO FOR VIRTUAL AND AUGMENTED REALITY
FRIDAY, SEPT 30 THRU SATURDAY, OCT 1, LOS ANGELES CONVENTION CENTER
CONFERENCE PROGRAM

MESSAGE FROM THE CONFERENCE CO-CHAIRS

Welcome to the first AES International Conference on Audio for Virtual and Augmented Reality! We are really proud to present this amazing technical program, which is the result of many months of extremely hard teamwork. We aimed for the best content we could possibly provide, and here you have it. We are extremely thankful to our great presenters, authors, keynote speakers and sponsors. Together, we made this possible and we sincerely hope you will take away a lot of useful information. Also, I'd like to extend our special thanks to our delegates, coming from all over the world to attend this truly unique event, and to our really hard working team of volunteers, which ultimately made it possible to pack this awesome quality and quantity of knowledge in 2 full days crammed with papers, workshops, tutorials and even a technical showcase. Welcome to the show!

ANDRES MAYO, Conference Co-chair

I would like to extend a warm welcome to all of our delegates, authors, presenters and sponsors. This conference has been a dream of Andres’ and mine since May of 2015. The world of VR / AR has grown so quickly, so fast that we knew we had to bring a conference dedicated specifically to this topic to the audio community. We could not have done this without the hard work and dedication of an incredible conference committee.

Samsung Announces New Windows-Based Virtual-Reality Headset at Microsoft Event 4 October 2017, by Matt Day, the Seattle Times

Samsung is joining Microsoft's virtual reality push, announcing an immersive headset that pairs with Windows computers.

The Korean electronics giant unveiled its Samsung HMD Odyssey at a Microsoft event in San Francisco recently. It will sell for $499.

The device joins Windows-based immersive headsets built by Lenovo, HP, Acer and Dell, and aimed for release later this year.

Microsoft is among the companies seeking a slice of the emerging market for modern head-mounted devices. High-end headsets, like Facebook-owned Oculus's Rift and the HTC Vive, require powerful Windows PCs to run. Others, including the Samsung Gear VR and Google's Daydream, are aimed at the wider audience of people who use smartphones.

Microsoft's vision, for now, is tied to the PC, and specifically new features in the Windows operating system designed to make it easier to build and display immersive environments.

The company also has its own hardware, but that hasn't been on display recently. Microsoft's HoloLens was a trailblazer when it was unveiled in 2015. The headset, whose visor shows computer-generated images projected onto objects in the wearer's environment without obscuring the view of the real world completely, was subsequently offered for sale to developers and businesses.

Microsoft also said that it had acquired AltspaceVR, a California virtual reality software startup that was building social and communications tools until it ran into funding problems earlier this year.

©2017 The Seattle Times. Distributed by Tribune Content Agency, LLC.

7 Things About Google Glass

1 - What is it?
Google Glass is a computer that includes an optical display with a head mount, touch sensitive pad, a camera and a speaker. Google Glass is a head-mounted computer with technologies that make it comparable to a smartphone.[1] It is a revolutionary device that lets you multitask without having to fumble for a phone or take your attention off of things in front of you.[2] Learn more: http://www.google.com/glass/

2 - How does it work?
Google Glass has a touch sensitive pad on one side of the frame that you use for scrolling, selecting and navigating.[3] Glass also has the ability to read movement with multiple sensors. With a simple movement of your head, you are able to put the Google Glass to sleep, scroll through options or even wake it up. Voice sensors also give a user the ability to select, search and record.[4]

3 - Who’s doing it?
Google Glass was developed in Google labs in Mountain View, California. Google calls the project “Project Glass”, and it is expected to be released to the public in 2014. Google engineers are currently working on new designs including a prescription glasses version.[5]

4 - Why is it significant?
Google Glass not only allows the user to take pictures, record videos, surf the web, check the weather, listen to music or message a friend but it allows them to do all of this without holding a device or having one awkwardly mounted to them.

[1] http://www.pcmag.com/article2/0,2817,2402613,00.asp
[2] http://www.google.com/glass/start/what-it-does/
[3] https://www.google.com/glass/help/
[4] http://www.google.com/glass/start/what-it-does/
[5] http://www.cnn.com/2014/01/28/tech/innovation/google-glass-lenses/index.html

P15083: Virtual Visualization for Anatomy Teaching, Training and Surgery Simulation Applications

Problem Definition

Preamble
● Thank you for attending our review! We are looking for:
● constructive criticisms
● outside perspective

Team Member Introduction

| Member | Role |
| --- | --- |
| Alex Dawson-Elli | Team Lead - Biomedical Engineer |
| Nate Burrell | Biomedical Engineer |
| Jascha Wilcox | Biomedical Engineer |
| Kenny LaMarca | Computer Engineer |
| Kevin Alexandre | Computer Engineer |

[Diagram labels: context, volume rendering, stereo visualization technology]

Background
● Anatomy is inherently a 3D problem
● conventional teaching techniques:
  ○ book -> 2D
  ○ dissection -> may discourage some students
● Modern technology is underutilized in the classroom

Problem Statement
Current State: Students learning anatomy from a textbook, at a low level of excitement, and a low level of exposure to modern imaging modalities
Desired State: Students learning in a stereoscopic anatomy visualization environment, with a high level of excitement, and a high level of exposure to modern imaging modalities
Project Goals: create a fully virtual stereo anatomy viewer
Constraints: budget, pre-designed hardware, must use existing data set

Stakeholders
● Project advisor
  ○ Dr. Christian Linte
● Teachers
  ○ Instructor of students (grade 6-12)
● Students
  ○ Our target age group is middle and high school students (grade 6-12)

Use Scenario

Customer Needs

| Customer Rqmt. # | Importance | Description |
| --- | --- | --- |
| CR1 | 9 | Easy for a teacher to learn |
| CR2 | 9 | Easy for the Student to use |
| CR3 | 9 | Graphical Interface |
| CR4 | 9 | Stereo Viewing System, in 3D |

Holographic Computer

MECHANICAL ENGINEERING | DECEMBER 2018 | P.33
bioengineering: WEARABLE

Holographic Computer
Microsoft’s HoloLens mixed reality headset is a breakthrough in human-computer interaction.
STORY BY AGAM SHAH • ILLUSTRATION BY ZINA SAUNDERS

The Kinect camera, which Microsoft released in 2010, brought unprecedented levels of gesture and voice interaction to video games. “When we created Kinect for Xbox 360, we aimed to build a device capable of recognizing and understanding people so that computers could operate in ways that are more human,” said Alex Kipman, technical fellow at Microsoft, who helped invent the camera.

Microsoft wanted to build on the success of the Kinect’s sensor technology by parlaying it into a radically new device: a mixed reality headset that would project computer-generated images seemingly into real space.

... that projects the graphics onto a lens. As a user walks around a room or turns his head, the position or orientation of the graphical image is altered so that it appears to the user as occupying a consistent location in space.

HoloLens has emerged as the go-to device for engineers to integrate ...

... headset, Thyssenkrupp is equipping elevator service technicians with HoloLens to visualize and identify problems ahead of a job, and have remote, hands-free access to technical and expert information when onsite. The company says that has helped improve service times. Ford is also using HoloLens, enabling their design and engineering teams to visualize full-scale models in 3-D. They’ve converted processes that used to take weeks down to days, and more easily and securely share ideas across the company and consider more concepts than before. And NASA has provided HoloLens to astronauts on the International Space Station as a holographic instruction manual and a ...

TV in VR Changho Choi, Peter Langner, Praveen Reddy, Satender Saroha, Sunil Srinivasan, Naveen Suryavamsh

Introduction

The evolution of storytelling has gone through various phases. The earliest known methods were through plain text. Plays and theatres were used to bring some of these stories to life, but for the most part artists relied on their audience to imagine the fictional worlds they were describing. Illustrations were a nice addition to help visualize an artist's perception. With the advent of cinema in the early 1900s, from silent films to the current summer blockbusters with their CGI, 3D and surround sound, viewers are transported into these imaginary worlds to experience them just as the creators of this content envisioned. Virtual reality, with its ability to provide an immersive medium with a sense of presence and depth, is the next frontier of storytelling.

Seminal events in history

Moon landing in 1969

When Neil Armstrong and Buzz Aldrin took the first steps on the moon, it captured the imagination of the world. The culmination of a grand vision and the accompanying technological breakthroughs brought about an event that transfixed generations to come. As it happened in 1969, the enabling technology for experiencing this event was the trusted radio, or grainy broadcasts of television anchors describing the events as they were described to them!

Super Bowl 49

As the Seattle Seahawks stood a yard away from winning the Super Bowl in 2015, 115 million people watched on NBC in the United States alone. In front of their big screen TVs, with every possible option explained to them by the commentators, the casual and the rabid football fan alike watched as the Seahawks lost due to a confluence of events.

Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

ORIGINAL RESEARCH, published: 14 June 2021, doi: 10.3389/frvir.2021.697367

Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

Allison Jing*, Kieran May, Gun Lee and Mark Billinghurst
Empathic Computing Lab, Australian Research Centre for Interactive and Virtual Environment, STEM, The University of South Australia, Mawson Lakes, SA, Australia

Edited by: Parinya Punpongsanon, Osaka University, Japan
Reviewed by: Naoya Isoyama, Nara Institute of Science and Technology (NAIST), Japan; Thuong Hoang, Deakin University, Australia

Gaze is one of the predominant communication cues and can provide valuable implicit information such as intention or focus when performing collaborative tasks. However, little research has been done on how virtual gaze cues combining spatial and temporal characteristics impact real-life physical tasks during face to face collaboration. In this study, we explore the effect of showing joint gaze interaction in an Augmented Reality (AR) interface by evaluating three bi-directional collaborative (BDC) gaze visualisations with three levels of gaze behaviours. Using three independent tasks, we found that all bi-directional collaborative BDC visualisations are rated significantly better at representing joint attention and user intention compared to a non-collaborative (NC) condition, and hence are considered more engaging. The Laser Eye condition, spatially embodied with gaze direction, is perceived significantly more effective as it encourages mutual gaze awareness with a relatively low mental effort in a less constrained workspace. In addition, by offering additional virtual representation that compensates for verbal descriptions and hand pointing, BDC gaze visualisations can encourage more conscious use of gaze cues coupled with deictic references during co-located symmetric collaboration.

Getting Real with the Library

Samuel Putnam, Sara Gonzalez
Marston Science Library, University of Florida

Outline
● What is Augmented Reality (AR) & Virtual Reality (VR)?
● What can you do with AR/VR?
● How to Create AR/VR
● AR/VR in the Library
● Find Resources

What is Augmented and Virtual Reality?
Paul Milgram; Haruo Takemura; Akira Utsumi; Fumio Kishino. Augmented reality: a class of displays on the reality-virtuality continuum. Proc. SPIE 2351, Telemanipulator and Telepresence Technologies, 282 (December 21, 1995)

What is Virtual Reality?
A computer-generated simulation of a lifelike environment that can be interacted with in a seemingly real or physical way by a person, esp. by means of responsive hardware such as a visor with screen or gloves with sensors.
"virtual reality, n". OED Online 2017. Web. 16 May 2017.
Head mounted display, U.S. Patent Number 8,605,008

VR in the 90s
By Dr. Waldern/Virtuality Group - Dr. Jonathan D. Waldern, Attribution, https://commons.wikimedia.org/w/index.php?curid=32899409
By Dr. Waldern/Virtuality Group - Dr. Jonathan D. Waldern, Attribution, https://commons.wikimedia.org/w/index.php?curid=32525338
By Dr. Waldern/Virtuality Group - Dr. Jonathan D. Waldern, Attribution, https://commons.wikimedia.org/w/index.php?curid=32525505

VR with a Phone
1. Google Daydream View
2. Google Cardboard
3. Samsung Gear VR

Oculus Rift
● Popular VR system: headset, hand controllers, headset tracker ($598)
● Headset has speakers -> immersive environment
● Requires a powerful PC for full VR

OSVR Headset
● Open Source
● “Plug in, Play Everything”
● Discounts for Developers and Academics
● Requires a powerful PC for full VR

Augmented Reality
The use of technology which allows the perception of the physical world to be enhanced or modified by computer-generated stimuli perceived with the aid of special equipment.

Effects of Control Device and Task Complexity on Performance and Task Shedding During a Robotic Arm Task

Old Dominion University, ODU Digital Commons
Psychology Theses & Dissertations, Psychology, Spring 2019

Effects of Control Device and Task Complexity on Performance and Task Shedding During a Robotic Arm Task
Shelby K. Long, Old Dominion University

Follow this and additional works at: https://digitalcommons.odu.edu/psychology_etds
Part of the Applied Behavior Analysis Commons, Human Factors Psychology Commons, and the Robotics Commons

Recommended Citation
Long, Shelby K. "Effects of Control Device and Task Complexity on Performance and Task Shedding During a Robotic Arm Task" (2019). Master of Science (MS), Thesis, Psychology, Old Dominion University, DOI: 10.25777/yh1y-0016
https://digitalcommons.odu.edu/psychology_etds/231

This Thesis is brought to you for free and open access by the Psychology at ODU Digital Commons. It has been accepted for inclusion in Psychology Theses & Dissertations by an authorized administrator of ODU Digital Commons. For more information, please contact [email protected].

EFFECTS OF CONTROL DEVICE AND TASK COMPLEXITY ON PERFORMANCE AND TASK SHEDDING DURING A ROBOTIC ARM TASK
by Shelby K. Long
B.S. December 2013, Georgia Institute of Technology

A Thesis Submitted to the Faculty of Old Dominion University in Partial Fulfillment of the Requirements for the Degree of
MASTER OF SCIENCE
PSYCHOLOGY
OLD DOMINION UNIVERSITY
May 2019

Approved by:
James P. Bliss (Director)
Yusuke Yamani (Member)
Xiaoxiao Hu (Member)

ABSTRACT
Shelby K. Long, Old Dominion University, 2019
Director: Dr. James P. Bliss

The use of robotic arms across domains is increasing, but the relationship between control features and performance is not fully understood.

Evaluating Performance Benefits of Head Tracking in Modern Video

Evaluating Performance Benefits of Head Tracking in Modern Video Games

Arun Kulshreshth, Department of EECS, University of Central Florida, 4000 Central Florida Blvd, Orlando, FL 32816, USA
Joseph J. LaViola Jr., Department of EECS, University of Central Florida, 4000 Central Florida Blvd, Orlando, FL 32816, USA

ABSTRACT
We present a study that investigates user performance benefits of using head tracking in modern video games. We explored four different carefully chosen commercial games with tasks which can potentially benefit from head tracking. For each game, quantitative and qualitative measures were taken to determine if users performed better and learned faster in the experimental group (with head tracking) than in the control group (without head tracking). A game expertise pre-questionnaire was used to classify participants into casual and expert categories to analyze a possible impact on performance differences.

... PlayStation Move, TrackIR 5) that support 3D spatial interaction have been implemented and made available to consumers. Head tracking is one example of an interaction technique, commonly used in the virtual and augmented reality communities [2, 7, 9], that has potential to be a useful approach for controlling certain gaming tasks. Recent work on head tracking and video games has shown some potential for this type of gaming interface. For example, Sko et al. [10] proposed a taxonomy of head gestures for first person shooter (FPS) games and showed that some of their techniques (peering, zooming, iron-sighting and spinning) are useful in games. In addition, previous studies [13, 14] have ...

2(D) Citation Watch – Google Inc Towergatesoftware Towergatesoftware.Com 1 866 523 TWG8

2(d) Citation Watch – Google inc
towergatesoftware, towergatesoftware.com, 1 866 523 TWG8
Copyright 2015 TowerGate Software Inc

| Firm/Corresp | Owner (cited) | Mark (cited) | Mark (refused) | Owner (refused) |
| --- | --- | --- | --- | --- |
| ANDREW ABRAMS | Google Inc. | G+ (85394867) | EXHIBIA SOCIAL SHOPPING F OR G+ ACCOUNT REQUIRED TO BID (86325474) | Exhibía OY |
| Andrew Abrams | Google Inc. | GOOGLE CURRENTS (85564666) | THE GOOGLE HANDSHAKE (85822092) | Goodway Marketing Co. |
| Andrew Abrams | Google Inc. | GOOGLE TAKEOUT (85358126) | GOOGLEBEERS (86048063) | Munsch, Jim |
| Annabelle Danielvarda | Google Inc. | BROADCAST YOURSELF (78802315) | ORR TUBE BROADCAST MYSELF (85206952) | Orr, Andrew M |
| Annabelle Danielvarda | Google Inc. | BROADCAST YOURSELF (78802315) | WEBCASTYOURSELF (85213501) | Todd R Saunders |
| Annabelle Danielvarda | Google Inc. | YOUTUBE (77588871) | ORR TUBE BROADCAST MYSELF (85206952) | Orr, Andrew M |
| Annabelle Danielvarda | Google Inc. | YOUTUBE (77588871) | YOU PHOTO TUBE (85345360) | Jorge David Candido |
| Annabelle Danielvarda | Google Inc. | YOUTUBE (77588871) | YOUTOO SOCIAL TV (85192965) | Youtoo Technologies, Llc |
| Building 41 | Google Inc. | GMAIL (78398233) | GOT GMAIL? (85112794) | Kuchlous, Ankur |
| Building 41 | Google Inc. | GMAIL (78398233) | VOG ART, KITE, SURF, SKATE, LIFE GRETTA KRUESI WWW.GRETTAKRUESI.COM [email protected] (85397168) | Kruesi, Margaretta E. |
| BUMP TECHNOLOGIES, INC. | GOOGLE INC. | BUMP PAY (85549958) | BUMPTOPAY (86242487) | Nexus Taxi Inc |
| BUMP TECHNOLOGIES, INC. | GOOGLE INC. | BUMP (77701789) | BUMP.COM (85287257) | Bump Network |
| BUMP TECHNOLOGIES, INC. | GOOGLE INC. | BUMP (77701789) | BUMPTOPAY (86242487) | Nexus Taxi Inc |
| Christine Hsieh | Google Inc. | GLASS (85661672) | GLASS (86063261) | Border Stylo, Llc |
| Christine Hsieh | Google Inc. | GOOGLE MIRROR (85793517) | MIRROR MIX (85837648) | Digital Audio Labs, Inc. |
| Christine Hsieh | Google Inc. | | | |

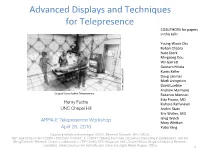

Advanced Displays and Techniques for Telepresence COAUTHORS for Papers in This Talk

Advanced Displays and Techniques for Telepresence
Henry Fuchs, UNC Chapel Hill
ARPA-E Telepresence Workshop, April 26, 2016

COAUTHORS for papers in this talk: Young-Woon Cha, Rohan Chabra, Nate Dierk, Mingsong Dou, Wil Garrett, Gentaro Hirota, Kurtis Keller, Doug Lanman, Mark Livingston, David Luebke, Andrew Maimone, Federico Menozzi, Etta Pisano, MD, Kishore Rathinavel, Andrei State, Eric Wallen, MD, Greg Welch, Mary Whitton, Xubo Yang

[Image caption: Andrei State (UNC) 1994, Surgical Consultation Telepresence]

Support gratefully acknowledged: CISCO, Microsoft Research, NIH, NVIDIA, NSF Awards IIS-CHS-1423059, HCC-CGV-1319567, II-1405847 (“Seeing the Future: Ubiquitous Computing in EyeGlasses”), and the BeingThere Int’l Research Centre, a collaboration of ETH Zurich, NTU Singapore, UNC Chapel Hill and Singapore National Research Foundation, Media Development Authority, and Interactive Digital Media Program Office.

Video Teleconferencing vs Telepresence

• Video Teleconferencing
  – Conventional 2D video capture and display
  – Single camera, single display at each site is common configuration for Skype, Google Hangout, etc.
• Telepresence
  – Provides illusion of presence in the remote or combined local&remote space
  – Provides proper stereo views from the precise location of the user
  – Stereo views change appropriately as user moves
  – Provides proper eye contact and eye gaze cues among all the participants

[Image captions: Cisco TelePresence 3000; Three distant rooms combined into a single space with wall-sized 3D displays]

Telepresence Component Technologies
• Acquisition (cameras)
• 3D reconstruction