Subjective Event Summarization of Sports Events Using Twitter



Recommended publications


A Red Point of View – Issue 1

A RED Point of View. GRAEME SHINNIE: NEW CAPTAIN, NEW SEASON... ISSUE 01, JULY 2017

Welcome... It is with great pleasure that I welcome you to “A Red Point of View” the unofficial online Dons magazine. We are an entirely new publication, dedicated to bringing you content based upon the club we love. As a regular blogger and writer, I have written many pieces on the club I have supported from birth, this perhaps less through choice but family tradition. I thank my dad for this. As a result of engaging and sharing my personal writing across social media for several years now, I have grown to discover the monumental online Dons support that exists across all platforms. The aim of this magazine is to provide a focal point for Aberdeen fans online, to access some of the best writing that the support have to offer, whilst giving the writers a platform to voice anything and everything Dons related. In this first issue we have a plethora of content ranging from the pre-season thoughts from one of the Red Army’s finest, Ally Begg, to an exclusive interview with Darren Mackie, who opens up about his lengthy time at Pittodrie. Guest writer Scott Baxter, the club photographer at Aberdeen tells what it’s like to photograph the Dons. Finlay Hall analyses the necessity of fanzines and we get some views from the terraces as fans send in their pieces.

Contributors: Ryan Crombie: @ryan_crombie; Ally Begg: @ally_begg; Scott Baxter: @scottscb; Matthew Findlay: @matt_findlay19; Tom Mackinnon: @tom_mackinnon; Martin Stone: @stonefish100; Finlay Hall: @FinHall; Mark Gordon: @Mark_SGordon; Lewis Michie: @lewismichie0; Ewan Beattie: @Ewan_Beattie -

Sample Download

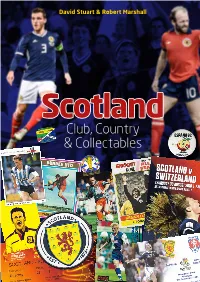

Scotland: Club, Country & Collectables

David Stuart & Robert Marshall

Pitch Publishing Ltd, A2 Yeoman Gate, Yeoman Way, Durrington BN13 3QZ. Email: [email protected] Web: www.pitchpublishing.co.uk. First published by Pitch Publishing 2019. Text © 2019 Robert Marshall and David Stuart. Robert Marshall and David Stuart have asserted their rights in accordance with the Copyright, Designs and Patents Act 1988 to be identified as the authors of this work. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission in writing of the publisher and the copyright owners, or as expressly permitted by law, or under terms agreed with the appropriate reprographics rights organization. Enquiries concerning reproduction outside the terms stated here should be sent to the publishers at the UK address printed on this page. The publisher makes no representation, express or implied, with regard to the accuracy of the information contained in this book and cannot accept any legal responsibility for any errors or omissions that may be made. A CIP catalogue record for this book is available from the British Library. 13-digit ISBN: 9781785315419. Design and typesetting by Olner Pro Sport Media. Printed in India by Replika Press.

INTRODUCTION

Just when you thought it was safe again to go back inside a quality bookshop, along comes another offbeat soccer hardback (or football annual for grown-ups) from David Stuart and Robert Marshall, Scottish football writing’s answer to Ernest Hemingway and Mary Shelley.

... and Don Hutchison, the match badges (stinking or otherwise), the Caribbean postage stamps ‘deifying’ Scotland World Cup squads and the replica strips which just defy belief! There’s no limit -

28 November 2012 Opposition

Date: 28 November 2012. Opposition: Tottenham Hotspur. Competition: League. Times, Telegraph, Echo, Guardian, Mirror, Evening Standard, Independent, Mail, BBC.

Own goal a mere afterthought as Bale's wizardry stuns Liverpool

Tottenham Hotspur 2 Liverpool 1

Gareth Bale ended up with a booking for diving and an own goal to his name, but still the mesmerising manner in which he took this game to Liverpool will live longer in the memory. The Wales winger was sufficiently sensational in the opening quarter to render Liverpool's subsequent recovery too little too late -- Brendan Rodgers's team have now won only once in nine games -- and it was refreshing to be lauding Tottenham's prodigious talent rather than lamenting the sick terrace chants in the games against Lazio and West Ham United.

Bale causes butterflies at both ends as Spurs hold off Liverpool

With one glorious assist, one goal at the right end, one at the wrong end and a yellow card for supposed simulation, Gareth Bale was integral to nearly everything that Tottenham did at White Hart Lane. Spurs fans should enjoy it while it lasts, because with Bale's reputation still soaring, Andre Villas-Boas suggested other clubs will attempt to lure the winger away. "He is performing extremely well for Spurs and we are amazed by what he can do for us," Villas-Boas said. "He's on to a great career and obviously Tottenham want to keep him here as long as we can but we understand that players like this have propositions in the market. That's the nature of the game." -

P20 Layout 1

MONDAY, APRIL 14, 2014. Atletico top Spanish League. Kenyans dominate as Farah toils in London. Pacquiao defeats Bradley to regain WBO crown (Page 16).

Liverpool win crunch title clash

Liverpool 3 Man City 2

LONDON: Liverpool’s Philippe Coutinho (center) celebrates with teammate Steven Gerrard (left) after he scored the third goal of the game for his side during their English Premier League soccer match against Manchester City. (AP)

... through Raheem Sterling and Martin Skrtel, and means that they will be crowned champions if they win their remaining four games. City responded impressively in the second half to draw level. David Silva scored and then forced an own goal by Glen Johnson, but their destiny is no longer in

... a fine, incisive pass and the 19-year-old exhibited superb composure to send Kompany and goalkeeper Joe Hart one way and then the other before shooting into an unguarded net. In reply, Toure ballooned a shot over the bar but seemed to injure himself in the process.

...ing to clear a Kompany header off the line and Liverpool goalkeeper Simon Mignolet blocking a volley from Fernandinho. City manager Manuel Pellegrini introduced James Milner for Jesus Navas early in the second half and in the 57th minute he created the goal that gave City a foothold in

EPL results/standings: Liverpool 3 (Sterling 6, Skrtel 26, Coutinho 78) Manchester City 2 (Silva 57, Johnson 62-og); Swansea 0 Chelsea 1 (Ba 68). English Premier League table after yesterday’s matches (played, won, drawn, lost, goals for, goals against, points): -

Full Time Report AALBORG BK CELTIC FC

Full Time Report Matchday 5 - Tuesday 25 November 2008 Group E - Aalborg Stadion - Aalborg AALBORG BK CELTIC FC 220:45 1 (0) (0) half time half time 1 Karim Zaza 1 Artur Boruc 2 Michael Jakobsen 2 Andreas Hinkel 3 Martin Pedersen 4 Stephen McManus 6 Steve Olfers 5 Gary Caldwell 8 Andreas Johansson 7 Scott McDonald 9 Thomas Augustinussen 8 Scott Brown 14 Jeppe Curth 9 Georgios Samaras 16 Kasper Bøgelund 12 Mark Wilson 18 Caca 19 Barry Robson 21 Kasper Risgård 22 Glenn Loovens 23 Thomas Enevoldsen 25 Shunsuke Nakamura 30 Kenneth Stenild 21 Mark Brown 7 Anders Due 3 Lee Naylor 10 Marek Saganowski 11 Paul Hartley 15 Siyabonga Nomvethe 45' 13 Shaun Maloney 20 Simon Bræmer 1'03" 26 Cillian Sheridan 24 Jens-Kristian Sørensen 48 Darren O'Dea 27 Patrick Kristensen 52 Paul Caddis Coach: Coach: Allan Kuhn Gordon Strachan Full 53' 19 Barry Robson Full Half Half Total shot(s) 4 9 Total shot(s) 2 13 Shot(s) on target 2 4 Shot(s) on target 0 4 Free kick(s) to goal 1 2 Free kick(s) to goal 1 1 Save(s) 0 3 Save(s) 2 3 Corner(s) 4 7 Corner(s) 1 4 Foul(s) committed 7 13 8 Andreas Johansson 69' Foul(s) committed 8 14 Foul(s) suffered 8 14 7 Anders Due in in 26 Cillian Sheridan Foul(s) suffered 7 13 70' 69' Offside(s) 1 3 23 Thomas Enevoldsen out out 9 Georgios Samaras Offside(s) 3 3 10 Marek Saganowski in Possession 48% 47% 14 Jeppe Curth out 70' Possession 52% 53% Ball in play 14'09" 27'12" 18 Caca 73' Ball in play 15'15" 30'37" Total ball in play 29'24" 57'49" Total ball in play 29'24" 57'49" in Referee: 27 Patrick Kristensen out 81' Konrad Plautz (AUT) 3 Martin Pedersen Assistant referees: Armin Eder (AUT) Raimund Buch (AUT) 5 Gary Caldwell 87' in 13 Shaun Maloney 90' out 12 Mark Wilson Fourth official: Stefan Messner (AUT) 90' UEFA delegate: Maurizio Laudi (ITA) 3'17" Attendance: 10,096 22:38:07 CET Goal Booked Sent off Substitution Penalty Owngoal Captain Goalkeeper Misses next match if booked 25 Nov 2008 UEFA Media Information. -

A Comparative Study of After-Match Reports on 'Lost'and 'Won'football

International Journal of Applied Linguistics & English Literature ISSN 2200-3592 (Print), ISSN 2200-3452 (Online) Vol. 4 No. 1; January 2015 Copyright © Australian International Academic Centre, Australia A Comparative Study Of After-match Reports On ‘Lost’ And ‘Won’ Football Games Within The Framework Of Critical Discourse Analysis Biook Behnam Department of English, Islamic Azad University, Tabriz, Iran E-mail: [email protected] Hasan Jahanban Isfahlan (Corresponding Author) Department of English, Islamic Azad University, Tabriz, Iran E-mail: [email protected] Received: 09-07-2014 Accepted: 02-09-2014 Published: 01-01-2015 doi:10.7575/aiac.ijalel.v.4n.1p.115 URL: http://dx.doi.org/10.7575/aiac.ijalel.v.4n.1p.115 Abstract In spite of the obvious differences in research styles, all critical discourse analysts aim at exploring the role of discourse in the production and reproduction of power relations within social structures. Nowadays, after-match written reports are prepared immediately after every football match and provide the readers with the apparently objective representations of important events and occurrences of the game. The authors of this paper analyzed four after-match reports (retrieved from Manchester United’s own website), two regarding their lost games and two on their won games. Hodge and Kress’s (1996) framework was followed in the analysis of the grammatical features of the texts at hand. Also, taking into consideration the pivotal role of lexicalization as one dimension of the textualization process (Fairclough, 2012), vocabulary and more specifically the choice of specific verbs, nouns, adverbs and noun or noun phrase modification were examined. -

The Journal of the Association for Journalism Education

Journalism Education: The Journal of the Association for Journalism Education. Volume one, number one, April 2012.

Journalism Education is the journal of the Association for Journalism Education, a body representing educators in HE in the UK and Ireland. The aim of the journal is to promote and develop analysis and understanding of journalism education and of journalism, particularly when that is related to journalism education.

Editors: Mick Temple, Staffordshire University; Chris Frost, Liverpool John Moores University; Jenny McKay, Sunderland University; Stuart Allan, Bournemouth University. Reviews editor: Tor Clark, de Montfort University. You can contact the editors at [email protected]

Editorial Board: Chris Atton, Napier University; Olga Guedes Bailey, Nottingham Trent University; David Baines, Newcastle University; Guy Berger, Rhodes University; Jane Chapman, University of Lincoln; Martin Conboy, Sheffield University; Ros Coward, Roehampton University; Stephen Cushion, Cardiff University; Susie Eisenhuth, University of Technology, Sydney; Ivor Gaber, Bedfordshire University; Roy Greenslade, City University; Mark Hanna, Sheffield University; Michael Higgins, Strathclyde University; John Horgan, Irish press ombudsman; Sammye Johnson, Trinity University, San Antonio, USA; Richard Keeble, University of Lincoln; Mohammed el-Nawawy, Queens University of Charlotte; An Duc Nguyen, Bournemouth University; Sarah Niblock, Brunel University; Bill Reynolds, Ryerson University, Canada; Ian Richards, University -

KT 8-5-2013 Layout 1

SUBSCRIPTION. WEDNESDAY, MAY 8, 2013. JAMADA ALTHANI 28, 1434 AH. www.kuwaittimes.net. 40 PAGES. NO: 15802. 150 FILS. Max 36º Min 20º. High Tide 10:48 & 23:57. Low Tide 04:52 & 17:38.

20 dead in Mexico gas tanker truck blast (7). Man City edge West Brom (20).

Dow receives $2.2bn in damages from Kuwait

Amount does not include $300m interest for payment delay

KUWAIT: Kuwait’s state-owned Petrochemical Industries Co (PIC) said yesterday it reached a final settlement with US giant Dow Chemical and paid around $2.2 billion as a penalty for scrapping a joint venture. PIC said in a statement cited by the official KUNA news agency that the amount did not include $300 million of interest for delaying the payment awarded a year ago. “The settlement stipulates that PIC pays the value of damages and costs as per the ruling of the International Chamber of Commerce (ICC) without the interest of $300 million,” the statement said. The US company said on its website that it has received the cash payment, which “reflects the full damages awarded by the ICC, as well as recovery of Dow’s costs”. PIC confirmed it had made the payment and that it had “exhausted all possible challenges to the award”. Last May, the ICC acting as an international arbitration granted Dow Chemical around $2.2 billion in compensation for Kuwait pulling out of the $17.4 billion venture. “Dow and Kuwait share a long history, and payment of this award brings final and appropriate resolution and closure to the issue,” Andrew Liveris, Dow chairman and chief executive officer said in a statement.

conspiracy theories: As usual, halfway solutions. By Badrya Darwish [email protected]

CLEVELAND, Ohio: Amanda Berry (center) is reunited with her sister -

Year Ended 30 June 2004 Celtic Plc Annual Report

Celtic plc Annual Report, Year Ended 30 June 2004

CONTENTS: Chairman’s Statement; Highlights of the Results; Operating Review; Financial Review; Directors’ Report; Corporate Governance; Remuneration Report; Statement of Directors’ Responsibilities; Five Year Record; Independent Auditors’ Report to the Members; Celtic Charity Fund; Group Profit and Loss Account; Group Balance Sheet; Company Balance Sheet; Group Cash Flow Statement; Notes to the Financial Statements; Notice and Notes; Explanatory Notes; Directors, Officers and Advisers

Chairman’s Statement, Brian Quinn CBE

“...further progress in building the Celtic brand and the business.”

In these circumstances Celtic has worked hard to remain on course to achieve a sustainable balance between football progress and financial stability. Let me emphasise how difficult it can be to achieve those objectives. Success may or may not generate further success in football; but it certainly feeds expectations among supporters and demands by the media. Those expectations and demands have to be managed in a climate in which public focus on finances becomes important

... League and the UEFA Cup. Narrow defeats away from home in Munich and Lyon meant that the team did not go on to the knockout stage of the UEFA Champions’ League; but it did reach the quarter-final of the UEFA Cup after a memorable victory over Barcelona. Deprived through injury of 3 of our 4 recognised strikers, Celtic went out to Villarreal. Once again Martin O’Neill, supported by his very able staff, has given the team great leadership and drive. -

Ronaldo Hits Third Hat-Trick

SUNDAY, JUNE 14, 2015. Rain wreaks havoc in India-Bangladesh Test (16). Top-placed Thais eye golden finish (17).

Ronaldo hits third hat-trick

European qualifiers

YEREVAN: Portugal moved five points clear at the top of European Championship qualifying Group I yesterday after Cristiano Ronaldo’s third successive hat-trick secured a crucial 3-2 win in Armenia. The Portuguese, who played the last 30 minutes down to 10 men, were given a real scare by an Armenian side who had picked up just a single point in their first four group games. The hosts were under new management after former Swiss coach Bernard Challandes stood down following their last match, a 2-1 qualifying defeat against Albania. Record caps holder (131 appearances) Sargis Hovsepyan is now in the hot seat and his influence appeared to have the desired effect as Armenia took a 14th-minute lead through Brazilian-born midfielder Marcos Pizzelli. But Fernando Santos’ Portugal levelled just before the half-hour mark via a predictable source, with World Player of the Year Ronaldo converting a penalty.

Maloney frustrates Ireland once again

DUBLIN: Shaun Maloney was the Republic of Ireland’s nemesis once more as Scotland delivered a massive blow to their hosts’ Euro 2016 qualification hopes with a 1-1 draw at Lansdowne Road yesterday. The Chicago Fire midfielder’s second-half strike took a massive deflection off Ireland skipper John O’Shea to cancel out Jon Walters’ first-half goal, and the result leaves Ireland five points behind Group D leaders Poland in fourth place. However, the point will be welcomed by Gordon Strachan’s men, who knew avoiding defeat would make Ireland’s task of going to France next -

Manchester United V Wigan Athletic FA Community Shield Sponsored by Mcdonald’S

Manchester United v Wigan Athletic FA Community Shield sponsored by McDonald’s Wembley Stadium Sunday 11 August 2013 Kick-off 2pm FA Community Shield Contents Introduction by David Barber, FA Historian 1 Background 2 Shield results 3-5 Shield appearances 5 Head-to-head 7 Manager profiles 8 Premier League 2012/13 League table 10 Team statistic review 11 Manchester United player stats 12 Wigan player stats 13 Goals breakdown 14 Results 2012-13 15-16 FA Cup 2012/13 Results 17 Officials 18 Referee statistics & Discipline record 19 Rules & Regulations 20-21 Manchester United v Wigan Athletic, Wembley Stadium, Sunday 11 August 2013, Kick-off 2pm FA Community Shield Introduction by David Barber, FA Historian Manchester United, Premier League champions for the 13th time, meet Wigan Athletic, FA Cup-winners for the first time, in the traditional season curtain-raiser at Wembley Stadium. The match will be the 91st time the Shield has been contested. The only match ever to go to a replay was the very first - in 1908. No club has a Shield pedigree like Manchester United. They were its first winners and have now clocked up a record 15 successes. They have shared the Shield an additional four times and have featured in a total of 28 contests. Wigan Athletic, by contrast, were a non-League club until 1978 and are playing the first Shield match in their 81-year history. The FA Charity Shield, its name until 2002, evolved from the Sheriff of London Shield - an annual fixture between the leading professional and amateur clubs of the time. -

Monday, 08. 04. 2013 SUBSCRIPTION

Monday, 08. 04. 2013. SUBSCRIPTION. MONDAY, APRIL 7, 2013. JAMADA ALAWWAL 27, 1434 AH. www.kuwaittimes.net. 40 PAGES. NO: 15772. 150 FILS. Max 33º Min 19º. High Tide 10:57 & 23:08. Low Tide 04:44 & 17:01.

Clashes after funeral of Egypt Coptic Christians (7). New fears in Lanka amid anti-Muslim campaign (14). Burgan Bank CEO spells out growth strategy (22). Lorenzo wins Qatar MotoGP (18).

MPs want amendments to residency law for expats

Govt rebuffs Abu Ghaith relatives • ‘Peninsula Lions’ may be freed

By B Izzak

KUWAIT: Five MPs yesterday proposed amendments to the residency law that call to allow foreign residents to stay outside Kuwait as long as their residence permit is valid and also call to make it easier to grant certain categories of expats with residencies. The five MPs - Nabeel Al-Fadhl, Abdulhameed Dashti, Hani Shams, Faisal Al-Kandari and Abdullah Al-Mayouf - also proposed in the amendments to make it mandatory for the immigration department to grant residence permits and renew them in a number of cases, especially when the foreigners are relatives of Kuwaitis. These cases include foreigners married to Kuwaiti women and that their permits cannot be cancelled if the relationship is severed if they have children. These also include foreign wives of Kuwaitis and their residence permits cannot be cancelled if she has children from the Kuwaiti husband. Other beneficiaries of the amendments include foreign men or women whose

conspiracy theories: Menopause!!! By Badrya Darwish [email protected]

Guys, you are going to hear the most amazing story of 2013.