Superheterodyne Receiver with Separate Sections for Processing and Recovering the Sound and Picture

Recommended publications

-

Toward a Large Bandwidth Photonic Correlator for Infrared Heterodyne Interferometry: A First Laboratory Proof of Concept

A&A 639, A53 (2020), Astronomy & Astrophysics, https://doi.org/10.1051/0004-6361/201937368 © G. Bourdarot et al. 2020. Toward a large bandwidth photonic correlator for infrared heterodyne interferometry: A first laboratory proof of concept. G. Bourdarot (1,2), H. Guillet de Chatellus (2), and J-P. Berger (1). (1) Univ. Grenoble Alpes, CNRS, IPAG, 38000 Grenoble, France, e-mail: [email protected] (2) Univ. Grenoble Alpes, CNRS, LIPHY, 38000 Grenoble, France. Received 19 December 2019 / Accepted 17 May 2020. ABSTRACT Context. Infrared heterodyne interferometry has been proposed as a practical alternative for recombining a large number of telescopes over kilometric baselines in the mid-infrared. However, the current limited correlation capacities impose strong restrictions on the sensitivity of this appealing technique. Aims. In this paper, we propose to address the problem of transport and correlation of wide-bandwidth signals over kilometric distances by introducing photonic processing in infrared heterodyne interferometry. Methods. We describe the architecture of a photonic double-sideband correlator for two telescopes, along with the experimental demonstration of this concept on a proof-of-principle test bed. Results. We demonstrate the a posteriori correlation of two infrared signals previously generated on a two-telescope simulator in a double-sideband photonic correlator. A degradation of the signal-to-noise ratio of 13%, equivalent to a noise factor NF = 1.15, is obtained through the correlator, and the temporal coherence properties of our input signals are retrieved from these measurements. Conclusions. Our results demonstrate that photonic processing can be used to correlate heterodyne signals with a potentially large increase of detection bandwidth. -
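
The link between the 13% signal-to-noise degradation and the quoted noise factor in the abstract above can be checked with a short calculation. This is a sketch that assumes the 13% figure is the relative drop of the output SNR with respect to the input SNR; the symbols SNR_in and SNR_out are introduced here for illustration and do not come from the paper.

```latex
\mathrm{NF} \;=\; \frac{\mathrm{SNR}_{\mathrm{in}}}{\mathrm{SNR}_{\mathrm{out}}}
\;=\; \frac{1}{1 - 0.13} \;\approx\; 1.15,
\qquad
10\,\log_{10}(\mathrm{NF}) \;\approx\; 0.6\ \mathrm{dB}.
```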

Army Radio Communication in the Great War Keith R Thrower, OBE

Army radio communication in the Great War Keith R Thrower, OBE Introduction Prior to the outbreak of WW1 in August 1914 many of the techniques to be used in later years for radio communications had already been invented, although most were still at an early stage of practical application. Radio transmitters at that time were predominantly using spark discharge from a high voltage induction coil, which created a series of damped oscillations in an associated tuned circuit at the rate of the spark discharge. The transmitted signal was noisy and rich in harmonics and spread widely over the radio spectrum. The ideal transmission was a continuous wave (CW) and there were three methods for producing this: 1. From an HF alternator, the practical design of which was made by the US General Electric engineer Ernst Alexanderson, initially based on a specification by Reginald Fessenden. These alternators were primarily intended for high-power, long-wave transmission and not suitable for use on the battlefield. 2. Arc generator, the practical form of which was invented by Valdemar Poulsen in 1902. Again the transmitters were high power and not suitable for battlefield use. 3. Valve oscillator, which was invented by the German engineer, Alexander Meissner, and patented in April 1913. Several important circuits using valves had been produced by 1914. These include: (a) the heterodyne, an oscillator circuit used to mix with an incoming continuous wave signal and beat it down to an audible note; (b) the detector, to extract the audio signal from the high frequency carrier; (c) the amplifier, both for the incoming high frequency signal and the detected audio or the beat signal from the heterodyne receiver; (d) regenerative feedback from the output of the detector or RF amplifier to its input, which had the effect of sharpening the tuning and increasing the amplification. -

Lecture 25 Demodulation and the Superheterodyne Receiver EE445-10

EE447 Lecture 6
Lecture 25: Demodulation and the Superheterodyne Receiver (EE445-10)
HW7: 5-4, 5-7, 5-13a-d, 5-23, 5-31. Due next Monday, 29th.

Figure 4–29 Superheterodyne receiver. (Couch, Digital and Analog Communication Systems, Seventh Edition ©2007 Pearson Education, Inc. All rights reserved. 0-13-142492-0)

Synchronous Demodulation: s(t) x 2cos(2πfct) -> LPF -> m(t)
• Only method for DSB-SC, USB-SC, LSB-SC
• AM with carrier
• Envelope Detection – Input SNR >~10 dB required
• Synchronous Detection – (no threshold effect)
• Note the 2 on the LO normalizes the output amplitude

Figure 4–24 PLL used for coherent detection of AM.

Envelope Detector
• C • Ac • (1 + a • m(t)), where C is a constant
• C • Ac • a • m(t)

Envelope Detector Distortion
• Hi Frequency m(t): Slope overload
• IF Frequency Present in Output signal

Superheterodyne Receiver (EE445-09)

Super-Heterodyne AM Receiver

RF Filter
• Provides Image Rejection: fimage = fLO + fIF
• Reduces amplitude of interfering signals far from the carrier frequency
• Reduces the amount of LO signal that radiates from the Antenna

Figure 4–30 Spectra of signals and transfer function of an RF amplifier in a superheterodyne receiver. (Couch, Digital and Analog Communication Systems, Seventh Edition ©2007 Pearson Education, Inc.) -
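
The synchronous demodulation path in the lecture notes above (multiply by 2cos(2πfct), then low-pass filter) can be tried numerically. The following is a minimal sketch, not the lecture's own material: the sample rate, carrier, and message frequencies are hypothetical values chosen for illustration, and the low-pass filter is assumed to be a simple moving average over one carrier period, which happens to null the 2fc term.

```python
import math


def synchronous_demodulate(s, fc, fs):
    """Recover m(t) from a DSB-SC signal s(t) = m(t) * cos(2*pi*fc*t).

    Multiplies by 2*cos(2*pi*fc*t) and low-pass filters with a moving
    average spanning one carrier period (an assumed, very simple LPF).
    """
    n_avg = round(fs / fc)  # samples per carrier period
    mixed = [2.0 * x * math.cos(2.0 * math.pi * fc * k / fs)
             for k, x in enumerate(s)]
    return [sum(mixed[k:k + n_avg]) / n_avg
            for k in range(len(mixed) - n_avg + 1)]


# Hypothetical test parameters (not from the lecture).
fs = 100_000.0   # sample rate, Hz
fc = 10_000.0    # carrier frequency, Hz
fm = 100.0       # message tone frequency, Hz
n = 2000         # number of samples


def message(t):
    """Test message m(t): a single cosine tone."""
    return math.cos(2.0 * math.pi * fm * t)


# DSB-SC modulated signal.
s = [message(k / fs) * math.cos(2.0 * math.pi * fc * k / fs) for k in range(n)]
recovered = synchronous_demodulate(s, fc, fs)

# The moving average delays the message by (n_avg - 1) / 2 samples,
# so compare against the message evaluated at the delayed instant.
delay = (round(fs / fc) - 1) / 2.0
max_error = max(abs(r - message((k + delay) / fs))
                for k, r in enumerate(recovered))
print(f"max recovery error: {max_error:.4f}")
```

The factor of 2 on the local oscillator matters here: without it the recovered tone would come out at half amplitude, since m(t)cos² contributes only m(t)/2 at baseband.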

Design Considerations for Optical Heterodyne

DESIGN CONSIDERATIONS FOR OPTICAL HETERODYNE RECEIVERS: A REVIEW John J. Degnan, Instrument Electro-optics Branch, NASA Goddard Space Flight Center, Greenbelt, Maryland 20771 ABSTRACT By its very nature, an optical heterodyne receiver is both a receiver and an antenna. Certain fundamental antenna properties of heterodyne receivers are described which set theoretical limits on the receiver sensitivity for the detection of coherent point sources, scattered light, and thermal radiation. In order to approach these limiting sensitivities, the geometry of the optical antenna-heterodyne receiver configuration must be carefully tailored to the intended application. The geometric factors which affect system sensitivity include the local oscillator (LO) amplitude distribution, mismatches between the signal and LO phasefronts, central obscurations of the optical antenna, and nonuniform mixer quantum efficiencies. The current state of knowledge in this area, which rests heavily on modern concepts of partial coherence, is reviewed. Following a discussion of noise processes in the heterodyne receiver and the manner in which sensitivity is increased through time integration of the detected signal, we derive an expression for the mean square signal current obtained by mixing a coherent local oscillator with a partially coherent, quasi-monochromatic source. We then demonstrate the manner in which the IF signal calculation can be transferred to any convenient plane in the optical front end of the receiver. Using these techniques, we obtain a relatively simple equation for the coherently detected signal from an extended incoherent source and apply it to the heterodyne detection of an extended thermal source and to the backscatter lidar problem where the antenna patterns of both the transmitter beam and heterodyne receiver must be taken into account. Finally, we consider the detection of a coherent source and, in particular, a distant point source such as a star or laser transmitter in a long range heterodyne communications system. -

Heterodyne Detector for Measuring the Characteristic of Elliptically Polarized Microwaves

Downloaded from orbit.dtu.dk on: Oct 02, 2021 Heterodyne detector for measuring the characteristic of elliptically polarized microwaves Leipold, Frank; Nielsen, Stefan Kragh; Michelsen, Susanne Published in: Review of Scientific Instruments Link to article, DOI: 10.1063/1.2937651 Publication date: 2008 Document Version Publisher's PDF, also known as Version of record Link back to DTU Orbit Citation (APA): Leipold, F., Nielsen, S. K., & Michelsen, S. (2008). Heterodyne detector for measuring the characteristic of elliptically polarized microwaves. Review of Scientific Instruments, 79(6), 065103. https://doi.org/10.1063/1.2937651 General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. Users may download and print one copy of any publication from the public portal for the purpose of private study or research. You may not further distribute the material or use it for any profit-making activity or commercial gain You may freely distribute the URL identifying the publication in the public portal If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. 
REVIEW OF SCIENTIFIC INSTRUMENTS 79, 065103 (2008) Heterodyne detector for measuring the characteristic of elliptically polarized microwaves. Frank Leipold, Stefan Nielsen, and Susanne Michelsen, Association EURATOM, Risø National Laboratory, Technical University of Denmark, OPL-128, Frederiksborgvej 399, 4000 Roskilde, Denmark (Received 28 January 2008; accepted 6 May 2008; published online 4 June 2008) In the present paper, a device is introduced which is capable of determining the three characteristic parameters of elliptically polarized light (ellipticity, angle of ellipticity, and direction of rotation) for microwave radiation at a frequency of 110 GHz. -

Before Valve Amplification - Wireless Communication of an Early Era

Before Valve Amplification - Wireless Communication of an Early Era, by Lloyd Butler VK5BR. At the turn of the century there were no amplifier valves and no transistors, but radio communication across the ocean had been established. Now we look back and see how it was done and discuss the equipment used. (Originally published in the journal "Amateur Radio", July 1986) INTRODUCTION In the complex electronics world of today, where thousands of transistor junctions are placed on a single silicon chip, we regard even electron tube amplification as being from a bygone era. We tend to associate the early development of radio with the electron tube as an amplifier, but we should not forget that the pioneers had established radio communications before that device had been discovered. This article examines some of the equipment used for radio (or should we say wireless) communications of that day. Discussion will concentrate on the equipment used and associated circuit descriptions rather than the history of its development. Anyone interested in history is referred to a thesis, The Historical Development of Radio Communications by J R Cox VK6NJ, published as a series in Amateur Radio from December 1964 to June 1965. Over the years, some of the early terms used have given way to other commonly used ones. Radio was called wireless, and still is to some extent. For example, it is still found in the name of our own representative body, the WIA. Electromagnetic (EM) waves were called hertzian waves or ether waves, and the medium which supported them was known as the ether. -

C:\Andrzej\PDF\ABC Nagrywania P³yt CD\1 Strona.Cdr

Wielka encyklopedia komputerów. Author: Alan Freedman. Translation: Michał Dadan, Paweł Gonera, Paweł Koronkiewicz, Radosław Meryk, Piotr Pilch. ISBN: 83-7361-136-3. Original title: Computer Desktop Encyclopedia. Format: B5, 1118 pages. Modern computing is not just computers and software. It is hundreds of technologies, tools, and devices that make it possible to use computers in different areas of life, such as printing, design, application development, computer networks, games, cinematic special effects, and many others. The development of computer technologies, although relatively short, has brought many new possibilities into our lives. "Wielka encyklopedia komputerów" is a complete compendium of knowledge about modern computing. It is required reading for anyone who wants to understand the dynamic development of electronics and information technologies. It describes all the issues related to modern computing and presents both its history and its development trends. It contains information about companies whose products have revolutionized the modern world, as well as descriptions of technologies, hardware, and software. Every reader, regardless of their level of knowledge, will find in it comprehensive explanations of the terms that interest them from the various branches of today's computing. • Communication between information systems and computer networks • Computer graphics and multimedia technologies • Internet, WWW, e-mail, newsgroups • Personal computers: PC and Macintosh • Mainframe computers and workstations • Developing software and computer systems • Printing and advertising • Computer-aided design • Computer viruses. If you are looking for a source of information on information technologies, want to learn ... Wydawnictwo Helion, ul. -

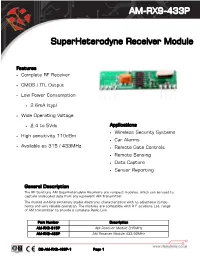

AM-RX9-433P Superheterodyne Receiver Module

AM-RX9-433P SuperHeterodyne Receiver Module (datasheet DS-AM-RX9-433P-1)

Features
• Complete RF Receiver
• CMOS / TTL Output
• Low Power Consumption 2.6mA (typ)
• Wide Operating Voltage 2.4 to 5Vdc
• High sensitivity 110dBm
• Available as 315 / 433MHz

Applications
• Wireless Security Systems
• Car Alarms
• Remote Gate Controls
• Remote Sensing
• Data Capture
• Sensor Reporting

General Description
The RF Solutions AM Superheterodyne Receivers are compact modules which can be used to capture undecoded data from any equivalent AM transmitter. The module exhibits extremely stable electronic characteristics with no adjustable components and very reliable operation. The modules are compatible with the RF Solutions Ltd. range of AM transmitters to provide a complete radio link.

Part Numbers
• AM-RX9-315P: AM Receiver Module 315MHz
• AM-RX9-433P: AM Receiver Module 433.92MHz

Pin Descriptions
• Pin 1: Antenna In
• Pins 2, 3, 8: Ground
• Pins 4, 5: Supply voltage
• Pins 6, 7: Data Output

Electrical Characteristics (ambient temp = 25°C unless otherwise stated)
• Supply Voltage: 2.4 (min), 5 (typical), 5.5 (max) Vdc
• Supply Current: 2.6 mA
• RF Sensitivity (Vcc=5V, 1Kbps AM 99% square wave modulation): -109 dBm @433MHz
• Working Frequency: 315 / 433.92 MHz
• High Level Output: 0.7Vcc V
• Low Level Output: 0.3Vcc V
• IF Bandwidth: 280 KHz
• Data Rate: 1 to 10 Kbps

Typical Application
[Circuit diagram; labels include RX3 Receiver, RF600D, Status LED, Learn Switch, Low Battery, Serial Data Output, O/P 1 to O/P 4]

RF Meter: RF Multi Meter is a versatile handheld test meter for checking radio signal strength or interference in a given area. -
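
To get a feel for the -109 dBm sensitivity figure in the datasheet excerpt above, it can be converted to power and to an RMS voltage. This is a rough sketch under one loud assumption: a 50 ohm antenna impedance, which the excerpt does not state. The function names are made up for this illustration.

```python
import math


def dbm_to_watts(p_dbm):
    """Convert a power level in dBm (dB relative to 1 mW) to watts."""
    return 10.0 ** ((p_dbm - 30.0) / 10.0)


def rms_volts(p_watts, r_ohms):
    """RMS voltage across a resistive load dissipating p_watts."""
    return math.sqrt(p_watts * r_ohms)


SENSITIVITY_DBM = -109.0   # from the electrical characteristics above
ASSUMED_LOAD_OHMS = 50.0   # assumption: not given in the datasheet excerpt

power_w = dbm_to_watts(SENSITIVITY_DBM)
voltage_v = rms_volts(power_w, ASSUMED_LOAD_OHMS)
print(f"{SENSITIVITY_DBM} dBm = {power_w:.3e} W")
print(f"RMS voltage into {ASSUMED_LOAD_OHMS:.0f} ohm: {voltage_v * 1e6:.2f} uV")
```

Under that assumption the sensitivity corresponds to a signal of a little under one microvolt at the antenna pin.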

Superheterodyne Receiver

Superheterodyne Receiver. The received RF signals must be transformed into a video signal to extract the wanted information from the echoes. This transformation is made by a superheterodyne receiver. The main components of the typical superheterodyne receiver are shown in the following picture: Figure 1: Block diagram of a superheterodyne receiver. The superheterodyne receiver converts the RF frequency into a lower intermediate frequency (IF) that is easier to process. This IF signal is amplified and demodulated to get a video signal. The figure shows a block diagram of a typical superheterodyne receiver. The RF carrier comes in from the antenna and is applied to a filter. The filter passes only the frequencies of the desired frequency band. These frequencies are applied to the mixer stage. The mixer also receives an input from the local oscillator. These two signals are beat together to obtain the IF through the process of heterodyning. Tuning the local oscillator keeps a fixed difference in frequency between the local oscillator and the RF signal at all times. This difference in frequency is the IF. This fixed difference and ganged tuning ensure a constant IF over the frequency range of the receiver. The IF carrier is applied to the IF amplifier. The amplified IF is then sent to the detector. The output of the detector is the video component of the input signal. Image-frequency Filter: A low-noise RF amplifier stage ahead of the converter stage provides enough selectivity to reduce the image-frequency response by rejecting these unwanted signals, and adds to the sensitivity of the receiver. -
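
The fixed-difference tuning and the image frequency described above can be illustrated with a few lines of arithmetic. This is a sketch under stated assumptions: high-side injection (local oscillator above the RF signal) and a hypothetical 30 MHz IF with example RF values around 1 GHz; none of these numbers come from the text, and the function names are invented for the example.

```python
def lo_frequency_hz(f_rf_hz, f_if_hz):
    """Local oscillator frequency for high-side injection (assumed)."""
    return f_rf_hz + f_if_hz


def image_frequency_hz(f_rf_hz, f_if_hz):
    """Unwanted frequency that also mixes down to the IF.

    With high-side injection the image lies one IF above the LO,
    that is, two IFs above the wanted RF signal.
    """
    return lo_frequency_hz(f_rf_hz, f_if_hz) + f_if_hz


F_IF_HZ = 30_000_000  # hypothetical intermediate frequency

tuning_table = []
for f_rf in (1_000_000_000, 1_050_000_000, 1_100_000_000):  # hypothetical RF values
    f_lo = lo_frequency_hz(f_rf, F_IF_HZ)
    f_image = image_frequency_hz(f_rf, F_IF_HZ)
    tuning_table.append((f_rf, f_lo, f_image))
    # Both the wanted signal and the image differ from the LO by exactly the IF.
    print(f"RF {f_rf / 1e6:.0f} MHz  LO {f_lo / 1e6:.0f} MHz  "
          f"IF {(f_lo - f_rf) / 1e6:.0f} MHz  image {f_image / 1e6:.0f} MHz")
```

Because the mixer cannot tell the wanted signal from the image (both sit one IF away from the LO), the rejection has to happen in the RF stage ahead of the mixer, which is the role the text gives to the image-frequency filter.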

A Polarization-Insensitive Recirculating Delayed Self-Heterodyne Method for Sub-Kilohertz Laser Linewidth Measurement

photonics, Communication. A Polarization-Insensitive Recirculating Delayed Self-Heterodyne Method for Sub-Kilohertz Laser Linewidth Measurement. Jing Gao 1,2,3, Dongdong Jiao 1,3, Xue Deng 1,3, Jie Liu 1,3, Linbo Zhang 1,2,3, Qi Zang 1,2,3, Xiang Zhang 1,2,3, Tao Liu 1,3,* and Shougang Zhang 1,3. 1 National Time Service Center, Chinese Academy of Sciences, Xi’an 710600, China; [email protected] (J.G.); [email protected] (D.J.); [email protected] (X.D.); [email protected] (J.L.); [email protected] (L.Z.); [email protected] (Q.Z.); [email protected] (X.Z.); [email protected] (S.Z.) 2 University of Chinese Academy of Sciences, Beijing 100039, China 3 Key Laboratory of Time and Frequency Standards, Chinese Academy of Sciences, Xi’an 710600, China * Correspondence: [email protected]; Tel.: +86-29-8389-0519 Abstract: A polarization-insensitive recirculating delayed self-heterodyne method (PI-RDSHM) is proposed and demonstrated for the precise measurement of sub-kilohertz laser linewidths. By a unique combination of Faraday rotator mirrors (FRMs) in an interferometer, the polarization-induced fading is effectively reduced without any active polarization control. This passive polarization-insensitive operation is theoretically analyzed and experimentally verified. Benefiting from the recirculating mechanism, a series of stable beat spectra with different delay times can be measured simultaneously without changing the length of delay fiber. Based on Voigt profile fitting of high-order beat spectra, the average Lorentzian linewidth of the laser is obtained. The PI-RDSHM has advantages of polarization insensitivity, high resolution, and less statistical error, providing an effective tool for accurate measurement of sub-kilohertz laser linewidth. -

TDS3000 & TDS3000B Series Digital Phosphor Oscilloscopes Programmer Manual

Programmer Manual TDS3000 & TDS3000B Series Digital Phosphor Oscilloscopes 071-0381-02 This document applies to firmware version 3.00 and above. www.tektronix.com Copyright © Tektronix, Inc. All rights reserved. Licensed software products are owned by Tektronix or its suppliers and are protected by United States copyright laws and international treaty provisions. Use, duplication, or disclosure by the Government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013, or subparagraphs (c)(1) and (2) of the Commercial Computer Software – Restricted Rights clause at FAR 52.227-19, as applicable. Tektronix products are covered by U.S. and foreign patents, issued and pending. Information in this publication supercedes that in all previously published material. Specifications and price change privileges reserved. Tektronix, Inc., P.O. Box 500, Beaverton, OR 97077 TEKTRONIX and TEK are registered trademarks of Tektronix, Inc. DPX, WaveAlert, and e*Scope are trademarks of Tektronix, Inc. Contacting Tektronix Phone 1-800-833-9200* Address Tektronix, Inc. Department or name (if known) 14200 SW Karl Braun Drive P.O. Box 500 Beaverton, OR 97077 USA Web site www.tektronix.com Sales support 1-800-833-9200, select option 1* Service support 1-800-833-9200, select option 2* Technical support Email: [email protected] 1-800-833-9200, select option 3* 1-503-627-2400 6:00 a.m. – 5:00 p.m. Pacific time * This phone number is toll free in North America. After office hours, please leave a voice mail message. -

CX74017 on the Direct Conversion Receiver

CX74017 On the Direct Conversion Receiver

Abstract
Increased pressure for low power, small form factor, low cost, and reduced bill of materials in such radio applications as mobile communications has driven academia and industry to resurrect the Direct Conversion Receiver (DCR). Long abandoned in favor of the mature superheterodyne receiver, direct conversion has emerged over the last decade or so thanks to improved semiconductor process technologies and astute design techniques. This paper describes the characteristics of the DCR and the issues it raises.

Introduction
Very much like its well-established superheterodyne receiver counterpart, introduced in 1918 by Armstrong [1], the origins of the DCR date back to the first half of last century when a single down-conversion receiver was first described by F.M. Colebrook in 1924 [2], and the term homodyne was applied. Additional ... architectures, this paper presents the direct conversion reception technique and highlights some of the system-level issues associated with DCR.

Traditional Reception Techniques

The Superheterodyne
The superheterodyne, or more generally heterodyne, receiver is the most widely used reception technique. This technique finds numerous applications from personal communication devices to radio and TV tuners, and has been tried inside out and is therefore well understood. It comes in a variety of combinations [7-9], but essentially relies on the same idea: the RF signal is first amplified in a frequency selective low-noise stage, then translated to a lower intermediate frequency (IF), with significant amplification and additional filtering, and finally downconverted to baseband either with a phase discriminatory or straight mixer, depending on the modulation format.