Computing the Bayes Factor from a Markov Chain Monte Carlo


Recommended publications


The Fundamental Theorem of Calculus for Lebesgue Integral

Divulgaciones Matemáticas Vol. 8 No. 1 (2000), pp. 75–85. The Fundamental Theorem of Calculus for Lebesgue Integral (El Teorema Fundamental del Cálculo para la Integral de Lebesgue). Diómedes Bárcenas ([email protected]), Departamento de Matemáticas, Facultad de Ciencias, Universidad de los Andes, Mérida, Venezuela. Abstract: In this paper we prove the Theorem announced in the title without using Vitali's Covering Lemma and have as a consequence of this approach the equivalence of this theorem with that which states that absolutely continuous functions with zero derivative almost everywhere are constant. We also prove that the decomposition of a bounded variation function is unique up to a constant. Key words and phrases: Radon-Nikodym Theorem, Fundamental Theorem of Calculus, Vitali's covering Lemma. Received: 1999/08/18. Revised: 2000/02/24. Accepted: 2000/03/01. MSC (1991): 26A24, 28A15. Supported by C.D.C.H.T-U.L.A under project C-840-97. 1 Introduction. The Fundamental Theorem of Calculus for Lebesgue Integral states that: A function $f : [a,b] \to \mathbb{R}$ is absolutely continuous if and only if it is differentiable almost everywhere, its derivative $f' \in L^1[a,b]$ and, for each $t \in [a,b]$, $f(t) = f(a) + \int_a^t f'(s)\,ds$. This theorem is extremely important in Lebesgue integration Theory and several ways of proving it are found in classical Real Analysis.

Shape Analysis, Lebesgue Integration and Absolute Continuity Connections

NISTIR 8217. Shape Analysis, Lebesgue Integration and Absolute Continuity Connections. Javier Bernal, Applied and Computational Mathematics Division, Information Technology Laboratory. This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8217. July 2018. Includes updates as of 07-18-2018; see Appendix. U.S. Department of Commerce, Wilbur L. Ross, Jr., Secretary. National Institute of Standards and Technology, Walter Copan, NIST Director and Undersecretary of Commerce for Standards and Technology. Javier Bernal, National Institute of Standards and Technology, Gaithersburg, MD 20899, USA. Abstract: As shape analysis of the form presented in Srivastava and Klassen’s textbook “Functional and Shape Data Analysis” is intricately related to Lebesgue integration and absolute continuity, it is advantageous to have a good grasp of the latter two notions. Accordingly, in these notes we review basic concepts and results about Lebesgue integration and absolute continuity. In particular, we review fundamental results connecting them to each other and to the kind of shape analysis, or more generally, functional data analysis presented in the aforementioned textbook, in the process shedding light on important aspects of all three notions. Many well-known results, especially most results about Lebesgue integration and some results about absolute continuity, are presented without proofs.

An Introduction to Measure Theory Terence

An introduction to measure theory. Terence Tao, Department of Mathematics, UCLA, Los Angeles, CA 90095. E-mail address: [email protected]. To Garth Gaudry, who set me on the road; To my family, for their constant support; And to the readers of my blog, for their feedback and contributions. Contents: Preface; Notation; Acknowledgments; Chapter 1. Measure theory (§1.1. Prologue: The problem of measure; §1.2. Lebesgue measure; §1.3. The Lebesgue integral; §1.4. Abstract measure spaces; §1.5. Modes of convergence; §1.6. Differentiation theorems; §1.7. Outer measures, pre-measures, and product measures); Chapter 2. Related articles (§2.1. Problem solving strategies; §2.2. The Rademacher differentiation theorem; §2.3. Probability spaces; §2.4. Infinite product spaces and the Kolmogorov extension theorem); Bibliography; Index. Preface: In the fall of 2010, I taught an introductory one-quarter course on graduate real analysis, focusing in particular on the basics of measure and integration theory, both in Euclidean spaces and in abstract measure spaces. This text is based on my lecture notes of that course, which are also available online on my blog terrytao.wordpress.com, together with some supplementary material, such as a section on problem solving strategies in real analysis (Section 2.1) which evolved from discussions with my students. This text is intended to form a prequel to my graduate text [Ta2010] (henceforth referred to as An epsilon of room, Vol. I), which is an introduction to the analysis of Hilbert and Banach spaces (such as $L^p$ and Sobolev spaces), point-set topology, and related topics such as Fourier analysis and the theory of distributions; together, they serve as a text for a complete first-year graduate course in real analysis.

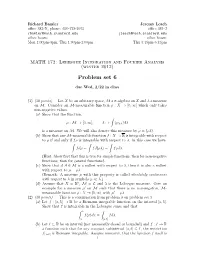

Problem Set 6

MATH 172: Lebesgue Integration and Fourier Analysis (winter 2012). Richard Bamler: office 382-N, phone: 650-723-2975, [email protected], office hours: Mon 1:00pm-3pm, Thu 1:00pm-2:00pm. Jeremy Leach: office 381-J, [email protected], office hours: Thu 3:45pm-6:45pm. Problem set 6, due Wed, 2/22 in class. (1) (20 points) Let $X$ be an arbitrary space, $\mathcal{M}$ a σ-algebra on $X$ and $\lambda$ a measure on $\mathcal{M}$. Consider an $\mathcal{M}$-measurable function $\rho : X \to [0,\infty]$ which only takes non-negative values. (a) Show that the function $\mu : \mathcal{M} \to [0,\infty]$, $A \mapsto \int (\rho\chi_A)\,d\lambda$ is a measure on $\mathcal{M}$. We will also denote this measure by $\mu = (\rho\lambda)$. (b) Show that any $\mathcal{M}$-measurable function $f : X \to \mathbb{R}$ is integrable with respect to $\mu$ if and only if $f\rho$ is integrable with respect to $\lambda$. In this case we have $\int f\,d\mu = \int f\,d(\rho\lambda) = \int f\rho\,d\lambda$. (Hint: Show first that this is true for simple functions, then for non-negative functions, then for general functions.) (c) Show that if $A \in \mathcal{M}$ is a nullset with respect to $\lambda$, then it is also a nullset with respect to $\mu = \rho\lambda$. (Remark: A measure $\mu$ with this property is called absolutely continuous with respect to $\lambda$, in symbols $\mu \ll \lambda$.) (d) Assume that $X = \mathbb{R}^n$, $\mathcal{M} = \mathcal{L}$ and $\lambda$ is the Lebesgue measure. Give an example for a measure $\mu_0$ on $\mathcal{M}$ such that there is no non-negative, $\mathcal{M}$-measurable function $\rho : X \to [0,\infty]$ with $\mu_0 = \rho\lambda$.

Lecture 3 the Lebesgue Integral

Lecture 3: The Lebesgue Integral. Course: Theory of Probability I. Term: Fall 2013. Instructor: Gordan Zitkovic. The construction of the integral. Unless expressly specified otherwise, we pick and fix a measure space $(S, \mathcal{S}, \mu)$ and assume that all functions under consideration are defined there. Definition 3.1 (Simple functions). A function $f \in \mathcal{L}^0(S, \mathcal{S}, \mu)$ is said to be simple if it takes only a finite number of values. The collection of all simple functions is denoted by $\mathcal{L}^{\mathrm{Simp},0}$ (more precisely by $\mathcal{L}^{\mathrm{Simp},0}(S, \mathcal{S}, \mu)$) and the family of non-negative simple functions by $\mathcal{L}^{\mathrm{Simp},0}_+$. Clearly, a simple function $f : S \to \mathbb{R}$ admits a (not necessarily unique) representation $f = \sum_{k=1}^{n} a_k \mathbf{1}_{A_k}$ (3.1), for $a_1, \dots, a_n \in \mathbb{R}$ and $A_1, \dots, A_n \in \mathcal{S}$. Such a representation is called the simple-function representation of $f$. When the sets $A_k$, $k = 1, \dots, n$ are intervals in $\mathbb{R}$, the graph of the simple function $f$ looks like a collection of steps (of heights $a_1, \dots, a_n$). For that reason, the simple functions are sometimes referred to as step functions. The Lebesgue integral is very easy to define for non-negative simple functions and this definition allows for further generalizations. (Footnote 1: In fact, the progression of events you will see in this section is typical for measure theory: you start with indicator functions, move on to non-negative simple functions, then to general non-negative measurable functions, and finally to (not-necessarily-non-negative) measurable functions.) Definition 3.2 (Lebesgue integration for simple functions). For $f \in \mathcal{L}^{\mathrm{Simp},0}_+$ we define the (Lebesgue) integral $\int f\,d\mu$ of $f$ with respect to $\mu$ by $\int f\,d\mu = \sum_{k=1}^{n} a_k\,\mu(A_k) \in [0, \infty]$,

Measure, Integrals, and Transformations: Lebesgue Integration and the Ergodic Theorem

Measure, Integrals, and Transformations: Lebesgue Integration and the Ergodic Theorem. Maxim O. Lavrentovich, November 15, 2007. Contents: 0 Notation; 1 Introduction (1.1 Sizes, Sets, and Things; 1.2 The Extended Real Numbers); 2 Measure Theory (2.1 Preliminaries; 2.2 Examples; 2.3 Measurable Functions); 3 Integration (3.1 General Principles; 3.2 Simple Functions; 3.3 The Lebesgue Integral; 3.4 Convergence Theorems; 3.5 Convergence); 4 Probability (4.1 Kolmogorov's Probability; 4.2 Random Variables); 5 The Ergodic Theorem (5.1 Transformations; 5.2 Birkhoff's Ergodic Theorem; 5.3 Conclusions); 6 Bibliography. 0 Notation. We will be using the following notation throughout the discussion. This list is included here as a reference. Also, some of the concepts and symbols will be defined in subsequent sections. However, due to the number of different symbols we will use, we have compiled the more archaic ones here. $\mathbb{R}, \mathbb{N}$: the real and natural numbers. $\bar{\mathbb{R}}$: the extended real numbers, i.e. the interval $[-\infty, \infty]$. $\bar{\mathbb{R}}_+$: the nonnegative (extended) real numbers, i.e. the interval $[0, \infty]$. $\Omega$: a sample space, a set of possible outcomes, or any arbitrary set. $\mathcal{F}$: an arbitrary σ-algebra on subsets of a set $\Omega$. $\sigma\langle A \rangle$: the σ-algebra generated by a subset $A \subseteq \Omega$. $T$: a function between the set $\Omega$ and itself. $\varphi, \psi$: simple functions on the set $\Omega$. $\mu$: a measure on a space $\Omega$ with an associated σ-algebra $\mathcal{F}$. $(X_n)$: a sequence of objects $X_i$, i.

14. Lebesgue Integral

2. The Lebesgue Integration Theory. 14. Lebesgue integral. 14.1. Piecewise continuous functions on $\mathbb{R}$. Consider a collection of ordered numbers $c_n$, $c_n < c_{n+1}$, which is finite or countable. Suppose that any interval $(a,b)$ contains only finitely many numbers $c_n$. Consider open intervals $\Omega_n = (c_n, c_{n+1})$. If the set of numbers $c_n$ contains the smallest number $m$, then the interval $\Omega^- = (-\infty, m)$ is added to the set of intervals $\{\Omega_n\}$. If the set of numbers $c_n$ contains the greatest number $M$, then the interval $\Omega^+ = (M, \infty)$ is added to $\{\Omega_n\}$. The union of the closures $\overline{\Omega}_n = [c_n, c_{n+1}]$ (and possibly $\overline{\Omega}^- = (-\infty, m]$ and $\overline{\Omega}^+ = [M, \infty)$) is the whole real line $\mathbb{R}$. So, the characteristic properties of the collection $\{\Omega_n\}$ are: (i) $\Omega_n \cap \Omega_{n'} = \emptyset$, $n \neq n'$; (ii) $(a,b) \cap \{\Omega_n\} = \{c_j, c_{j+1}, \dots, c_m\}$; (iii) $\bigcup_n \overline{\Omega}_n = \mathbb{R}$. Property (ii) also means that any bounded interval is covered by finitely many intervals $\overline{\Omega}_n$. Alternatively, the sequence of endpoints $c_n$ is not allowed to have a limit point. For example, let $c_n = n$ where $n$ is an integer. Then any interval $(a,b)$ contains only finitely many integers. The real line $\mathbb{R}$ is the union of intervals $[n, n+1]$ because every real $x$ either lies between two integers or coincides with an integer. If $c_n$ is the collection of all non-negative integers, then $\mathbb{R}$ is the union of $I^- = (-\infty, 0]$ and all $[n, n+1]$, $n = 0, 1, \dots$. However, the collection $c_n = \frac{1}{n}$, $n = 1, 2, \dots$, does not have the property that any interval $(a,b)$ contains only finitely many elements $c_n$ because $0 < c_n < 2$ for all $n$.

Familiarizing Students with Definition of Lebesgue Integral: Examples of Calculation Directly from Its Definition Using Mathemat

Math.Comput.Sci. (2017) 11:363–381. DOI 10.1007/s11786-017-0321-5. Mathematics in Computer Science. Familiarizing Students with Definition of Lebesgue Integral: Examples of Calculation Directly from Its Definition Using Mathematica. Włodzimierz Wojas · Jan Krupa. Received: 7 December 2016 / Revised: 19 April 2017 / Accepted: 20 April 2017 / Published online: 30 May 2017. © The Author(s) 2017. This article is an open access publication. Abstract: We present in this paper several examples of Lebesgue integral calculated directly from its definitions using Mathematica. Calculation of Riemann integrals directly from its definitions for some elementary functions is standard in higher mathematics education. But it is difficult to find analogical examples for Lebesgue integral in the available literature. The paper contains Mathematica codes which we prepared to calculate symbolically Lebesgue sums and limits of sums. We also visualize the graphs of simple functions used for approximation of the integrals. We also show how to calculate the needed sums and limits by hand (without CAS). We compare our calculations in Mathematica with calculations in some other CAS programs such as wxMaxima, MuPAD and Sage for the same integrals. Keywords: Higher education · Lebesgue integral · Application of CAS · Mathematica · Mathematical didactics. Mathematics Subject Classification: 97R20 · 97I50 · 97B40. 1 Introduction. "Young man, in mathematics you don’t understand things. You just get used to them." (John von Neumann) "I hear and I forget. I see and I remember. I do and I understand." (Chinese Quote) Electronic Supplementary material: The online version of this article (doi:10.1007/s11786-017-0321-5) contains supplementary material, which is available to authorized users.

Contents 1. the Riemann and Lebesgue Integrals. 1 2. the Theory of the Lebesgue Integral

Contents: 1. The Riemann and Lebesgue integrals. 2. The theory of the Lebesgue integral (2.1. The Monotone Convergence Theorem; 2.2. Basic theory of Lebesgue integration; 2.3. Lebesgue measure. Sets of measure zero; 2.4. Nonmeasurable sets; 2.5. Lebesgue measurable sets and functions). 3. More on the Riemann integral (3.1. The fundamental theorems of calculus; 3.2. Characterization of Riemann integrability). 1. The Riemann and Lebesgue integrals. Fix a positive integer $n$. Recall that $\mathcal{R}_n$ and $\mathcal{M}_n$ are the family of rectangles in $\mathbb{R}^n$ and the algebra of multirectangles in $\mathbb{R}^n$, respectively. Definition 1.1. We let $\mathcal{F}_n^+$; $\mathcal{F}_n$; $\mathcal{B}_n$; be the set of $[0, \infty]$ valued functions on $\mathbb{R}^n$; the vector space of real valued functions on $\mathbb{R}^n$; the vector space of $f \in \mathcal{F}_n$ such that $\{f \neq 0\} \cup \operatorname{rng} f$ is bounded, respectively. We let $\mathcal{S}_n = \mathcal{B}_n \cap \mathcal{S}(\mathcal{M}_n) \subset \mathcal{F}_n$ and we let $\mathcal{S}_n^+ = \mathcal{S}_n \cap \mathcal{F}_n^+ \subset \mathcal{S}^+(\mathcal{M}_n)$. Thus $s \in \mathcal{S}_n$ if and only if $s : \mathbb{R}^n \to \mathbb{R}$, $\operatorname{rng} s$ is finite, $\{s = y\}$ is a multirectangle for each $y \in \mathbb{R}$ and $\{s \neq 0\}$ is bounded and $s \in \mathcal{S}_n^+$ if and only if $s \in \mathcal{S}_n$ and $s \geq 0$. We let $\mathcal{S}_{n,\uparrow}^+$ be the set of nondecreasing sequences in $\mathcal{S}_n^+$. For each $s \in \mathcal{S}_{n,\uparrow}^+$ we let $\sup s \in \mathcal{F}_n^+$ be such that $\sup s(x) = \sup\{s_\nu(x) : \nu \in \mathbb{N}\}$ for $x \in \mathbb{R}^n$ and we let $I_{n,\uparrow}^+(s) = \sup\{I_n^+(s_\nu) : \nu \in \mathbb{N}^+\}$. Remark 1.1.

(Riemann) Integration Sucks!!!

(Riemann) Integration Sucks!!! Peyam Ryan Tabrizian. Friday, November 18th, 2010. 1 Are all functions integrable? Unfortunately not! Look at the handout "Solutions to 5.2.67, 5.2.68", we get two examples of functions $f$ which are not Riemann integrable! Let's recap what those two examples were! The first one was $f(x) = \frac{1}{x}$ on $[0, 1]$. The reason that this function fails to be integrable is that it goes to $\infty$ in a very fast way when $x$ goes to 0, so the area under the graph of this function is infinite. Remember that it is not enough to say that this function has a vertical asymptote at $x = 0$. For example, the function $g(x) = \frac{1}{\sqrt{x}}$ has an asymptote at $x = 0$, but it is still integrable, because by the FTC: $\int_0^1 \frac{1}{\sqrt{x}}\,dx = \left[2\sqrt{x}\right]_0^1 = 2$. This is not too bad! Basically, we have that $f(x) = \frac{1}{x}$ is not integrable because its integral is ’infinite’, whatever this might mean! The point is: if a function is integrable, then its integral is finite. There's a way to get around this! In Math 1B, you'll learn that even though this function is not integrable, we can define its integral to be $\infty$, so you'd write: $\int_0^1 \frac{1}{x}\,dx = \infty$. The second example was way worse! The function in question was: $f(x) = 0$ if $x$ is rational, $f(x) = 1$ if $x$ is irrational. And its graph looks like this: [Figure: graph of $f$, file 1A/Handouts/Nonintegrable.png] This function is not integrable because it basically can't make up its mind! It sometimes is 0 and sometimes is 1.

Measure Theory and Lebesgue Integration

Appendix D. Measure theory and Lebesgue integration. ”As far as the laws of mathematics refer to reality, they are not certain, and as far as they are certain, they do not refer to reality.” Albert Einstein (1879-1955). In this appendix, we will briefly recall the main concepts and results about the measure theory and Lebesgue integration. Then, we will introduce the $L^p$ spaces that prove especially useful in the analysis of the solutions of partial differential equations. Contents: D.1 Measure theory; D.2 Riemann integration; D.3 Lebesgue integration; D.4 $L^p$ spaces. D.1 Measure theory. Measure theory initially was proposed to provide an analysis of and generalize notions such as length, area and volume (not strictly related to physical sizes) of subsets of Euclidean spaces. The approach to measure and integration is axiomatic, i.e. a measure is any function $\mu$ defined on subsets which satisfies a certain list of properties. In this respect, measure theory is a branch of real analysis which investigates, among other concepts, measurable functions and integrals. D.1.1 Definitions and properties. Definition D.1. A collection $S$ of subsets of a set $X$ is said to be a topology in $X$ if $S$ has the following three properties: (i) $\emptyset \in S$ and $X \in S$, (ii) if $V_i \in S$ for $i = 1, \dots, n$ then $V_1 \cap V_2 \cap \cdots \cap V_n \in S$, (iii) if $\{V_\alpha\}$ is an arbitrary collection of members of $S$ (finite, countable or not), then $\bigcup_\alpha V_\alpha \in S$.

Another Method of Integration: Lebesgue Integral

Another method of Integration: Lebesgue Integral. Shengjun Wang, 2017/05. Abstract: Centuries ago, a French mathematician Henri Lebesgue noticed that the Riemann Integral does not work well on unbounded functions. It led him to think of another approach to do the integration, which is called Lebesgue Integral. This paper will briefly talk about the inadequacy of the Riemann integral, and introduce a more comprehensive definition of integration, the Lebesgue integral. There is also some discussion of Lebesgue measure, which establishes the Lebesgue integral. Some examples, like $F_\sigma$ set, $G_\delta$ set and Cantor function, will also be mentioned. Contents: 1 Riemann Integral (1.1 Riemann Integral; 1.2 Inadequacies of Riemann Integral); 2 Lebesgue Measure (2.1 Outer Measure; 2.2 Measure; 2.3 Inner Measure); 3 Lebesgue Integral (3.1 Lebesgue Integral; 3.2 Riemann vs. Lebesgue; 3.3 The Measurable Function). 1 Riemann Integral. 1.1 Riemann Integral. In a first year "real analysis" course, we learn about continuous functions and their integrals. Mostly, we will use the Riemann integral, but it does not always work to find the area under a function. The Riemann integral that we studied in calculus is named after the German mathematician Bernhard Riemann and it is applied to many scientific areas, such as physics and geometry. Since Riemann's time, other kinds of integrals have been defined and studied; however, they are all generalizations of the Riemann integral, and it is hardly possible to understand them or appreciate the reasons for developing them without a thorough understanding of the Riemann integral [1].