Gradual Adjustment and Equilibrium Uniqueness Under Noisy Monitoring∗

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

An Instructed Eucharist

CHRIST CHURCH AN EPISCOPAL CHURCH IN THE DIOCESE OF EAST CAROLINA FOUNDED 1715, NEW BERN, NORTH CAROLINA Our Vision: To be a church that loves the way God Loves THE SIXTH SUNDAY AFTER PENTECOST July 21, 2019 - 10:00 AM An Instructed Eucharist When presented with an option to either “stand or kneel,” we hope you will choose the posture that is both comfortable and prayerful. Please be sure all cell phones are silenced. Restrooms are located in the Parish House, through the double doors at the front of the church and then to the left, between the kiosk and reception desk. Hearing assistance is available through our sound system on frequency 72.900mhz. Book of Common (BCP) and Hymnal pages are listed on the right. BCP: Book of Common Prayer (black), S or H: Hymnal 1982 (blue), WLP: Wonder, Love, and Praise (green), L: Lift Every Voice (red & black) Our weekly newsletter, the Messenger, is available at the entry doors. Please take one with you A NOTE ABOUT TODAY’S LITURGY… For 2,000 years, Christians of all ages have come together Sunday after Sunday (and sometimes other days of the week!) to worship God and to celebrate Jesus’ presence with us in the Holy Eucharist. Eucharist comes from a Greek word that means “thanksgiving.” Each week, we offer our thanks to God for all the things we have in our life and all the ways God loves us. The Eucharist is not something that only a priest does; it is something that we do together. It takes all of us here to help make the Eucharist happen. -

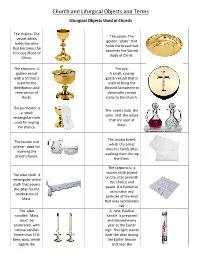

Church and Liturgical Objects and Terms

Church and Liturgical Objects and Terms Liturgical Objects Used in Church The chalice: The The paten: The vessel which golden “plate” that holds the wine holds the bread that that becomes the becomes the Sacred Precious Blood of Body of Christ. Christ. The ciborium: A The pyx: golden vessel A small, closing with a lid that is golden vessel that is used for the used to bring the distribution and Blessed Sacrament to reservation of those who cannot Hosts. come to the church. The purificator is The cruets hold the a small wine and the water rectangular cloth that are used at used for wiping Mass. the chalice. The lavabo towel, The lavabo and which the priest pitcher: used for dries his hands after washing the washing them during priest's hands. the Mass. The corporal is a square cloth placed The altar cloth: A on the altar beneath rectangular white the chalice and cloth that covers paten. It is folded so the altar for the as to catch any celebration of particles of the Host Mass. that may accidentally fall The altar A new Paschal candles: Mass candle is prepared must be and blessed every celebrated with year at the Easter natural candles Vigil. This light stands (more than 51% near the altar during bees wax), which the Easter Season signify the and near the presence of baptismal font Christ, our light. during the rest of the year. It may also stand near the casket during the funeral rites. The sanctuary lamp: Bells, rung during A candle, often red, the calling down that burns near the of the Holy Spirit tabernacle when the to consecrate the Blessed Sacrament is bread and wine present there. -

Altar Server Instructions Booklet

Christ the King Catholic Church ALTAR SERVER INSTRUCTIONS Revised May, 2012 - 1 - Table of Contents Overview – All Positions ................................................................................................................ 4 Pictures of Liturgical Items ............................................................................................................. 7 Definition of Terms: Liturgical Items Used At Mass ..................................................................... 8 Helpful Hints and Red Cassocks................................................................................................... 10 1st Server Instructions ................................................................................................................. 11 2nd Server Instructions ................................................................................................................ 14 Crucifer Instructions .................................................................................................................... 17 Special Notes about FUNERALS ................................................................................................ 19 BENEDICTION .......................................................................................................................... 23 - 2 - ALTAR SERVER INSTRUCTIONS Christ the King Church OVERVIEW INTRODUCTION First of all, THANK YOU for answering God’s call to assist at Mass. You are now one of the liturgical ministers, along with the priest, deacon, lector and Extraordinary -

Lent and Easter Season

LENT/EASTER SEASON February 22, 2015 WHAT’S THIS? At its root, Lent is a name for Spring, and is a 40-day period of preparation for Easter Sunday and one of the major liturgical seasons of the Catholic Church. A penitential season marked by prayer, fasting and abstinence, and almsgiving, Lent begins on Ash Wednesday and ends on Holy Saturday. The color of Lent is purple; The six Sundays in Lent are not part of the Lenten fast, and thus we say there are 40 days of Lent – a biblical number – while there are really 46; The Stations of the Cross are a devotion imitating a pilgrimage with Jesus to commemorate 14 key events around the crucifixion; Because of the solemnity of Lent, the Gloria and Alleluia are not said or sung. March 1, 2015 WHAT’S THIS? During Lent the Church is called to embrace a spirit of repentance and metanoia (“a change of heart”) or conversion. There are many opportunities for prayer – communally or individually – such as: Daily Mass (communal) Stations of the Cross (communal and individual) The Rosary (communal and individual) Liturgy of the Hours (individual) Reconciliation (communal and individual) Adoration of the Eucharist in the Blessed Sacrament Chapel every Friday (individual) Free web Lent program offered by Dynamic Catholic—sign up at BestLentEver.com. March 8, 2015 WHAT’S THIS? The next four weeks of “What’s This” will be highlighting specific components that lead up through the Easter Vigil. Palm Sunday – March 29: The liturgical color of Palm Sunday is red. Red signifies Christ’s Passion; The Palm Sunday liturgy begins with an additional Gospel highlighting the jubilant entrance of Jesus into Jerusalem; The palms are ancient symbols of victory and hope, as well as new life; The Palm Sunday liturgy takes on a more somber tone with the second Gospel reading of Christ’s Passion; The blessed palms received this day should be discarded as other blessed articles. -

THE CATHOLIC UNIVERSITY of AMERICA the Missa Chrismatis: a Liturgical Theology a DISSERTATION Submitted to the Faculty of the S

THE CATHOLIC UNIVERSITY OF AMERICA The Missa Chrismatis: A Liturgical Theology A DISSERTATION Submitted to the Faculty of the School of Theology and Religious Studies Of The Catholic University of America In Partial Fulfillment of the Requirements For the Degree Doctor of Sacred Theology © Copyright All rights reserved By Seth Nater Arwo-Doqu Washington, DC 2013 The Missa Chrismatis: A Liturgical Theology Seth Nater Arwo-Doqu, S.T.D. Director: Kevin W. Irwin, S.T.D. The Missa Chrismatis (“Chrism Mass”), the annual ritual Mass that celebrates the blessing of the sacramental oils ordinarily held on Holy Thursday morning, was revised in accordance with the decrees of Vatican II and promulgated by the authority of Pope Paul VI and inserted in the newly promulgated Missale Romanum in 1970. Also revised, in tandem with the Missa Chrismatis, is the Ordo Benedicendi Oleum Catechumenorum et Infirmorum et Conficiendi Chrisma (Ordo), and promulgated editio typica on December 3, 1970. Based upon the scholarly consensus of liturgical theologians that liturgical events are acts of theology, this study seeks to delineate the liturgical theology of the Missa Chrismatis by applying the method of liturgical theology proposed by Kevin Irwin in Context and Text. A critical study of the prayers, both ancient and new, for the consecration of Chrism and the blessing of the oils of the sick and of catechumens reveals rich theological data. In general it can be said that the fundamental theological principle of the Missa Chrismatis is initiatory and consecratory. The study delves into the history of the chrismal liturgy from its earliest foundations as a Mass in the Gelasianum Vetus, including the chrismal consecration and blessing of the oils during the missa in cena domini, recorded in the Hadrianum, Ordines Romani, and Pontificales Romani of the Middle Ages, through the reforms of 1955-56, 1965 and, finally, 1970. -

Vestments and Sacred Vessels Used at Mass

Vestments and Sacred Vessels used at Mass Amice (optional) This is a rectangular piece of cloth with two long ribbons attached to the top corners. The priest puts it over his shoulders, tucking it in around the neck to hide his cassock and collar. It is worn whenever the alb does not completely cover the ordinary clothing at the neck (GI 297). It is then tied around the waist. It symbolises a helmet of salvation and a sign of resistance against temptation. 11 Alb This long, white, vestment reaching to the ankles and is worn when celebrating Mass. Its name comes from the Latin ‘albus’ meaning ‘white.’ This garment symbolises purity of heart. Worn by priest, deacon and in many places by the altar servers. Cincture (optional) This is a long cord used for fastening some albs at the waist. It is worn over the alb by those who wear an alb. It is a symbol of chastity. It is usually white in colour. Stole A stole is a long cloth, often ornately decorated, of the same colour and style as the chasuble. A stole traditionally stands for the power of the priesthood and symbolises obedience. The priest wears it around the neck, letting it hang down the front. A deacon wears it over his right shoulder and fastened at his left side like a sash. Chasuble The chasuble is the sleeveless outer vestment, slipped over the head, hanging down from the shoulders and covering the stole and alb. It is the proper Mass vestment of the priest and its colour varies according to the feast. -

The Crucifix Crisis Challenge 2: the Vestment Controversy

Challenge 1: The Crucifix Crisis I’m a Catholic and even though I’m not happy with the Queen being a Protestant, her new religion doesn't upset me that much, but we loved our idols, candles & statues in our churches, so we’re glad she has kept them in her new churches, so you Puritans can jog on if you think we’re losing our crucifix that the queen has allowed, it is central to our faith! Oh no you don’t!! I am very angry that the Queen has shown too much lenience to the Catholics in her new religion, we Puritans demanded a much more plain religion and church, there is no space in the relationship with God for idols, statues and definitely not the crucifix, it is just TOO CATHOLIC! As you all know, I have tried to create a religion that appeals to the majority, I demand the crucifix remains. If I lose the crucifix I will appear weak as a Queen, as well as upset the Catholics and there are a lot of Catholics, but I am a Protestant, I should please them – Oh this is a dilemma! This is especially difficult and dangerous as the Pope has just told my loyal subjects, the English Catholics, to boycott my new Church, not sure I am ready for that fight just yet! In the end, Elizabeth backed down to the Puritans, (partly due to the influence of Cecil & Dudley) and removed the crucifix from her churches, however, she compromised once again by allowing them to stay in the Royal Chapel in every church. -

Appendices 1 – 12

APPENDICES 1 – 12 Religion Course of Study PreK-12 --- Diocese of Toledo --- 2018 Appendix 1: God’s Plan of Salvation -- A Summary (Used with permission, Diocese of Green Bay, WI) It is very important that before we dive into the religion Course of Study each year, we set the stage with an overview of God’s plan of salvation – the adventurous story of God’s unfailing love for us, his persistence in drawing us back to himself, and the characters along the way who succeed and fail in their quest for holiness. The context of the Story of Salvation will provide the proper foundation for the rest of your catechetical instruction. The Story can be taught as a one-day lesson, or a week long lesson. Each teacher must make a determination of how long they will take to present the Story to their students. It is important that the story be presented so that each of us can understand our place and purpose in the larger plan of God, as well as how the Church is central to God’s plan of salvation for the world. An overview of God’s plan is to be presented at the beginning of each year, and should be revisited periodically during the year as the subject matter or liturgical season warrants. Please make the presentation appropriate to the grade level. 1. God is a communion of Persons: God the Father, God the Son, and God the Holy Spirit. The three Persons in one God is the Blessed Trinity. God has no beginning and no end. -

St. Matthew's Church Newport Beach, California

St. Matthew’s Church Newport Beach, California Copyright © The Rt. Rev’d Stephen Scarlett, 2012 Publication Copyright © St. Matthew’s Church & School, 2012 stmatthewsnewport.com ALL RIGHTS RESERVED Cover Image: Caravaggio, Supper at Emmaus, 1606 Brera Fine Arts Academy, Milan TABLE OF CONTENTS Introduction 9-11 Chapter 1: The Creeds of the Church 13-27 Chapter 2: The Moral Law and the Gospel 29-40 Chapter 3: The Sacraments 41-53 Chapter 4: The Church and Its Symbolism 55-64 Chapter 5: Commentary on the Liturgy of the Holy Communion 65-103 Chapter 6: The Church Calendar 105-110 Chapter 7: The Life of Prayer 111-121 Chapter 8: The Duties of a Christian 123-129 INTRODUCTION HE Inquirers’ Class is designed to provide an introduction to what the church believes and does. TOne goal of the class is to provide space in the church for people who have questions to pursue answers. Another goal is that people who work their way through this material will be able to begin to participate meaningfully in the ministry and prayer life of the church. The Inquirers’ Class is not a Bible study. However, the main biblical truths of the faith are the focus of the class. The Inquirers’ Class gives the foundation and framework for our practice of the faith. If the class has its desired impact, participants will begin the habit of daily Bible reading in the context of daily prayer. The Need for An Inquirer’s Class People who come to the liturgy without any instruction will typically be lost or bored. -

GLOSSARY of the LITURGY the University of St. Francis

GLOSSARY OF THE LITURGY The University of St. Francis. Accident: In relation to the liturgy, an “accident” refers to the appearance of a thing which can appear as another thing. In the case of the consecrated vs. unconsecrated host, both “appear” as flour and water mixed together and baked. However, after the consecration, the “accident” may appear the same but the “substance” has changed. (See Substance). Acclamations: These are short phrases of praise to God. The Gospel Acclamation occurs before the Gospel using either Alleluia or a different phrase during Lent. The Memorial Acclamation is proclaimed by the assembly following the Narrative Institution during the Eucharistic Prayer. Acolyte: A liturgical minister appointed to assist at liturgical celebrations. Priests and deacons receive this ministry before they are ordained. Lay men may be installed permanently in the ministry of acolyte through a rite of institution and blessing (903, 1672). Adoration CCC 2096, 2628, 1083: The acknowledgment of God as God, Creator and Savior, the Lord and Master of everything that exists. Through worship and prayer, the Church and individual persons give to God the adoration which is the first act of the virtue of religion. The first commandment of the law obliges us to adore God (2096, 2628; cf. 1083). Agnus Dei: “Lamb of God” An ancient hymn to Jesus Christ, it is usually sung during the fraction rite prior to the distribution of Holy Communion. (John 1:29) In the new Roman Missal, it reads “Behold the Lamb of God, behold him who takes away the sins of the world. Blessed are those called to the supper of the Lamb.” GLOSSARY OF THE LITURGY Page 1 The University of St. -

Stole, Maniple, Amice, Pallium, Ecclesiastical Girdle, Humeral Veil

CHAPTER 8 Minor Vestments: Stole, Maniple, Amice, Pallium, Ecclesiastical Girdle, Humeral Veil Introduction vestment of a pope, and of such bishops as were granted it by the pope as a sign of their metropolitan status.4 The term ‘minor vestments’ is used here to signify a Mostly, but not exclusively, the pallium was granted by number of smaller items which are not primary dress, the pope to archbishops – but they had to request it for- in the sense that albs, chasubles, copes and dalmatics mally, the request accompanied by a profession of faith are dress, but are nevertheless insignia of diaconal and (now an oath of allegiance). It seems to have been con- priestly (sometimes specifically episcopal) office, given sidered from early times as a liturgical vestment which at the appropriate service of ordination or investiture. could be used only in church and during mass, and, in- Other insignia are considered in other sections: the mitre creasingly, only on certain festivals. In the sixth century (Chapter 1); ecclesiastical shoes, buskins and stockings it took the form of a wide white band with a red or black (Chapters 7 and 9), and liturgical gloves (Chapter 10). cross at its end, draped around the neck and shoulders The girdle, pallium, stole and maniple all have the in such a way that it formed a V in the front, with the form of long narrow bands. The girdle was recognised ends hanging over the left shoulder, one at the front and as part of ecclesiastical dress from the ninth century at one at the back. -

China's Unfinished Economic Transition

Upjohn Institute Press China’s Unfinished Economic Transition Nicholas R. Lardy The Brookings Institution Chapter 4 (pp. 69-76) in: When is Transition Over? Annette N. Brown, ed. Kalamazoo, MI: W.E. Upjohn Institute for Employment Research, 1999 DOI: 10.17848/9780585282978.ch4 Copyright ©1999. W.E. Upjohn Institute for Employment Research. All rights reserved. 4 China’s Unfinished Economic Transition Nicholas R. Lardy The Brookings Institution The hallmark of China’s approach to economic reform is gradual- ism. China’s reformers, in effect, have eschewed the conventional wis- dom that successful economic transitions are built on the basis of rapid privatization of state-owned firms, overnight price reform, and a quick dismantling of trade barriers. Although a variety of new forms of own- ership have flourished in China, de facto privatization of small state- owned firms did not get underway until the mid 1990s, and few, if any, medium and large state-owned firms have been privatized. Relative prices in China for the most part have converged to world levels, but this process was extremely gradual. Opening the economy to foreign trade was similarly gradual and was a key part of the process by which relative domestic prices have converged to world levels. Moreover, foreign trade liberalization is far from complete, as China maintains a trade system with substantial protection for many industries. Paradoxically, China’s economic performance, by almost all mea- sures, has been superior to that in other transition economies. In con- trast to a period of sharply declining output in several of the states of Eastern Europe and the former Soviet Union, China’s gradual reform generated record rates of economic growth from the outset.