Tracert/Traceroute: The Trace Route Utility Does Exactly What Its Name Implies, Tracing the Route Between Two Hosts

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

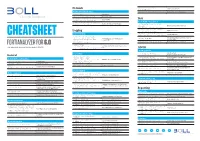

Fortianalyzer

Network diagnose fortilogd Device message rate msgrate-device Network Troubleshooting diagnose fortilogd msgrate-type Message rate for each log type execute ping [host] Ping utility execute traceroute [host] Traceroute utility diag sniffer packet <interface> Packet sniffer Disk <filter> <level> <timestamp> Disk / RAID / Virtual Disk config system fortiview settings Resolve IP address to hostname set resolve-ip enable config system locallog disk setting What happens with oldest logs set diskfull nolog / overwrite Logging diagnose system raid [option] RAID information status, hwinfo, alarms Log Forwarding diagnose system disk [option] Disk information info, health, errors, attributes CHEATSHEET config system log-forward edit log-aggregation <id> For virtual machines: provides a list of Forwarding logs to FortiAnalyzer / execute lvm info available disks aggregation-client set mode Syslog / CEF <realtime, execute lvm extend <disk nr.> For virtual machines: Add disk FORTIANALYZER FOR 6.0 aggregation, disable> config system Configure the FortiAnalyzer that receives © BOLL Engineering AG, FortiAnalyzer Cheat Sheet Version 1.1 / 08.02.2019 log-forward-service logs ADOM set accept-aggregation enable ADOM operation Log Backup config system global ADOM settings set adom-status [en/dis] Enable or disable ADOM mode General execute backup logs config system global Set ADOM mode to normal or advanced / Default device information <device name | all> for VDOMs) <ftp | sftp | scp> <server ip> Backup logs to external storage set adom-mode [normal/advanced] -

Introduction to UNIX at MSI June 23, 2015 Presented by Nancy Rowe

Introduction to UNIX at MSI, June 23, 2015. Presented by Nancy Rowe, The Minnesota Supercomputing Institute for Advanced Computational Research. www.msi.umn.edu/tutorial/ © 2015 Regents of the University of Minnesota. All rights reserved.

Overview
• UNIX Overview
• Logging into MSI

Frequently Asked Questions
msi.umn.edu > Resources > FAQ
Website will be updated soon

What's the difference between Linux and UNIX?
The terms can be used interchangeably

UNIX
• UNIX is the operating system of choice for engineering and scientific workstations
• Originally developed in the late 1960s
• Unix is flexible, secure and based on open standards
• Programs are often designed "to do one simple thing right"
• Unix provides ways for interconnecting these simple programs to work together and perform more complex tasks

Getting Started
• MSI account
• Service Units required to access MSI HPC systems
• Open a terminal while sitting at the machine
• A shell provides an interface for the user to interact with the operating system
• BASH is the default shell at MSI

Bastion Host
• login.msi.umn.edu
• Connect to bastion host before connecting to HPC systems
• Cannot run software on bastion host (login.msi.umn.edu)

Chapter 19 RECOVERING DIGITAL EVIDENCE from LINUX SYSTEMS

Chapter 19: RECOVERING DIGITAL EVIDENCE FROM LINUX SYSTEMS

Philip Craiger

In: Advances in Digital Forensics

Abstract: As Linux-kernel-based operating systems proliferate there will be an inevitable increase in Linux systems that law enforcement agents must process in criminal investigations. The skills and expertise required to recover evidence from Microsoft-Windows-based systems do not necessarily translate to Linux systems. This paper discusses digital forensic procedures for recovering evidence from Linux systems. In particular, it presents methods for identifying and recovering deleted files from disk and volatile memory, identifying notable and Trojan files, finding hidden files, and finding files with renamed extensions. All the procedures are accomplished using Linux command line utilities and require no special or commercial tools.

Keywords: Digital evidence, Linux system forensics

1. Introduction

Linux systems will be increasingly encountered at crime scenes as Linux increases in popularity, particularly as the OS of choice for servers. The skills and expertise required to recover evidence from a Microsoft-Windows-based system, however, do not necessarily translate to the same tasks on a Linux system. For instance, the Microsoft NTFS, FAT, and Linux EXT2/3 file systems work differently enough that understanding one tells little about how the other functions. In this paper we demonstrate digital forensics procedures for Linux systems using Linux command line utilities. The ability to gather evidence from a running system is particularly important as evidence in RAM may be lost if a forensics first responder does not prioritize the collection of live evidence. The forensic procedures discussed include methods for identifying and recovering deleted files from RAM and magnetic media, identifying notable files and Trojans, and finding hidden files and renamed files (files with renamed extensions).

Your Performance Task Summary Explanation

Lab Report: 11.2.5 Manage Files

Your Performance
Your Score: 0 of 3 (0%)
Pass Status: Not Passed
Elapsed Time: 6 seconds
Required Score: 100%

Task Summary
Actions you were required to perform:
- Compress the D:\Graphics folder
  - Set the Compressed attribute
  - Apply the changes to all folders and files
- Hide the D:\Finances folder
- Set Read-only on files
  - Set read-only on 2017report.xlsx
  - Set read-only on 2018report.xlsx
  - Do not set read-only for the 2019report.xlsx file

Explanation
In this lab, your task is to complete the following:
- Compress the D:\Graphics folder and all of its contents.
- Hide the D:\Finances folder.
- Make the following files Read-only:
  - D:\Finances\2017report.xlsx
  - D:\Finances\2018report.xlsx

Complete this lab as follows:
1. Compress a folder as follows:
   a. From the taskbar, open File Explorer.
   b. Maximize the window for easier viewing.
   c. In the left pane, expand This PC.
   d. Select Data (D:).
   e. Right-click Graphics and select Properties.
   f. On the General tab, select Advanced.
   g. Select Compress contents to save disk space.
   h. Click OK.
   i. Click OK.
   j. Make sure Apply changes to this folder, subfolders and files is selected.
   k. Click OK.
2. Hide a folder as follows:
   a. Right-click Finances and select Properties.
   b. Select Hidden.
   c. Click OK.
3. Set files to Read-only as follows:
   a. Double-click Finances to view its contents.
   b. Right-click 2017report.xlsx and select Properties.
   c. Select Read-only.
   d. Click OK.
   e.

LAB MANUAL for Computer Network

LAB MANUAL for Computer Network

CSE-310 F Computer Network Lab
L T P: - - 3
Class Work: 25 Marks
Exam: 25 Marks
Total: 50 Marks

This course provides students with hands-on training in the design, troubleshooting, modeling and evaluation of computer networks. Students experiment in a real test-bed networking environment and learn about network design and troubleshooting topics and tools such as network addressing, Address Resolution Protocol (ARP), basic troubleshooting tools (e.g. ping, ICMP), IP routing (e.g. RIP), route discovery (e.g. traceroute), TCP and UDP, IP fragmentation and many others. Students are also introduced to network modeling and simulation, and have the opportunity to build some simple networking models using the tool and to run simulations that help them evaluate their design approaches and expected network performance.

S.No / Experiment
1. Study of different types of network cables, and practical implementation of the cross-wired cable and straight-through cable using a crimping tool.
2. Study of network devices in detail.
3. Study of network IP.
4. Connect the computers in a Local Area Network.
5. Study of basic network commands and network configuration commands.
6. Configure a network topology using Packet Tracer software.
7. Configure a network topology using Packet Tracer software.
8. Configure a network using a Distance Vector Routing protocol.
9. Configure a network using a Link State Routing protocol.

Hardware and Software Requirement
Hardware Requirement: RJ-45 connector, crimping tool, twisted pair cable
Software Requirement: Command Prompt and Packet Tracer

EXPERIMENT-1
Aim: Study of different types of network cables, and practical implementation of the cross-wired cable and straight-through cable using a crimping tool.

Deviceinstaller User Guide

Device Installer User Guide
Part Number 900-325, Revision C, 03/18

Table of Contents
1. Overview
2. Devices
   - Choose the Network Adapter for Communication
   - Search for All Devices on the Network
   - Change Views
   - Add a Device to the List
   - View Device Details
   - Device Lists
     - Save the Device List
     - Open the Device List
     - Print the Device List

Chapter 10 SHELL Substitution and I/O Operations

Chapter 10 SHELL Substitution and I/O Operations

10.1 Command Substitution

Command substitution is the mechanism by which the shell runs a given set of commands and then substitutes their output in place of the commands.

Syntax: Command substitution is performed when a command is given as:

    `command`

When performing command substitution, make sure that you are using the backquote, not the single quote character.

Example: Command substitution is generally used to assign the output of a command to a variable. Each of the following examples demonstrates command substitution:

    #!/bin/bash
    DATE=`date`
    echo "Date is $DATE"
    USERS=`who | wc -l`
    echo "Logged in user are $USERS"
    UP=`date ; uptime`
    echo "Uptime is $UP"

This will produce the following result:

    Date is Thu Jul 2 03:59:57 MST 2009
    Logged in user are 1
    Uptime is Thu Jul 2 03:59:57 MST 2009 03:59:57 up 20 days, 14:03, 1 user, load avg: 0.13, 0.07, 0.15

10.2 Shell Input/Output Redirections

Most Unix system commands take input from your terminal and send the resulting output back to your terminal. A command normally reads its input from a place called standard input, which happens to be your terminal by default. Similarly, a command normally writes its output to standard output, which is also your terminal by default.

Output Redirection: The output from a command normally intended for standard output can easily be diverted to a file instead. This capability is known as output redirection. If the notation > file is appended to any command that normally writes its output to standard output, the output of that command is written to file instead of your terminal. Consider the following who command, which redirects its complete output to the users file.
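The two mechanisms described in this excerpt, command substitution and output redirection, can be tried together in a short script. The following is a minimal sketch rather than part of the original chapter: it uses the `$(...)` form, which POSIX shells accept as an equivalent of the backquote syntax, and the variable names `COUNT`, `OUTFILE` and `SAVED`, as well as the use of `mktemp` for a scratch file, are assumptions made for illustration.

```shell
#!/bin/sh
# Command substitution: the shell runs the pipeline and substitutes its output.
# $(...) is the POSIX equivalent of the backquote form `...`.
# tr strips the padding that some wc implementations print before the number.
COUNT=$(printf 'a\nb\nc\n' | wc -l | tr -d ' ')
echo "Line count is $COUNT"

# Output redirection: '>' sends standard output to a file instead of the terminal.
# OUTFILE is a scratch file created for this example only (assumed helper: mktemp).
OUTFILE=$(mktemp)
echo "Line count is $COUNT" > "$OUTFILE"

# Read the file back with command substitution to confirm what was written.
SAVED=$(cat "$OUTFILE")
echo "File contains: $SAVED"

# Remove the scratch file.
rm -f "$OUTFILE"
```

Nothing from the second `echo` appears on the terminal, because its output goes to the file; the text only becomes visible when the file is read back.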

The Linux Command Line Presentation to Linux Users of Victoria

The Linux Command Line
Presentation to Linux Users of Victoria Beginners Workshop, August 18, 2012
http://levlafayette.com

What Is The Command Line?

1.1 A text-based user interface that provides an environment to access the shell, which interfaces with the kernel, which is the lowest abstraction layer to system resources (e.g., processors, I/O). Examples include CP/M, MS-DOS, and various UNIX command line interfaces.

1.2 Linux is the kernel; GNU is a typical suite of commands, utilities, and applications. The Linux kernel may be accessed by many different shells, e.g., the original UNIX shell (sh), the TENEX C shell (tcsh), the Korn shell (ksh), and, explored in this presentation, the Bourne-Again Shell (bash).

1.3 The command line interface can be contrasted with the graphical user interface (GUI). A GUI typically consists of a window, icon, menu, pointing-device (WIMP) suite, which is popular among casual users. Examples include MS-Windows and the X Window System.

1.4 A critical difference worth noting is that in UNIX-derived systems (such as Linux and Mac OS), the GUI is an application launched from the command-line interface, whereas in operating systems like contemporary versions of MS-Windows, the GUI is core and the command prompt is a native MS-Windows application.

Why Use The Command Line?

2.1 The command line uses significantly fewer resources to carry out the same task; it requires less processor power, less memory, less hard-disk space, etc. Thus, it is preferred on systems where performance is considered critical, e.g., supercomputers and embedded systems.

Bash Guide for Beginners

Bash Guide for Beginners
Machtelt Garrels
Garrels BVBA <tille wants no spam _at_ garrels dot be>
Version 1.11, last updated 20081227 Edition

Table of Contents
Introduction
1. Why this guide?
2. Who should read this book?
3. New versions, translations and availability
4. Revision History
5. Contributions
6. Feedback
7. Copyright information
8. What do you need?
9. Conventions used in this

Command-Line IP Utilities This Document Lists Windows Command-Line Utilities That You Can Use to Obtain TCP/IP Configuration Information and Test IP Connectivity

Guide to TCP/IP: IPv6 and IPv4, 5th Edition, ISBN 978-13059-4695-8 (Pyles, Carrell, and Tittel)

Command-Line IP Utilities

This document lists Windows command-line utilities that you can use to obtain TCP/IP configuration information and test IP connectivity. Command parameters and uses are listed for the following utilities in Tables 1 through 9:

■ Arp
■ Ipconfig
■ Netsh
■ Netstat
■ Pathping
■ Ping
■ Route
■ Tracert

ARP

The Arp utility reads and manipulates local ARP tables (data link address-to-IP address tables).

Syntax

    arp -s inet_addr eth_addr [if_addr]
    arp -d inet_addr [if_addr]
    arp -a [inet_addr] [-N if_addr] [-v]

Table 1: ARP command parameters and uses

| Parameter | Description |
| --- | --- |
| -a or -g | Displays current entries in the ARP cache. If inet_addr is specified, the IP and data link address of the specified computer appear. If more than one network interface uses ARP, entries for each ARP table appear. |
| inet_addr | Specifies an Internet address. |
| -N if_addr | Displays the ARP entries for the network interface specified by if_addr. |
| -v | Displays the ARP entries in verbose mode. |
| -d | Deletes the host specified by inet_addr. |
| -s | Adds the host and associates the Internet address inet_addr with the data link address eth_addr. The physical address is given as six hexadecimal bytes separated by hyphens. The entry is permanent. |
| eth_addr | Specifies a physical address. |
| if_addr | If present, this specifies the Internet address of the interface whose address translation table should be modified. If not present, the first applicable interface will be used. |

IPCONFIG

The Ipconfig utility displays and modifies IP address configuration information.

Traceroute Is One of the Key Tools Used for Network Troubleshooting and Scouting

1 Introduction

Traceroute is one of the key tools used for network troubleshooting and scouting. It has been available since networking's early days. Because traceroute is based on TTL header modification, it crafts its own network packets. The goal of this assignment is to re-implement a traceroute with cutting-edge techniques designed to improve its scouting capabilities and bypass SPI firewalls. In this assignment you will learn about packet injection and sniffing. The assignment also teaches more subtle topics such as network latency, packet filtering, and QoS.

1.1 Instructions

This project has to be completed sequentially, as each part depends on the previous ones. The required coding language is C. You should consider using libnet [1] and libpcap [2]; however, using raw sockets is permitted as well. Note that the exercises become progressively more difficult, with Exercise 5 likely to consume the greatest amount of effort.

1.2 Submission and Grading

To compile your project, the script will use the command make, executed in the project directory. It will then launch our tests using an executable named "traceng", so you have to make sure your makefile produces an executable with this name in the same project directory. Make sure that the command line options and the output format are correctly implemented, as they will be used by our script during the grading process. Specific examples of how we are going to test your executable are provided later in this document, for each exercise. Your project directory should also contain a file called README, which is a text file describing the highlights of your solution to each exercise: anything special that you did, information required in the specific exercise, the most interesting thing you learned in the exercise, and anything else we need to take into account.
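As a rough illustration of the TTL mechanism that traceroute relies on, the shell sketch below simulates route discovery without sending any packets. It is not the assignment's solution, which requires C and real packet injection. The route in `ROUTE` (documentation-range addresses) and the function names `probe` and `trace` are assumptions invented for this example. The underlying idea is that each router decrements the TTL by one and, when it reaches zero, discards the packet and reports back, so probes sent with TTL 1, 2, 3, ... reveal successive hops.

```shell
#!/bin/sh
# Simulated TTL-based route discovery; no packets are sent.
# ROUTE is a hypothetical path: two routers followed by the destination.
ROUTE="192.0.2.1 198.51.100.7 203.0.113.9"

# probe TTL: print which node would answer a probe sent with that TTL.
probe() {
  ttl=$1
  set -- $ROUTE              # positional parameters now hold the route
  while [ $# -gt 1 ]; do     # every entry except the last is a router
    ttl=$((ttl - 1))         # each router decrements the TTL by one
    if [ "$ttl" -eq 0 ]; then
      echo "$1 time-exceeded"  # TTL expired here: this router reports back
      return
    fi
    shift
  done
  echo "$1 reached"          # the TTL was large enough to reach the destination
}

# trace MAX_TTL: probe with TTL 1, 2, ... until the destination answers.
# Returns 0 if the destination was reached, 1 if MAX_TTL was too small.
trace() {
  max_ttl=$1
  n=1
  while [ "$n" -le "$max_ttl" ]; do
    reply=$(probe "$n")
    echo "$n ${reply% *}"    # print the hop number and the answering address
    case $reply in
      *reached) return 0 ;;
    esac
    n=$((n + 1))
  done
  return 1
}

trace 30
```

A real implementation replaces `probe` with code that sends a packet carrying the chosen TTL and listens for the ICMP Time Exceeded reply; the loop in `trace` stays essentially the same.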

Lesson E19 En. Internet Troubleshooting, Disturbances, Maintenance

Leonardo da Vinci Programme – Project RO/03/B/P/PP175006

LESSON E19_EN. INTERNET TROUBLESHOOTING, DISTURBANCES, MAINTENANCE.

Parent Entity: IPA SA, Bucharest, Romania, 167 bis, Calea Floreasca; Fax: + 40 21 316 16 20

Authors: Gheorghe Mincu Sandulescu, University Professor Dr., IPA SA, Bucharest, Romania, 167 bis, Calea Floreasca; Mariana Bistran, Principal Researcher, IPA SA, Bucharest, Romania, 167 bis, Calea Floreasca, e-mail: [email protected].

Consultations: Every working day between 9.00 a.m. and 12.00 p.m.

After studying this lesson, you will acquire the following knowledge:
- Understanding the troubleshooting methodology and procedures.
- Ethical, economic and managerial aspects of troubleshooting activities.
- Essential diagnosis tools for troubleshooting and their mode of use.
- The control of connectivity through the use of powerful and simple-to-apply troubleshooting tools.
- The use of the Microsoft ©®WINDOWS environment for troubleshooting.
- The use of elements from the Unix / Linux environment for troubleshooting.

CONTENT OF THE LESSON
1. TROUBLESHOOTING PROCEDURES.
2. UNIX UTILITIES AND SYSTEM FILES RELATED TO NETWORKING AND TROUBLESHOOTING.
3. DIAGNOSIS TOOLS AND UTILITIES IN MICROSOFT ®WINDOWS.
4. PATHPING MICROSOFT ®WINDOWS DIAGNOSIS TOOL FOR TROUBLESHOOTING CONNECTIVITY.
5. THE Netstat DIAGNOSIS TOOL. MICROSOFT ®WINDOWS
6. OTHER DIAGNOSIS TOOLS. MICROSOFT ®WINDOWS

LEARNING OBJECTIVES: After learning this lesson you will be able to:
- apply the troubleshooting methodology and procedures;
- respect the ethical constraints and take into consideration the economic and managerial aspects of troubleshooting activities;
- acquire the information necessary for troubleshooting actions within your specific activities, and apply the troubleshooting tools;
- control connectivity through the use of powerful and simple-to-apply troubleshooting tools.