PARSING-Brief Study-Tanya Sharma, Sumit Das & Vishal Bhalla

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Parser Tables for Non-LR(1) Grammars with Conflict Resolution Joel E

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector Science of Computer Programming 75 (2010) 943–979 Contents lists available at ScienceDirect Science of Computer Programming journal homepage: www.elsevier.com/locate/scico The IELR(1) algorithm for generating minimal LR(1) parser tables for non-LR(1) grammars with conflict resolution Joel E. Denny ∗, Brian A. Malloy School of Computing, Clemson University, Clemson, SC 29634, USA article info a b s t r a c t Article history: There has been a recent effort in the literature to reconsider grammar-dependent software Received 17 July 2008 development from an engineering point of view. As part of that effort, we examine a Received in revised form 31 March 2009 deficiency in the state of the art of practical LR parser table generation. Specifically, LALR Accepted 12 August 2009 sometimes generates parser tables that do not accept the full language that the grammar Available online 10 September 2009 developer expects, but canonical LR is too inefficient to be practical particularly during grammar development. In response, many researchers have attempted to develop minimal Keywords: LR parser table generation algorithms. In this paper, we demonstrate that a well known Grammarware Canonical LR algorithm described by David Pager and implemented in Menhir, the most robust minimal LALR LR(1) implementation we have discovered, does not always achieve the full power of Minimal LR canonical LR(1) when the given grammar is non-LR(1) coupled with a specification for Yacc resolving conflicts. We also detail an original minimal LR(1) algorithm, IELR(1) (Inadequacy Bison Elimination LR(1)), which we have implemented as an extension of GNU Bison and which does not exhibit this deficiency. -

Chapter 4 Syntax Analysis

Chapter 4 Syntax Analysis By Varun Arora Outline Role of parser Context free grammars Top down parsing Bottom up parsing Parser generators By Varun Arora The role of parser token Source Lexical Parse tree Rest of Intermediate Parser program Analyzer Front End representation getNext Token Symbol table By Varun Arora Uses of grammars E -> E + T | T T -> T * F | F F -> (E) | id E -> TE’ E’ -> +TE’ | Ɛ T -> FT’ T’ -> *FT’ | Ɛ F -> (E) | id By Varun Arora Error handling Common programming errors Lexical errors Syntactic errors Semantic errors Lexical errors Error handler goals Report the presence of errors clearly and accurately Recover from each error quickly enough to detect subsequent errors Add minimal overhead to the processing of correct progrms By Varun Arora Error-recover strategies Panic mode recovery Discard input symbol one at a time until one of designated set of synchronization tokens is found Phrase level recovery Replacing a prefix of remaining input by some string that allows the parser to continue Error productions Augment the grammar with productions that generate the erroneous constructs Global correction Choosing minimal sequence of changes to obtain a globally least-cost correction By Varun Arora Context free grammars Terminals Nonterminals expression -> expression + term Start symbol expression -> expression – term productions expression -> term term -> term * factor term -> term / factor term -> factor factor -> (expression) factor -> id By Varun Arora Derivations Productions are treated as -

Unit-5 Parsers

UNIT-5 PARSERS LR Parsers: • The most powerful shift-reduce parsing (yet efficient) is: • LR parsing is attractive because: – LR parsing is most general non-backtracking shift-reduce parsing, yet it is still efficient. – The class of grammars that can be parsed using LR methods is a proper superset of the class of grammars that can be parsed with predictive parsers. LL(1)-Grammars ⊂ LR(1)-Grammars – An LR-parser can detect a syntactic error as soon as it is possible to do so a left-to-right scan of the input. – covers wide range of grammars. • SLR – simple LR parser • LR – most general LR parser • LALR – intermediate LR parser (look-head LR parser) • SLR, LR and LALR work same (they used the same algorithm), only their parsing tables are different. LR Parsing Algorithm: A Configuration of LR Parsing Algorithm: A configuration of a LR parsing is: • Sm and ai decides the parser action by consulting the parsing action table. (Initial Stack contains just So ) • A configuration of a LR parsing represents the right sentential form: X1 ... Xm ai ai+1 ... an $ Actions of A LR-Parser: 1. shift s -- shifts the next input symbol and the state s onto the stack ( So X1 S1 ... Xm Sm, ai ai+1 ... an $ ) è ( So X1 S1 ..Xm Sm ai s, ai+1 ...an $ ) 2. reduce Aβ→ (or rn where n is a production number) – pop 2|β| (=r) items from the stack; – then push A and s where s=goto[sm-r,A] ( So X1 S1 ... Xm Sm, ai ai+1 .. -

Bottom-Up Parsing Swarnendu Biswas

CS 335: Bottom-up Parsing Swarnendu Biswas Semester 2019-2020-II CSE, IIT Kanpur Content influenced by many excellent references, see References slide for acknowledgements. Rightmost Derivation of 푎푏푏푐푑푒 푆 → 푎퐴퐵푒 Input string: 푎푏푏푐푑푒 퐴 → 퐴푏푐 | 푏 푆 → 푎퐴퐵푒 퐵 → 푑 → 푎퐴푑푒 → 푎퐴푏푐푑푒 → 푎푏푏푐푑푒 푆 푟푚 푆 푟푚 푆 푟푚 푆 푟푚 푆 푎 퐴 퐵 푒 푎 퐴 퐵 푒 푎 퐴 퐵 푒 푎 퐴 퐵 푒 푑 퐴 푏 푐 푑 퐴 푏 푐 푑 푏 CS 335 Swarnendu Biswas Bottom-up Parsing Constructs the parse tree starting from the leaves and working up toward the root 푆 → 푎퐴퐵푒 Input string: 푎푏푏푐푑푒 퐴 → 퐴푏푐 | 푏 푆 → 푎퐴퐵푒 푎푏푏푐푑푒 퐵 → 푑 → 푎퐴푑푒 → 푎퐴푏푐푑푒 → 푎퐴푏푐푑푒 → 푎퐴푑푒 → 푎푏푏푐푑푒 → 푎퐴퐵푒 → 푆 reverse of rightmost CS 335 Swarnendu Biswas derivation Bottom-up Parsing 푆 → 푎퐴퐵푒 Input string: 푎푏푏푐푑푒 퐴 → 퐴푏푐 | 푏 푎푏푏푐푑푒 퐵 → 푑 → 푎퐴푏푐푑푒 → 푎퐴푑푒 → 푎퐴퐵푒 → 푆 푎푏푏푐푑푒⇒ 푎 퐴 푏 푐 푑 푒 ⇒ 푎 퐴 푑 푒 ⇒ 푎 퐴 퐵 푒 ⇒ 푆 푏 퐴 푏 푐 퐴 푏 푐 푑 푎 퐴 퐵 푒 푏 푏 퐴 푏 푐 푑 푏 CS 335 Swarnendu Biswas Reduction • Bottom-up parsing reduces a string 푤 to the start symbol 푆 • At each reduction step, a chosen substring that is the rhs (or body) of a production is replaced by the lhs (or head) nonterminal Derivation 푆 훾0 훾1 훾2 … 훾푛−1 훾푛 = 푤 푟푚 푟푚 푟푚 푟푚 푟푚 푟푚 Bottom-up Parser CS 335 Swarnendu Biswas Handle • Handle is a substring that matches the body of a production • Reducing the handle is one step in the reverse of the rightmost derivation Right Sentential Form Handle Reducing Production 퐸 → 퐸 + 푇 | 푇 ∗ 퐹 → 푇 → 푇 ∗ 퐹 | 퐹 id1 id2 id1 id 퐹 → 퐸 | id 퐹 ∗ id2 퐹 푇 → 퐹 푇 ∗ id2 id2 퐹 → id 푇 ∗ 퐹 푇 ∗ 퐹 푇 → 푇 ∗ 퐹 푇 푇 퐸 → 푇 CS 335 Swarnendu Biswas Handle Although 푇 is the body of the production 퐸 → 푇, -

Compiler Construction

Compiler construction PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 10 Dec 2011 02:23:02 UTC Contents Articles Introduction 1 Compiler construction 1 Compiler 2 Interpreter 10 History of compiler writing 14 Lexical analysis 22 Lexical analysis 22 Regular expression 26 Regular expression examples 37 Finite-state machine 41 Preprocessor 51 Syntactic analysis 54 Parsing 54 Lookahead 58 Symbol table 61 Abstract syntax 63 Abstract syntax tree 64 Context-free grammar 65 Terminal and nonterminal symbols 77 Left recursion 79 Backus–Naur Form 83 Extended Backus–Naur Form 86 TBNF 91 Top-down parsing 91 Recursive descent parser 93 Tail recursive parser 98 Parsing expression grammar 100 LL parser 106 LR parser 114 Parsing table 123 Simple LR parser 125 Canonical LR parser 127 GLR parser 129 LALR parser 130 Recursive ascent parser 133 Parser combinator 140 Bottom-up parsing 143 Chomsky normal form 148 CYK algorithm 150 Simple precedence grammar 153 Simple precedence parser 154 Operator-precedence grammar 156 Operator-precedence parser 159 Shunting-yard algorithm 163 Chart parser 173 Earley parser 174 The lexer hack 178 Scannerless parsing 180 Semantic analysis 182 Attribute grammar 182 L-attributed grammar 184 LR-attributed grammar 185 S-attributed grammar 185 ECLR-attributed grammar 186 Intermediate language 186 Control flow graph 188 Basic block 190 Call graph 192 Data-flow analysis 195 Use-define chain 201 Live variable analysis 204 Reaching definition 206 Three address -

Pondicherry Engineering College Puducherry – 605 014

1 PONDICHERRY ENGINEERING COLLEGE PUDUCHERRY – 605 014 Bachelor of Technology B.Tech., INFORMATION TECHNOLOGY Regulations, Curriculum & Syllabus Effective from the academic year 2013-2014 of PONDICHERRY UNIVERSITY PUDUCHERRY – 605 014. 2 PONDICHERRY UNIVERSITY BACHELOR OF TECHNOLOGY PROGRAMMES (EIGHT SEMESTERS) REGULATIONS 1. Conditions for Admission: (a ) Candidates for admission to the first semester of the 8 semester B.Tech Degree programme should be required to have passed : The Higher Secondary Examination of the (10+2) curriculum (Academic Stream) prescribed by the Government of Tamil Nadu or any other examination equivalent there to with minimum of 45% marks (40% marks for OBC and SC/ST candidates) in aggregate of subjects – Mathematics, Physics and any one of the following optional subjects: Chemistry / Biotechnology/ Computer Science / Biology (Botany & Zoology) or an Examination of any University or Authority recognized by the Executive Council of the Pondicherry University as equivalent thereto. (b) For Lateral entry in to third semester of the eight semester B.Tech programme : The minimum qualification for admission is a pass in three year diploma or four year sandwich diploma course in engineering / technology with a minimum of 45% marks ( 40% marks for OBC and SC/ST candidates) in aggregate in the subjects covered from 3 rd to final semester or a pass in any B.Sc. course with mathematics as one of the subjects of study in XII standard with a minimum of 45% marks ( 40% marks for OBC and a mere pass for SC/ST candidates) in aggregate in main and ancillary subjects excluding language subjects. The list of diploma programs approved for admission for each of the degree programs is given in Annexure A . -

Characteristic Parsing: a Framework for Producing Compact Deterministic Parsers, I1"

JOURNAL OF COMPUTER AND SYSTEM Sel1~'.Na)E.q 14, 318--343 (1977) Characteristic Parsing: A Framework for Producing Compact Deterministic Parsers, I1" MATTHEW M. GEI.LER* AND MICHAEL A. IIARRISON Computer Science Division, University of California at Berkeley, Berkeley, California 94720 Received September 22, 1975; revised August 4, 1976 This paper applies the theory of characteristic parsing to obtain very small parsers in three special cases. The first application is for strict deterministic grammars. A charac- teristic parser is constructed and its properties are exhibited. It is as fast as an LI,~ parser. It accepts every string in the language and rejects every string not in the language although it rejects later than a canonical LR parser. The parser is shown to be exponentially smaller than an LR parser (or SLR or LALR parser) on a sample family of grammars. General size bounds are obtained. A similar analysis is carried through for SLR and LAI,R grammars. INTRODUCTION In [8], which is essentially the first part of this work, characteristic parsing was intro- duced and a basic theory was developed. The fundamental idea of this framework is to regard an LR parser as a table-driven device. Since LR-like tables are derived from sets of items, a parameterized algorithm is given in [8] for generating sets of items based on "characteristics" of the underlying grammar. This technique provides parsers that work as fast as LR parsers; every string in the original language is accepted by the parser; every string not in the original language is rejected by the parser (although perhaps later). -

Regulation 2018 Revision I (I - VIII Semester)

CURRICULUM & SYLLABUS FOR B.TECH COMPUTER SCIENCE AND ENGINEERING (Based on Outcome Based Education) Regulation 2018 Revision I (I - VIII Semester) TABLE OF CONTENTS S.No Contents Page No. 1 Institute Vision and Mission 3 2 Department Vision and Mission 4 3 Programme Educational Objectives (PEO) 5 4 Programme Outcome (PO) 6 5 Graduate Attributes 7 6 B. Tech. (Computer Science and Engineering ) – Curriculum 9 7 B. Tech. (Computer Science and Engineering ) – Syllabus 14 ii PERIYAR MANIAMMAI INSTITUTE OF SCIENCE AND TECHNOLOGY Our University is committed to the following Vision, Mission and core values, which guide us in carrying out our Civil Engineering Department mission and realizing our vision: INSTITUTION VISION To be a University of global dynamism with excellence in knowledge and innovation ensuring social responsibility for creating an egalitarian society. INSTITUTION MISSION UM1 Offering well balanced programmes with scholarly faculty and state-of-art facilities to impart high level of knowledge. UM2 Providing student - centered education and foster their growth in critical thinking, creativity, entrepreneurship, problem solving and collaborative work. UM3 Involving progressive and meaningful research with concern for sustainable development. UM4 Enabling the students to acquire the skills for global competencies. UM5 Inculcating Universal values, Self respect, Gender equality, Dignity and Ethics. INSTITUTION CORE VALUES • Student – centric vocation • Academic excellence • Social Justice, equity, equality, diversity, empowerment, -

5. Bottom-Up Parsing

1 5. Bottom-Up Parsing • LR methods (Left-to-right, Rightmost derivation) – SLR, Canonical LR, LALR • Other special cases: – Shift-reduce parsing – Operator-precedence parsing 2 Shift-Reduce Parsing Grammar: Reducing a sentence: Shift-reduce corresponds S a A B e a b b c d e to a rightmost derivation: A A b c | b a A b c d e S rm a A B e B d a A de rm a A d e a A B e rm a A b c d e These match S rm a b b c d e production’s right-hand sides S A A A A A A B A B a b b c d e a b b c d e a b b c d e a b b c d e 3 Handles A handle is a substring of grammar symbols in a right-sentential form that matches a right-hand side of a production Grammar: a b b c d e S a A B e a A b c d e A A b c | b a A de Handle B d a A B e S a b b c d e a A b c d e NOT a handle, because a A A e further reductions will fail … ? (result is not a sentential form) 4 Stack Implementation of Shift-Reduce Parsing Stack Input Action $ id+id*id$ shift $id +id*id$ reduce E id How to $E +id*id$ shift Grammar: resolve E E + E $E+ id*id$ shift $E+id *id$ conflicts? E E * E reduce E id $E+E *id$ shift (or reduce?) E ( E ) $E+E* id$ shift E id $E+E*id $ reduce E id $E+E*E $ reduce E E * E $E+E $ reduce E E + E Found handles $E $ accept to reduce 5 Conflicts • Shift-reduce and reduce-reduce conflicts are caused by – The limitations of the LR parsing method (even when the grammar is unambiguous) – Ambiguity of the grammar 6 Shift-Reduce Parsing: Shift-Reduce Conflicts Stack Input Action $… …$ … $…if E then S else…$ shift or reduce? Ambiguous grammar: S if E then S | if E then S else S | other Resolve in favor of shift, so else matches closest if 7 Shift-Reduce Parsing: Reduce-Reduce Conflicts Stack Input Action $ aa$ shift $a a$ reduce A a or B a ? Grammar: C A B A a B a Resolve in favor of reducing A a, otherwise we’re stuck! 8 6. -

Cs6601 Distributed Systems Part A

VSB ENGINEERING COLLEGE, KARUR – 639 111 DEPARTMENT OF INFORMATION TECHNOLOGY Academic Year: 2017-18(EVEN Semester) CS6601 DISTRIBUTED SYSTEMS PART A UNIT-I INTRODUCTION 1. Define distributed system. A distributed system is a collection of independent computers that appears to its users as a single coherent system. A distributed system is one in which components located at networked communicate and coordinate their actions only by passing message. 2. List the characteristics of distributed system. Programs are executed concurrently There is no global time Components can fail independently 3. Mention the challenges in distributed system. Heterogeneity Openness Security Scalability Failure handling Concurrency Transparency 4. Define heterogeneity. The Internet enables users to access services and run applications over a heterogeneous collection of computers and networks. Heterogeneity (that is, variety and difference) applies to all of the following: Networks; Computer Hardware; Operating Systems; Programming Languages; Implementations By Different Developers. 5. Why do we need openness? The openness of a computer system is the characteristic that determines whether the system can be extended and reimplemented in various ways. The openness of distributed systems is determined primarily by the degree to which new resource-sharing services can be added and be made available for use by a variety of client programs. 6. Define scalability. Distributed systems operate effectively and efficiently at many different scales, ranging from a small intranet to the Internet. A system is described as scalable if it will remain effective when there is a significant increase in the number of resources and the number of users. 7. What are the types of transparencies? Various transparencies types are as follows, Access transparency Location transparency 1 Concurrency transparency Replication transparency Failure transparency Performance transparency Scaling transparency 8. -

IELR(1): Practical LR(1) Parser Tables for Non-LR(1) Grammars with Conflict Resolution

IELR(1): Practical LR(1) Parser Tables for Non-LR(1) Grammars with Conflict Resolution Joel E. Denny and Brian A. Malloy School of Computing Clemson University Clemson, SC 29634, USA {jdenny,malloy}@cs.clemson.edu ABSTRACT 1. INTRODUCTION There has been a recent effort in the literature to recon- Grammar-dependent software is omnipresent in software sider grammar-dependent software development from an en- development [18]. For example, compilers, document pro- gineering point of view. As part of that effort, we examine a cessors, browsers, import/export tools, and generative pro- deficiency in the state of the art of practical LR parser table gramming tools are used in software development in all phases. generation. Specifically, LALR sometimes generates parser These phases include comprehension, analysis, maintenance, tables that do not accept the full language that the gram- reverse-engineering, code manipulation, and visualization of mar developer expects, but canonical LR is too inefficient to the application program under study. However, construc- be practical. In response, many researchers have attempted tion of these tools relies on the correct recognition of the to develop minimal LR parser table generation algorithms. language constructs specified by the grammar. In this paper, we demonstrate that a well known algorithm Some aspects of grammar engineering are reasonably well described by David Pager and implemented in Menhir, the understood. For example, the study of grammars as defi- most robust minimal LR(1) implementation we have dis- nitions of formal languages, including the study of LL, LR, covered, does not always achieve the full power of canonical LALR, and SLR algorithms and the Chomsky hierarchy, LR(1) when the given grammar is non-LR(1) coupled with form an essential part of most computer science curricula. -

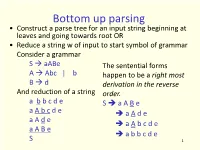

Bottom up Parsing

Bottom up parsing • Construct a parse tree for an input string beginning at leaves and going towards root OR • Reduce a string w of input to start symbol of grammar Consider a grammar S aABe The sentential forms A Abc | b happen to be a right most B d derivation in the reverse And reduction of a string order. a b b c d e S a A B e a A b c d e a A d e a A d e a A b c d e a A B e a b b c d e S 1 Shift reduce parsing • Split string being parsed into two parts – Two parts are separated by a special character “.” – Left part is a string of terminals and non terminals – Right part is a string of terminals • Initially the input is .w 2 Shift reduce parsing … • Bottom up parsing has two actions • Shift: move terminal symbol from right string to left string if string before shift is α.pqr then string after shift is αp.qr 3 Shift reduce parsing … • Reduce: immediately on the left of “.” identify a string same as RHS of a production and replace it by LHS if string before reduce action is αβ.pqr and Aβ is a production then string after reduction is αA.pqr 4 Example Assume grammar is E E+E | E*E | id Parse id*id+id Assume an oracle tells you when to shift / when to reduce String action (by oracle) .id*id+id shift id.*id+id reduce Eid E.*id+id shift E*.id+id shift E*id.+id reduce Eid E*E.+id reduce EE*E E.+id shift E+.id shift E+id.