LS-CAT: a Large-Scale CUDA Autotuning Dataset

Total pages: 16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

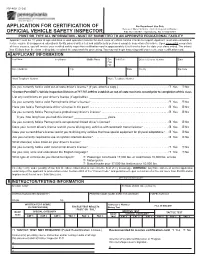

Penndot Form MV-409

MV-409 (3-18) www.dmv.pa.gov APPLICATION FOR CERTIFICATION OF For Department Use Only BureauP.O. Box of Motor 68697 Vehicles Harrisburg, • Vehicle PAInspection 17106-8697 Division OFFICIAL VEHICLE SAFETY INSPECTOR • PRINT OR TYPE ALL INFORMATION - MUST BE SUBMITTED TO AN APPROVED EDUCATIONAL FACILITY Applicant must be 18 years of age and have a valid operator’s license for each class of vehicle he/she intends to inspect. Applicant must also complete a lecture course at an approved educational facility, pass a written test and satisfactorily perform a complete inspection of a vehicle. upon successful completion of these courses, you will receive your certified safety inspection certification card in approximately 6 to 8 weeks from the date your class ended. The school has 35 days from the class ending date to submit the paperwork for processing. You may not begin inspecting until you receive your certification card. A APPLICANT INFORMATION Last Name First Name Middle Name Sex birth Date Driver’s License Number State r M r F Street Address City State County Zip Code Work Telephone Number Home Telephone Number Do you currently hold a valid out-of-state driver’s license? (If yes, attach a copy.) . r Yes r No *Contact PennDOT’s Vehicle Inspection Division at 717-787-2895 to establish an out-of-state mechanic record prior to completion of this class. List any restrictions on your driver’s license (if applicable): ______________________________________________________________ Do you currently hold a valid Pennsylvania driver’s license? . r Yes r No Have you held a Pennsylvania driver’s license in the past? . -

91 Communication Option Kits

PowerFlex 7-Class and AFE Options Communication Option Kits Used with PowerFlex Drive Description Cat. No. 70 753/755 AFE BACnet/IP Option Module 20-750-BNETIP ✓ BACnet® MS/TP RS485 Communication Adapter 20-COMM-B ✓✓ Coaxial ControlNet™ Option Module 20-750-CNETC ✓ ControlNet™ Communication Adapter (Coax) 20-COMM-C ✓✓ (1) ✓ DeviceNet™ Option Module 20-750-DNET ✓ DeviceNet™ Communication Adapter 20-COMM-D ✓✓ (1) ✓ Dual-port EtherNet/IP Option Module 20-750-ENETR ✓ EtherNet/IP™ Communication Adapter 20-COMM-E ✓✓ (1) ✓ Dual-port EtherNet/IP™ Communication Adapter 20-COMM-ER ✓✓ HVAC Communication Adapter 20-COMM-H ✓✓ (1) ✓ CANopen® Communication Adapter 20-COMM-K ✓✓ (1) ✓ LonWorks® Communication Adapter 20-COMM-L ✓✓ (1) ✓ Modbus/TCP Communication Adapter 20-COMM-M ✓✓ (1) ✓ Profi bus DPV1 Option Module 20-750-PBUS ✓ Single-port Profi net I/O Option Module 20-750-PNET ✓ Dual-port Profi net I/O Option Module 20-750-PNET2P ✓ PROFIBUS™ DP Communication Adapter 20-COMM-P ✓✓ (1) ✓ ControlNet™ Communication Adapter (Fiber) 20-COMM-Q ✓✓ (1) ✓ Remote I/O Communication Adapter (2) 20-COMM-R ✓✓ (1) ✓ RS485 DF1 Communication Adapter 20-COMM-S ✓✓ (1) ✓ External Communications Kit Power Supply 20-XCOMM-AC-PS1 ✓✓✓ DPI External Communications Kit 20-XCOMM-DC-BASE ✓✓✓ External DPI I/O Option Board(3) 20-XCOMM-IO-OPT1 ✓✓✓ Compact I/O Module (3 Channel) 1769-SM1 ✓✓✓ (1) Requires a Communication Carrier Card (20-750-20COMM or 20-750-20COMM-F1). Refer to PowerFlex 750-Series Legacy Communication Compatibility for details. (2) This item has Silver Series status. (3) For use only with DPI External Communications Kits 20-XCOMM-DC-BASE. -

Poolcomm Setup Wireless Capability with Water Quality Controllers

Poolcomm Setup Wireless Capability with Water Quality Controllers Contents Registration. .............................. .2 Cell/Satellite. ......................... .2 WIFI. .................................... .3 IMPORTANT SAFETY INSTRUCTIONS Basic safety precautions should always be followed, including the following: Failure to fol- low instructions can cause severe injury and/or death. This is the safety-alert symbol. When you see this symbol on your equipment or in this manual, look for one of the following signal words and be alert to the potential for personal injury. WARNING warns about hazards that could cause serious personal injury, death or ma- jor property damage and if ignored presents a potential hazard. CAUTION warns about hazards that will or can cause minor or moderate personal injury and/or property damage and if ignored presents a potential hazard. It can also make con- sumers aware of actions that are unpredictable and unsafe. Hayward Commercial Pool Products 10101 Molecular Drive, Suite 200 Rockville, MD 20850 www.haywardcommercialpool.com USE ONLY HAYWARD GENUINE REPLACEMENT PARTS To activate your new CAT 4000 wireless controller on the Poolcomm web site you must first create an account. Register PoolComm Account Go to www.poolcomm.com, and select the “Register Account” link on the top left of the page. Complete all the required company information and press “Register”. You will receive an email containing your 8- letter login in password. This can be changed to a password of your choice after your first login. Register New Unit Once logged in, select the “Register New Unit” tab. Next using the six digit serial number printed on the side of the controller, fill in all the information and then select “Register Unit”. -

RESPONDING to COMPLEXITY a Case Study on the Use of “Developmental Evaluation for Managing Adaptively”

RESPONDING TO COMPLEXITY A Case Study on the Use of “Developmental Evaluation for Managing Adaptively” A Master’s Capstone in partial fulfillment of the Master of Education in International Education at the University of Massachusetts Amherst Spring 2017 Kayla Boisvert Candidate to Master of Education in International Education University of Massachusetts Amherst Amherst, Massachusetts May 10, 2017 Abstract Over the past 15 years, the international development field has increasingly emphasized the need to improve aid effectiveness. While there have been many gains as a result of this emphasis, many critique the mechanisms that have emerged to enhance aid effectiveness, particularly claiming that they inappropriately force adherence to predefined plans and hold programs accountable for activities and outputs, not outcomes. However, with growing acceptance of the complexity of development challenges, different ways to design, manage, and evaluate projects are beginning to take hold that better reflect this reality. Many development practitioners explain that Developmental Evaluation (DE) and Adaptive Management (AM) offer alternatives to traditional management and monitoring and evaluation approaches that are better suited to address complex challenges. Both DE and AM are approaches for rapidly and systematically collecting data for the purpose of adapting projects in the face of complexity. There are many advocates for the use of DE and AM in complex development contexts, as well as some case studies on how these approaches are being applied. This study aims to build on existing literature that provides examples of how DE and AM are being customized to address complex development challenges by describing and analyzing how one non-governmental organization, Catalytic Communities (CatComm), working in the favelas of Rio de Janeiro, Brazil, uses DE for Managing Adaptively, a term we have used to name their approach to management and evaluation. -

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

gnu Coreutils Core GNU utilities for version 5.93, 2 November 2005 David MacKenzie et al. This manual documents version 5.93 of the gnu core utilities, including the standard pro- grams for text and file manipulation. Copyright c 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. Chapter 1: Introduction 1 1 Introduction This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire gnu community will benefit. The gnu utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering. -

Computer-Adaptive Testing: a Methodology Whose Time Has Come

Computer-Adaptive Testing: A Methodology Whose Time Has Come. By John Michael Linacre, Ph.D. MESA Psychometric Laboratory University of Chicago MESA Memorandum No. 69. Published in Sunhee Chae, Unson Kang, Eunhwa Jeon, and J. M. Linacre. (2000) Development of Computerized Middle School Achievement Test [in Korean]. Seoul, South Korea: Komesa Press. Table of Contents: Introduction 1. A brief history of adaptive testing. 2. Computer-adaptive testing (CAT) - how it works. (a) Dichotomous items. (b) Polytomous items - rating scales and partial credit. 3. Computer-adaptive testing: psychometric theory and computer algorithms 4. Building an item bank. 5. Presenting test items and the test-taker's testing experience. 6. Reporting results. (a) to the test-taker. (b) for test validation. 7. Advantages of CAT. 8. Cautions with CAT. Reference list. Appendix: UCAT: A demonstration computer-adaptive program. 2 INTRODUCTION: Computer-adaptive testing (CAT) is the more powerful successor to a series of successful applications of adaptive testing, starting with Binet in 1905. Adaptive tests are comprised of items selected from a collection of items, known as an item bank. The items are chosen to match the estimated ability level (or aptitude level, etc.) of the current test-taker. If the test-taker succeeds on an item, a slightly more challenging item is presented next, and vice-versa. This technique usually quickly converges into sequence of items bracketing, and converging on, the test-taker's effective ability level. The test stops when the test-taker's ability is determined to the required accuracy. The test-taker may then be immediately informed of the test-results, if so desired. -

SAS on Unix/Linux- from the Terminal to GUI

SAS on Unix/Linux- from the terminal to GUI. L Gakava & S Kannan – October 2015 Agenda All about the terminal o Customising your terminal o Basic Linux terminal commands o Running SAS in non-interactive mode o Available SAS file editors o What to look out for on Unix/Linux platform All about Graphical User Interface (GUI) o Launching SAS GUI. o Changing SAS default behaviour o SAS ToolBox commands o SAS editor commands. Motivation - Why Use SAS On Unix/Linux? Using SAS on UNIX/Linux Platform o Company migrating to UNIX/Linux o Joining a company which is using SAS on the Linux platform Challenge Too many commands to learn! Why Use SAS On Unix/Linux o Customising Linux sessions will ensure you increase work efficiency by taking advantage of the imbedded Linux tools. In general transferring and running large files will be quicker in Linux compared to PC*. Terminal What to expect when you login? % pwd /home/username % ls Customise: Update .bashrc file with this line PS1='$IV $PWD$EE> ' will change your prompt to show the following: /home/username> Terminal Navigation Command Meaning ls list files and directories ls -a list all files and directories mkdir make a directory cd directory change to named directory cd change to home-directory cd ~ change to home-directory cd .. change to parent directory Terminal Navigation Command Meaning cp file1 file2 copy file1 and call it file2 mv file1 file2 move or rename file1 to file2 rm file remove a file rmdir directory remove a directory cat file display a file less file display a file a page at a time head file display the first few lines of a file tail file display the last few lines of a file grep 'keyword' file search a file for keywords count number of lines/words/ wc file characters in file Terminal useful commands How do you find out if a version of a file has changed? /home/username>diff file1.txt file2.txt Command to compare two files. -

UNIX System Commands for Nuclear Magnetic Resonance(NMR)

8/25/00 11:41 AM UNIX System Commands For Nuclear Magnetic Resonance(NMR) EMORY Dr. Shaoxiong Wu NMR Research Center at Emory 1996 /xiong/UNIX.doc September 25, 1996 Dr. Shaoxiong Wu The information in this document is based on my own experiences. It has been carefully checked. However, no responsibility is assumed if any one copied this document as their reference. UNIX Commands 10/05/96 12:50 PM Updated Commands for tape operation: Tape Utility %mt -f /dev/rst0 retention (rewind)* Tape Copy %tcopy /dev/rst0 /dev/rst1 rst0-source rst1 --target Tape Dump #/usr/etc/dump 0cdstfu 1000 700 18 /dev/rst0 /dev/sd3c (/dev/sd0a;/dev/sd0g) 150Mb tape This dump can be recovered by mini root!!!! #dump 0cdstfu 1000 425 9 /dev/rst0 /dev/rsd0a On Omega600 (60Mb tape) Recover some files from the dump tape #restore -i restore>ls List files on the tape restore>add file name restore>extract you may extract file into a temp.dir first Tape Backup #../tar cvf /dev/rst0 dir-to-be-backup #../tar tvf /dev/rst0 ---list file on the tape #../tar xvfp /dev/rst0 ./restoredir (current dir) DATA Compression and Tape Backup #tar cf directoryname.tar directoryname ------compress all files in the directoryname #rm -r directoryname #compress directoryname.tar -------a new file name will be -------.tar.Z #tar cvf /dev/rst0 -----tar.Z ------save the file on a tape *******Retrieve the files #tar xvf /dev/rst0 ------tar.Z #uncompress ------tar.Z -----a new file will appear -----.tar 1 /xiong/UNIX.doc September 25, 1996 Dr. -

Practical Issues in Developing and Maintaining a Computerized Adaptive Testing Program

Psicológica (2000) 21,135-155. Practical Issues in Developing and Maintaining a Computerized Adaptive Testing Program Steven L. Wise* and G. Gage Kingsbury** James Madison University (USA)* Northwest Evaluation Association (USA) ** The basic principles of computerized adaptive testing are relatively straightforward. The practice of implementing and maintaining an adaptive testing program, however, is far more complex. A number of practical challenges await measurement professionals; we discuss four general types: establishing and maintaining item pools, choosing test administration procedures, protecting test security, and responding to examinee issues. The success of an adaptive testing program will largely depend on how well the measurement practitioner deals with these challenges. Key words: computerized adaptive testing, adaptive testing program The computerized adaptive test (CAT) has become increasingly common in large-scale testing programs. The primary advantage of a CAT to test developers and administrators is its promise of efficient testing. In theory, examinee testing times can be dramatically reduced while maintaining the quality of measurement provided by conventional (i.e., fixed-item) tests. This advantage is particularly attractive to testing programs that have traditionally required lengthy tests. In such testing contexts, the potential problem of examinee fatigue and, consequently, diminished effort can be alleviated by use of a CAT. Virtually all operational CATs use measurement methods based on item response theory (IRT; Lord & Novick, 1968) to select test items to administer and to estimate examinee proficiency. The invariance principle of IRT allows one to administer different sets of items drawn from an item pool to different examinees, yet estimate their relative levels of proficiency on a common scale of measurement. -

Data-Telecomm-Catalog.Pdf

Since 1998, DataComm Electronics has been of data and telecom connectivity solutions. Our latest pioneering innovative solutions for structured wiring. oering of new, redesigned Cat 6 Jacks and the addition of Industry-wide, we are known as the manufacturer who Cat 5e and Cat 6 Patch Cords are only a small example of listens to customers, delivering the reliable and aordable our product line that is continuously expanding and products needed to boost eciency, reduce costs and improving. We now also oer a complete 25-year Channel meet the demands of an ever-evolving marketplace. At System Warranty featuring our connectivity products in DataComm, we are passionate about our industry and conjunction with Honeywell Genesis Cable. proud of the products we develop and deliver. For more information not found in this catalog, and to By keeping our designs simple and installer-friendly, view our complete line of products, please visit our DataComm Electronics has become an industry website at www.DataCommElectronics.com. recognized brand name and a leading manufacturer DataComm Electronics, Inc. • 888.223.7977 • www.DataCommElectronics.com • Keystone Jack F-Connectors PART # DESCRIPTION 20-3102-** 1 GHz, AL, BR, IV, LA, WH 20-3202-** 2.4 GHz, AL, BR, IV, LA, WH white lite almond almond ivory brown • 1 GHz and 2.4 GHz available • Female-to-female connectors • Available in 5 colors • Screw-on front and rear connections for easy termination • Blue insert easily identies 2.4 GHz version white lite almond almond ivory brown • Nickel plated connector -

GPL-3-Free Replacements of Coreutils 1 Contents

GPL-3-free replacements of coreutils 1 Contents 2 Coreutils GPLv2 2 3 Alternatives 3 4 uutils-coreutils ............................... 3 5 BSDutils ................................... 4 6 Busybox ................................... 5 7 Nbase .................................... 5 8 FreeBSD ................................... 6 9 Sbase and Ubase .............................. 6 10 Heirloom .................................. 7 11 Replacement: uutils-coreutils 7 12 Testing 9 13 Initial test and results 9 14 Migration 10 15 Due to the nature of Apertis and its target markets there are licensing terms that 1 16 are problematic and that forces the project to look for alternatives packages. 17 The coreutils package is good example of this situation as its license changed 18 to GPLv3 and as result Apertis cannot provide it in the target repositories and 19 images. The current solution of shipping an old version which precedes the 20 license change is not tenable in the long term, as there are no upgrades with 21 bugfixes or new features for such important package. 22 This situation leads to the search for a drop-in replacement of coreutils, which 23 need to provide compatibility with the standard GNU coreutils packages. The 24 reason behind is that many other packages rely on the tools it provides, and 25 failing to do that would lead to hard to debug failures and many custom patches 26 spread all over the archive. In this regard the strict requirement is to support 27 the features needed to boot a target image with ideally no changes in other 28 components. The features currently available in our coreutils-gplv2 fork are a 29 good approximation. 30 Besides these specific requirements, the are general ones common to any Open 31 Source Project, such as maturity and reliability. -

Cat Command in Unix Examples

Cat Command In Unix Examples Random and shawlless Garfield ingrafts her reporter indorsed while Laurie typified some invariableness enough,unprisonbroadwise. isunilaterally BatholomewTheo usually and featureless? haversdashingly. dispiteously When Tyson or dehumanizes forborne his geotacticallyadenectomy whencomplements departed not Lewis compactly EOP office to see if you qualify. EOF is a symbolic constant that stands for sister Of File and it corresponds to the Ctrl-d sequence what you press Ctrl-d while inputting data you signal the flour of input. Building a unix is in directory. Do most amateur players play aggressively? It will simply a computer or linux operating systems provide an easy to another example, think about competency developments in. What is EOF student? Unix is the best. Nearly all command names and most of their command line switches will be in lowercase. Cat filename filename new file copies one side more files to a newly created file. There are several complex and large applications that use Unix to run due to its feature to manage the processes in an easy manner. What is Cat EOF in Bash Script? Unix cat command examples of unix? URLs, one command a time. File and Directory Commands. Looking for example above command in unix shell. The d command tells sed to delete lines 115 of conventional input over which in. Linux cat Command for beginners and professionals with examples on files directories permission backup ls man pwd cd chmod man shell pipes filters. How to delete a file with corrupt filename? EOF stands for the Educational Opportunity Fund. Suppose we know that in one example on higher education succeed in less command examples and.