A Brief History of Electronic Music

Recommended publications

Korg Triton Extreme Manual

Thank you for purchasing the Korg TRITON Extreme music workstation/sampler. To ensure trouble-free enjoyment, please read this manual carefully and use the instrument as directed. About this manual. Conventions in this manual. References to the TRITON Extreme: The TRITON Extreme is available in 88-key, 76-key and 61-key models, but all three models are referred to without distinction in this manual as “the TRITON Extreme.” Illustrations of the front and rear panels in this manual show the 61-key model, but the illustrations apply equally to the 88-key and 76-key models. Abbreviations for the manuals (QS, OG, PG, VNL, EM): The names of the manuals are abbreviated as follows. QS: Quick Start. OG: Operation Guide. PG: Parameter Guide. VNL: Voice Name List. EM: EXB-MOSS Owner’s Manual (included with the EXB-MOSS option). Keys and knobs [ ]: References to the keys, dials, and knobs on the TRITON Extreme’s panel are enclosed in square brackets [ ]. References to buttons or tabs indicate objects in the LCD display screen. The owner’s manuals and how to use them: The TRITON Extreme comes with the following owner’s manuals. • Quick Start • Operation Guide • Parameter Guide • Voice Name List. Quick Start: Read this manual first. This is an introductory guide that will get you started using the TRITON Extreme. It explains how to play back the demo songs, select sounds, use convenient performance functions, and perform simple editing. It also gives examples of using sampling and the sequencer. Operation Guide: This manual describes each part of the TRITON -

Portable Digital Musical Instruments 2009 — 2010

PORTABLE DIGITAL MUSICAL INSTRUMENTS 2009 — 2010. music.yamaha.com/homekeyboard. For details please contact: This document is printed with soy ink. Printed in Japan. How far do you want to go with your music? (Pages 4-7) The sky's the limit. Our Digital Workstations are jam-packed with advanced features, exceptionally realistic sounds and expressive performance functions that give you the power to create, arrange and perform in any style or situation. What kind of music do you want to play? (Pages 8-11) If the piano is your thing, Yamaha has a range of compact piano-oriented instruments that have amazingly realistic sound and wonderfully expressive playability–just like having a real piano in your house, with a fraction of the space. What are your creative inclinations? (Pages 12-13) We've got Digital Keyboards of all types to help players of every stripe achieve their full potential. Whether you're just starting out or are an experienced expert, our instrument lineup provides just what you need to get your creative juices flowing. Got rhythm? (Page 14) If drums and percussion are your strong forte, our Digital Percussion unit gives you exceptionally dynamic and realistic sounds, letting you pound out your own beats–in live performance, in rehearsal or in recording. Recommended models: Tyros3, PSR-OR700, NP-30, PSR-E323, EZ-200, DD-65, PSR-S910, PSR-S550B, YPG series, PSR-E223, PSR-S710, PSR-E413. Yamaha’s Premier Music Workstation – Unsurpassed Quality, Features and Performance. Ultimate Realism. Limitless Creative Potential. Interactive -

Chiptuning Intellectual Property: Digital Culture Between Creative Commons and Moral Economy

Chiptuning Intellectual Property: Digital Culture Between Creative Commons and Moral Economy. Martin J. Zeilinger, York University, Canada [email protected] Abstract: This essay considers how chipmusic, a fairly recent form of alternative electronic music, deals with the impact of contemporary intellectual property regimes on creative practices. I survey chipmusicians’ reusing of technology and content invoking the era of 8-bit video games, and highlight points of contention between critical perspectives embodied in this art form and intellectual property policy. Exploring current chipmusic dissemination strategies, I contrast the art form’s links to appropriation-based creative techniques and the ‘demoscene’ amateur hacking culture of the 1980s with the chiptune community’s currently prevailing reliance on Creative Commons licenses for regulating access. Questioning whether consideration of this alternative licensing scheme can adequately describe shared cultural norms and values that motivate chiptune practices, I conclude by offering the concept of a moral economy of appropriation-based creative techniques as a new framework for understanding digital creative practices that resist conventional intellectual property policy both in form and in content. Keywords: Chipmusic, Creative Commons, Moral Economy, Intellectual Property, Demoscene. Introduction: The chipmusic community, like many other born-digital creative communities, has a rich tradition of embracing and encouraging open access, collaboration, and sharing. It does not like to operate according to the logic of informational capital and the restrictive enclosure movements this logic engenders. The creation of chipmusic, a form of electronic music based on the repurposing of outdated sound chip technology found in video gaming devices and old home computers, centrally involves the reworking of proprietary cultural materials. -

The Effects of Background Music on Video Game Play Performance, Behavior and Experience in Extraverts and Introverts

THE EFFECTS OF BACKGROUND MUSIC ON VIDEO GAME PLAY PERFORMANCE, BEHAVIOR AND EXPERIENCE IN EXTRAVERTS AND INTROVERTS. A Thesis Presented to The Academic Faculty by Laura Levy, in Partial Fulfillment of the Requirements for the Degree Master of Science in Psychology in the School of Psychology, Georgia Institute of Technology, December 2015. Copyright © Laura Levy 2015. Approved by: Dr. Richard Catrambone, Advisor, School of Psychology, Georgia Institute of Technology; Dr. Bruce Walker, School of Psychology, Georgia Institute of Technology; Dr. Maribeth Coleman, Institute for People and Technology, Georgia Institute of Technology. Date Approved: 17 July 2015. ACKNOWLEDGEMENTS: I wish to thank the researchers and students that made Food for Thought possible as the wonderful research tool it is today. Special thanks to Rob Solomon, whose efforts to make the game function specifically for this project made it a success. Additionally, many thanks to Rob Skipworth, whose audio engineering expertise made the soundtrack of this study sound beautiful. I express appreciation to the Interactive Media Technology Center (IMTC) for the support of this research, and to my committee for their guidance in making it possible. Finally, I wish to express gratitude to my family for their constant support and quiet bemusement for my seemingly never-ending tenure in graduate school. TABLE OF CONTENTS: ACKNOWLEDGEMENTS, LIST OF TABLES, LIST OF -

Videogame Music: Chiptunes Byte Back?

Videogame Music: chiptunes byte back? Grethe Mitchell, University of East London and Institute of Education, University of East London, Docklands Campus, 4-6 University Way, London E16 2RD [email protected] Andrew Clarke, Unaffiliated Researcher, 78 West Kensington Court, Edith Villas, London W14 9AB [email protected] ABSTRACT: This paper will explore the sonic subcultures of videogame art and videogame-related fan art. It will look at the work of videogame musicians – not those producing the music for commercial games – but artists and hobbyists who produce music by hacking and reprogramming videogame hardware, or by sampling in-game sound effects and music for use in their own compositions. It will discuss the motivations and methodologies behind some of this work. It will explore the tools that are used and the communities that have grown up around these tools. It will also examine differences between the videogame music community and those which exist around other videogame-related practices such as modding or machinima. Musicians and sonic artists who use videogames as their medium or raw material have, however, received comparatively little interest. This mirrors the situation in art as a whole where sonic artists are similarly neglected and the emphasis is likewise on the visual art/artists.1 It was curious to us that most (if not all) of the writing about videogame art had ignored videogame music - especially given the overlap between the two communities of artists and the parallels between them. For example, two of the major videogame artists – Tobias Bernstrup and Cory Archangel – have both produced music in addition to their gallery-oriented work, but this area of their activity has -

An Arduino-Based MIDI Controller for Detecting Minimal Movements in Severely Disabled Children

IT 16054. Degree project, 15 credits, June 2016. An Arduino-based MIDI Controller for Detecting Minimal Movements in Severely Disabled Children. Mattias Linder. Department of Information Technology. Faculty of Science and Technology, UTH unit. Visiting address: Ångströmlaboratoriet, Lägerhyddsvägen 1, Hus 4, Plan 0. Postal address: Box 536, 751 21 Uppsala. Telephone: 018 – 471 30 03. Fax: 018 – 471 30 00. Website: http://www.teknat.uu.se/student Abstract: In therapy, music has played an important role for children with physical and cognitive impairments. Due to the nature of different impairments, many traditional instruments can be very hard to play. This thesis describes the development of a product in which electrical sensors can be used as a way of creating sound. These sensors can be used to create specially crafted controllers and thus make it possible for children with different impairments to create music or sound. This thesis examines if it is possible to create such a device with the help of an Arduino micro controller, a smart phone and a computer. The end result is a product that can use several sensors simultaneously to either generate notes, change the volume of a note or control the pitch of a note. There are three inputs for specially crafted sensors and three static potentiometers which can also be used as specially crafted sensors. The sensor inputs for the device are mini tele (2.5mm) and any sensor can be used as long as it can be equipped with this connector. The product is used together with a smartphone application to upload different settings and a computer with a music work station which interprets the device as a MIDI synthesizer. -

Westminsterresearch Synth Sonics As

WestminsterResearch http://www.westminster.ac.uk/westminsterresearch Synth Sonics as Stylistic Signifiers in Sample-Based Hip-Hop: Synthetic Aesthetics from ‘Old-Skool’ to Trap. Exarchos, M. This is an electronic version of a paper presented at the 2nd Annual Synthposium, Melbourne, Australia, 14 November 2016. The WestminsterResearch online digital archive at the University of Westminster aims to make the research output of the University available to a wider audience. Copyright and Moral Rights remain with the authors and/or copyright owners. Whilst further distribution of specific materials from within this archive is forbidden, you may freely distribute the URL of WestminsterResearch: (http://westminsterresearch.wmin.ac.uk/). In case of abuse or copyright appearing without permission e-mail [email protected] 2nd Annual Synthposium. Synthesisers: Meaning though Sonics. Synth Sonics as Stylistic Signifiers in Sample-Based Hip-Hop: Synthetic Aesthetics from ‘Old-School’ to Trap. Michail Exarchos (a.k.a. Stereo Mike), London College of Music, University of West London. Intro-thesis: The literature on synthesisers ranges from textbooks on usage and historiography1 to scholarly analysis of their technological development under musicological and sociotechnical perspectives2. Most of these approaches, in one form or another, acknowledge the impact of synthesisers on musical culture, either by celebrating their role in powering avant-garde eras of sonic experimentation and composition, or by mapping the relationship between manufacturing trends and stylistic divergences in popular music. The availability of affordable, portable and approachable synthesiser designs has been highlighted as a catalyst for their crossover from academic to popular spheres, while a number of authors have dealt with the transition from analogue to digital technologies and their effect on the stylisation of performance and production approaches3. -

Studio Music Production I Subject Area/Course Number: MUSIC-093

Course Outline of Record. Los Medanos College, 2700 East Leland Road, Pittsburg CA 94565, (925) 439-2181. Course Title: Studio Music Production I. Subject Area/Course Number: MUSIC-093. New Course OR Existing Course. Instructor(s)/Author(s): C. Chuah. Units: 2.0. Discipline(s): Music/Commercial Music. Pre-Requisite(s): None. Co-Requisite(s): None. Advisories: Prior or concurrent enrollment in Music-15. Catalog Description: This course is for students wanting to produce music using professional music studio equipment. With this lecture/demonstration and hands-on class, students will be able to build a music studio and learn the basic operation of electronic musical equipment. The pieces of electronic musical equipment include MIDI synthesizers, music workstations, computer workstations, groove boxes, drum machines, soft-synthesizers, sequencers, and new products as the industry advances. This is an introductory course and it is intended to build a strong foundation in understanding studio music operation, whether the student is interested in composition, making beats and/or being a producer. Schedule Description: Do you want to learn how to produce music using professional music studio equipment? With this lecture/demonstration and hands-on class, you will be able to build a music studio and learn the basic operation of electronic musical equipment. This is an introductory course and it is intended to build a strong foundation in understanding studio music operation, whether -

TR Operation Guide

Operation Guide. To ensure long, trouble-free operation, please read this manual carefully. Precautions. Location: Using the unit in the following locations can result in a malfunction. • In direct sunlight • Locations of extreme temperature or humidity • Excessively dusty or dirty locations • Locations of excessive vibration. Power supply: Please connect the designated AC/AC power supply to an AC outlet of the correct voltage. Do not connect it to an AC outlet of voltage other than that for which your unit is intended. THE FCC REGULATION WARNING (for U.S.A.): This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures: • Reorient or relocate the receiving antenna. • Increase the separation between the equipment and receiver. • Connect the equipment into an outlet on a circuit different from that to which the receiver is connected. • Consult the dealer or an experienced radio/TV technician for -

Let's Get Started…

Welcome to the OASYS Experience! This tour guide is your first stop on an amazing journey of discovery. Our goal here is to get you comfortable working with the user interface and control surface, and to give you a “sneak peek” at some of the many incredibly-musical things that you can do with OASYS! After you’ve finished this tour, you can learn more about this great instrument by working with the OASYS Operation and Parameter Guides. And you’ll find new OASYS tutorials, tips and tricks, and support materials by visiting www.korg.com/oasys and www.karma-labs.com/oasys on a regular basis! Let’s get started… Start by loading the factory data and listening to the demo songs: The factory demos allow you to experience OASYS in all of its glory - as a full production studio! In addition to hearing MIDI tracks which show off OASYS’ superb synth engines, several of the demo songs include HD audio tracks and sample data. 1. Press the DISK button > Select the FACTORY folder on the internal hard drive, and then press Open > Select the file PRELOAD.PCG, and then press Load > Check the boxes next to PRELOAD.SNG and PRELOAD.KSC, and then press OK. This will load the factory sounds, demo songs and samples. 2. Press the SEQ button, and then press the SEQ START/STOP button to play the first demo song, “Sinfonia Russe” > Press the SEQ START/STOP button again when finished listening to this song, and then press the pop-up arrow left of the song name, select and playback the other demo songs. -

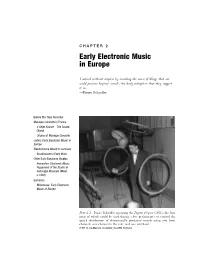

Holmes Electronic and Experimental Music

Chapter 2: Early Electronic Music in Europe. “I noticed without surprise by recording the noise of things that one could perceive beyond sounds, the daily metaphors that they suggest to us.” —Pierre Schaeffer. Contents: Before the Tape Recorder; Musique Concrète in France; L’Objet Sonore—The Sound Object; Origins of Musique Concrète; Listen: Early Electronic Music in Europe; Elektronische Musik in Germany; Stockhausen’s Early Work; Other Early European Studios; Innovation: Electronic Music Equipment of the Studio di Fonologia Musicale (Milan, c.1960); Summary; Milestones: Early Electronic Music of Europe. Plate 2.1: Pierre Schaeffer operating the Pupitre d’espace (1951), the four rings of which could be used during a live performance to control the spatial distribution of electronically produced sounds using two front channels, one channel in the rear, and one overhead. (1951 © Ina/Maurice Lecardent, Ina GRM Archives) EARLY HISTORY – PREDECESSORS AND PIONEERS. A convergence of new technologies and a general cultural backlash against Old World arts and values made conditions favorable for the rise of electronic music in the years following World War II. Musical ideas that met with punishing repression and indifference prior to the war became less odious to a new generation of listeners who embraced futuristic advances of the atomic age. Prior to World War II, electronic music was anchored down by a reliance on live performance. Only a few composers—Varèse and Cage among them—anticipated the importance of the recording medium to the growth of electronic music. This chapter traces a technological transition from the turntable to the magnetic tape recorder as well as the transformation of electronic music from a medium of live performance to that of recorded media. -

History of Electronic Sound Modification

PAPERS. History of Electronic Sound Modification. HARALD BODE, Bode Sound Co., North Tonawanda, NY 14120, USA. 0 INTRODUCTION: The history of electronic sound modification is as old as the history of electronic musical instruments and electronic sound transmission, recording, and reproduction. Means for modifying electrically generated sound have been known since the late 19th century, when Thaddeus Cahill created his Telharmonium. With the advent of the electronic age, spurred first by the invention of the electron tube, and the more recent development of solid-state devices, an astounding variety of sound modifiers have been created for filtering, distorting, equalizing, amplitude and frequency modulating, Doppler effect and ring modulating, compressing, reverberating, repeating, flanging, phasing, pitch changing, chorusing, frequency shifting, analyzing, and resynthesizing natural and artificial sound. In this paper some highlights of historical development are reviewed, covering the time from 1896 to the present. 2 THE ELECTRONIC ERA: After the Telharmonium, and especially after the invention of the vacuum tube, scores of electronic (and electronic mechanical) musical instruments were invented with sound modification features. The Hammond organ is of special interest, since it evolved from Cahill's work. Many notable inventions in electronic sound modification are associated with this instrument, which will be discussed later. Other instruments of the early 1930s included the Trautonium by the German F. Trautwein, which was built in several versions. The Trautonium used resonance filters to emphasize selective overtone regions, called formants [11]-[14]. In contrast, the German Jorg Mager built an organlike instrument for which he used loudspeakers with all types of driver systems and shapes to obtain different sounds. In 1937 the author created the Warbo Formant organ, which had circuitry for envelope shaping as well as