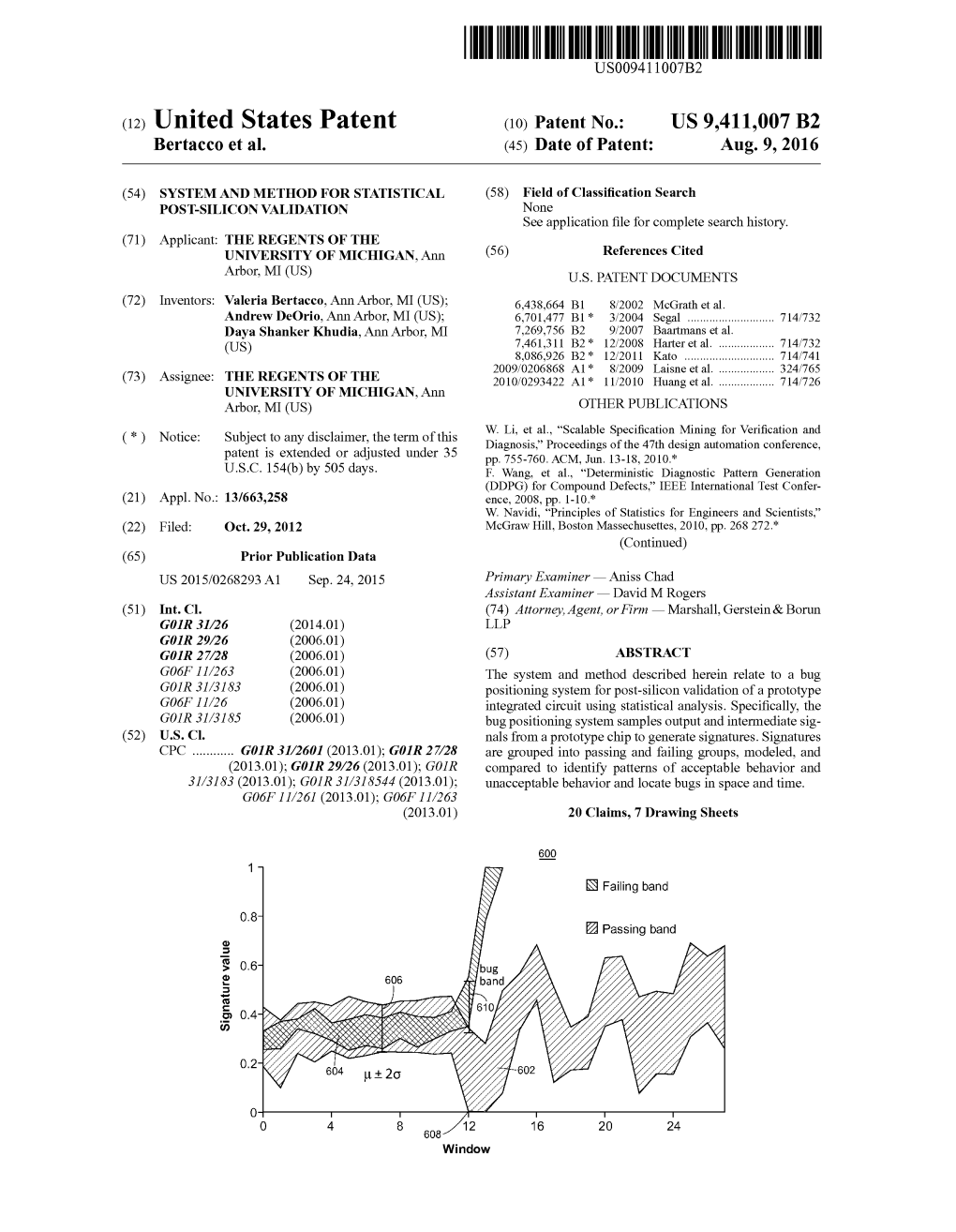

O.O. 68 Passing Band

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Mongolia MODERATE ADVANCEMENT

Mongolia MODERATE ADVANCEMENT In 2013, Mongolia made a moderate advancement in efforts to eliminate the worst forms of child labor. In July, the Mongolia National Statistics Office officially released the Mongolia National Child Labor Survey Report. The Government established an Anti-Trafficking Sub-Council within the Ministry of Justice (MOJ), and Mongolia’s National Human Rights Commission (NHRC) conducted trainings on trafficking and forced labor for lawyers, judges, and law enforcement officers. The Government also established a coordinating council and allocated a budget for the National Plan of Action for Ending the Worst Forms of Child Labor. However, children in Mongolia continue to engage in child labor in animal husbandry and herding. Enforcement mechanisms for reducing child labor are minimal, and gaps persist in the legal framework and operating procedures for prosecuting criminal offenders, specifically regarding commercial sexual exploitation. I. PREVALENCE AND SECTORAL DISTRIBUTION OF CHILD LABOR Children in Mongolia are engaged in child labor in animal husbandry and herding.(1-6) In July 2013, the Mongolia National Statistics Office officially released the Mongolia National Child Labor Survey Report.(4) The report indicates that 11 percent of working children were engaged in hazardous work with boys comprising 8 out of 10 children. (4) The majority of child labor in Mongolia takes place in the informal sector in which there is little oversight and enforcement of labor laws.(7) Table 1 provides key indicators on children’s work and education in Mongolia. Table 1. Statistics on Children’s Work and Education Figure 1. Working Children by Sector, Ages 5-14 Children Age Percent Industry Services 2.5% Working (% and population) 5-14 yrs. -

October 2014

Issue 2- October 2014 Homecoming 2014:We’re on Top of the World by Caroline Zhou, Senior Class President This year’s homecoming dance fell on an earlier date than usual which made it extra stressful to organize it efficiently and successfully. That also means that there was less time for people to get crafty to ask a date to the dance; however, that didn’t stop homecoming from having a Artist of the Month ... page 2 great turnout. Fun Facts about Ebola The song chosen for the theme of the dance was “Top of the World” by Imagine Dragons as it perfectly represents JMM spirit. It was also used ... page 3 for the senior video, which incorporated actual video as opposed to the Prep Profiles ... page 4 usual slideshow, making it extra impressive. (Special shoutout to Renee Sports ... page 5 Kar-Johnson for making the video and to Brian Luo for providing a voice over). The pep rally Horoscopes ... page 6 was also a huge hit. The The Nerd Word, Part 2 MCs were Nasitta Keita, Drake Singleton, Haley Prudent, Payton Beverly, and Claire Franken. There was nonstop laughing during the lip-sync ...page 7 contest where there was even a special appearance from Mr. Schlitz and Admissions to College Mr. Voss during “Ice Ice Baby”. The seniors impressively swept all of the ...page 8 competitions except for the tug-of-war competition (won by the juniors). A money booth event replaced homecoming court, which wasn’t missed by many. The “Guy-Girl” dance performed by the Poms team was based off of Grease, and was one to remember! Hilarious and fun, all of the Sandys and Dannys captured the attention of their audience. -

Deloitte Innovation Summit 2018

Deloitte Innovation Summit 2018 Innovation and Italian Excellences – Officine Food, Boating, Manufacturing Innovazione Brochure / report title goes here | Section title goes here Innovation as growth engine for the country’s economic development: where to start and how to create value? The value of our national Assets is impressive and the potential of investing in innovation is so high that our Entrepreneurial Ecosystem has the opportunity to move as soon as possible, as long as a structured, practical and creative approach will be adopted. 02 Deloitte Innovation Summit 2018 | Innovation and Italian Excellences – Food, Boating, Manufacturing Innovation is confirmed to be a complex phenomenon as well as an absolute priority, as it leads to new perspectives for the economic and social growth of a 1country Given the changes that have been affecting We are not always capable of our social and economic environments, understanding the utility of new innovation is becoming such a complex technological advancements. Nouriel phenomenon that it often leaves behind Roubini has recently expressed his doubts many uncertainties we do not fully about the contribution of blockchain understand. technologies, defining them as the fraud of our century3. Furthermore, there For instance, we are not sure of where we are doubts about the validity of genetic are going: according to a recent study by manipulation, especially when it is about Dell, “Emerging technologies’ impact on humans4. society & work in 2030”, 50% of business Lately, during the “Data Protection and decision makers declares not to know Privacy Commissioners” conference in how their sector will evolve in the next 3 Brussels, Tim Cook has stated that the years and about 45% of them declared diffusion of personal data has become a to be worried of lagging behind with the weapon against the population. -

Cedars, January 21, 2000 Cedarville College

Masthead Logo Cedarville University DigitalCommons@Cedarville Cedars 1-21-2000 Cedars, January 21, 2000 Cedarville College Follow this and additional works at: https://digitalcommons.cedarville.edu/cedars Part of the Journalism Studies Commons, and the Organizational Communication Commons DigitalCommons@Cedarville provides a platform for archiving the scholarly, creative, and historical record of Cedarville University. The views, opinions, and sentiments expressed in the articles published in the university’s student newspaper, Cedars (formerly Whispering Cedars), do not necessarily indicate the endorsement or reflect the views of DigitalCommons@Cedarville, the Centennial Library, or Cedarville University and its employees. The uthora s of, and those interviewed for, the articles in this paper are solely responsible for the content of those articles. Please address questions to [email protected]. Recommended Citation Cedarville College, "Cedars, January 21, 2000" (2000). Cedars. 727. https://digitalcommons.cedarville.edu/cedars/727 This Issue is brought to you for free and open access by Footer Logo DigitalCommons@Cedarville, a service of the Centennial Library. It has been accepted for inclusion in Cedars by an authorized administrator of DigitalCommons@Cedarville. For more information, please contact [email protected]. January 21, 200 oe Stowell..................... 2 Drunk with Marty Ph.Ds......................3 .....................2 Senior Recitalists............. 4 Cedar Faces.................... 5 Well-oiled Honors Band...................7 machines Graves on Worship........... 8 ••••••••• a**** 5 Music Reviews................. 9 Flock flies north Women's Basketball........10 Men's Basketball............ 11 .................... 7 A CEDARVILLE COLLEGE STUDENT PUBLICATION Sidewalk Talk................. 12 CCM star Chris Rice performs on campus again Kristin Rosner but not the sophistication that Staff Writer often comes with the success that Rice has experienced lately. -

J I Mt Iron Forks

. .r T- :••• :'-J;. ,• •.< ' •?:• ' 7 " : ,: : : •". V - ^.V-- v";- ^ " ' ' • . ' mss-: '* -. : ' ' ': : ' » &!f, XXI y. ..vriy ! m&'Vj. I J I '}?%m WHOLE NUMBEB 1674. HORWALK, CONNECTICUT, TUESDAY, FEBRUARY 3, 1880. VOL. LXIII.--NUMBEB g. ,! Olivia. steamer,and 3 o'clock next morning she went TED. "Oh, Ted! my husband will pay you fpr "He Know'd. I'd be Thar. NORWALK GAZETTE, All the Latest Styles in REAL ESTATE. HELEN OF TYRE down. A heavy sea capsized our bale, and this!" Derrick Dood (F, K. Gass^way) in San Francisco we had hard work getting on it again. My He stopped. Post. PUBLISHED EVERY TUESDAY RI0R1IRS, OR SAIIE.—Three Building Lots on the HENRY W. LONGFLLOW. BY KLI.EN M. HTJTOHINSON. St. John Farm, at a bargain, For partic "It's very dusty," and Mrs. Laure Amber- "Aunt Laure, its no use to go. I can't companion, whose name I learned was Cox, The. following perfectly true story is hard Fulars call on or address, BEJf J. J. STXJJ1GES, What phantom is this that ley shook slightly the glossy folds of her Real Estate Broker, No. i Gazette Building, Nor appears Witli jibe and pout and dainty frown got discouraged, and' soon after daylight find the ford, it's got so dark." ly entitled to a place in this column, but as The Second Oldest roper la tlie State. FALL AND WINTER. gray traveling dress. walk, Conn. 4t4 .Through the purple mists of the yean, For this, for that, Olivia teased: slipped off the bale and went down without He waB panting. -

FALCON FLYER a Secret Identity Inside This Issue By

Issue 1 Date: 1/8/2016 FALCON FLYER A Secret Identity Inside this Issue By. Mr. Hool Pg. 2 Gravity Falls If you’re anything like me, you’ve had things in your life that Pg. 3 College Foot- you’ve loved and enjoyed, but you’ve been too shy to show it in public. ball Playoff Whether it be Pokémon cards, manga, Dora the Explorer, Star Wars, pro Pg. 4 Upcoming Su- wrestling, or a passion for comic books, we love them, but we’re afraid perhero Films of what other people will think about it. No matter how much we love Pg. 5 FNAF4 our interests, we often keep them hidden from the rest of the world. Pg. 6 The Flash Pg. 7 Wonder If you know anything about me, you know I’m a big superhero fan. Pg. 8 Pranks I always have been, for as long as I can remember. It was something Pg. 8 Guess Who? about those primary color clad do-gooders that I was immediately Pg. 9 The Walking drawn to. Heroic acts, helping others, being selfless, striving to be Dead better, these were all themes and ideas that I could get behind. Pg. 10 Home I remember sitting with my cousin, Scott, for hours, reading com- Pg. 10 Taco Bell ics, drawing superheroes, acting out their greatest battles in my back- Pg. 11 Twaimz yard. It was my world. It was my everything...and then middle school Pg. 12 Easy Oreo came along. All of the sudden, none of my friends liked comics and su- Truffles perheroes anymore. -

CHAPTER 8 Urban Gangs and Violence

CHAPTER 8 UrbanUrban GangsGangs andand ViolenceViolence Introduction distribute Urban gang problems are examined in this chapter. The main focusor here is on serious violent gangs in large cities. New research is presented that groups cities according to their experiences with serious gang violence over a 14-year period. Case studies of five cities’ gang histories are reviewed to illustrate nation- wide differences in serious violent gang problems. Both the correlates (features of gangs themselves) and the context (the local urban and regional environment) of serious gang violence are then examined. The chapter begins with research on the intensity ofpost, gang activity in urban areas. Serious violent gangs have been characterized by a diverse set of criteria (see Serious Violent Gangs in the Further Reading section at the end of this chapter). Most notably, they are typically found in large cities and distinguished by a number of structural features, the first of which is the degree of organiza- tion (e.g., they have rules, meetings, and leadership roles, a large membership—at least 15 gangs and more than 200 members but with wide variations—and a multiple-year history). Second, these gangs are involved in violent crimes thatcopy, physically harm victims, including homicide, aggravated assault, robbery, and nonlethal firearm injuries. These violent crimes frequently occur out of doors, on the streets, and in very small set spaces in neighborhoods with high violence rates. Moreover, such violent crimes likely involve multiplenot victims and suspects and have a contagious feature. Third, drug traffick- ing and firearm offenses are also very common. Fourth, these gangs tend to have both female and male members. -

Detection of Platelet Sensitivity to Inhibitors of COX-1, P2Y1, and P2Y12 Using a Whole Blood Microfluidic flow Assay

Thrombosis Research 133 (2014) 203–210 Contents lists available at ScienceDirect Thrombosis Research journal homepage: www.elsevier.com/locate/thromres Regular Article Detection of platelet sensitivity to inhibitors of COX-1, P2Y1, and P2Y12 using a whole blood microfluidic flow assay Ruizhi Li, Scott L. Diamond ⁎ Institute for Medicine and Engineering, Department of Chemical and Biomolecular Engineering, 1024 Vagelos Research Laboratories, University of Pennsylvania, Philadelphia, PA 19104, USA article info abstract Article history: Background: Microfluidic devices recreate the hemodynamic conditions of thrombosis. Received 30 July 2013 Methods: Whole blood inhibited with PPACK was treated ex vivo with inhibitors and perfused over collagen for Received in revised form 28 October 2013 300 s (wall shear rate = 200 s-1)usingamicrofluidic flow assay. Platelet accumulation was measured in the pres- Accepted 29 October 2013 ence of COX-1 inhibitor (aspirin, ASA), P2Y1 inhibitor (MRS 2179), P2Y12 inhibitor (2MeSAMP) or combined P2Y1 Available online 6 November 2013 and P2Y12 inhibitors. Results: High dose ASA (500 μM), 2MeSAMP (100 μM), MRS 2179 (10 μM), or combined 2MeSAMP and MRS 2179 Keywords: decreased total platelet accumulation by 27.5%, 75.6%, 77.7%, and 87.9% (p b 0.01), respectively. ASA reduced sec- platelet cyclooxygenase ondary aggregation rate between 150 and 300 s without effect on primary deposition rate on collagen from 60 to ADP 150 s. In contrast, 2MeSAMP and MRS 2179 acted earlier and reduced primary deposition to collagen between 60 thromboxane and 105 s and secondary aggregation between 105 and 300 s. RCOX and RP2Y (defined as a ratio of secondary aggre- hemodynamic gation rate to primary deposition rate) demonstrated 9 of 10 subjects had RCOX b 1orRP2Y b 1followingASAor 2MeSAMP addition, while 6 of 10 subjects had RP2Y b 1 following MRS 2179 addition. -

Removal Methods Questioned ESRP Expert Responds to the Handling Of

1.:;::::::::iiii Renegade Pantry I) The Renegade Rip 1-• J @the_renegade_rip Read SGA president member for mayor CJ @bc_rip candidate statements News, Page 5 www.therip.com Campus, Page 4 ene BAKERSFIELD COLLEGE Vol. 87 · No. 12 Wednesday, April 6, 2016 JOE BERGMAN / THE RIP The three light spots of dirt on the hillside in Memorial Stadium, shown in a March 17, 2016 photo, used to be kit-fox dens, and now they are go~e. _Th~ inset photo was given to The Rip by Brian Cypher of ESRP's Bakersfield program staff, and shows a kit fox with its pups in their natural habitat before work started on the h11ls1de m February of 2015. Removal ESRP expert responds to methods the handling of kit foxes By Mason J. Rockfellow propriate procedures and provided contact info for Editor in Chief agencies and individuals that could assist them." When destroying an endangered species' habitat questioned Regarding the issue of eradicating kit foxes or relocating tl1at species, tl1ere are a lot of vari from Bakersfield College's Memorial Stadium, an ables tl1at can stand in tl1e way. expert from the Environmental Species Recovery According to Cypher, the U.S. Fish and Wild By Joe Bergman Program said that ESRP was never contacted for life Service and California Department of Fish and Photo & Sports Editor advice or guidance on the matter. Wildlife states that to remove a den from an area, Brian L. Cypher, associate director and research The Renegade Rip has been investigating claims the den must be monitored to make sure that no kit ecologist, coordinates several of ESRP's research foxes are present. -

Prosecution of Rhino Poachers: the Need to Focus on Prosecution of the Higher Echelons of Organised Crime Networks

Please provide footnote text African Journal of Legal Studies 9 (2016) 221–234 brill.com/ajls Prosecution of Rhino Poachers: The Need to Focus on Prosecution of the Higher Echelons of Organised Crime Networks Robert Doya Nanima LLD Candidate and Graduate Lecturing Assistant, Faculty of Law, University of the Western Cape, Cape Town, South Africa [email protected] Abstract There has been a focus on the prosecution of persons who are arrested in the course of poaching rhinos in national parks, other than the members who form the high- er echelons of the networks. This contribution advances the argument that there is need to create a framework that leads to the prosecution of the higher echelons of the organised crime groups, who are usually beyond South Africa’s borders. To advance this argument, the author evaluates the classification system of networks, the legal regime and the prosecutorial gaps and the evaluation system of progress employed by the National Prosecuting Authority and the Department of Environmental Affairs. Recommendations for reform follow. Keywords Conviction rates – classification – higher echelons – networks – prosecution – rhino poachers 1 Introduction From 2008, there has been a significant increase in rhino horn poach- ing in South Africa to meet the demand in the Asian markets of China1 and 1 Ellis Katherine, ‘Tackling the demand for the rhino horn’ (Save the rhino) <https://www.sa vetherhino.org/rhino_info/thorny_issues/tackling_the_demand_for_rhino_horn> accessed 27 December 2016. © koninklijke brill nv, leiden, ���7 | doi �0.��63/�7087384-��3400Downloaded�� from Brill.com09/28/2021 10:00:39PM via free access 222 Nanima Vietnam.2 This poaching is attributed to Organised Crime Groups (networks) operating within South Africa, Mozambique, and East Asia.3 This article seeks to state that there is need to refocus on the prosecution of the higher echelons of the organised crime groups who are usually beyond South Africa’s borders. -

Pastoral Burnout of African American Pastors: Creating Healthy Support Systems and Balance

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Liberty University Digital Commons Liberty University School of Divinity PASTORAL BURNOUT OF AFRICAN AMERICAN PASTORS: CREATING HEALTHY SUPPORT SYSTEMS AND BALANCE A Thesis Project Submitted to The Faculty of Liberty University School of Divinity In partial fulfillment of requirements For the degree Doctor of Ministry By William E. Johnson, III Lynchburg, Virginia Fall 2018 Copyright © 2018 by William E. Johnson, III All Rights Reserved ii Liberty University School of Divinity Thesis Project Approval Sheet ___________________ Mentor Dr. Johnny Baker ___________________ Reader Dr. David W. Hirschman iii ABSTRACT PASTORAL BURNOUT OF AFRICAN-AMERICAN PASTORS: CREATING HEALTHY SUPPORT SYSTEMS AND BALANCE William E. Johnson, III Liberty University School of Divinity, 2018 Mentor: Dr. Johnny Baker Pastoral self-care is critical in minimizing the symptoms of burnout in ministry. Caught up in the daily demands of ministry, pastors are under tremendous stress as they try to serve God’s people in multiple roles in ministry. Often, pastors are called upon to be counselors, preachers, project managers, students, social activists, and a moral compass for those they lead. Too often, pastors are expected to perform duties with superhuman perfection, life and ministry can get so crowded with obligations and emergencies that pastor’s maintenance of a healthy lifestyle can become unbalanced or cease to exist. This research study will complement the literature on pastor burnout, physical and spiritual exhaustion, and ministry resiliency. Research information will be a collection of qualitative and quantitative methods. African American pastors of various ages will be interviewed and surveyed about ministry support systems and balances. -

"Don't Be Such a Girl, I'm Only Joking!" Post-Alternative Comedy, British Panel Shows, and Masculine Spaces" (2017)

University of Wisconsin Milwaukee UWM Digital Commons Theses and Dissertations August 2017 "Don't Be Such a Girl, I'm Only Joking!" Post- alternative Comedy, British Panel Shows, and Masculine Spaces Lindsay Anne Weber University of Wisconsin-Milwaukee Follow this and additional works at: https://dc.uwm.edu/etd Part of the Communication Commons, Film and Media Studies Commons, and the Gender and Sexuality Commons Recommended Citation Weber, Lindsay Anne, ""Don't Be Such a Girl, I'm Only Joking!" Post-alternative Comedy, British Panel Shows, and Masculine Spaces" (2017). Theses and Dissertations. 1721. https://dc.uwm.edu/etd/1721 This Thesis is brought to you for free and open access by UWM Digital Commons. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of UWM Digital Commons. For more information, please contact [email protected]. “DON’T BE SUCH A GIRL, I’M ONLY JOKING!” POST-ALTERNATIVE COMEDY, BRITISH PANEL SHOWS, AND MASCULINE SPACES by Lindsay Weber A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts in Media Studies at The University of Wisconsin-Milwaukee August 2017 ABSTRACT “DON’T BE SUCH A GIRL, I’M ONLY JOKING!” POST-ALTERNATIVE COMEDY, BRITISH PANEL SHOWS, AND MASCULINE SPACES by Lindsay Weber The University of Wisconsin-Milwaukee, 2017 Under the Supervision of Professor Elana Levine This thesis discusses the gender disparity in British panel shows. In February 2014 the BBC’s Director of Television enforced a quota stating panel shows needed to include one woman per episode. This quota did not fully address the ways in which the gender imbalance was created.