SWAIE 2014 the Third Workshop on Semantic Web and Information

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

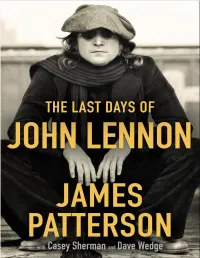

The Last Days of John Lennon

Copyright © 2020 by James Patterson Hachette Book Group supports the right to free expression and the value of copyright. The purpose of copyright is to encourage writers and artists to produce creative works that enrich our culture. The scanning, uploading, and distribution of this book without permission is a theft of the author’s intellectual property. If you would like permission to use material from the book (other than for review purposes), please contact [email protected]. Thank you for your support of the author’s rights. Little, Brown and Company Hachette Book Group 1290 Avenue of the Americas, New York, NY 10104 littlebrown.com twitter.com/littlebrown facebook.com/littlebrownandcompany First ebook edition: December 2020 Little, Brown and Company is a division of Hachette Book Group, Inc. The Little, Brown name and logo are trademarks of Hachette Book Group, Inc. The publisher is not responsible for websites (or their content) that are not owned by the publisher. The Hachette Speakers Bureau provides a wide range of authors for speaking events. To find out more, go to hachettespeakersbureau.com or call (866) 376-6591. ISBN 978-0-316-42907-8 Library of Congress Control Number: 2020945289 E3-111020-DA-ORI Table of Contents Cover Title Page Copyright Dedication Prologue Chapter 1 Chapter 2 Chapter 3 Chapter 4 Chapter 5 — Chapter 6 Chapter 7 Chapter 8 Chapter 9 Chapter 10 Chapter 11 Chapter 12 Chapter 13 Chapter 14 Chapter 15 Chapter 16 Chapter 17 Chapter 18 — Chapter 19 Chapter 20 Chapter 21 Chapter 22 Chapter 23 Chapter 24 -

Alternative Rock

Alternative Rock 485. Translated from the English by Jean-Pierre Pugi. In the bowels of an ocean liner converted into a luxury hotel, two men mourn the death of a friend while listening to the Beatles' twelfth album… which never existed. As his plane comes in to land, Buddy Holly does not know that he is about to play the greatest concert of his life. Elvis the Red will be remembered as one of the greatest rock singers and one of the most charismatic trade unionists. Back from the dead, Jimi Hendrix treats himself to one last spree with one of his roadies. It is hard to find a job when your name is John Lennon and you left a band that later enjoyed a certain success: the Beatles. Stephen Baxter, Gardner Dozois, Jack Dann, Michael Swanwick, Walter Jon Williams, Michael Moorcock and Ian R. MacLeod offer us five stories in which rock'n'roll is king, five pieces featuring icons of twentieth-century music, five alternatives to our sad reality. A45831, category F7, ISBN 978-2-07-045831-8. folio-lesite.fr/foliosf. Illustration by Sam Van Olffen. FOLIO SCIENCE-FICTION. Stephen Baxter, Gardner Dozois, Jack Dann, Michael Swanwick, Walter Jon Williams, Michael Moorcock, Ian R. MacLeod. Alternative Rock. Translated from the English by Jean-Pierre Pugi. Gallimard. The stories « En tournée », « Elvis le rouge » and « Un chanteur mort » were previously published by Éditions Denoël.

Lita Ford and Doro Interviewed Inside Explores the Brightest Void and the Shadow Self

COMES WITH 78 FREE SONGS AND BONUS INTERVIEWS! Issue 75 £5.99 SUMMER Jul-Sep 2016 9 771754 958015 75> EXPLORES THE BRIGHTEST VOID AND THE SHADOW SELF LITA FORD AND DORO INTERVIEWED INSIDE Plus: Blues Pills, Scorpion Child, Witness PAUL GILBERT F DARE F FROST* F JOE LYNN TURNER THE MUSIC IS OUT THERE... FIREWORKS MAGAZINE PRESENTS 78 FREE SONGS WITH ISSUE #75! GROUP ONE: MELODIC HARD 22. Maessorr Structorr - Lonely Mariner 42. Axon-Neuron - Erasure 61. Zark - Lord Rat ROCK/AOR From the album: Rise At Fall From the album: Metamorphosis From the album: Tales of the Expected www.maessorrstructorr.com www.axonneuron.com www.facebook.com/zarkbanduk 1. Lotta Lené - Souls From the single: Souls 23. 21st Century Fugitives - Losing Time 43. Dimh Project - Wolves In The 62. Dejanira - Birth of the www.lottalene.com From the album: Losing Time Streets Unconquerable Sun www.facebook. From the album: Victim & Maker From the album: Behind The Scenes 2. Tarja - No Bitter End com/21stCenturyFugitives www.facebook.com/dimhproject www.dejanira.org From the album: The Brightest Void www.tarjaturunen.com 24. Darkness Light - Long Ago 44. Mercutio - Shed Your Skin 63. Sfyrokalymnon - Son of Sin From the album: Living With The Danger From the album: Back To Nowhere From the album: The Sign Of Concrete 3. Grandhour - All In Or Nothing http://darknesslight.de Mercutio.me Creation From the album: Bombs & Bullets www.sfyrokalymnon.com www.grandhourband.com GROUP TWO: 70s RETRO ROCK/ 45. Medusa - Queima PSYCHEDELIC/BLUES/SOUTHERN From the album: Monstrologia (Lado A) 64. Chaosmic - Forever Feast 4. -

Vector 229 Butler 2003-05 BSFA

Vector 229, May/June 2003, £2.50. The Critical Journal of the BSFA.

Contents: Editorial - The View From the Empty Bottle by Andrew Butler; Letters to Vector; Freedom in an Owned World - Stephen Baxter on Warhammer fiction and the Interzone Generation; First Impressions - Book Reviews edited by Paul Billinger.

COVER: The cover of Comeback Tour by Jack Yeovil (aka Kim Newman). A Games Workshop book, published by Boxtree Ltd in 1993. Art by Cary Walton 1993. The first time Vector has had Elvis on the cover, I think.

BSFA Officials • Chair(s): Paul and Elizabeth Billinger - 1 Long Row Close, Everedon, Daventry NN11 3BE Email: [email protected] • Membership Secretary: Estelle Roberts - 97 Sharp Street, Newland Avenue, Hull, HU5 2AE Email: [email protected] • Treasurer: Martin Potts - 61 Ivy Croft Road, Warton, Nr Tamworth B79 0JJ Email: [email protected] • Publications Manager: Kathy Taylor - Email: [email protected] • Orbiters: Carol Ann Kerry-Green - 278 Victoria Avenue, Hull, HU5 3DZ Email: [email protected] • Awards: Tanya Brown - Flat 8, Century House, Armoury Road, London, SE8 4LH Email: [email protected] • Publicity/Promotions: Email: [email protected] • London Meeting Coordinator: Paul Hood - 112 Meadowside, Eltham, London SE9 6BB Email: [email protected] • Webmistress: Tanya Brown - Flat 8, Century House, Armoury Road, London, SE8 4LH Email: [email protected]

BSFA Membership: UK Residents: £21 or £14 (unwaged) per year. Please enquire, or see the BSFA web page for overseas rates. Renewals and New Members - Estelle Roberts - 97 Sharp Street, Newland Avenue, Hull, HU5 2AE Email: [email protected]

Editorial Team • Production and General Editing: Tony Cullen - 16 Weaver's Way, Camden, London NW1 0XE Email: [email protected] • Features, Editorial and Letters: Andrew M.

BWTB George Martin Show

1 PLAYLIST MARCH 13th 2016 2 Celebrating the production genius of George Martin 9AM The Beatles - Besame Mucho /June 6th 1962 (1st session with GM) 3 The Beatles - Love Me Do – Please Please Me (McCartney-Lennon) Lead vocal: John and Paul The Beatles’ first single release for EMI’s Parlophone label. Released October 5, 1962, it reached #17 on the British charts. Principally written by Paul McCartney in 1958 and 1959. Recorded with three different drummers: Pete Best (June 6, 1962, EMI), Ringo Starr (September 4, 1962), and Andy White (September 11, 1962 with Ringo playing tambourine). The 45 rpm single lists the songwriters as Lennon-McCartney. One of several Beatles songs Paul McCartney owns with Yoko Ono. Starting with the songs recorded for their debut album on February 11, 1963, Lennon and McCartney’s output was attached to their Northern Songs publishing company. Because their first single was released before John and Paul had contracted with a music publisher, EMI assigned it to their own, a company called Ardmore and Beechwood, which took the two songs “Love Me Do” and “P.S. I Love You.” Decades later McCartney and Ono were able to purchase the songs for their respective companies, MPL Communications and Lenono Music. Fun fact: John Lennon shoplifted the harmonica he played on the song from a shop in Holland. On U.S. albums: Introducing… The Beatles (Version 1) - Vee-Jay LP The Early Beatles - Capitol LP The Beatles – How Do You Do it - Recorded Sept 4th 1962 (2nd session with GM) BREAK 4 The Beatles - Please Please Me – Please Please Me (McCartney-Lennon) Lead vocal: John and Paul The Beatles’ second single release for EMI’s Parlophone label. -

Catalogue 110 to Place Your Order, Call, Write, E-Mail Or Fax

james cummins bookseller catalogue 110 To place your order, call, write, e-mail or fax: james cummins bookseller 699 Madison Avenue, New York City, 10065 Telephone (212) 688-6441 Fax (212) 688-6192 e-mail: [email protected] www.jamescumminsbookseller.com hours: Monday – Friday 10:00 – 6:00, Saturday 10:00 – 5:00 Members A.B.A.A., I.L.A.B. front cover: item 52 inside front cover: item 92 inside rear cover: item 98 rear cover: item 110 terms of payment: All items, as usual, are guaranteed as described and are returnable within 10 days for any reason. All books are shipped UPS (please provide a street address) unless otherwise requested. Overseas orders should specify a shipping preference. All postage is extra. New clients are requested to send remittance with orders. Libraries may apply for deferred billing. All New York and New Jersey residents must add the appropriate sales tax. We accept American Express, Master Card, and Visa. 1 (ABCEDARY LETTERPRESS) Carol, Mark Philip, and Alan James Robinson [illustrator]. Banging Rocks: A Dissertation on the Origin of a Species of Rock That Descended With Modification From the Ancient Piroboli, Complete With Elaborate Descriptions of Its Social and Sexual Habits, Information Regarding Its Behaviors and Activities, As Well As Details Pertaining to Its Care and Feeding. Small 8vo, [Easthampton, MA: ABCedary Letterpress], 1990. First edition, deluxe issue. Copy “ii” of twenty-five copies numbered in roman, signed by the author and illustrator. Quarter morocco and bird boards, morocco fore-edges. Fine, in publisher’s custom-made wooden box with flaps concealing insets containing two rocks. -

The Scottish House of Roger, with Notes Respecting the Families Of

THE Scottish House of Roger WITH NOTES RESPECTING THE FAMILIES OF PLAYFAIR AND HALDANE OF BERMONY REV. CHARLES ROGERS, LL.D., HISTORIOGRAPHER TO THE ROYAL HISTORICAL SOCIETY, FELLOW OF THE SOCIETY OF ANTIQUARIES OF SCOTLAND, AND CORRESPONDING MEMBER OF THE HISTORICAL AND GENEALOGICAL SOCIETY OF NEW ENGLAND SECOND EDITION EDINBURGH PRINTED FOR PRIVATE CIRCULATION 1875 THE SCOTTISH HOUSE OF ROGER, ETC. Several centuries before the introduction of surnames, and its adoption as a family designation, the name of Roger was common over Europe. Derived from the Latin words rudis a rod, and gero I bear, the mediaeval designation was Rudiger, signifying seneschal or chamberlain. About the ninth century the name was abbreviated Roger; a form in which both as a name and surname it has been used in all European countries.* Rolf, Rollo, or Rou, a Danish sea-king, founded the Norman dynasty at Rouen, to which place he gave name, and where he reigned sixteen years (A.D. 927 to 943). He was progenitor of William the Conqueror, and of that illustrious race who have since the Conquest borne the English sceptre. During the century following the reign of Rolf at Rouen we find Roger de Toesny, who claimed descent from Malahulc, uncle of Rolf, fighting valiantly against the infidels in Spain, and achieving great victories, not without cruelty and violence. Roger married the daughter of the widowed Countess of Barcelona, "a princess," remarks Mr Freeman, "whose dominions were practically Spanish, though her formal allegiance was due to the Parisian king.

7 ARTS. ARCHITECTURE. PHOTOGRAPHY. MUSIC. SPORT 78 MUSIC 78 GLOCKE GLOCKENGELAEUTE 78 Glockegeläute der Kirche Rüschlikon

POINT Software Varaždin. 7 ARTS. ARCHITECTURE. PHOTOGRAPHY. MUSIC. SPORT. 78 MUSIC. 78 GLOCKE GLOCKENGELAEUTE 78 Glockegeläute der Kirche Rüschlikon. - [s.l.] : His Master's Voice, [s.a.]. - 1 MP : mono 78 ; 30 cm. Bakelite gramophone record. 78 TEST p1 TEST record 78 Test ploča za tehničko proveravanje profesionalnih i kućnih HI-FI uređaja (Test record for the technical checking of professional and home hi-fi equipment). - Beograd : RTB, [s.a.]. - 1 LP 33.3 ; 30 cm. TEST gramophone record. The test record is intended primarily for testing and adjusting studio and home hi-fi turntables. That does not exhaust its uses, however. The record was made to current international standards (RIAA, IEC, DIN...). Besides testing the operation of preamplifiers and power amplifiers, it can be used to check that recording works correctly on both cassette decks and open-reel tape recorders. Both channels of the system (including the loudspeakers) must be in phase to obtain true stereo sound, and one of the signals recorded on this record allows the phase to be checked. With a good turntable, then, certain parts of the record can stand in for a tone generator in various equipment tests. Besides checking the adjustment of the tonearm and cartridge, the record can be used to determine when the stylus needs replacing because it is damaged or worn. The record was made by cutting the signal directly from the mixing console. This was done in the autumn of 1981 in Studio X of Radio Beograd, which was connected directly to the PGP RTB cutting room for the occasion. The following equipment was used: NEUMANN SM 69 microphone (stereo), MCI JH 542 mixing console, and B & K 2002 tone generator with B & K 4712 frequency response analyser.

Download Without the Beatles Here

A possible history of pop ... DAVID JOHNSTON To musicians and music-lovers, everywhere, and forever. A possible history of pop… DAVID JOHNSTON Copyright © 2020 David Johnston. All rights reserved. First published in 2020. ISBN 978-1-64999-623-7 This format is available for free, single-use digital transmission. Multiple copy prints, or publication for profit not authorised. Interested publishers can contact the author: [email protected] Book and cover design, layout, typesetting and editing by the author. Body type set in Adobe Garamond 11/15; headers Futura Extra Bold, Bold and Book Cover inspired by the covers of the Beatles’ first and last recorded LPs, Please Please Me and Abbey Road. CONTENTS PROLOGUE 1 I BEFORE THE BEATLES 1 GALAXIES OF STARS 5 2 POP BEGINNINGS 7 3 THE BIRTH OF ROCK’N’ROLL 9 4 NOT ONLY ROCK’N’ROLL 13 5 THE DEATH OF ROCK’N’ROLL AND THE RISE OF THE TEENAGE IDOLS 18 6 SKIFFLE – AND THE BRITISH TEENAGE IDOLS 20 7 SOME MARK TIME, OTHERS MAKE THEIR MARK 26 II WITHOUT THE BEATLES A hypothetical 8 SCOUSER STARTERS 37 9 SCOUSER STAYERS 48 10 MANCUNIANS AND BRUMMIES 64 11 THAMESBEAT 76 12 BACK IN THE USA 86 13 BLACK IN THE USA 103 14 FOLK ON THE MOVE 113 15 BLUES FROM THE DEEP SOUTH OF ENGLAND (& OTHER PARTS) 130 III AFTER THE BEATLES 16 FROM POP STARS TO ROCK GODS: A NEW REALITY 159 17 DERIVATIVES AND ALTERNATIVES 163 18 EX-BEATLES 190 19 NEXT BEATLES 195 20 THE NEVER-ENDING END 201 APPENDIX 210 SOURCES 212 SONGS AND OTHER MUSIC 216 INDEX 221 “We were just a band who made it very, very big. -

PRESS PACK (Spring 2018) CONTENTS of PACK

PULSE RECORDS LTD in association with BILL ELMS presents MISSING FOR 60 YEARS... NOW WORTH MILLIONS TO WHOEVER FINDS IT! A new comedy stage play by ROB FENNAH. Directed by MARK HELLER. Based on the novel ‘Julia’s Banjo’ by Rob Fennah & Helen A Jones. PRESS PACK (Spring 2018)

CONTENTS OF PACK: Synopsis of Lennon’s Banjo; The Holy Grail of pop memorabilia (will it ever be found)?; Biographies and characters; Pete Best Q&A; Julia Lennon Q&A; Eric Potts Q&A; Mark Moraghan Q&A; John Lennon timeline; Beatle memorabilia at auction; Beatles photographs; Pete Best photograph

PULSE RECORDS LTD | LENNON’S BANJO PRESS PACK

Synopsis of Lennon’s Banjo

When Beatles tour guide and Fab Four nerd Barry Seddon finds a letter written by John Lennon, he unearths a clue to solving the greatest mystery in pop history – the whereabouts of Lennon’s first musical instrument, which has been missing for 60 years. But Barry’s loose tongue alerts tourist and Texan dealer, Travis Lawson, to the priceless relic. In an attempt to get his hands on the letter, and the clues within it, Travis persuades his beautiful wife Cheryl to befriend the hapless tour guide and win his affections. The race to find the holy grail of pop memorabilia is on! So where do the facts end, and the fiction begin? Everything will be revealed in this fast-paced comic caper.

The Holy Grail of pop memorabilia: will it ever be found? by Rob Fennah

It is always difficult finding something new to say about the Fab Four; after all, hasn’t everything already been written? Well no, not quite...

January 1986

VOL. 10, NO. 1 FEATURES THE STATE OF THE ART MD Hall Of Fame members Buddy Rich, Neil Peart, Steve Gadd, and Louie Bellson, as well as Readers Poll winners Larrie Londin, Danny Gottlieb, Omar Hakim, Sly Dunbar, Alan Dawson, and David Garibaldi discuss past, present, and future trends in drumming. 13 Photo by Veryl Oakland EQUIPMENT IN MD: AN HISTORICAL OVERVIEW A survey of drum equipment over the past ten years, taken from the pages Photo by Dimo Safari of MD. 34 MD SOUND SUPPLEMENT: STUDIO DRUM SOUNDS To find out how today's drum sounds are being created in the studio, we took drummer Andy Newmark and electronics expert Jimmy Bralower into the Power Station, and we've put the results onto our first Soundsheet. 50 Photo by Ebet Roberts Photo by Deborah Stuer COLUMNS EQUIPMENT INDUSTRY HAPPENINGS 126 PRODUCT CLOSE-UP MD's Drum Product Consumers Poll DEPARTMENTS by Bob Saydlowski, Jr. 62 EDUCATION EDITOR'S OVERVIEW 2 JUST DRUMS 128 STRICTLY TECHNIQUE READER'S PLATFORM 4 South Indian Rhythmic System ASK A PRO 6 by Jamey Haddad 76 PROFILES SLIGHTLY OFFBEAT PORTRAITS CLUB SCENE MD Trivia 118 On The Rise: Part 1 Jules Moss: Prepared For The Unusual by Rick Van Horn 110 by Rick Van Horn 96 DRUM MARKET 120 IT'S QUESTIONABLE 124 ROCK PERSPECTIVES NEWS Jim Gordon: Style & Analysis IN MEMORIAM by Bradley Branscum 114 UPDATE 8 Gone But Not Forgotten 130 PUBLISHER Ronald Spagnardi 10th Anniversary Issue ASSOCIATE PUBLISHER Modern Drummer enters its 10th year of publication with this issue. At Isabel Spagnardi the risk of sounding a bit immodest, I must say that I’m extremely proud of what the magazine has accomplished over the past decade and what it EDITOR Ronald Spagnardi has come to represent in our industry. -

Vector 276 Morgan 2014-Su

VECTOR 276 — SUMMER 2014. Vector: The Critical Journal of the British Science Fiction Association. Laura Sneddon talks comics in her new column, ‘Sequentials’. Plus Jo Walton, Gabriel García Márquez, Europe’s biggest SF bookshop, and more... No. 276, Summer 2014, £4.00

Vector 276. The critical journal of the British Science Fiction Association

ARTICLES: Torque Control, editorial by Glyn Morgan; The Jo Walton Interview, by Farah Mendlesohn; Gabriel García Márquez: Conjurer of Reality, by Leimar García-Siino; The Biggest SF Bookshop in Europe, by Ian Watson

RECURRENT: Kincaid in Short: Paul Kincaid; Resonances: Stephen Baxter; Sequentials: Laura Sneddon; Foundation Favourites: Andy Sawyer

THE BSFA REVIEW: Inside The BSFA Review; Editorial by Martin Petto. In this issue, Maureen Kincaid Speller visits the Mothership, Paul Graham Raven is Looking Landwards, Sandra Unerman returns to The Many-Coloured Land, and Jonathan McCalmont discusses Ender’s Game and Philosophy.

Vector http://vectoreditors.wordpress.com. Features, Editorial and Letters: Glyn Morgan, 17 Sandringham Drive, Liverpool L17 4JN [email protected] Book Reviews: Martin Petto, 27 Elmfield Road, Walthamstow E17 7HJ [email protected] Production/Layout: Alex Bardy [email protected]

British Science Fiction Association Ltd. The BSFA was founded in 1958 and is a non-profitmaking organisation entirely staffed by unpaid volunteers. Registered in England. Limited by guarantee. BSFA Website www.bsfa.co.uk. Company No. 921500. Registered address: 61 Ivycroft Road, Warton, Tamworth, Staffordshire B79 0JJ. President: Stephen Baxter. Vice President: Jon Courtenay Grimwood. Chair: Donna Scott [email protected] Treasurer: Martin Potts, 61 Ivy Croft Road, Warton, Nr. Tamworth B79 0JJ [email protected] Membership Services: Martin Potts (see above)